Processing Natural Language for the Spotify API Are Sophisticated Natural Language Processing Algorithms Necessary When Processing Language in a Limited Scope?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

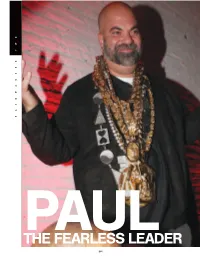

The Fearless Leader Fearless the Paul 196

TWO RAINMAKERS PAUL THE FEARLESS LEADER 196 ven back then, I was taking on far too many jobs,” Def Jam Chairman Paul Rosenberg recalls of his early career. As the longtime man- Eager of Eminem, Rosenberg has been a substantial player in the unfolding of the ‘‘ modern era and the dominance of hip-hop in the last two decades. His work in that capacity naturally positioned him to seize the reins at the major label that brought rap to the mainstream. Before he began managing the best- selling rapper of all time, Rosenberg was an attorney, hustling in Detroit and New York but always intimately connected with the Detroit rap scene. Later on, he was a boutique-label owner, film producer and, finally, major-label boss. The success he’s had thus far required savvy and finesse, no question. But it’s been Rosenberg’s fearlessness and commitment to breaking barriers that have secured him this high perch. (And given his imposing height, Rosenberg’s perch is higher than average.) “PAUL HAS Legendary exec and Interscope co-found- er Jimmy Iovine summed up Rosenberg’s INCREDIBLE unique qualifications while simultaneously INSTINCTS assessing the State of the Biz: “Bringing AND A REAL Paul in as an entrepreneur is a good idea, COMMITMENT and they should bring in more—because TO ARTISTRY. in order to get the record business really HE’S SEEN healthy, it’s going to take risks and it’s going to take thinking outside of the box,” he FIRSTHAND told us. “At its height, the business was run THE UNBELIEV- primarily by entrepreneurs who either sold ABLE RESULTS their businesses or stayed—Ahmet Ertegun, THAT COME David Geffen, Jerry Moss and Herb Alpert FROM ALLOW- were all entrepreneurs.” ING ARTISTS He grew up in the Detroit suburb of Farmington Hills, surrounded on all sides TO BE THEM- by music and the arts. -

United States District Court for the Western District of Pennsylvania

Case 2:08-cv-01327-GLL Document 8 Filed 11/26/08 Page 1 of 3 UNITED STATES DISTRICT COURT FOR THE WESTERN DISTRICT OF PENNSYLVANIA ZOMBA RECORDING LLC, a Delaware limited liabiHty company; ELECTRONICALLY FILED BMG MUSIC, a New York general partnership; INTERSCOPE RECORDS, a Califorma general partnership; UMG CIVIL AcrION NO. 2:08-cv-01327-GLL RECORDINGS, INC., a Delaware corporation; and WARNER BROS. RECORDS INC., a Delaware corporation, Plaintiffs, vs. ClARA MICHELE SAURO, Defendant. DEFAULT .JUDGMENT AND PERMANENT IN,JUNCTION Based upon Plaintiffs' Motion For Default Judgment By The Court, and good cause appearing therefore, it is hereby Ordered and Adjudged that: 1. Plaintiffs seek the minimum statutory damages of $750 per infringed work, as authorized under the Copyright Act (17 U.S.c. § 504(c)(1», for each of the ten sound recordings listed in Exhibit A to the Complaint. Accordingly, having been adjudged to be in default, Defendant shall pay damages to Plaintiffs for infringement of Plaintiffs' copyrights in the sound recordings listed in Exhibit A to the Complaint, in the total principal sum of Seven Thousand Five Hundred Dollars ($7,500.00). 2. Defendant shall further pay Plaintiffs' costs of suit herein in the amount of Four Hundred Twenty Dollars ($420.00). Case 2:08-cv-01327-GLL Document 8 Filed 11/26/08 Page 2 of 3 3. Defendant shall be and hereby is enjoined from directly or indirectly infringing Plaintiffs' rights under federal or state law in the following copyrighted sound recordings: • "Cry Me a River," on album "Justified," -

MUSIC NOTES: Exploring Music Listening Data As a Visual Representation of Self

MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall A thesis submitted in partial fulfillment of the requirements for the degree of: Master of Design University of Washington 2016 Committee: Kristine Matthews Karen Cheng Linda Norlen Program Authorized to Offer Degree: Art ©Copyright 2016 Chad Philip Hall University of Washington Abstract MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall Co-Chairs of the Supervisory Committee: Kristine Matthews, Associate Professor + Chair Division of Design, Visual Communication Design School of Art + Art History + Design Karen Cheng, Professor Division of Design, Visual Communication Design School of Art + Art History + Design Shelves of vinyl records and cassette tapes spark thoughts and mem ories at a quick glance. In the shift to digital formats, we lost physical artifacts but gained data as a rich, but often hidden artifact of our music listening. This project tracked and visualized the music listening habits of eight people over 30 days to explore how this data can serve as a visual representation of self and present new opportunities for reflection. 1 exploring music listening data as MUSIC NOTES a visual representation of self CHAD PHILIP HALL 2 A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF: master of design university of washington 2016 COMMITTEE: kristine matthews karen cheng linda norlen PROGRAM AUTHORIZED TO OFFER DEGREE: school of art + art history + design, division -

Eminem 1 Eminem

Eminem 1 Eminem Eminem Eminem performing live at the DJ Hero Party in Los Angeles, June 1, 2009 Background information Birth name Marshall Bruce Mathers III Born October 17, 1972 Saint Joseph, Missouri, U.S. Origin Warren, Michigan, U.S. Genres Hip hop Occupations Rapper Record producer Actor Songwriter Years active 1995–present Labels Interscope, Aftermath Associated acts Dr. Dre, D12, Royce da 5'9", 50 Cent, Obie Trice Website [www.eminem.com www.eminem.com] Marshall Bruce Mathers III (born October 17, 1972),[1] better known by his stage name Eminem, is an American rapper, record producer, and actor. Eminem quickly gained popularity in 1999 with his major-label debut album, The Slim Shady LP, which won a Grammy Award for Best Rap Album. The following album, The Marshall Mathers LP, became the fastest-selling solo album in United States history.[2] It brought Eminem increased popularity, including his own record label, Shady Records, and brought his group project, D12, to mainstream recognition. The Marshall Mathers LP and his third album, The Eminem Show, also won Grammy Awards, making Eminem the first artist to win Best Rap Album for three consecutive LPs. He then won the award again in 2010 for his album Relapse and in 2011 for his album Recovery, giving him a total of 13 Grammys in his career. In 2003, he won the Academy Award for Best Original Song for "Lose Yourself" from the film, 8 Mile, in which he also played the lead. "Lose Yourself" would go on to become the longest running No. 1 hip hop single.[3] Eminem then went on hiatus after touring in 2005. -

KYGO/Denver PD Record and Radio Industries These Days

MARCONI AWARDS POWER NOMINEES JAN & UNSUNG JEFFRIES HEROES Airplay31 Leaders The Interview PAGE 7 PAGE 15 PAGE 33 b&d_cov_airchk:LayoutSEPTEMBER 2009 1 8/25/09 1:07 PM Page 1 ® © 2 0 0 9 S O N Y M U S I C E N T E R T A I N M E N T 4 08 Turning Twenty he “good ol’ days” typically get a lot better press than they deserve. Unless, pretty easy to see where the potential on the roster was. of course, you’re talking about 1989, country music and Country radio. The Nobody knew big numbers here. I marketed the first album right along with the second one, which was what nobody got. NFL may have the quarterback class of ‘83 – Elway, Marino, Kelly, et al. – but When a new record comes out you force them to do catalog Nashville’s “Class of ‘89” tops even that illustrious group. and market them side-by-side. I was getting reorders of a T million units from one account. Amazing. You made them, “I remember this kind of stocky kid who kept coming into DuBois says. “I’d never run a record label so I can’t say it you shipped them and they disappeared.” the station because he had nothing else to do during the day,” changed all of a sudden, but radio was just so open to new The balloon was on the way up. “That Class of ‘89 says former KPLX/Dallas GM music. I used to call it the giant flush. -

Kygo Releases New Track “Gone Are the Days” Ft

KYGO RELEASES NEW TRACK “GONE ARE THE DAYS” FT. JAMES GILLESPIE Global superstar, producer and DJ, Kyrre Gørvell-Dahll – a.k.a Kygo releases his new song “Gone Are The Days” ft. James Gillespie. “Gone Are The Days,” out now via RCA Records, is Kygo’s first new track release in 2021 and comes alongside a beautiful piano version. Listen HERE. Earlier this year Kygo performed a breathtaking set which was livestreamed on Moment House. Filmed on a snow-capped mountain top in the Sunnmore Alps in his home country of Norway, the one-of-a-kind virtual experience allowed audiences around the world to watch the global superstar deliver a career-spanning set amidst a breathtaking environment unlike anywhere they’ve seen him perform before. 2020 was a massive year for Kygo. He released a remix of Donna Summer’s Grammy Award winning, platinum, #1 song “Hot Stuff” as well as a remix of Tina Turner’s “What’s Love Got To Do With It” to rave reviews. He also released his critically acclaimed third full-length album Golden Hour which features his hit single “Lose Somebody” with OneRepublic as well as “Higher Love” with Whitney Houston, the latter of which has garnered over 1.1 billion streams worldwide and charted at Top 40 radio. BUY/STREAM GOLDEN HOUR HERE With over 100 MILLION streams worldwide, James Gillespie emerges as one of the most quietly explosive artists in the UK. After performing with P!NK he went on to sell out his European tour and is set to tour the U.S this fall. -

What's Included

WHAT’S INCLUDED ENCORE (EMINEM) » Curtains Up » Just Lose It » Evil Deeds » Ass Like That » Never Enough » Spend Some Time » Yellow Brick Road » Mockingbird » Like Toy Soldiers » Crazy in Love » Mosh » One Shot 2 Shot » Puke » Final Thought [Skit] » My 1st Single » Encore » Paul [Skit] » We as Americans » Rain Man » Love You More » Big Weenie » Ricky Ticky Toc » Em Calls Paul [Skit] CURTAIN CALL (EMINEM) » Fack » Sing For The Moment » Shake That » Without Me » When I’m Gone » Like Toy Soldiers » Intro (Curtain Call) » The Real Slim Shady » The Way I Am » Mockingbird » My name Is » Guilty Conscience » Stan » Cleanin Out My Closet » Lose Yourself » Just Lose It » Shake That RELAPSE (EMINEM) » Dr. West [Skit] » Stay Wide Awake » 3 A.M. » Old Time’s Sake » My Mom » Must Be the Ganja » Insane » Mr. Mathers [Skit] » Bagpipes from Baghdad » Déjà Vu » Hello » Beautiful » Tonya [Skit] » Crack a Bottle » Same Song & Dance » Steve Berman [Skit] » We Made You » Underground » Medicine Ball » Careful What You Wish For » Paul [Skit] » My Darling Royalties Catalog | For more information on this catalog, contact us at 1-800-718-2891 | ©2017 Royalty Flow. All rights reserved. Page. 1 WHAT’S INCLUDED RELAPSE: REFILL (EMINEM) » Forever » Hell Breaks Loose » Buffalo Bill » Elevator » Taking My Ball » Music Box » Drop the Bomb On ‘Em RECOVERY (EMINEM) » Cold Wind Blows » Space Bound » Talkin’ 2 Myself » Cinderella Man » On Fire » 25 to Life » Won’t Back Down » So Bad » W.T.P. » Almost Famous » Going Through Changes » Love the Way You Lie » Not Afraid » You’re Never Over » Seduction » [Untitled Hidden Track] » No Love THE MARSHALL MATHERS LP 2 (EMINEM) » Bad Guy » Rap God » Parking Lot (Skit) » Brainless » Rhyme Or Reason » Stronger Than I Was » So Much Better » The Monster » Survival » So Far » Legacy » Love Game » Asshole » Headlights » Berzerk » Evil Twin Royalties Catalog | For more information on this catalog, contact us at 1-800-718-2891 | ©2017 Royalty Flow. -

Album Top 1000 2021

2021 2020 ARTIEST ALBUM JAAR ? 9 Arc%c Monkeys Whatever People Say I Am, That's What I'm Not 2006 ? 12 Editors An end has a start 2007 ? 5 Metallica Metallica (The Black Album) 1991 ? 4 Muse Origin of Symmetry 2001 ? 2 Nirvana Nevermind 1992 ? 7 Oasis (What's the Story) Morning Glory? 1995 ? 1 Pearl Jam Ten 1992 ? 6 Queens Of The Stone Age Songs for the Deaf 2002 ? 3 Radiohead OK Computer 1997 ? 8 Rage Against The Machine Rage Against The Machine 1993 11 10 Green Day Dookie 1995 12 17 R.E.M. Automa%c for the People 1992 13 13 Linkin' Park Hybrid Theory 2001 14 19 Pink floyd Dark side of the moon 1973 15 11 System of a Down Toxicity 2001 16 15 Red Hot Chili Peppers Californica%on 2000 17 18 Smashing Pumpkins Mellon Collie and the Infinite Sadness 1995 18 28 U2 The Joshua Tree 1987 19 23 Rammstein Muaer 2001 20 22 Live Throwing Copper 1995 21 27 The Black Keys El Camino 2012 22 25 Soundgarden Superunknown 1994 23 26 Guns N' Roses Appe%te for Destruc%on 1989 24 20 Muse Black Holes and Revela%ons 2006 25 46 Alanis Morisseae Jagged Liale Pill 1996 26 21 Metallica Master of Puppets 1986 27 34 The Killers Hot Fuss 2004 28 16 Foo Fighters The Colour and the Shape 1997 29 14 Alice in Chains Dirt 1992 30 42 Arc%c Monkeys AM 2014 31 29 Tool Aenima 1996 32 32 Nirvana MTV Unplugged in New York 1994 33 31 Johan Pergola 2001 34 37 Joy Division Unknown Pleasures 1979 35 36 Green Day American idiot 2005 36 58 Arcade Fire Funeral 2005 37 43 Jeff Buckley Grace 1994 38 41 Eddie Vedder Into the Wild 2007 39 54 Audioslave Audioslave 2002 40 35 The Beatles Sgt. -

GLOBAL SUPERSTAR KYGO UNVEILS MUSIC VIDEO for NEW SINGLE “Remind Me to Forget” FEATURING MIGUEL WATCH HERE

GLOBAL SUPERSTAR KYGO UNVEILS MUSIC VIDEO FOR NEW SINGLE “Remind Me To Forget” FEATURING MIGUEL WATCH HERE DUO SET TO PERFORM ON The Tonight Show Starring Jimmy Fallon MAY 14TH! KIDS IN LOVE TOUR MAKES FINAL STOPS IN WASHINGTON D.C, BROOKLYN, NY, AND BOSTON, MA PURCHASE YOUR TICKETS HERE May 7th, 2018 - New York, NY- Today, global superstar/producer/DJ, Kyrre Gørvell-Dahll - a.k.a. Kygo, releases the music video for his explosive new single “Remind Me To Forget” with GRAMMY® Winner, Miguel. The visual showcases a ballerina performing a gritty emotional dance around a darkened, self- destructive home “leaving her mark” in the middle of the chaos. You can watch “Remind Me To Forget” here : http://smarturl.it/xRMTF Kygo performs his final four shows of his Phase 2 Kids In Love Tour- with back to back stops in Washington D.C at The Anthem, a performance in NY at the Barclays Center, wrapping up in Boston, MA at TD Garden. You can purchase tickets here. KIDS IN LOVE TOUR Monday, May 07, 2018- Washington, DC @ The Anthem Tuesday, May 8, 2018- Washington DC @ The Anthem Friday, May 11, 2018- Brooklyn, NY @ Barclays Center Saturday, May 12, 2018- Boston, MA @ TD Garden ABOUT KYGO Kygo is a Norwegian-born, world-renowned producer, songwriter, DJ and music marvel who has turned himself into an international sensation in unprecedented time. He was crowned Spotify’s Breakout Artist of 2015, as songs including “Firestone (feat. Conrad Sewell)” and “Stole The Show (feat. Parson James)”, which was certified gold or platinum in sixteen countries, helped him become the fastest artist to reach 1 billion streams on Spotify. -

Journal of the Society for American Music Eminem's “My Name

Journal of the Society for American Music http://journals.cambridge.org/SAM Additional services for Journal of the Society for American Music: Email alerts: Click here Subscriptions: Click here Commercial reprints: Click here Terms of use : Click here Eminem's “My Name Is”: Signifying Whiteness, Rearticulating Race LOREN KAJIKAWA Journal of the Society for American Music / Volume 3 / Issue 03 / August 2009, pp 341 - 363 DOI: 10.1017/S1752196309990459, Published online: 14 July 2009 Link to this article: http://journals.cambridge.org/abstract_S1752196309990459 How to cite this article: LOREN KAJIKAWA (2009). Eminem's “My Name Is”: Signifying Whiteness, Rearticulating Race. Journal of the Society for American Music, 3, pp 341-363 doi:10.1017/S1752196309990459 Request Permissions : Click here Downloaded from http://journals.cambridge.org/SAM, IP address: 140.233.51.181 on 06 Feb 2013 Journal of the Society for American Music (2009) Volume 3, Number 3, pp. 341–363. C 2009 The Society for American Music doi:10.1017/S1752196309990459 ⃝ Eminem’s “My Name Is”: Signifying Whiteness, Rearticulating Race LOREN KAJIKAWA Abstract Eminem’s emergence as one of the most popular rap stars of 2000 raised numerous questions about the evolving meaning of whiteness in U.S. society. Comparing The Slim Shady LP (1999) with his relatively unknown and commercially unsuccessful first album, Infinite (1996), reveals that instead of transcending racial boundaries as some critics have suggested, Eminem negotiated them in ways that made sense to his target audiences. In particular, Eminem’s influential single “My Name Is,” which helped launch his mainstream career, parodied various representations of whiteness to help counter charges that the white rapper lacked authenticity or was simply stealing black culture. -

Lady Gaga Leads “2020 Mtv Ema” Nominations Followed by Bts and Justin Bieber in Show Airing Globally November 8Th

LADY GAGA LEADS “2020 MTV EMA” NOMINATIONS FOLLOWED BY BTS AND JUSTIN BIEBER IN SHOW AIRING GLOBALLY NOVEMBER 8TH PRESS ASSETS CAN BE FOUND HERE SOCIAL TAGS: #MTVEMA @MTVEMA NEW YORK/LONDON—OCT. 6, 2020—MTV today announced nominations for the “2020 MTV EMAs.” Lady Gaga is in the lead with seven nods, including “Best Artist,” “Best Pop,” and “Best Video” for her duet with Ariana Grande, “Rain On Me,” which also secured a position in the “Best Song” and “Best Collaboration” categories. BTS and Justin Bieber each received five nods, within categories including “Biggest Fans” and “Best Pop.” The nominations include three new categories: “Best Latin,” “Video for Good” and “Best Virtual Live.” The “Best Local Act” category returns, with nominations including: Lady Gaga, Megan Thee Stallion and Cardi B for “Best US Act,” Justin Bieber and The Weeknd for “Best Canadian Act” and Dua Lipa and Little Mix for “Best UK & Ireland Act,” and more. Marking the 27th edition of one of the biggest nights in global music, the “2020 MTV EMAs” will unite fans at home with the world’s hottest names in music. Details on performers and presenters will be announced soon. | 1 LADY GAGA LEADS “2020 MTV EMA” NOMINATIONS FOLLOWED BY BTS AND JUSTIN BIEBER IN SHOW AIRING GLOBALLY NOVEMBER 8TH The two-hour “2020 MTV EMAs” will air globally on MTV in 180 countries and territories on Sunday, November 8, 2020. Fans can begin to cast their votes today, as voting is now open at mtvema.com until November 2 at 11:59pm CET. -

“LOSE SOMEBODY” by KYGO Meets National Core Arts Standards 7-9

SONG OF THE MONTH “LOSE SOMEBODY” BY KYGO Meets National Core Arts Standards 7-9 OBJECTIVES What new instruments are heard during the instrumental break? • Perceive and analyze artistic work (Re7) What is this song about? • Interpret intent and meaning in artistic work (Re8) Is this song fast or slow? • Apply criteria to evaluate artistic work (Re9) How would you describe the beats? How does it make you feel? Why do you think it makes you feel that MATERIALS way? • Music Alive! magazines (Vol.40 No.2) Which part of the song is your favorite and why? • Computer or mobile device with Internet access CLOSE START Watch the music video for “Lose Somebody” How does the video capture the 1. Ask students to read the text on pages XX-XX on their own essence of the lyrical content of the song? 2. Have one of the students read aloud the text on page 16 3. Play Kygo’s “Lose Somebody” (Hear the Music track 1 on DISCUSS musicalive.com), The music video for “Lose Somebody” shows a variety of clips. Do these relate while the students read through the notation on pages 17-18 to the purpose of the lyrics about losing love? Which ones resonate with you the most and why? Do you think this video was successful in sharing its intended DEVELOP message? Ask students about the story: ASSESS When did Electronic Dance Music become popular in Did the students follow along with the song? the US? Did they answer the discussion questions? Which major event did Tiesto use to bring EDM into the international spotlight? Where was Kygo born and where does he live now? How did Kygo learn to become a DJ? CROSSWORD SOLUTION How did Kygo get his stage name? Why did Kygo’s music stand out com- pared to other EDM at the time? Kygo is among the youngest and fastest to stream one million views on which platform? Kygo shot into the international spot- light in December 2013 when he did a remix of which track? Which famous song did he create with Selena Gomez that went on to win numerous awards? 2.