Practical Linux Examples: Exercises

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Top 15 ERP Software Vendors – 2010

Profiles of the Leading ERP Vendors. Find the best ERP system for your company. For more information visit Business-Software.com/ERP.

About ERP Software
Enterprise resource planning (ERP) is not a new concept. It was introduced more than 40 years ago, when the first ERP system was created to improve inventory control and management at manufacturing firms. Throughout the 1970s and 1980s, as the number of companies deploying ERP increased, its scope expanded to include various production and materials management functions, although it was designed primarily for use in manufacturing plants. In the 1990s, vendors came to realize that other types of business could benefit from ERP, and that in order for a business to achieve true organizational efficiency, it needed to link all its internal business processes in a cohesive and coordinated way. As a result, ERP was transformed into a broad-reaching environment that encompassed all activities across the back office of a company.

What is ERP?
An ERP system combines methodologies with software and hardware components to integrate numerous critical back-office functions across a company. Made up of a series of “modules”, or applications that are seamlessly linked together through a common database, an ERP system enables various departments or operating units such as Accounting and Finance, Human Resources, Production, and Fulfillment and Distribution to coordinate activities, share information, and collaborate.

Key Benefits for Your Company
ERP systems are designed to enhance all aspects of key operations across a company’s entire back-office – from planning through execution, management, and control.

Introduction to Linux – Part 1

Brett Milash and Wim Cardoen, Center for High Performance Computing, May 22, 2018

Diagram: ssh to a login or interactive node (kingspeak.chpc.utah.edu); the batch queue system dispatches to nodes kp001, kp002, …, kpxxx

FastX
- https://www.chpc.utah.edu/documentation/software/fastx2.php
- Remote graphical sessions in a much more efficient and effective way than simple X forwarding
- Persistence: a session can be disconnected without being closed, so users can resume it from other devices
- Licensed by CHPC
- Desktop clients exist for Windows, Mac, and Linux
- Web-based client option
- Server installed on all CHPC interactive nodes and the frisco nodes

Windows: alternatives to FastX
- Need an ssh client
  - PuTTY: http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html
  - XShell: http://www.netsarang.com/download/down_xsh.html
- For X applications, also need an X-forwarding tool
  - Xming (use the Mesa version as needed for some apps): http://www.straightrunning.com/XmingNotes/
  - Make sure X forwarding is enabled in your ssh client

Linux or Mac Desktop
- Just need to open a terminal or console
- When running applications with graphical interfaces, use ssh -Y or ssh -X

Getting Started: Login
- Download and install FastX if you like (required on Windows unless you already have PuTTY or Xshell installed)
- If you have a CHPC account: ssh [email protected]
- If not, get a username and password: ssh [email protected]

Shell Basics
- A shell is a program that is the interface between you and the operating system

Avoiding the Top 10 Software Security Design Flaws

Iván Arce, Kathleen Clark-Fisher, Neil Daswani, Jim DelGrosso, Danny Dhillon, Christoph Kern, Tadayoshi Kohno, Carl Landwehr, Gary McGraw, Brook Schoenfield, Margo Seltzer, Diomidis Spinellis, Izar Tarandach, and Jacob West

CONTENTS
- Introduction
- Mission Statement
- Preamble
- Earn or Give, but Never Assume, Trust
- Use an Authentication Mechanism that Cannot be Bypassed or Tampered With
- Authorize after You Authenticate
- Strictly Separate Data and Control Instructions, and Never Process Control Instructions Received from Untrusted Sources
- Define an Approach that Ensures all Data are Explicitly Validated
- Use Cryptography Correctly

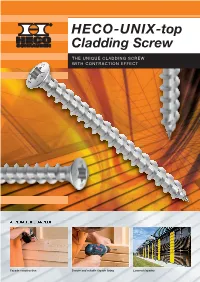

HECO-UNIX-Top Cladding Screw Into the Timber

HECO-UNIX-top Cladding Screw
THE UNIQUE CLADDING SCREW WITH CONTRACTION EFFECT

APPLICATION EXAMPLES
- Façade construction
- Secure and reliable façade fixing
- Louvred façades

1. The variable pitch of the HECO-UNIX full thread takes hold
2. The smaller thread pitch means that the boards are clamped firmly together
3. Gap free axial fixing of the timber board via the HECO-UNIX full thread

PRODUCT CHARACTERISTICS
- HECO-UNIX-top full thread
- Contraction effect thanks to the full thread with variable thread pitch
- Axial fixing of component
- Reduced spreading effect thanks to the HECO-TOPIX® tip
- Raised countersunk head

The HECO-UNIX-top Cladding Screw with contraction effect
Timber façades are becoming increasingly popular in both new builds and renovations. This application places high demands on fastenings. The façade must be securely fixed to the sub-structure and the fixings should be invisible or attractive to look at. In addition, the structure must be permanently sound if it is constantly exposed to the weather. The HECO-UNIX-top façade screw is the perfect solution to all of these requirements.

… into the timber. The façade is secured axially via the thread, which increases the pull-out strength. This results in fewer fastening points and an ultimately more economical façade construction. Thanks to the full thread, the sole function of the head is to fit the screw drive. As such, the HECO-UNIX-top façade screw has a small raised head, which allows simple, concealed installation preventing any water penetration through the screw. The screws are made of stainless steel A2, which safely eliminates the

BSD UNIX Toolbox 1000+ Commands for Freebsd, Openbsd

BSD UNIX® TOOLBOX: 1000+ Commands for FreeBSD®, OpenBSD, and NetBSD® Power Users
Christopher Negus, François Caen

Published by Wiley Publishing, Inc., 10475 Crosspoint Boulevard, Indianapolis, IN 46256, www.wiley.com
Copyright © 2008 by Wiley Publishing, Inc., Indianapolis, Indiana
Published simultaneously in Canada
ISBN: 978-0-470-37603-4
Manufactured in the United States of America
10 9 8 7 6 5 4 3 2 1
Library of Congress Cataloging-in-Publication Data is available from the publisher.

No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to the Legal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, (317) 572-3447, fax (317) 572-4355, or online at http://www.wiley.com/go/permissions.

What Is UNIX? the Directory Structure Basic Commands Find

What is UNIX?
UNIX is an operating system, like Windows. By operating system, we mean the suite of programs that make the computer work. It is a stable, multi-user, multi-tasking system for servers, desktops and laptops.

The Directory Structure
All files are grouped together in the directory structure. The file system is arranged in a hierarchical structure, like an inverted tree. The top of the hierarchy is traditionally called root (written as a slash, /).

Basic commands
When you first log in, your current working directory is your home directory. In UNIX, . means the current directory and .. means the parent of the current directory.

find command
The find command is used to locate files on a Unix or Linux system. find searches any set of directories you specify for files that match the supplied search criteria. The syntax looks like this:

find where-to-look criteria what-to-do

With GNU find, all arguments are optional, and there are defaults for all parts: where-to-look defaults to . (the current working directory), criteria defaults to none (select all files), and what-to-do (known as the find action) defaults to -print (display the names of found files on standard output).

Examples:
- find . -name "*.txt" (finds all files whose names end in .txt in the current directory and its subdirectories; the quotes stop the shell from expanding the pattern before find sees it)
- find . -mtime 1 (finds files modified between 24 and 48 hours ago)
- find . -mtime -1 (finds files modified less than 24 hours ago)
- find . -mtime +1 (finds files modified at least 48 hours ago, because find counts whole 24-hour periods and discards the fraction)
- find . …
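The find examples above can be tried safely in a scratch directory. The following is a minimal sketch rather than part of the original notes: the file names and the `demo_dir` variable are made up for illustration, and backdating a file with `touch -d` is a GNU coreutils feature, so a typical Linux system is assumed.

```shell
#!/bin/sh
# Work in a throwaway directory so the demo never touches real files.
demo_dir=$(mktemp -d)
mkdir "$demo_dir/sub"
touch "$demo_dir/notes.txt" "$demo_dir/report.txt" "$demo_dir/image.png"
touch "$demo_dir/sub/deep.txt"

# Backdate one file by three days (GNU touch syntax; an assumption).
touch -d '3 days ago' "$demo_dir/report.txt"

# Quote the pattern so the shell hands it to find unexpanded.
txt_count=$(find "$demo_dir" -name '*.txt' | wc -l)

# -mtime -1: regular files modified less than 24 hours ago.
recent_count=$(find "$demo_dir" -type f -mtime -1 | wc -l)

# -mtime +1: regular files modified at least 48 hours ago.
old_files=$(find "$demo_dir" -type f -mtime +1)

echo "text files: $txt_count"
echo "modified in the last day: $recent_count"
echo "older than two days: $old_files"

# Remove the scratch directory again.
rm -rf "$demo_dir"
```

Searching an explicit directory instead of `.` keeps the sketch independent of wherever it happens to be run.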

LS Series Pistons Professional Professional As the Leader in LS-1 Forged Pistons, Wiseco Pulled out All the Stops When Designing the LS Series

Professional Hi-Performance Forged Automotive Racing Pistons for CHEVY EFI Late Models
NEW ArmorGlide Coated Skirts

LS Series Pistons
As the leader in LS-1 forged pistons, Wiseco pulled out all the stops when designing the LS Series. These pistons are designed to accommodate the reluctor ring on stroker cranks without having to notch the pin bosses. Offset pins like O.E. and skirt shapes specifically designed for street applications make these the best available. They have been tested successfully in the highest horsepower applications.

LS Series: LS 5.3 • 3.622 Stroke
Std. Tension Rings Kit Bore Comp. Stroke Rod 0 Deck at Std. Avg. Volume Compression ratio at: Pin Part # 1/16, 1/16, 3mm Valve Part # Ht. Deck Ht. Wt. 58cc 61cc (included) (included) Pocket
K474M96 3.780 3785HF 1.300 3.622 6.098 9.209 9.240 FT -2.2 10.9 10.4 S643 LS1,2,6
K474M965 3.800 6.125 9.236 .927 x 2.250 3805HF

LS 5.3 • 4.000 Stroke
K473M96 3.780 3785HF 1.110 4.000 6.098 9.209 9.240 -7cc 11.2 10.8 S643 LS1,2,6
K473M965 3.800 6.125 9.236 .927 x 2.250 3805HF

LS7 • OE Titanium Rod & Pin Dia.
Kit Comp. Std. Avg. Compression ratio at: Pin Part # Std. Tension Rings Valve Bore Stroke Rod 0 Deck at Volume 1.2, 1.2, 3mm Part # Ht. Deck Ht. Wt. 72cc (included) (included) Pocket
K0004X125 4.125 4127GFX 1.175 4.000 6.067 9.242 9.240 2.5 12.0 S761 LS7
K0004X130 4.130 .9252 x 2.250 4137GFX

• 2618 Alloy: High strength.

GNU M4, Version 1.4.7 a Powerful Macro Processor Edition 1.4.7, 23 September 2006

by René Seindal

This manual is for GNU M4 (version 1.4.7, 23 September 2006), a package containing an implementation of the m4 macro language.

Copyright © 1989, 1990, 1991, 1992, 1993, 1994, 2004, 2005, 2006 Free Software Foundation, Inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License.”

Table of Contents
1 Introduction and preliminaries
  1.1 Introduction to m4
  1.2 Historical references
  1.3 Invoking m4
  1.4 Problems and bugs
  1.5 Using this manual
2 Lexical and syntactic conventions
  2.1 Macro names
  2.2 Quoting input to m4
  2.3 Comments in m4 input
  2.4 Other kinds of input tokens
  2.5 How m4 copies input to output
3 How to invoke macros

Unix: Beyond the Basics

BaRC Hot Topics, September 2018
Bioinformatics and Research Computing, Whitehead Institute
http://barc.wi.mit.edu/hot_topics/

Logging in to our Unix server
- Our main server is called tak4
- Request a tak4 account: http://iona.wi.mit.edu/bio/software/unix/bioinfoaccount.php
- Logging in from Windows
  - PuTTY for ssh
  - Xming for graphical display [optional]
- Logging in from Mac
  - Access the Terminal: Go → Utilities → Terminal
  - XQuartz is needed for X-windows on newer OS X

Log in using secure shell
- ssh -Y user@tak4 (PuTTY on Windows, Terminal on Macs)
- Command prompt: user@tak4 ~$

Hot Topics website: http://barc.wi.mit.edu/education/hot_topics/
- Create a directory for the exercises and use it as your working directory
  $ cd /nfs/BaRC_training
  $ mkdir john_doe
  $ cd john_doe
- Copy all files into your working directory
  $ cp -r /nfs/BaRC_training/UnixII/* .
- You should have the files below in your working directory:
  - foo.txt, sample1.txt, exercise.txt, datasets folder
  - You can check they’re there with the ‘ls’ command

Unix Review: Commands
command [arg1 arg2 … ] [input1 input2 … ]
- $ sort -k2,3nr foo.tab
  - -k2,3: the sort key starts at field 2 and ends at field 3
  - -n or -g: numeric sort; -n is recommended, except for scientific notation or a leading '+'
  - -r: reverse order
- $ cut -f1,5 foo.tab
  $ cut -f1-5 foo.tab
  - -f: select only these fields
  - -f1,5: select 1st and 5th fields
  - -f1-5: select 1st, 2nd, 3rd, 4th, and 5th fields
- $ wc -l foo.txt
  - How many lines are in this file?

Unix Review: Common Mistakes
- Case sensitive: cd /nfs/Barc_Public vs cd /nfs/BaRC_Public
  -bash: cd: /nfs/Barc_Public:
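The sort, cut and wc options reviewed above can be checked on a tiny tab-separated file. This is a sketch under stated assumptions: the contents of the training file foo.tab are not shown in the slides, so the four rows below are invented, and the sort call uses two explicit keys (`-k2,2 -k3,3nr`) instead of the slide's single `-k2,3nr` key because the effect is easier to see.

```shell
#!/bin/sh
# Build a small tab-separated table in a scratch directory (hypothetical data).
demo_dir=$(mktemp -d)
tab_file="$demo_dir/foo.tab"
printf 'geneA\tchr2\t300\t+\t0.5\n'  > "$tab_file"
printf 'geneB\tchr1\t150\t-\t0.9\n' >> "$tab_file"
printf 'geneC\tchr1\t900\t+\t0.1\n' >> "$tab_file"
printf 'geneD\tchr2\t50\t-\t0.7\n'  >> "$tab_file"

# A literal tab character to use as the field separator for sort.
tab=$(printf '\t')

# Sort by field 2 as text, then by field 3 numerically in reverse order.
# LC_ALL=C keeps the text comparison independent of the user's locale.
first_gene=$(LC_ALL=C sort -t "$tab" -k2,2 -k3,3nr "$tab_file" | head -n 1 | cut -f1)

# cut -f1,5 keeps only the 1st and 5th fields; cut splits on tabs by default.
first_pair=$(cut -f1,5 "$tab_file" | head -n 1)

# wc -l counts lines; reading from stdin keeps the file name out of the output.
line_count=$(wc -l < "$tab_file")

echo "first gene after sorting: $first_gene"
echo "first row, fields 1 and 5: $first_pair"
echo "lines in file: $line_count"

rm -rf "$demo_dir"
```

Passing `-t` explicitly matters for sort because, unlike cut, it treats any run of blanks as a separator by default.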

LAB MANUAL for Computer Network

CSE-310 F Computer Network Lab
L T P: - - 3
Class Work: 25 marks; Exam: 25 marks; Total: 50 marks

This course provides students with hands-on training in the design, troubleshooting, modeling and evaluation of computer networks. Students experiment in a real test-bed networking environment and learn about network design and troubleshooting topics and tools such as network addressing, Address Resolution Protocol (ARP), basic troubleshooting tools (e.g. ping, ICMP), IP routing (e.g. RIP), route discovery (e.g. traceroute), TCP and UDP, IP fragmentation and many others. Students are also introduced to network modeling and simulation, and have the opportunity to build some simple networking models using the tool and perform simulations that help them evaluate their design approaches and expected network performance.

S.No / Experiment
1. Study of different types of network cables and practical implementation of the cross-wired cable and straight-through cable using a crimping tool.
2. Study of network devices in detail.
3. Study of network IP.
4. Connect the computers in a Local Area Network.
5. Study of basic network commands and network configuration commands.
6. Configure a network topology using Packet Tracer software.
7. Configure a network topology using Packet Tracer software.
8. Configure a network using Distance Vector Routing protocol.
9. Configure a network using Link State Vector Routing protocol.

Hardware and Software Requirement
- Hardware requirement: RJ-45 connector, crimping tool, twisted pair cable
- Software requirement: Command Prompt and Packet Tracer

EXPERIMENT-1
Aim: Study of different types of network cables and practical implementation of the cross-wired cable and straight-through cable using a crimping tool.

GNU M4, Version 1.4.19 a Powerful Macro Processor Edition 1.4.19, 28 May 2021

by René Seindal, François Pinard, Gary V. Vaughan, and Eric Blake ([email protected])

This manual (28 May 2021) is for GNU M4 (version 1.4.19), a package containing an implementation of the m4 macro language.

Copyright © 1989–1994, 2004–2014, 2016–2017, 2020–2021 Free Software Foundation, Inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License.”

Table of Contents
1 Introduction and preliminaries
  1.1 Introduction to m4
  1.2 Historical references
  1.3 Problems and bugs
  1.4 Using this manual
2 Invoking m4
  2.1 Command line options for operation modes
  2.2 Command line options for preprocessor features
  2.3 Command line options for limits control
  2.4 Command line options for frozen state
  2.5 Command line options for debugging
  2.6 Specifying input files on the command line

Install and Run External Command Line Softwares

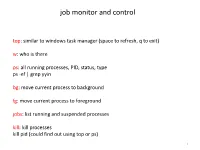

job monitor and control
- top: similar to Windows Task Manager (space to refresh, q to exit)
- w: who is there
- ps: all running processes, PID, status, type
  ps -ef | grep yyin
- bg: move current process to background
- fg: move current process to foreground
- jobs: list running and suspended processes
- kill: kill processes
  kill pid (the pid can be found using top or ps)

sort, cut, uniq, join, paste, sed, grep, awk, wc, diff, comm, cat
All types of bioinformatics sequence analyses are essentially text processing. The Unix shell has the above commands, which are very useful for processing text, and it also allows the output of one command to be passed to another command as input using a pipe (“|”).

less cosmicRaw.txt | cut -f2,3,4,5,8,13 | awk '$5==22' | cut -f1 | sort -u | wc

This makes processing files in the shell very convenient and very powerful: you do not need to write output to intermediate files or load all the data into memory. Combining different Unix commands for text processing is like passing an item through a manufacturing pipeline when you only care about the final product.

Hands on example 1: cosmic mutation data
- Go to the UCSC genome browser website: http://genome.ucsc.edu/
- On the left, find the Downloads link
- Click on Human
- Click on Annotation database
- Press Ctrl+F and search for “cosmic”
- Right-click “cosmic.txt.gz” and choose copy link address
- Go to the terminal and wget the above link (middle click or Shift+Insert to paste what you copied)
- Similarly, download the “cosmicRaw.txt.gz” file
- Under your home, create a folder
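The cut, awk, sort and wc pipeline above can be traced on a small stand-in file before running it on the real download. This is only a sketch: the actual column layout of cosmicRaw.txt is not described here, so the 13-column rows, the `make_row` helper and the sample names are hypothetical, and `wc -l` is used instead of plain `wc` to get a single number.

```shell
#!/bin/sh
# Stand-in for cosmicRaw.txt with 13 tab-separated columns (hypothetical data).
demo_dir=$(mktemp -d)
raw_file="$demo_dir/cosmicRaw_demo.txt"

# make_row NAME VALUE: NAME goes in column 2, VALUE in column 8, rest is filler.
make_row() {
    printf 'id\t%s\tc3\tc4\tc5\tc6\tc7\t%s\tc9\tc10\tc11\tc12\tc13\n' "$1" "$2"
}

{
    make_row sampleA 22
    make_row sampleB 7
    make_row sampleA 22
    make_row sampleC 22
} > "$raw_file"

# Step by step:
#   cut -f2,3,4,5,8,13  keep six columns; original column 8 becomes field 5
#   awk '$5==22'        keep rows whose fifth remaining field equals 22
#   cut -f1             keep the first remaining field (original column 2)
#   sort -u             sort and drop duplicate values
#   wc -l               count the distinct values that are left
unique_count=$(cut -f2,3,4,5,8,13 "$raw_file" | awk '$5==22' | cut -f1 | sort -u | wc -l)

echo "distinct column-2 values with 22 in column 8: $unique_count"

rm -rf "$demo_dir"
```

No intermediate file is written between the stages, which is the point the text makes about pipes.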