Lecture VI: Tensor Calculus in Flat Spacetime

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

On the Spectrum of Volume Integral Operators in Acoustic Scattering M Costabel

To cite this version: M. Costabel. On the Spectrum of Volume Integral Operators in Acoustic Scattering. C. Constanda, A. Kirsch. Integral Methods in Science and Engineering, Birkhäuser, pp. 119-127, 2015, 978-3-319-16726-8. 10.1007/978-3-319-16727-5_11. hal-01098834v2. HAL Id: hal-01098834, https://hal.archives-ouvertes.fr/hal-01098834v2. Submitted on 20 Apr 2015. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. 1 On the Spectrum of Volume Integral Operators in Acoustic Scattering. M. Costabel, IRMAR, Université de Rennes 1, France; [email protected] 1.1 Volume Integral Equations in Acoustic Scattering. Volume integral equations have been used as a theoretical tool in scattering theory for a long time. A classical application is an existence proof for the scattering problem based on the theory of Fredholm integral equations. This approach is described for acoustic and electromagnetic scattering in the books by Colton and Kress [CoKr83, CoKr98], where volume integral equations appear under the name "Lippmann-Schwinger equations". In electromagnetic scattering by penetrable objects, the volume integral equation (VIE) method has also been used for numerical computations.

1.2 Topological Tensor Calculus

PH211 Physical Mathematics, Fall 2019. 1.2 Topological tensor calculus. 1.2.1 Tensor fields. Finite displacements in Euclidean space can be represented by arrows and have a natural vector space structure, but finite displacements in more general curved spaces, such as on the surface of a sphere, do not. However, an infinitesimal neighborhood of a point in a smooth curved space looks like an infinitesimal neighborhood of Euclidean space, and infinitesimal displacements $d\vec{x}$ retain the vector space structure of displacements in Euclidean space. An infinitesimal neighborhood of a point can be infinitely rescaled to generate a finite vector space, called the tangent space, at the point. A vector lives in the tangent space of a point. Note that vectors do not stretch from one point to another, and vectors at different points live in different tangent spaces and so cannot be added. [Figure 1.2.1: A vector in the tangent space of a point.] For example, rescaling the infinitesimal displacement $d\vec{x}$ by dividing it by the infinitesimal scalar $dt$ gives the velocity $\vec{v} = \frac{d\vec{x}}{dt}$ (1.2.1), which is a vector. Similarly, we can picture the covector $\nabla\phi$ as the infinitesimal contours of $\phi$ in a neighborhood of a point, infinitely rescaled to generate a finite covector in the point's cotangent space. More generally, infinitely rescaling the neighborhood of a point generates the tensor space and its algebra at the point. The tensor space contains the tangent and cotangent spaces as vector subspaces. A tensor field is something that takes tensor values at every point in a space.
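The rescaling picture in this excerpt can be tried numerically. The following is only a rough sketch under stated assumptions: the path `curve`, the scalar field `phi`, and all helper names are hypothetical illustrations, not taken from the PH211 notes. A small displacement divided by a small `dt` approximates the velocity vector, and pairing the gradient covector with that vector gives the rate of change of `phi` along the path.

```python
import math

def curve(t):
    # Hypothetical path x(t) in the plane (an assumption for illustration): the unit circle.
    return (math.cos(t), math.sin(t))

def phi(x, y):
    # Hypothetical scalar field (an assumption for illustration).
    return x * x + 3.0 * y

def velocity(t, dt=1e-6):
    # v = dx/dt: a small displacement rescaled by 1/dt (central difference).
    x0, y0 = curve(t - dt)
    x1, y1 = curve(t + dt)
    return ((x1 - x0) / (2 * dt), (y1 - y0) / (2 * dt))

def gradient(x, y, h=1e-6):
    # Components of the covector grad(phi) at the point (x, y).
    return ((phi(x + h, y) - phi(x - h, y)) / (2 * h),
            (phi(x, y + h) - phi(x, y - h)) / (2 * h))

def pairing(covector, vector):
    # A covector acts on a vector at the same point and returns a scalar.
    return sum(c * v for c, v in zip(covector, vector))

t = 0.7
v = velocity(t)              # lives in the tangent space at curve(t)
g = gradient(*curve(t))      # lives in the cotangent space at curve(t)
rate = pairing(g, v)         # approximates d(phi)/dt along the path
```

Both `v` and `g` are evaluated at the same point, which mirrors the remark that vectors at different points live in different tangent spaces and cannot be combined.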

Vector Calculus and Multiple Integrals Rob Fender, HT 2018

COURSE SYNOPSIS, RECOMMENDED BOOKS. Course syllabus (on which exams are based): Double integrals and their evaluation by repeated integration in Cartesian, plane polar and other specified coordinate systems. Jacobians. Line, surface and volume integrals, evaluation by change of variables (Cartesian, plane polar, spherical polar coordinates and cylindrical coordinates only unless the transformation to be used is specified). Integrals around closed curves and exact differentials. Scalar and vector fields. The operations of grad, div and curl and understanding and use of identities involving these. The statements of the theorems of Gauss and Stokes with simple applications. Conservative fields. Recommended Books: Mathematical Methods for Physics and Engineering (Riley, Hobson and Bence). This book is lazily referred to as "Riley" throughout these notes (sorry, Drs H and B). You will all have this book, and it covers all of the maths of this course. However it is rather terse at times and you will benefit from looking at one or both of these: Introduction to Electrodynamics (Griffiths). You will buy this next year if you haven't already, and the chapter on vector calculus is very clear. Div grad curl and all that (Schey). A nice discussion of the subject, although topics are ordered differently to most courses. NB: the latest version of this book uses the opposite convention for polar coordinates to this course (and indeed most of physics), but older versions can often be found in libraries. 1 Week One: A review of vectors, rotation of coordinate systems, vector vs scalar fields, integrals in more than one variable, first steps in vector differentiation, the Frenet-Serret coordinate system. Lecture 1: Vectors. A vector has direction and magnitude and is written in these notes in bold e.g.

Mathematical Theorems

Appendix A: Mathematical Theorems. The mathematical theorems needed in order to derive the governing model equations are defined in this appendix. A.1 Transport Theorem for a Single Phase Region. The transport theorem is employed in deriving the conservation equations in continuum mechanics. The mathematical statement is sometimes attributed to, or named in honor of, the German mathematician Gottfried Wilhelm Leibnitz (1646–1716) and the British fluid dynamics engineer Osborne Reynolds (1842–1912) due to their work and contributions related to the theorem. Hence it follows that the transport theorem, or alternate forms of the theorem, may be named the Leibnitz theorem in mathematics and the Reynolds transport theorem in mechanics. In a customary interpretation the Reynolds transport theorem provides the link between the system and control volume representations, while Leibnitz's theorem is a three-dimensional version of the integral rule for differentiation of an integral. There are several notations used for the transport theorem and there are numerous forms and corollaries. A.1.1 Leibnitz's Rule. Leibnitz's integral rule gives a formula for differentiation of an integral whose limits are functions of the differential variable [7, 8, 22, 23, 45, 55, 79, 94, 99]. The formula is also known as differentiation under the integral sign: $\frac{d}{dt}\int_{a(t)}^{b(t)} f(t,x)\,dx = \int_{a(t)}^{b(t)} \frac{\partial f(t,x)}{\partial t}\,dx + f(t,b)\,\frac{db}{dt} - f(t,a)\,\frac{da}{dt}$ (A.1). The first term on the RHS gives the change in the integral because the function itself is changing with time, the second term accounts for the gain in area as the upper limit is moved in the positive axis direction, and the third term accounts for the loss in area as the lower limit is moved. (H. A. Jakobsen, Chemical Reactor Modeling, DOI: 10.1007/978-3-319-05092-8, © Springer International Publishing Switzerland 2014.)
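A quick numerical check can make the three terms of Leibnitz's rule (A.1) concrete. The sketch below is illustrative only: the integrand `f`, the limits `a` and `b`, and the helper `simpson` are assumptions chosen for the example and do not come from the appendix. It compares a finite-difference derivative of the integral against the right-hand side of the rule.

```python
import math

def simpson(g, lo, hi, n=2000):
    # Composite Simpson rule with n (even) subintervals.
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3

# Hypothetical integrand and moving limits (assumptions for illustration).
def f(t, x):
    return math.sin(t * x)

def df_dt(t, x):
    return x * math.cos(t * x)

def a(t):
    return t

def b(t):
    return t * t + 1.0

def da_dt(t):
    return 1.0

def db_dt(t):
    return 2.0 * t

def integral(t):
    return simpson(lambda x: f(t, x), a(t), b(t))

def leibniz_rhs(t):
    # Integral of df/dt, plus the gain at the upper limit, minus the loss at the lower limit.
    return (simpson(lambda x: df_dt(t, x), a(t), b(t))
            + f(t, b(t)) * db_dt(t)
            - f(t, a(t)) * da_dt(t))

def lhs_numeric(t, h=1e-5):
    # Central finite difference of the integral with respect to t.
    return (integral(t + h) - integral(t - h)) / (2 * h)

difference = abs(lhs_numeric(0.8) - leibniz_rhs(0.8))
```

With these choices, `difference` should be small, limited by the finite-difference step rather than by the rule itself.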

A Brief Tour of Vector Calculus

A BRIEF TOUR OF VECTOR CALCULUS
A. HAVENS
11/14/19 Multivariate Calculus: Vector Calculus, Havens

Contents
0 Prelude
1 Directional Derivatives, the Gradient and the Del Operator
1.1 Conceptual Review: Directional Derivatives and the Gradient
1.2 The Gradient as a Vector Field
1.3 The Gradient Flow and Critical Points
1.4 The Del Operator and the Gradient in Other Coordinates*
1.5 Problems
2 Vector Fields in Low Dimensions
2.1 General Vector Fields in Domains of $\mathbb{R}^2$ and $\mathbb{R}^3$
2.2 Flows and Integral Curves
2.3 Conservative Vector Fields and Potentials
2.4 Vector Fields from Frames*
2.5 Divergence, Curl, Jacobians, and the Laplacian
2.6 Parametrized Surfaces and Coordinate Vector Fields*
2.7 Tangent Vectors, Normal Vectors, and Orientations*
2.8 Problems
3 Line Integrals
3.1 Defining Scalar Line Integrals
3.2 Line Integrals in Vector Fields
3.3 Work in a Force Field
3.4 The Fundamental Theorem of Line Integrals
3.5 Motion in Conservative Force Fields Conserves Energy
3.6 Path Independence and Corollaries of the Fundamental Theorem
3.7 Green's Theorem
3.8 Problems
4 Surface Integrals, Flux, and Fundamental Theorems
4.1 Surface Integrals of Scalar Fields
4.2 Flux
4.3 The Gradient, Divergence, and Curl Operators Via Limits*
4.4 The Stokes-Kelvin Theorem
4.5 The Divergence Theorem
4.6 Problems
List of Figures

An Introduction to Fluid Mechanics: Supplemental Web Appendices

Faith A. Morrison, Professor of Chemical Engineering, Michigan Technological University. November 5, 2013. © 2013 Faith A. Morrison, all rights reserved. Appendix C: Supplemental Mathematics Appendix. C.1 Multidimensional Derivatives. In section 1.3.1.1 we reviewed the basics of the derivative of single-variable functions. The same concepts may be applied to multivariable functions, leading to the definition of the partial derivative. Consider the multivariable function $f(x, y)$. An example of such a function would be elevations above sea level of a geographic region or the concentration of a chemical on a flat surface. To quantify how this function changes with position, we consider two nearby points, $f(x, y)$ and $f(x + \Delta x, y + \Delta y)$ (Figure C.1). We will also refer to these two points as $f|_{x,y}$ ($f$ evaluated at the point $(x, y)$) and $f|_{x+\Delta x,\,y+\Delta y}$. In a two-dimensional function, the "rate of change" is a more complex concept than in a one-dimensional function. For a one-dimensional function, the rate of change of the function $f$ with respect to the variable $x$ was identified with the change in $f$ divided by the change in $x$, quantified in the derivative, $df/dx$ (see Figure 1.26). For a two-dimensional function, when speaking of the rate of change, we must also specify the direction in which we are interested. For example, if the function we are considering is elevation and we are standing near the edge of a cliff, the rate of change of the elevation in the direction over the cliff is steep, while the rate of change of the elevation in the opposite direction is much more gradual.
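The point that a rate of change in two dimensions needs a direction can be illustrated with a short sketch. Everything here is an assumption made for the example: the terrain function `elevation` and the helper names are hypothetical and do not appear in the appendix. Partial derivatives are estimated from nearby points, then combined into a rate of change along a chosen direction.

```python
import math

def elevation(x, y):
    # Hypothetical terrain (an assumption): gentle slope along x, steep along y.
    return 0.1 * x + 5.0 * y * y

def partial_x(f, x, y, h=1e-6):
    # Change in f between two nearby points that differ only in x, divided by the change in x.
    return (f(x + h, y) - f(x - h, y)) / (2 * h)

def partial_y(f, x, y, h=1e-6):
    # Same idea for two nearby points that differ only in y.
    return (f(x, y + h) - f(x, y - h)) / (2 * h)

def directional_rate(f, x, y, direction):
    # Rate of change of f per unit distance travelled along `direction`.
    dx, dy = direction
    norm = math.hypot(dx, dy)
    ux, uy = dx / norm, dy / norm
    return partial_x(f, x, y) * ux + partial_y(f, x, y) * uy

rate_along_x = directional_rate(elevation, 1.0, 2.0, (1.0, 0.0))
rate_along_y = directional_rate(elevation, 1.0, 2.0, (0.0, 1.0))
```

At the same point the two directions give very different rates, which is why the direction must be stated alongside the rate.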

A Some Basic Rules of Tensor Calculus

The tensor calculus is a powerful tool for the description of the fundamentals in continuum mechanics and the derivation of the governing equations for applied problems. In general, there are two possibilities for the representation of the tensors and the tensorial equations:
– the direct (symbolic) notation and
– the index (component) notation
The direct notation operates with scalars, vectors and tensors as physical objects defined in the three-dimensional space. A vector (first rank tensor) $a$ is considered as a directed line segment rather than a triple of numbers (coordinates). A second rank tensor $A$ is any finite sum of ordered vector pairs $A = a \otimes b + \ldots + c \otimes d$. The scalars, vectors and tensors are handled as invariant (independent from the choice of the coordinate system) objects. This is the reason for the use of the direct notation in the modern literature of mechanics and rheology, e.g. [29, 32, 49, 123, 131, 199, 246, 313, 334] among others. The index notation deals with components or coordinates of vectors and tensors. For a selected basis, e.g. $g_i$, $i = 1, 2, 3$, one can write $a = a^i g_i$, $A = (a^i b^j + \ldots + c^i d^j)\, g_i \otimes g_j$. Here Einstein's summation convention is used: in one expression the twice repeated indices are summed up from 1 to 3, e.g. $a^k g_k \equiv \sum_{k=1}^{3} a^k g_k$, $A^{ik} b_k \equiv \sum_{k=1}^{3} A^{ik} b_k$. In the above examples $k$ is a so-called dummy index. Within the index notation the basic operations with tensors are defined with respect to their coordinates, e.
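The summation convention quoted above amounts to an explicit loop over the dummy index. The sketch below is a minimal illustration in plain Python; the function names and the sample basis, components, and matrix are assumptions made for the example, not values from the source.

```python
def contract_vector(components, basis):
    # a = a^k g_k: sum over the dummy index k of component times basis vector.
    dim = len(basis[0])
    return [sum(components[k] * basis[k][i] for k in range(3)) for i in range(dim)]

def contract_matrix(A, b):
    # A^{ik} b_k: the free index i survives, the dummy index k is summed from 1 to 3.
    return [sum(A[i][k] * b[k] for k in range(3)) for i in range(3)]

# Hypothetical (non-orthonormal) basis g_1, g_2, g_3 written in Cartesian coordinates.
basis = [[1, 0, 0],
         [1, 1, 0],
         [0, 0, 2]]
a_components = [2, -1, 3]          # a^1, a^2, a^3
a_cartesian = contract_vector(a_components, basis)

A = [[1, 2, 3],
     [0, 1, 0],
     [4, 0, 1]]
b = [1, 1, 2]
Ab = contract_matrix(A, b)
```

Renaming `k` inside either function changes nothing in the result, which is what makes it a dummy index.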

Lecture 33 Classical Integration Theorems in the Plane

In this section, we present two very important results on integration over closed curves in the plane, namely, Green's Theorem and the Divergence Theorem, as a prelude to their important counterparts in $\mathbb{R}^3$ involving surface integrals. Green's Theorem in the Plane (Relevant section from Stewart, Calculus, Early Transcendentals, Sixth Edition: 16.4). Let $\mathbf{F}(x,y) = F_1(x,y)\,\mathbf{i} + F_2(x,y)\,\mathbf{j}$ be a vector field in $\mathbb{R}^2$. Let $C$ be a simple closed (piecewise $C^1$) curve in $\mathbb{R}^2$ that encloses a simply connected (i.e., "no holes") region $D \subset \mathbb{R}^2$. Also assume that the partial derivatives $\frac{\partial F_2}{\partial x}$ and $\frac{\partial F_1}{\partial y}$ exist at all points $(x,y) \in D$. Then, $\oint_C \mathbf{F} \cdot d\mathbf{r} = \iint_D \left( \frac{\partial F_2}{\partial x} - \frac{\partial F_1}{\partial y} \right) dA$, (1) where the line integration is performed over $C$ in a counterclockwise direction, with $D$ lying to the left of the path. Note: 1. The integral on the left is a line integral: the circulation of the vector field $\mathbf{F}$ over the closed curve $C$. 2. The integral on the right is a double integral over the region $D$ enclosed by $C$. Special case: If $\frac{\partial F_2}{\partial x} = \frac{\partial F_1}{\partial y}$, (2) for all points $(x,y) \in D$, then the integrand in the double integral of Eq. (1) is zero, implying that the circulation integral $\oint_C \mathbf{F} \cdot d\mathbf{r}$ is zero. We've seen this situation before: Recall that the above equality condition for the partial derivatives is the condition for $\mathbf{F}$ to be conservative.
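Green's Theorem in Eq. (1) can be checked numerically on a simple region. The sketch below is an illustration under stated assumptions: the field $\mathbf{F} = (x - y^3,\; x^3 + y)$, the unit disk as $D$, and all function names are choices made for this example rather than anything from the lecture. It evaluates the counterclockwise circulation around the unit circle and the double integral of $\partial F_2/\partial x - \partial F_1/\partial y$ over the disk.

```python
import math

# Hypothetical vector field (an assumption for illustration).
def F1(x, y):
    return x - y ** 3

def F2(x, y):
    return x ** 3 + y

def dF2_dx(x, y):
    return 3.0 * x * x

def dF1_dy(x, y):
    return -3.0 * y * y

def circulation(n=1000):
    # Line integral of F . dr around the unit circle, counterclockwise (midpoint rule).
    dtheta = 2.0 * math.pi / n
    total = 0.0
    for j in range(n):
        th = (j + 0.5) * dtheta
        x, y = math.cos(th), math.sin(th)
        dx, dy = -math.sin(th) * dtheta, math.cos(th) * dtheta
        total += F1(x, y) * dx + F2(x, y) * dy
    return total

def double_integral(nr=200, ntheta=200):
    # Integral of (dF2/dx - dF1/dy) over the unit disk in polar coordinates, dA = r dr dtheta.
    dr, dtheta = 1.0 / nr, 2.0 * math.pi / ntheta
    total = 0.0
    for i in range(nr):
        r = (i + 0.5) * dr
        for j in range(ntheta):
            th = (j + 0.5) * dtheta
            x, y = r * math.cos(th), r * math.sin(th)
            total += (dF2_dx(x, y) - dF1_dy(x, y)) * r * dr * dtheta
    return total

left_side = circulation()
right_side = double_integral()
```

For this field both sides should come out close to $3\pi/2$; a conservative field, by contrast, would make both sides vanish, as in the special case of Eq. (2).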

Math 132 Exam 1 Solutions

Math 132, Exam 1, Fall 2017 Solutions. You may have a single 3x5 notecard (two-sided) with any notes you like. Otherwise you should have only your pencils/pens and eraser. No calculators are allowed. No consultation with any other person or source of information (physical or electronic) is allowed during the exam. All electronic devices should be turned off. Part I of the exam contains questions 1-14 (multiple choice questions, 5 points each) and true/false questions 15-19 (1 point each). Your answers in Part I should be recorded on the "scantron" card that is provided. If you need to make a change on your answer card, be sure your original answer is completely erased. If your card is damaged, ask one of the proctors for a replacement card. Only the answers marked on the card will be scored. Part II has 3 "written response" questions worth a total of 25 points. Your answers should be written on the pages of the exam booklet. Please write clearly so the grader can read them. When a calculation or explanation is required, your work should include enough detail to make clear how you arrived at your answer. A correct numeric answer without sufficient supporting work may not receive full credit. Part I 1. Suppose that If and , what is ? A) B) C) D) E) F) G) H) I) J) so So and therefore 2. What are all the value(s) of that make the following equation true? A) B) C) D) E) F) G) H) I) J) all values of make the equation true For any , 3.

Tensor Calculus and Differential Geometry

Course Notes: Tensor Calculus and Differential Geometry, 2WAH0
Luc Florack, March 10, 2021
Cover illustration: papyrus fragment from Euclid's Elements of Geometry, Book II [8].

Contents
Preface
Notation
1 Prerequisites from Linear Algebra
2 Tensor Calculus
2.1 Vector Spaces and Bases
2.2 Dual Vector Spaces and Dual Bases
2.3 The Kronecker Tensor
2.4 Inner Products
2.5 Reciprocal Bases
2.6 Bases, Dual Bases, Reciprocal Bases: Mutual Relations
2.7 Examples of Vectors and Covectors
2.8 Tensors
2.8.1 Tensors in all Generality
2.8.2 Tensors Subject to Symmetries
2.8.3 Symmetry and Antisymmetry Preserving Product Operators
2.8.4 Vector Spaces with an Oriented Volume
2.8.5 Tensors on an Inner Product Space
2.8.6 Tensor Transformations
2.8.6.1 "Absolute Tensors"
2.8.6.2 "Relative Tensors"
2.8.6.3 "Pseudo Tensors"
2.8.7 Contractions
2.9 The Hodge Star Operator
3 Differential Geometry
3.1 Euclidean Space: Cartesian and Curvilinear Coordinates
3.2 Differentiable Manifolds
3.3 Tangent Vectors
3.4 Tangent and Cotangent Bundle
3.5 Exterior Derivative
3.6 Affine Connection
3.7 Lie Derivative
3.8 Torsion
3.9 Levi-Civita Connection
3.10 Geodesics
3.11 Curvature
3.12 Push-Forward and Pull-Back
3.13 Examples
3.13.1 Polar Coordinates in the Euclidean Plane

Introduction to Theoretical Methods Example

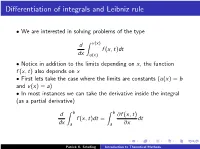

Differentiation of integrals and Leibniz rule
• We are interested in solving problems of the type $\frac{d}{dx}\int_{u(x)}^{v(x)} f(x,t)\,dt$
• Notice that, in addition to the limits depending on $x$, the function $f(x,t)$ also depends on $x$
• First let's take the case where the limits are constants ($u(x) = a$ and $v(x) = b$)
• In most instances we can take the derivative inside the integral (as a partial derivative): $\frac{d}{dx}\int_a^b f(x,t)\,dt = \int_a^b \frac{\partial f(x,t)}{\partial x}\,dt$
Patrick K. Schelling, Introduction to Theoretical Methods

Example
• Find $\int_0^\infty t^n e^{-kt^2}\,dt$ for $n$ odd
• First solve for $n = 1$: $I = \int_0^\infty t\,e^{-kt^2}\,dt$
• Use $u = kt^2$ and $du = 2kt\,dt$, so $I = \int_0^\infty t\,e^{-kt^2}\,dt = \frac{1}{2k}\int_0^\infty e^{-u}\,du = \frac{1}{2k}$
• Next notice $\frac{dI}{dk} = \int_0^\infty -t^3 e^{-kt^2}\,dt = -\frac{1}{2k^2}$
• We can take successive derivatives with respect to $k$, so we find $\int_0^\infty t^{2n+1} e^{-kt^2}\,dt = \frac{n!}{2k^{n+1}}$

Another example... the even n case
• The even $n$ case of $\int_0^\infty t^n e^{-kt^2}\,dt$ can also be done
• Take for $I$ in this case (writing in terms of $x$ instead of $t$) $I = \int_{-\infty}^{\infty} e^{-kx^2}\,dx$
• We can write for $I^2$: $I^2 = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{-k(x^2+y^2)}\,dx\,dy$
• Make a change of variables to polar coordinates, $x = r\cos\theta$, $y = r\sin\theta$, and $x^2 + y^2 = r^2$
• The $dx\,dy$ in the integrand becomes $r\,dr\,d\theta$ (we will explore this more carefully in the next chapter)
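The closed form for the odd case, $\int_0^\infty t^{2n+1} e^{-kt^2}\,dt = n!/(2k^{n+1})$, can be compared against direct numerical integration. This is a rough sketch with assumptions stated up front: the helper names are hypothetical, and the infinite upper limit is replaced by a finite cutoff `upper=10.0`, which is only adequate when $k$ is of order one so that the integrand is negligible beyond it.

```python
import math

def simpson(g, lo, hi, n=4000):
    # Composite Simpson rule with n (even) subintervals.
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3

def odd_moment(n, k, upper=10.0):
    # Numerical value of the integral of t^(2n+1) * exp(-k t^2) from 0 to `upper`.
    # Assumption: `upper` stands in for infinity, valid for k of order one.
    return simpson(lambda t: t ** (2 * n + 1) * math.exp(-k * t * t), 0.0, upper)

def closed_form(n, k):
    # Result obtained by differentiating I(k) = 1/(2k) repeatedly with respect to k.
    return math.factorial(n) / (2.0 * k ** (n + 1))

k = 1.5
comparison = [(n, odd_moment(n, k), closed_form(n, k)) for n in range(4)]
```

Each tuple in `comparison` pairs the numerical integral with the formula for the same `n`, so any disagreement would show up directly.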

Fast Higher-Order Functions for Tensor Calculus with Tensors and Subtensors

Cem Bassoy and Volker Schatz, Fraunhofer IOSB, Ettlingen 76275, Germany, [email protected] Abstract. Tensor analysis has become a popular tool for solving problems in computational neuroscience, pattern recognition and signal processing. Similar to the two-dimensional case, algorithms for multidimensional data consist of basic operations accessing only a subset of tensor data. With multiple offsets and step sizes, basic operations for subtensors require sophisticated implementations even for entrywise operations. In this work, we discuss the design and implementation of optimized higher-order functions that operate entrywise on tensors and subtensors with any non-hierarchical storage format and arbitrary number of dimensions. We propose recursive multi-index algorithms with reduced index computations and additional optimization techniques such as function inlining with partial template specialization. We show that single-index implementations of higher-order functions with subtensors introduce a runtime penalty of an order of magnitude compared with the recursive and iterative multi-index versions. Including data- and thread-level parallelization, our optimized implementations reach 68% of the maximum throughput of an Intel Core i9-7900X. In comparison with other libraries, the average speedup of our optimized implementations is up to 5x for map-like and more than 9x for reduce-like operations. For symmetric tensors we measured an average speedup of up to 4x. 1 Introduction. Many problems in computational neuroscience, pattern recognition, signal processing and data mining generate massive amounts of multidimensional data with high dimensionality [13,12,9]. Tensors provide a natural representation for massive multidimensional data [10,7].
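To make the contrast between single-index and multi-index traversal of a subtensor more tangible, here is a small sketch. It is not the authors' implementation (the abstract describes optimized code with template specialization and parallelization); the function names, the argument layout (`strides`, `offsets`, `steps`, `extents`), and the flat-list storage are all assumptions made for illustration. Both functions apply `fn` entrywise to the same subtensor, but the single-index version recomputes the memory position with divisions and modulos for every element, while the recursive multi-index version only adds a fixed increment per loop step.

```python
def for_each_multi_index(data, strides, offsets, steps, extents, fn):
    # Recursive multi-index traversal: one loop per dimension, carrying a
    # running memory position that is advanced by a single addition.
    def recurse(dim, pos):
        if dim == len(extents):
            data[pos] = fn(data[pos])
            return
        for _ in range(extents[dim]):
            recurse(dim + 1, pos)
            pos += steps[dim] * strides[dim]
    start = sum(o * s for o, s in zip(offsets, strides))
    recurse(0, start)

def for_each_single_index(data, strides, offsets, steps, extents, fn):
    # Single-index traversal: each flat counter value is converted back to a
    # multi-index (modulo and division per dimension) before the access.
    total = 1
    for e in extents:
        total *= e
    for flat in range(total):
        pos, rest = 0, flat
        for dim in reversed(range(len(extents))):
            idx = rest % extents[dim]
            rest //= extents[dim]
            pos += (offsets[dim] + idx * steps[dim]) * strides[dim]
        data[pos] = fn(data[pos])

# Hypothetical 4x6 row-major tensor; subtensor = rows 1 and 3, columns 0 and 3.
base = list(range(24))
multi = list(base)
single = list(base)
layout = dict(strides=(6, 1), offsets=(1, 0), steps=(2, 3), extents=(2, 2))
for_each_multi_index(multi, fn=lambda x: -x, **layout)
for_each_single_index(single, fn=lambda x: -x, **layout)
touched = [i for i in range(24) if multi[i] != base[i]]
```

In pure Python the difference in cost is not representative of compiled code, so this sketch is meant to show the index arithmetic involved rather than to reproduce the reported speedups.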