Incremental Power by Mode

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

The Impact of Graphics Cards on Desktop Computer Energy Consumption

The Impact of Graphics Cards on Desktop Computer Energy Consumption. September 2012. By Pierre Delforge (NRDC) and Christopher Wold (CLASP). Acknowledgements: Project co-managed by Pierre Delforge, NRDC, and Christopher Wold, CLASP. Report written by Pierre Delforge, NRDC. Executive summary prepared by Christopher Wold, CLASP. Methodology, data collection, and analysis conducted by Eric Wanless, Brendan Trimboli, Jeffrey Swofford, Craig Billingsley, Ecova; William Westwater. Report reviewed by Jonathan Wood, Tenvic; Jim McMahon, Better Climate; Steven Pantano, Kathleen Callaghy, CLASP. Contents: Executive Summary; 1. Introduction; 2. Background; 2.1 Scope; 2.2 Computer Energy Use; 2.3 Discrete Graphics Energy Use; 3. Methodology

Intel® Desktop Board DP67BG Technical Product Specification

Intel® Desktop Board DP67BG Technical Product Specification. December 2012. Order Number: G13848-002. The Intel® Desktop Board DP67BG may contain design defects or errors known as errata that may cause the product to deviate from published specifications. Current characterized errata are documented in the Intel Desktop Board DP67BG Specification Update. Revision History: -001, first release of the Intel® Desktop Board DP67BG Technical Product Specification (January 2011); -002, specification clarification (December 2012). This product specification applies to only the standard Intel® Desktop Board DP67BG with BIOS identifier BGP6710J.86A. Changes to this specification will be published in the Intel Desktop Board DP67BG Specification Update before being incorporated into a revision of this document. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. All Intel® desktop boards are evaluated as Information Technology Equipment (I.T.E.) for use in personal computers (PC) for installation in homes, offices, schools, computer rooms, and similar locations.

TP67XE Motherboard TP67XE Specification

TP67XE Motherboard • Supports the Intel 2nd generation Core i7 and Core i5 processors in the 1155 package • Intel P67 single chip architecture • 10 Phases Power Design • Support 4-DIMM DDR3-2200(OC)/2133(OC)/2000(OC)/1600(OC)/1333 up to 32G maximum capacity • Support ATI CrossFireX and NVIDIA SLI technology • Support SATA 6Gb/s 2X speed than current SATA 3G • Support USB 3.0 • THX (TruStudio Pro) Technology for true studio audio experience • 100% X.D.C solid capacitor • BIOSTAR G.P.U (Green Power Utility) Technology for Energy Saving • BIOSTAR Toverclocker utility. TP67XE Specification. CPU SUPPORT: Intel® Core™ i7 LGA 1155 Processor, Intel® Core™ i5 LGA 1155 Processor, Intel® Core™ i3 LGA 1155 Processor. MEMORY: BIOSTAR Support Dual Channel DDR3 2200(OC)/2133(OC)/2000(OC)/1600(OC)/1333/1066 MHz, 4 x DDR3 DIMM Memory Slot, Max. Supports up to 32GB Memory. It is recommended to install memory module on the red DDR3 DIMM slot (DDR3_A2 or DDR3_B2) to boot the system. STORAGE: 2 x SATA III Connector, 3 x SATA II Connector, 1 x eSATA II Connector, Support SATA RAID: 0,1,5,10. LAN: Realtek RTL8111E - 10/100/1000 Controller. AUDIO CODEC: Realtek ALC892 8-Channel Blu-ray Audio. USB: 2 x USB 3.0 Port, 6 x USB 2.0 Port, 3 x USB 2.0 Header. EXPANSION SLOT: 2 x PCI-E 2.0 x16 Slot (CFX x8), 2 x PCI-E 2.0 x1 Slot, 2 x PCI Slot. REAR I/O: 1 x PS/2 Keyboard, 1 x eSATA2 Connector, 1 x FireWire IEEE 1394a Port, 2 x USB 3.0 Port, 6 x USB 2.0 Port, 1 x LAN Port, 6 x Audio Jacks, 1 x S/PDIF Out Port. INTERNAL I/O: 3 x USB 2.0 Header, 2 x SATA III Connector (6Gb/s), 3 x SATA II Connector (3Gb/s), 1

P67 Pro3.Pdf

P67 Pro3 User Manual Version 1.0 Published September 2010 Copyright©2010 ASRock INC. All rights reserved. Copyright Notice: No part of this manual may be reproduced, transcribed, transmitted, or translated in any language, in any form or by any means, except duplication of documentation by the purchaser for backup purpose, without written consent of ASRock Inc. Products and corporate names appearing in this manual may or may not be registered trademarks or copyrights of their respective companies, and are used only for identification or explanation and to the owners’ benefit, without intent to infringe. Disclaimer: Specifications and information contained in this manual are furnished for informational use only and subject to change without notice, and should not be construed as a commitment by ASRock. ASRock assumes no responsibility for any errors or omissions that may appear in this manual. With respect to the contents of this manual, ASRock does not provide warranty of any kind, either expressed or implied, including but not limited to the implied warranties or conditions of merchantability or fitness for a particular purpose. In no event shall ASRock, its directors, officers, employees, or agents be liable for any indirect, special, incidental, or consequential damages (including damages for loss of profits, loss of business, loss of data, interruption of business and the like), even if ASRock has been advised of the possibility of such damages arising from any defect or error in the manual or product. This device complies with Part 15 of the FCC Rules.

Fatal1ty P67 Professional Series

Fatal1ty P67 Professional Series User Manual Version 1.0 Published November 2010. Fatal1ty Story: Who knew that at age 19, I would be a World Champion PC gamer. When I was 13, I actually played competitive billiards in professional tournaments and won four or five games off guys who played at the highest level. I actually thought of making a career of it, but at that young age situations change rapidly. Because I’ve been blessed with great hand-eye coordination and a grasp of mathematics (an important element in video gaming) I gravitated to that activity. GOING PRO: I started professional gaming in 1999 when I entered the CPL (Cyberathlete Professional League) tournament in Dallas and won $4,000 for coming in third place. Emerging as one of the top players in the United States, a company interested in sponsoring me flew me to Sweden to compete against the top 12 players in the world. I won 18 straight games, lost none, and took first place, becoming the number one ranked Quake III player in the world in the process. Two months later I followed that success by traveling to Dallas and defending my title as the world’s best Quake III player, winning the $40,000 grand prize. From there I entered competitions all over the world, including Singapore, Korea, Germany, Australia, Holland and Brazil in addition to Los Angeles, New York and St. Louis. WINNING STREAK: I was excited to showcase my true gaming skills when defending my title as CPL Champion of the year at the CPL Winter 2001 because I would be competing in a totally different first person shooter (fps) game, Alien vs.

Fatal1ty P67 Performance Series UM.Indd

Fatal1ty P67 Performance Series User Manual Version 1.0 Published April 2011. Fatal1ty Story: Who knew that at age 19, I would be a World Champion PC gamer. When I was 13, I actually played competitive billiards in professional tournaments and won four or five games off guys who played at the highest level. I actually thought of making a career of it, but at that young age situations change rapidly. Because I’ve been blessed with great hand-eye coordination and a grasp of mathematics (an important element in video gaming) I gravitated to that activity. GOING PRO: I started professional gaming in 1999 when I entered the CPL (Cyberathlete Professional League) tournament in Dallas and won $4,000 for coming in third place. Emerging as one of the top players in the United States, a company interested in sponsoring me flew me to Sweden to compete against the top 12 players in the world. I won 18 straight games, lost none, and took first place, becoming the number one ranked Quake III player in the world in the process. Two months later I followed that success by traveling to Dallas and defending my title as the world’s best Quake III player, winning the $40,000 grand prize. From there I entered competitions all over the world, including Singapore, Korea, Germany, Australia, Holland and Brazil in addition to Los Angeles, New York and St. Louis. WINNING STREAK: I was excited to showcase my true gaming skills when defending my title as CPL Champion of the year at the CPL Winter 2001 because I would be competing in a totally different first person shooter (fps) game, Alien vs.

P67 Transformer-01

P67 Transformer, Intel® P67 Chipset, www.asrock.com. Detail Specification. Platform: ATX Form Factor: 12.0-in x 8.6-in, 30.5 cm x 21.8 cm; All Solid Capacitor design (100% Japan-made high-quality Conductive Polymer Capacitors). Supports Intel® Lynnfield Core™ i7 / i5 Processors in LGA1156 CPU Package; Advanced V8 + 2 Power Phase Design; Supports Intel® Turbo Boost Technology; Supports Hyper-Threading Technology; Supports Untied Overclocking Technology. Chipset: Intel® P67. Memory: Dual Channel DDR3 memory technology; 4 x DDR3 DIMM slots; Supports DDR3 2600+(OC)/2133(OC)/1866(OC)/1600/1333/1066 non-ECC, un-buffered memory; Max. capacity of system memory: 16GB; Supports Intel® Extreme Memory Profile (XMP). Expansion Slot: 1 x PCI Express 2.0 x16 slot (blue @ x16 mode); 3 x PCI Express 2.0 x1 slots; 2 x PCI slots. Audio: 7.1 CH HD Audio with Content Protection (Realtek ALC892 Audio Codec); Premium Blu-ray audio support. LAN: PCIE x1 Gigabit LAN 10/100/1000 Mb/s; Realtek RTL8111E; Supports Wake-On-LAN; Supports LAN Cable Detection; Supports Energy Efficient Ethernet 802.3az. Rear Panel I/O (I/O Panel): 1 x PS/2 Mouse Port; 1 x PS/2 Keyboard Port; 1 x Coaxial SPDIF Out Port; 1 x Optical SPDIF Out Port; 6 x Ready-to-Use USB 2.0 Ports; 1 x eSATA2 Connector; 2 x Ready-to-Use USB 3.0 Ports; 1 x RJ-45 LAN Port with LED (ACT/LINK LED and SPEED LED); 1 x Clear CMOS Switch with LED; HD Audio Jack: Side Speaker / Rear Speaker / Central / Bass / Line in / Front Speaker / Microphone. 2 x SATA3 6.0 Gb/s connectors, support RAID

Across the Intel Sandy Bridge

ADVERTORIAL. Innovations: Faster, Better and More Efficient. A smaller fabrication process lets the Intel® Sandy Bridge systems use less energy, and a native 32nm fabrication process ensures that you get more performance without leading to higher electricity costs. Less energy used also means less heat generated, so that your computer system stays cooler and therefore lasts a whole lot longer. Not content with just a native 32nm fabrication process, Intel® has also added new innovations such as Turbo Boost 2.0, which allows for longer and higher-clocked periods of extra performance. Building upon previous innovations, some new Sandy Bridge processors also use Intel® vPro™ technologies for better security, while all of the processors feature better on-chip integration for the onboard graphics and memory controller. The new onboard graphics processors include the Intel® GMA HD 2000 and 3000, which have higher clock speeds and their own Turbo Boost, so the graphics can automatically speed up as you need them to. Other innovations include hardware-encoded media codecs such as H.264, along with features like Quick Sync Video, InTru 3D and AVX instructions built into the graphics processors themselves. This means even faster transcoding for HD media content, along with faster and smoother graphics for both games and movies. Compared to its first generation Intel® Core™ i7 predecessors, the Intel® Core™ i7-2600K offers a huge number of advancements, and this is further reflected in its performance. Using a performance Intel P67-chipset board along with the Intel® Core™ i7-2600K processor, the Sandy Bridge platform easily breezes through benchmarks such as Futuremark’s PCMark05 and PCMark Vantage. Overclocking Performance

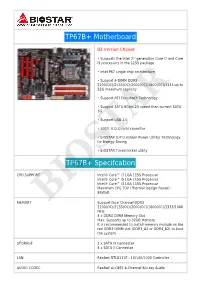

TP67B+ Motherboard TP67B+ Specification

TP67B+ Motherboard B3 Version Chipset • Supports the Intel 2nd generation Core i7 and Core i5 processors in the 1155 package • Intel P67 single chip architecture • Support 4-DIMM DDR3-2200(OC)/2133(OC)/2000(OC)/1600(OC)/1333 up to 32G maximum capacity • Support ATI CrossFireX Technology • Support SATA 6Gb/s 2X speed than current SATA 3G • Support USB 3.0 • 100% X.D.C solid capacitor • BIOSTAR G.P.U (Green Power Utility) Technology for Energy Saving • BIOSTAR Toverclocker utility. TP67B+ Specification. CPU SUPPORT: Intel® Core™ i7 LGA 1155 Processor, Intel® Core™ i5 LGA 1155 Processor, Intel® Core™ i3 LGA 1155 Processor, Maximum CPU TDP (Thermal Design Power): 95 Watt. MEMORY: BIOSTAR Support Dual Channel DDR3 2200(OC)/2133(OC)/2000(OC)/1600(OC)/1333/1066 MHz, 4 x DDR3 DIMM Memory Slot, Max. Supports up to 32GB Memory. It is recommended to install memory module on the red DDR3 DIMM slot (DDR3_A2 or DDR3_B2) to boot the system. STORAGE: 2 x SATA III Connector, 4 x SATA II Connector. LAN: Realtek RTL8111E - 10/100/1000 Controller. AUDIO CODEC: Realtek ALC892 6-Channel Blu-ray Audio. USB: 2 x USB 3.0 Port, 4 x USB 2.0 Port, 2 x USB 2.0 Header. EXPANSION SLOT: 1 x PCI-E 3.0 x16 Slot (supported by Core i5-3xxx / Core i7-3xxx CPU), 1 x PCI-E 2.0 x16 Slot (x4), 1 x PCI-E 2.0 x1 Slot, 2 x PCI Slot. REAR I/O: 1 x PS/2 Mouse, 1 x PS/2 Keyboard, 2 x USB 3.0 Port, 4 x USB 2.0 Port, 1 x LAN Port, 3 x Audio Jacks, 1 x S/PDIF Out Port. INTERNAL I/O: 1 x USB 2.0 Header, 2 x SATA III Connector (6Gb/s), 4 x SATA II Connector (3Gb/s), 1 x Front Audio Header, 1 x Front Panel Header, 1 x S/PDIF-Out

P67 Extreme6

P67 Extreme6 User Manual Version 1.0 Published November 2010 Copyright©2010 ASRock INC. All rights reserved. Copyright Notice: No part of this manual may be reproduced, transcribed, transmitted, or translated in any language, in any form or by any means, except duplication of documentation by the purchaser for backup purpose, without written consent of ASRock Inc. Products and corporate names appearing in this manual may or may not be registered trademarks or copyrights of their respective companies, and are used only for identification or explanation and to the owners’ benefit, without intent to infringe. Disclaimer: Specifications and information contained in this manual are furnished for informational use only and subject to change without notice, and should not be construed as a commitment by ASRock. ASRock assumes no responsibility for any errors or omissions that may appear in this manual. With respect to the contents of this manual, ASRock does not provide warranty of any kind, either expressed or implied, including but not limited to the implied warranties or conditions of merchantability or fitness for a particular purpose. In no event shall ASRock, its directors, officers, employees, or agents be liable for any indirect, special, incidental, or consequential damages (including damages for loss of profits, loss of business, loss of data, interruption of business and the like), even if ASRock has been advised of the possibility of such damages arising from any defect or error in the manual or product. This device complies with Part 15 of the FCC Rules.