Lecture 1: the Anatomy of a Supercomputer

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

NVIDIA Tesla Personal Supercomputer, Please Visit

NVIDIA TESLA PERSONAL SUPERCOMPUTER TESLA DATASHEET Get your own supercomputer. Experience cluster level computing performance—up to 250 times faster than standard PCs and workstations—right at your desk. The NVIDIA® Tesla™ Personal Supercomputer AccessiBLE to Everyone TESLA C1060 COMPUTING ™ PROCESSORS ARE THE CORE is based on the revolutionary NVIDIA CUDA Available from OEMs and resellers parallel computing architecture and powered OF THE TESLA PERSONAL worldwide, the Tesla Personal Supercomputer SUPERCOMPUTER by up to 960 parallel processing cores. operates quietly and plugs into a standard power strip so you can take advantage YOUR OWN SUPERCOMPUTER of cluster level performance anytime Get nearly 4 teraflops of compute capability you want, right from your desk. and the ability to perform computations 250 times faster than a multi-CPU core PC or workstation. NVIDIA CUDA UnlocKS THE POWER OF GPU parallel COMPUTING The CUDA™ parallel computing architecture enables developers to utilize C programming with NVIDIA GPUs to run the most complex computationally-intensive applications. CUDA is easy to learn and has become widely adopted by thousands of application developers worldwide to accelerate the most performance demanding applications. TESLA PERSONAL SUPERCOMPUTER | DATASHEET | MAR09 | FINAL FEATURES AND BENEFITS Your own Supercomputer Dedicated computing resource for every computational researcher and technical professional. Cluster Performance The performance of a cluster in a desktop system. Four Tesla computing on your DesKtop processors deliver nearly 4 teraflops of performance. DESIGNED for OFFICE USE Plugs into a standard office power socket and quiet enough for use at your desk. Massively Parallel Many Core 240 parallel processor cores per GPU that can execute thousands of GPU Architecture concurrent threads. -

"Computers" Abacus—The First Calculator

Component 4: Introduction to Information and Computer Science Unit 1: Basic Computing Concepts, Including History Lecture 4 BMI540/640 Week 1 This material was developed by Oregon Health & Science University, funded by the Department of Health and Human Services, Office of the National Coordinator for Health Information Technology under Award Number IU24OC000015. The First "Computers" • The word "computer" was first recorded in 1613 • Referred to a person who performed calculations • Evidence of counting is traced to at least 35,000 BC Ishango Bone Tally Stick: Science Museum of Brussels Component 4/Unit 1-4 Health IT Workforce Curriculum 2 Version 2.0/Spring 2011 Abacus—The First Calculator • Invented by Babylonians in 2400 BC — many subsequent versions • Used for counting before there were written numbers • Still used today The Chinese Lee Abacus http://www.ee.ryerson.ca/~elf/abacus/ Component 4/Unit 1-4 Health IT Workforce Curriculum 3 Version 2.0/Spring 2011 1 Slide Rules John Napier William Oughtred • By the Middle Ages, number systems were developed • John Napier discovered/developed logarithms at the turn of the 17 th century • William Oughtred used logarithms to invent the slide rude in 1621 in England • Used for multiplication, division, logarithms, roots, trigonometric functions • Used until early 70s when electronic calculators became available Component 4/Unit 1-4 Health IT Workforce Curriculum 4 Version 2.0/Spring 2011 Mechanical Computers • Use mechanical parts to automate calculations • Limited operations • First one was the ancient Antikythera computer from 150 BC Used gears to calculate position of sun and moon Fragment of Antikythera mechanism Component 4/Unit 1-4 Health IT Workforce Curriculum 5 Version 2.0/Spring 2011 Leonardo da Vinci 1452-1519, Italy Leonardo da Vinci • Two notebooks discovered in 1967 showed drawings for a mechanical calculator • A replica was built soon after Leonardo da Vinci's notes and the replica The Controversial Replica of Leonardo da Vinci's Adding Machine . -

Computersacific 2008CIFIC Philosophical Universityuk Articles PHILOSOPHICAL Publishing of Quarterlysouthernltd QUARTERLY California and Blackwell Publishing Ltd

PAPQ 3 0 9 Operator: Xiaohua Zhou Dispatch: 21.12.07 PE: Roy See Journal Name Manuscript No. Proofreader: Wu Xiuhua No. of Pages: 42 Copy-editor: 1 BlackwellOxford,PAPQP0279-0750©XXXOriginalPACOMPUTERSacific 2008CIFIC Philosophical UniversityUK Articles PHILOSOPHICAL Publishing of QuarterlySouthernLtd QUARTERLY California and Blackwell Publishing Ltd. 2 3 COMPUTERS 4 5 6 BY 7 8 GUALTIERO PICCININI 9 10 Abstract: I offer an explication of the notion of the computer, grounded 11 in the practices of computability theorists and computer scientists. I begin by 12 explaining what distinguishes computers from calculators. Then, I offer a 13 systematic taxonomy of kinds of computer, including hard-wired versus 14 programmable, general-purpose versus special-purpose, analog versus digital, and serial versus parallel, giving explicit criteria for each kind. 15 My account is mechanistic: which class a system belongs in, and which 16 functions are computable by which system, depends on the system’s 17 mechanistic properties. Finally, I briefly illustrate how my account sheds 18 light on some issues in the history and philosophy of computing as well 19 as the philosophy of mind. What exactly is a digital computer? (Searle, 1992, p. 205) 20 21 22 23 In our everyday life, we distinguish between things that compute, such as 24 pocket calculators, and things that don’t, such as bicycles. We also distinguish 25 between different kinds of computing device. Some devices, such as abaci, 26 have parts that need to be moved by hand. They may be called computing 27 aids. Other devices contain internal mechanisms that, once started, produce 28 a result without further external intervention. -

Programs Processing Programmable Calculator

IAEA-TECDOC-252 PROGRAMS PROCESSING RADIOIMMUNOASSAY PROGRAMMABLE CALCULATOR Off-Line Analysi f Countinso g Data from Standard Unknownd san s A TECHNICAL DOCUMENT ISSUEE TH Y DB INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1981 PROGRAM DATR SFO A PROCESSIN RADIOIMMUNOASSAN I G Y USIN HP-41E GTH C PROGRAMMABLE CALCULATOR IAEA, VIENNA, 1981 PrinteIAEe Austrin th i A y b d a September 1981 PLEASE BE AWARE THAT ALL OF THE MISSING PAGES IN THIS DOCUMENT WERE ORIGINALLY BLANK The IAEA does not maintain stocks of reports in this series. However, microfiche copies of these reports can be obtained from INIS Microfiche Clearinghouse International Atomic Energy Agency Wagramerstrasse 5 P.O.Bo0 x10 A-1400 Vienna, Austria on prepayment of Austrian Schillings 25.50 or against one IAEA microfiche service coupon to the value of US $2.00. PREFACE The Medical Applications Section of the International Atomic Energy Agenc s developeha y d severae th ln o programe us r fo s Hewlett-Packard HP-41C programmable calculator to facilitate better quality control in radioimmunoassay through improved data processing. The programs described in this document are designed for off-line analysis of counting data from standard and "unknown" specimens, i.e., for analysis of counting data previously recorded by a counter. Two companion documents will follow offering (1) analogous programe on-linus r conjunction fo i se n wit suitabla h y designed counter, and (2) programs for analysis of specimens introduced int successioa o f assano y batches from "quality-control pools" of the substance being measured. Suggestions for improvements of these programs and their documentation should be brought to the attention of: Robert A. -

TEC 201 Microcomputers – Applications and Techniques (3) Two Hours Lecture and Two Hours Lab Per Week

TEC 201 Microcomputers – Applications and Techniques (3) Two hours lecture and two hours lab per week. An introduction to microcomputer hardware and applications of the microcomputer in industry. Hands-on experience with computer system hardware and software. TEC 209 Introduction to Industrial Technology (3) This course examines fundamental topics in Industrial Technology. Topics include: role and scope of Industrial Technology, career paths, problem solving in Technology, numbering systems, scientific calculators, dimensioning and tolerancing and computer applications in Industrial Technology. TEC 210 Machining/Manufacturing Processes (3) An introduction to machining concepts and basic processes. Practical experiences with hand tools, jigs, drills, grinders, mills and lathes is emphasized. TEC 211 AC/DC Circuits (3) Prerequisite: MS 112. Two hours lecture and two hours lab. Scientific and engineering notation; voltage, current, resistance and power, inductors, capacitors, network theorems, phaser analysis of AC circuits. TEC 225 Electronic Devices I (4) Prerequisites: MS 112 and TEC 211. Three hours lecture and two hours lab. First course in solid state devices. Course topics include: solid state fundamentals, diodes, BJTs, amplifiers and FETs. TEC 250 Computer-Aided Design I (3) Prerequisites: MS 112, TEC 201 or equivalent. Two hours lecture and two hours lab. Interpreting engineering drawings and the creation of computer graphics as applied to two-dimensional drafting and design. TEC 252 Programmable Controllers (3) Prerequisite: TEC 201 or equivalent. Two hours lecture and two hours lab. Study of basic industrial control concepts using modern PLC systems. TEC 302 Advanced Technical Mathematics (4) Prerequisite: MS 112 or higher. Selected topics from trigonometry, analytic geometry, differential and integral calculus. -

Carbon Calculator *CE = Carbon Emissions

Carbon Calculator *CE = Carbon Emissions What is a “Carbon Emission”? A Carbon Emission is the unit of measurement that measures carbon dioxide (CO 2 ). What is the “Carbon Footprint”? A carbon footprint is the measure of the environmental impact of a particular individual or organization's lifestyle or operation, measured in units of carbon dioxide (carbon emissions). Recycling Upcycling 1 Cellphone = 20 lbs of CE 1 Cellphone = 38.4 lbs of CE 1 Laptop = 41 lbs of CE 1 Laptop = 78.7 lbs of CE 1 Tablet = 25 lbs of CE 1 Tablet = 48 lbs of CE 1 iPod = 10 lbs of CE 1 iPod = 19.2 lbs of CE 1 Apple TV/Mini = 20 lbs of CE 1 Apple TV/Mini = 38.4 lbs of CE *1 Video Game Console = 15 lbs of CE 1 Video Game Console = 28.8 lbs of CE *1 Digital Camera = 10 lbs of CE 1 Digital Camera = 19.2 lbs of CE *1 DSLR Camera = 20 lbs of CE 1 DSLR Camera = 38.4 lbs of CE *1 Video Game = 5 lbs of CE 1 Video Game = 9.6 lbs of CE *1 GPS = 5 lbs of CE 1 GPS = 9.6 lbs of CE *Accepted in working condition only If items DO NOT qualify for Upcycling, they will be properly Recycled. Full reporting per school will be submitted with carbon emission totals. Causes International, Inc. • 75 Second Avenue Suite 605 • Needham Heights, MA 02494 (781) 444-8800 • www.causesinternational.com • [email protected] Earth Day Upcycle Product List In working condition only. -

The Sunway Taihulight Supercomputer: System and Applications

SCIENCE CHINA Information Sciences . RESEARCH PAPER . July 2016, Vol. 59 072001:1–072001:16 doi: 10.1007/s11432-016-5588-7 The Sunway TaihuLight supercomputer: system and applications Haohuan FU1,3 , Junfeng LIAO1,2,3 , Jinzhe YANG2, Lanning WANG4 , Zhenya SONG6 , Xiaomeng HUANG1,3 , Chao YANG5, Wei XUE1,2,3 , Fangfang LIU5 , Fangli QIAO6 , Wei ZHAO6 , Xunqiang YIN6 , Chaofeng HOU7 , Chenglong ZHANG7, Wei GE7 , Jian ZHANG8, Yangang WANG8, Chunbo ZHOU8 & Guangwen YANG1,2,3* 1Ministry of Education Key Laboratory for Earth System Modeling, and Center for Earth System Science, Tsinghua University, Beijing 100084, China; 2Department of Computer Science and Technology, Tsinghua University, Beijing 100084, China; 3National Supercomputing Center in Wuxi, Wuxi 214072, China; 4College of Global Change and Earth System Science, Beijing Normal University, Beijing 100875, China; 5Institute of Software, Chinese Academy of Sciences, Beijing 100190, China; 6First Institute of Oceanography, State Oceanic Administration, Qingdao 266061, China; 7Institute of Process Engineering, Chinese Academy of Sciences, Beijing 100190, China; 8Computer Network Information Center, Chinese Academy of Sciences, Beijing 100190, China Received May 27, 2016; accepted June 11, 2016; published online June 21, 2016 Abstract The Sunway TaihuLight supercomputer is the world’s first system with a peak performance greater than 100 PFlops. In this paper, we provide a detailed introduction to the TaihuLight system. In contrast with other existing heterogeneous supercomputers, which include both CPU processors and PCIe-connected many-core accelerators (NVIDIA GPU or Intel Xeon Phi), the computing power of TaihuLight is provided by a homegrown many-core SW26010 CPU that includes both the management processing elements (MPEs) and computing processing elements (CPEs) in one chip. -

Scheduling Many-Task Workloads on Supercomputers: Dealing with Trailing Tasks

Scheduling Many-Task Workloads on Supercomputers: Dealing with Trailing Tasks Timothy G. Armstrong, Zhao Zhang Daniel S. Katz, Michael Wilde, Ian T. Foster Department of Computer Science Computation Institute University of Chicago University of Chicago & Argonne National Laboratory [email protected], [email protected] [email protected], [email protected], [email protected] Abstract—In order for many-task applications to be attrac- as a worker and allocate one task per node. If tasks are tive candidates for running on high-end supercomputers, they single-threaded, each core or virtual thread can be treated must be able to benefit from the additional compute, I/O, as a worker. and communication performance provided by high-end HPC hardware relative to clusters, grids, or clouds. Typically this The second feature of many-task applications is an empha- means that the application should use the HPC resource in sis on high performance. The many tasks that make up the such a way that it can reduce time to solution beyond what application effectively collaborate to produce some result, is possible otherwise. Furthermore, it is necessary to make and in many cases it is important to get the results quickly. efficient use of the computational resources, achieving high This feature motivates the development of techniques to levels of utilization. Satisfying these twin goals is not trivial, because while the efficiently run many-task applications on HPC hardware. It parallelism in many task computations can vary over time, allows people to design and develop performance-critical on many large machines the allocation policy requires that applications in a many-task style and enables the scaling worker CPUs be provisioned and also relinquished in large up of existing many-task applications to run on much larger blocks rather than individually. -

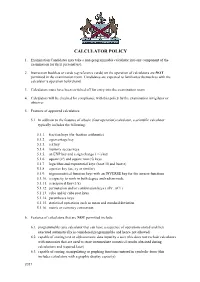

Calculator Policy

CALCULATOR POLICY 1. Examination Candidates may take a non-programmable calculator into any component of the examination for their personal use. 2. Instruction booklets or cards (eg reference cards) on the operation of calculators are NOT permitted in the examination room. Candidates are expected to familiarise themselves with the calculator’s operation beforehand. 3. Calculators must have been switched off for entry into the examination room. 4. Calculators will be checked for compliance with this policy by the examination invigilator or observer. 5. Features of approved calculators: 5.1. In addition to the features of a basic (four operation) calculator, a scientific calculator typically includes the following: 5.1.1. fraction keys (for fraction arithmetic) 5.1.2. a percentage key 5.1.3. a π key 5.1.4. memory access keys 5.1.5. an EXP key and a sign change (+/-) key 5.1.6. square (x²) and square root (√) keys 5.1.7. logarithm and exponential keys (base 10 and base e) 5.1.8. a power key (ax, xy or similar) 5.1.9. trigonometrical function keys with an INVERSE key for the inverse functions 5.1.10. a capacity to work in both degree and radian mode 5.1.11. a reciprocal key (1/x) 5.1.12. permutation and/or combination keys ( nPr , nCr ) 5.1.13. cube and/or cube root keys 5.1.14. parentheses keys 5.1.15. statistical operations such as mean and standard deviation 5.1.16. metric or currency conversion 6. Features of calculators that are NOT permitted include: 6.1. -

E-Commerce Marketplace

E-COMMERCE MARKETPLACE NIMBIS, AWESIM “Nimbis is providing, essentially, the e-commerce infrastructure that allows suppliers and OEMs DEVELOP COLLABORATIVE together, to connect together in a collaborative HPC ENVIRONMENT form … AweSim represents a big, giant step forward in that direction.” Nimbis, founded in 2008, acts as a clearinghouse for buyers and sellers of technical computing — Bob Graybill, Nimbis president and CEO services and provides pre-negotiated access to high performance computing services, software, and expertise from the leading compute time vendors, independent software vendors, and domain experts. Partnering with the leading computing service companies, Nimbis provides users with a choice growing menu of pre-qualified, pre-negotiated services from HPC cycle providers, independent software vendors, domain experts, and regional solution providers, delivered on a “pay-as-you- go” basis. Nimbis makes it easier and more affordable for desktop users to exploit technical computing for faster results and superior products and solutions. VIRTUAL DESIGNS. REAL BENEFITS. Nimbis Services Inc., a founding associate of the AweSim industrial engagement initiative led by the Ohio Supercomputer Center, has been delving into access complexities and producing, through innovative e-commerce solutions, an easy approach to modeling and simulation resources for small and medium-sized businesses. INFORMATION TECHNOLOGY INFORMATION TECHNOLOGY 2016 THE CHALLENGE Nimbis and the AweSim program, along with its predecessor program Blue Collar Computing, have identified several obstacles that impede widespread adoption of modeling and simulation powered by high performance computing: expensive hardware, complex software and extensive training. In response, the public/private partnership is developing and promoting use of customized applications (apps) linked to OSC’s powerful supercomputer systems. -

Wearable Technology for Enhanced Security

Communications on Applied Electronics (CAE) – ISSN : 2394-4714 Foundation of Computer Science FCS, New York, USA Volume 5 – No.10, September 2016 – www.caeaccess.org Wearable Technology for Enhanced Security Agbaje M. Olugbenga, PhD Babcock University Department of Computer Science Ogun State, Nigeria ABSTRACT Sproutling. Watches like the Apple Watch, and jewelry such Wearable's comprise of sensors and have computational as Cuff and Ringly. ability. Gadgets such as wristwatches, pens, and glasses with Taking a look at the history of computer generations up to the installed cameras are now available at cheap prices for user to present, we could divide it into three main types: mainframe purchase to monitor or securing themselves. Nigerian faced computing, personal computing, and ubiquitous or pervasive with several kidnapping in schools, homes and abduction for computing[4]. The various divisions is based on the number ransomed collection and other unlawful acts necessitate these of computers per users. The mainframe computing describes reviews. The success of the wearable technology in medical one large computer connected to many users and the second, uses prompted the research into application into security uses. personal computing, as one computer per person while the The method of research is the use of case studies and literature term ubiquitous computing however, was used in 1991 by search. This paper takes a look at the possible applications of Paul Weiser. Weiser depicted a world full of embedded the wearable technology to combat the cases of abduction and sensing technologies to streamline and improve life [5]. kidnapping in Nigeria. Nigeria faced with several kidnapping in schools and homes General Terms are in dire need for solution. -

Mysugr Bolus Calculator User Manual

mySugr Bolus Calculator User Manual Version: 3.0.8_Android - 2021-06-02 1 Indications for use 1.1 Intended use The mySugr Bolus Calculator, as a function of the mySugr Logbook app, is intended for the management of insulin dependent diabetes by calculating a bolus insulin dose or carbohydrate intake based on therapy data of the patient. Before its use, the intended user performs a setup using patient-specific target blood glucose, carbohydrate to insulin ratio, insulin correction factor and insulin acting time parameters provided by the responsible healthcare professional. For the calculation, in addition to the setup parameters, the algorithm uses current blood glucose values, planned carbohydrate intake and the active insulin which is calculated based on the insulin action curves of the respective insulin type. 1.2 Who is the mySugr Bolus Calculator for? The mySugr Bolus Calculator is designed for users: diagnosed with insulin dependent diabetes aged 18 years and above treated with short-acting human insulin or rapid-acting analog insulin undergoing intensified insulin therapy in the form of Multiple Daily Injections (MDI) or Continuous Subcutaneous Insulin Infusion (CSII) under guidance of a doctor or other healthcare professional who are physically and mentally able to independently manage their diabetes therapy able to proficiently use a smartphone 1.3 Environment for use As a mobile application, the mySugr Bolus Calculator can be used in any environment where the user would typically and safely use a smartphone. 2 Contraindications 2.1 Circumstances for bolus calculation The mySugr Bolus Calculator can not be used when: the user's blood glucose is below 20 mg/dL or 1.2 mmol/L the user's blood glucose is above 500 mg/dL or 27.7 mmol/L the time of the log entry containing input data for the calculation is older than 15 minutes 1 2.2 Insulin restrictions The mySugr Bolus Calculator may only be used with the insulins listed in the app settings and must especially not be used with either combination or long acting insulin.