ACM SIGARCH Order # Is 415972. IEEE Computer Society Press Order # Is RS00160

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Multics System, 1975

Honeywell The Multics System © 1975, 1976, Honeywell Information Systems Inc. File No.:lLll - -- - ecure A Unique Business Problem-Solving Tool Here is a computer system that enables data processing users to control and distribute easily accessible computer power. The Honeywell Multics System represents an advanced approach to making the computer an integral, thoroughly reliable part of a company's operation. The Multics System replaces many of the procedures limiting conventional systems and sweeps away many of the factors that have restricted the application of computers to routine data processing assignments. Now, with Multics, the computer becomes a responsive tool for solving challenging business problems. The Multics System incorporates many of the most user-oriented programming and supervisory techniques yet devised. These techniques are available to all users automatically through the Multics operating supervisor. Because it is a unique combination of advanced computing theory and outstanding computer hardware, Multics can provide an information service system more advanced than any other yet available. Honeywell offers, as part of its advanced Series 60 line, two models for Multics - the Model 68/60 and the Model 68/80. While contributing significantly to the application diversity of the Series 60 family, these Multics systems also enable Honeywell to accommodate more efficiently the computing needs of today's businesses. Multics is Transaction Processing - and More Although Multics is by design a transaction oriented, interactive information system, its functional capability encompasses the full spectrum of a general purpose computer. Ease of accessibility, featuring a simple and consistent user interface for all types of services. There is no job control or command language to learn and an -

Download The

LEADING THE FUTURE OF TECHNOLOGY 2016 ANNUAL REPORT TABLE OF CONTENTS 1 MESSAGE FROM THE IEEE PRESIDENT AND THE EXECUTIVE DIRECTOR 3 LEADING THE FUTURE OF TECHNOLOGY 5 GROWING GLOBAL AND INDUSTRY PARTNERSHIPS 11 ADVANCING TECHNOLOGY 17 INCREASING AWARENESS 23 AWARDING EXCELLENCE 29 EXPANSION AND OUTREACH 33 ELEVATING ENGAGEMENT 37 MESSAGE FROM THE TREASURER AND REPORT OF INDEPENDENT CERTIFIED PUBLIC ACCOUNTANTS 39 CONSOLIDATED FINANCIAL STATEMENTS Barry L. Shoop 2016 IEEE President and CEO IEEE Xplore® Digital Library to enable personalized importantly, we must be willing to rise again, learn experiences based on second-generation analytics. from our experiences, and advance. As our members drive ever-faster technological revolutions, each of us MESSAGE FROM As IEEE’s membership continues to grow must play a role in guaranteeing that our professional internationally, we have expanded our global presence society remains relevant, that it is as innovative as our THE IEEE PRESIDENT AND and engagement by opening offices in key geographic members are, and that it continues to evolve to meet locations around the world. In 2016, IEEE opened a the challenges of the ever-changing world around us. second office in China, due to growth in the country THE EXECUTIVE DIRECTOR and to better support engineers in Shenzhen, China’s From Big Data and Cloud Computing to Smart Grid, Silicon Valley. We expanded our office in Bangalore, Cybersecurity and our Brain Initiative, IEEE members India, and are preparing for the opening of a new IEEE are working across varied disciplines, pursuing Technology continues to be a transformative power We continue to make great strides in our efforts to office in Vienna, Austria. -

Xinya (Leah) Zhao Abdulahi Abu Outline

K-Computer Xinya (Leah) Zhao Abdulahi Abu Outline • History of Supercomputing • K-Computer Architecture • Programming Environment • Performance Evaluation • What's next? Timeline of Supercomputing Control Data Corporation (1960s) • CDC 1604 (1960): First solid state • CDC 6600 (1964): 100 computers were sold at $8 million each • Gained speed by "farming out" work to peripheral computing elements, freeing the CPU to process actual data • STAR-100: First to use vector processing The Cray era (mid-1970s - 1980s) • 80 MHz Cray-1 (1976): The most successful supercomputers in history • Vector processor • Introduced chaining in which scalar and vector registers generate interim results • The Cray-2 (1985): No chaining and high memory latency with deep pipelining Massive Processing (1990s) • Hitachi SR2201 (1996): used 2048 processors connected via a fast three dimensional crossbar network • ASCI Red: mesh-based MIMD massively parallel system with over 9,000 compute nodes and well over 12 terabytes of disk storage • ASCI Red was the first ever to break through the 1 teraflop barrier Petaflop Computing (21st century) • Realization: Power of large number of small processors can be harnessed to achieve high performance • IBM Blue Gene architecture: trades processor speed for low power consumption, so a large number of processors can be used at air cooled temperature • K computer (2011): fastest in the world K Computer is #1 !!! Why K Computer? Purpose: • Perform extremely complex mathematical or scientific calculations (ex: modelling the changes -

Multics Security Evaluation: Vulnerability Analysis*

* Multics Security Evaluation: Vulnerability Analysis Paul A. Karger, 2Lt, USAF Roger R. Schell, Maj, USAF Deputy for Command and Management Systems (MCI), HQ Electronic Systems Division Hanscom AFB, MA 01730 ABSTRACT A security evaluation of Multics for potential use as a two-level (Secret / Top Secret) system in the Air Force Data Services Center (AFDSC) is presented. An overview is provided of the present implementation of the Multics Security controls. The report then details the results of a penetration exercise of Multics on the HIS 645 computer. In addition, preliminary results of a penetration exercise of Multics on the new HIS 6180 computer are presented. The report concludes that Multics as implemented today is not certifiably secure and cannot be used in an open use multi-level system. However, the Multics security design principles are significantly better than other contemporary systems. Thus, Multics as implemented today, can be used in a benign Secret / Top Secret environment. In addition, Multics forms a base from which a certifiably secure open use multi-level system can be developed. operational requirements cannot be met by dedicated systems because of the lack of information sharing. It has been estimated by the Electronic Systems Division (ESD) sponsored Computer Security Technology Panel [10] that these additional costs may amount to $100,000,000 per year for the Air Force alone. 1.2 Requirement for Multics Security Evaluation This evaluation of the security of the Multics system was performed under Project 6917, Program Element 64708F to meet requirements of the Air Force Data Services Center (AFDSC). AFDSC must provide responsive interactive time-shared computer services to users within the Pentagon at all classification levels from unclassified to -

Towards Comprehensible and Effective Permission Systems

Towards Comprehensible and Effective Permission Systems Adrienne Porter Felt Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2012-185 http://www.eecs.berkeley.edu/Pubs/TechRpts/2012/EECS-2012-185.html August 9, 2012 Copyright © 2012, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Towards Comprehensible and Effective Permission Systems by Adrienne Porter Felt A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the GRADUATE DIVISION of the UNIVERSITY OF CALIFORNIA, BERKELEY Committee in charge: Professor David Wagner, Chair Professor Vern Paxson Professor Tapan Parikh Fall 2012 Towards Comprehensible and Effective Permission Systems Copyright © 2012 by Adrienne Porter Felt Abstract Towards Comprehensible and Effective Permission Systems by Adrienne Porter Felt Doctor of Philosophy in Computer Science University of California, Berkeley Professor David Wagner, Chair How can we, as platform designers, protect computer users from the threats associated with malicious, privacy-invasive, and vulnerable applications? Modern platforms have turned away from the traditional user-based permission model and begun adopting application permission systems in an attempt to shield users from these threats. This dissertation evaluates modern permission systems with the goal of improving the security of future platforms. -

David D. Clark

Designs for an Internet David D. Clark Draft Version 3.0 of Jan 1, 2017 Status This version of the book is a pre-release intended to get feedback and comments from members of the network research community and other interested readers. Readers should assume that the book will receive substantial revision. The chapters on economics, management and meeting the needs of society are preliminary, and comments are particularly solicited on these chapters. Suggestions as to how to improve the descriptions of the various architectures I have discussed are particularly solicited, as are suggestions about additional citations to relevant material. For those with a technical background, note that the appendix contains a further review of relevant architectural work, beyond what is in Chapter 5. I am particularly interested in learning which parts of the book non-technical readers find hard to follow. Revision history Version 1.1 first pre-release May 9 2016. Version 2.0 October 2016. Addition of appendix with further review of related work. Addition of a “Chapter zero”, which provides an introduction to the Internet for non-technical readers. Substantial revision to several chapters. Version 3.0 Jan 2017 Addition of discussion of Active Nets Still missing–discussion of SDN in management chapter. A note on the cover The picture I used on the cover is not strictly “architecture”. It is a picture of the Memorial to the Murdered Jews of Europe, in Berlin, which I photographed in 2006. -

2008 Cse Course List 56

It is CSE’s intention every year to make the Annual Report representative of the whole Department. With this ideal in mind, a design contest is held every year open to Graduate and Undergraduate students. This year’s winner was James Dickson, a junior CSE major who hails from Granville, Ohio. CONTENTS 2008 ACHIEVEMENT & HIGHLIGHTS 1 ANNUAL CSE DEPARTMENT AWARDS 11 INDUSTRIAL ADVISORY BOARD 12 RETIREMENT DOUBLE HIT 13 RESEARCH 14 GRANTS, AWARDS & GIFTS 19 COLLOQUIUM 27 STUDENTS 29 FACULTY AND STAFF 38 SELECT FACULTY PUBLICATIONS 49 2007 - 2008 CSE COURSE LIST 56 395 Dreese Labs 2015 Neil Avenue Columbus, Ohio 43210-1277 (614) 292-5813 WWW.CSE.OHIO-STATE.EDU Mission Statement • The Department of Computer Science and Engineering will impact the information age as a national leader in computing research and education. • We will prepare computing graduates who are highly sought after, productive, and well-respected for their work, and who contribute to new developments in computing. • We will give students in other disciplines an appropriate foundation in computing for their education, research, and experiences after graduation, consistent with computing’s increasingly fundamental role in society. • In our areas of research focus, we will contribute key ideas to the development of the computing basis of the information age, advancing the state of the art for the benefit of society, the State of Ohio, and The Ohio State University. • We will work with key academic partners within and outside of OSU, and with key industrial partners, in pursuit of our research and educational endeavors. GREETINGS FROM THE CHAIR’S OFFICE Dear Colleagues, Alumni, Friends, and Parents, As we reach the end of the 2007-2008 academic year, I am glad to introduce to you a new annual report of the department. -

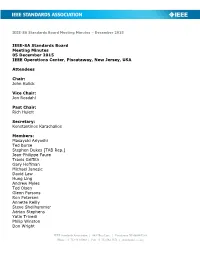

IEEE-SA Standards Board Meeting Minutes – December 2015

Board Meeting Minutes – September 2011 IEEE-SA Standards Board Meeting Minutes – December 2015 IEEE-SA Standards Board Meeting Minutes 05 December 2015 IEEE Operations Center, Piscataway, New Jersey, USA Attendees Chair: John Kulick Vice Chair: Jon Rosdahl Past Chair: Rich Hulett Secretary: Konstantinos Karachalios Members: Masayuki Ariyoshi Ted Burse Stephen Dukes [TAB Rep.] Jean-Philippe Faure Travis Griffith Gary Hoffman Michael Janezic David Law Hung Ling Andrew Myles Ted Olsen Glenn Parsons Ron Petersen Annette Reilly Steve Shellhammer Adrian Stephens Yatin Trivedi Philip Winston Don Wright Yu Yuan Daidi Zhong Thomas Koshy, NRC Liaison Jim Matthews, IEC Liaison Members Absent: Joe Koepfinger, Member Emeritus Dick DeBlasio, DOE Liaison IEEE Staff: Melissa Aranzamendez Kathryn Bennett Christina Boyce Matthew Ceglia Tom Compton Christian DeFelice Karen Evangelista Jonathan Goldberg Mary Ellen Hanntz Yvette Ho Sang Noelle Humenick Karen Kenney Michael Kipness Adam Newman Mary Lynne Nielsen Moira Patterson Walter Pienciak Dave Ringle, Recording Secretary Rudi Schubert Sam Sciacca Patrick Slaats Walter Sun Susan Tatiner Cherry Tom Lisa Weisser Meng Zhao IEEE Outside Legal Counsel: Claire Topp Guests: Chuck Adams Dennis Brophy Dave Djavaherian James Gilb Scott Gilfillan Daniel Hermele Bruce Kraemer Xiaohui Liu Kevin Lu John Messenger Gil Ohana Mehmet Ulema, ComSoc Liaison to the SASB Neil Vohra Walter Weigel Yingli Wen Phil Wennblom Howard Wolfman Helene Workman Paul Zeineddin IEEE Standards Association | 445 Hoes Lane | Piscataway NJ 08854 USA Phone: +1 732 981 0060 | Fax: +1 732 562 1571 | standards.ieee.org 1 Call to Order Chair Kulick called the meeting to order at 9:00 a.m. -

Shan Lu

Shan Lu University of Chicago, Dept. of Computer Science Phone: +1-773-702-3184 5730 S. Ellis Ave., Rm 343 E-mail: [email protected] Chicago, IL 60637 USA Homepage: http://people.cs.uchicago.edu/~shanlu RESEARCH INTERESTS Tool support for improving the correctness and efficiency of large scale software systems EMPLOYMENT 2019 – present Professor, Dept. of Computer Science, University of Chicago 2014 – 2019 Associate Professor, Dept. of Computer Sciences, University of Chicago 2009 – 2014 Assistant Professor, Dept. of Computer Sciences, University of Wisconsin – Madison EDUCATION 2008 University of Illinois at Urbana-Champaign, Urbana, IL Ph.D. in Computer Science Thesis: Understanding, Detecting, and Exposing Concurrency Bugs (Advisor: Prof. Yuanyuan Zhou) 2003 University of Science & Technology of China, Hefei, China B.S. in Computer Science HONORS AND AWARDS 2019 ACM Distinguished Member Among 62 members world-wide recognized for outstanding contributions to the computing field 2015 Google Faculty Research Award 2014 Alfred P. Sloan Research Fellow Among 126 “early-career scholars (who) represent the most promising scientific researchers working today” 2013 Distinguished Alumni Educator Award Among 3 awardees selected by Department of Computer Science, University of Illinois 2010 NSF Career Award 2021 Honorable Mention Award @ CHI for paper [C71] (CHI 2021) 2019 Best Paper Award @ SOSP for paper [C62] (SOSP 2019) 2019 ACM SIGSOFT Distinguished Paper Award @ ICSE for paper [C58] (ICSE 2019) 2017 Google Scholar Classic Paper Award for -

Software Engineering 0835

Software Engineering 0835 1. Objectives In order to cultivate top creative talents in the field of software engineering, this discipline is designed to help students develop awareness of innovation and innovation capacity. Focusing on relevant software engineering research, students are expected to master the solid and broad basic theory and in-depth expertise and to achieve innovative research results. Doctoral students in this field should have a rigorous scientific attitude, good scientific style and scientific ethics, a wide range of disciplinary perspectives, a pioneering spirit and the overall quality for independently conducting scientific research. 2. Length of Study Period (Postgraduate classification: Normal length of study period; Length of study period) Doctoral students: 4 years full-time or 5 years part-time; 3 to 5 years full-time or 5 to 7 years part-time. Direct Doctoral students I: 5 years full-time; 5 to 7 years full-time. Direct Doctoral students II: 6 years full-time; 5 to 7 years full-time. Notes: (1) Direct Doctoral students I: Doctoral students pursuing a doctoral degree directly from a bachelor degree (2) Direct Doctoral students II: Doctoral students of successive postgraduate and doctoral programs of study without a master degree 3. Research Areas (1) Software engineering theory and methods (2) Embedded computing and the Internet of Things (3) Digital media technologies (4) Trustworthy software (5) Computer and network security 4. Curriculums and Credits 4.1 Doctoral students (14 Credits or above) Course Classification Course -

Recent Supercomputing Development in Japan

Supercomputing in Japan Yoshio Oyanagi Dean, Faculty of Information Science Kogakuin University 2006/4/24 Generations • Primordial Ages (1970’s) – Cray-1, 75APU, IAP • 1st Generation (1H of 1980’s) – Cyber205, XMP, S810, VP200, SX-2 • 2nd Generation (2H of 1980’s) – YMP, ETA-10, S820, VP2600, SX-3, nCUBE, CM-1 • 3rd Generation (1H of 1990’s) – C90, T3D, Cray-3, S3800, VPP500, SX-4, SP-1/2, CM-5, KSR2 (HPC ventures went out) • 4th Generation (2H of 1990’s) – T90, T3E, SV1, SP-3, Starfire, VPP300/700/5000, SX-5, SR2201/8000, ASCI(Red, Blue) • 5th Generation (1H of 2000’s) – ASCI, TeraGrid, BlueGene/L, X1, Origin, Power4/5, ES, SX-6/7/8, PP HPC2500, SR11000, …. Primordial Ages (1970’s) 1974 DAP, BSP and HEP started 1975 ILLIAC IV becomes operational 1976 Cray-1 delivered to LANL 80MHz, 160MF 1976 FPS AP-120B delivered 1977 FACOM230-75 APU 22MF 1978 HITAC M-180 IAP 1978 PAX project started (Hoshino and Kawai) 1979 HEP operational as a single processor 1979 HITAC M-200H IAP 48MF 1982 NEC ACOS-1000 IAP 28MF 1982 HITAC M280H IAP 67MF Characteristics of Japanese SC’s 1. Manufactured by main-frame vendors with semiconductor facilities (not ventures) 2. Vector processors are attached to mainframes 3. HITAC IAP a) memory-to-memory b) summation, inner product and 1st order recurrence can be vectorized c) vectorization of loops with IF’s (M280) 4. No high performance parallel machines 1st Generation (1H of 1980’s) 1981 FPS-164 (64 bits) 1981 CDC Cyber 205 400MF 1982 Cray XMP-2 Steve Chen 630MF 1982 Cosmic Cube in Caltech, Alliant FX/8 delivered, HEP installed 1983 HITAC S-810/20 630MF 1983 FACOM VP-200 570MF 1983 Encore, Sequent and TMC founded, ETA span off from CDC 1st Generation (1H of 1980’s) (continued) 1984 Multiflow founded 1984 Cray XMP-4 1260MF 1984 PAX-64J completed (Tsukuba) 1985 NEC SX-2 1300MF 1985 FPS-264 1985 Convex C1 1985 Cray-2 1952MF 1985 Intel iPSC/1, T414, NCUBE/1, Stellar, Ardent… 1985 FACOM VP-400 1140MF 1986 CM-1 shipped, FPS T-series (max 1TF!!) Characteristics of Japanese SC in the 1st G. -

List of Internet Pioneers

List of Internet pioneers Instead of a single "inventor", the Internet was developed by many people over many years. The following are some Internet pioneers who contributed to its early development. These include early theoretical foundations, specifying original protocols, and expansion beyond a research tool to wide deployment. Contents The pioneers: Claude Shannon, Vannevar Bush, J. C. R. Licklider, Paul Baran, Donald Davies, Charles M. Herzfeld, Bob Taylor, Larry Roberts, Leonard Kleinrock, Bob Kahn, Douglas Engelbart, Elizabeth Feinler, Louis Pouzin, John Klensin, Vint Cerf, Yogen Dalal, Peter Kirstein, Steve Crocker, Jon Postel The pioneers Claude Shannon Claude Shannon (1916–2001) called the "father of modern information theory", published "A Mathematical Theory of Communication" in 1948. His paper gave a formal way of studying communication channels. It established fundamental limits on the efficiency of communication over noisy channels, and presented the challenge of finding families of codes to achieve capacity.[1] Vannevar Bush Vannevar Bush (1890–1974) helped to establish a partnership between U.S. military, university research, and independent think tanks. He was appointed Chairman of the National Defense Research Committee in 1940 by President Franklin D. Roosevelt, appointed Director of the Office of Scientific Research and Development in 1941, and from 1946 to 1947, he served as chairman of the Joint Research and Development Board. Out of this would come DARPA, which in turn would lead to the ARPANET Project.[2] His July 1945 Atlantic Monthly article "As We May Think" proposed Memex, a theoretical proto-hypertext computer system in which an individual compresses and stores all of their books, records, and communications, which is then mechanized so that it may be consulted with exceeding speed and flexibility.[3]