Extracting Visual Encodings from Map Chart Images with Color-Encoded Scalar Values

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications

Planar Maps: an Interaction Paradigm for Graphic Design

CHI'89 PROCEEDINGS, MAY 1989. PLANAR MAPS: AN INTERACTION PARADIGM FOR GRAPHIC DESIGN. Patrick Baudelaire, Michel Gangnet. Digital Equipment Corporation, Paris Research Laboratory, 85, Avenue Victor Hugo, 92563 Rueil-Malmaison, France. "In a world of changing taste one thing remains as a foundation for decorative design -- the geometry of space division." Talbot F. Hamlin (1932)
ABSTRACT: Compared to traditional media, computer illustration software offers superior editing power at the cost of reduced freedom in the picture construction process. To reduce this discrepancy, we propose an extension to the classical paradigm of 2D layered drawing, the map paradigm, that is conducive to a more natural drawing technique. We present the key concepts on which the new paradigm is based: a) graphical objects, called planar maps, that describe shapes with multiple colors and contours; b) a drawing technique, called map sketching, that allows the iterative construction of arbitrarily complex objects. We also discuss user interface design is-
Figure 1: Four lines or a rectangular area? Unfortunately, with typical drawing software this dual interpretation is not possible. The picture contains no manipulable objects other than the four original lines. It is impossible for the software to color the rectangle since no such rectangle exists. This impossibility is even more striking when the four lines are abutting as in Fig. 2. We feel that such a restriction, counter to the traditional practice of the designer, is a hindrance to productivity and creativity. In this paper we propose a new drawing paradigm that will permit a dual interpretation of Fig.

Texture / Image-Based Rendering Texture Maps

Texture / Image-Based Rendering. CS348B Lecture 12, Pat Hanrahan, Spring 2005.
Texture maps: Surface color and transparency; Environment and irradiance maps; Reflectance maps; Shadow maps; Displacement and bump maps; Level of detail hierarchy.
Texture Maps: How is texture mapped to the surface? Dimensionality: 1D, 2D, 3D; Texture coordinates (s,t); Surface parameters (u,v); Direction vectors: reflection R, normal N, halfway H; Projection: cylinder; Developable surface: polyhedral net; Reparameterize a surface: old-fashion model decal. What does texture control? Surface color and opacity; Illumination functions: environment maps, shadow maps; Reflection functions: reflectance maps; Geometry: bump and displacement maps.
Classic History: Catmull/Williams 1974 - basic idea; Blinn and Newell 1976 - basic idea, reflection maps; Blinn 1978 - bump mapping; Williams 1978, Reeves et al. 1987 - shadow maps; Smith 1980, Heckbert 1983 - texture mapped polygons; Williams 1983 - mipmaps; Miller and Hoffman 1984 - illumination and reflectance; Perlin 1985, Peachey 1985 - solid textures; Greene 1986 - environment maps/world projections; Akeley 1993 - Reality Engine; Light Field; BTF.
Texture Mapping: 3D Mesh + 2D Texture = 2D Image.
Surface Color and Transparency: Tom Porter’s Bowling Pin. Source: RenderMan Companion, Pls. 12 & 13.
Reflection Maps: Blinn and Newell, 1976.
Gazing Ball: Miller and Hoffman, 1984. Photograph of mirror ball; Maps all directions to a circle; Resolution function of orientation; Reflection indexed by normal.
Environment Maps: Interface, Chou and Williams (ca.

Digital Mapping & Spatial Analysis

Digital Mapping & Spatial Analysis. Graduate Community of Learning, April 17, 2018. Zach Silvia, Rachel Starry, Andrew Tharler. Workshop Agenda: 1. Visualizing Spatial Data (Andrew) 2. Storytelling with Maps (Rachel) 3. Archaeological Application of GIS (Zach). CARTO ● Map, Interact, Analyze ● Example 1: Bryn Mawr dining options ● Example 2: Carpenter Carrel Project ● Example 3: Terracotta Altars from Morgantina. Leaflet: A JavaScript Library http://leafletjs.com Storytelling with maps #1: OdysseyJS (CartoDB): Platform; Germany’s way through the World Cup 2014; Tutorial. Storytelling with maps #2: Story Maps (ArcGIS): Platform; Indiana Limestone (example 1); Ancient Wonders (example 2). Mapping Spatial Data with ArcGIS - Mapping in GIS Basics - Archaeological Applications - Topographic Applications. Mapping Spatial Data with ArcGIS: What is GIS - Geographic Information System? A geographic information system (GIS) is a framework for gathering, managing, and analyzing data. Rooted in the science of geography, GIS integrates many types of data. It analyzes spatial location and organizes layers of information into visualizations using maps and 3D scenes. With this unique capability, GIS reveals deeper insights into spatial data, such as patterns, relationships, and situations - helping users make smarter decisions. - ESRI GIS dictionary. - ArcGIS by ESRI - industry standard, expensive, intuitive functionality, PC - Q-GIS - open source, industry standard, less than intuitive, Mac and PC - GRASS - developed by the US military, open source - AutoDESK - counterpart to AutoCAD for topography. Types of Spatial Data in ArcGIS: Basics. Every feature on the planet has its own unique latitude and longitude coordinates: Houses, trees, streets, archaeological finds, you! How do we collect this information? - Remote Sensing: Aerial photography, satellite imaging, LIDAR - On-site Observation: total station data, ground penetrating radar, GPS. Types of Spatial Data in ArcGIS: Basics. Raster vs.

Geotime As an Adjunct Analysis Tool for Social Media Threat Analysis and Investigations for the Boston Police Department Offeror: Uncharted Software Inc

GeoTime as an Adjunct Analysis Tool for Social Media Threat Analysis and Investigations for the Boston Police Department Offeror: Uncharted Software Inc. 2 Berkeley St, Suite 600 Toronto ON M5A 4J5 Canada Business Type: Canadian Small Business Jurisdiction: Federally incorporated in Canada Date of Incorporation: October 8, 2001 Federal Tax Identification Number: 98-0691013 ATTN: Jenny Prosser, Contract Manager, [email protected] Subject: Acquiring Technology and Services of Social Media Threats for the Boston Police Department Uncharted Software Inc. (formerly Oculus Info Inc.) respectfully submits the following response to the Technology and Services of Social Media Threats RFP. Uncharted accepts all conditions and requirements contained in the RFP. Uncharted designs, develops and deploys innovative visual analytics systems and products for analysis and decision-making in complex information environments. Please direct any questions about this response to our point of contact for this response, Adeel Khamisa at 416-203-3003 x250 or [email protected]. Sincerely, Adeel Khamisa Law Enforcement Industry Manager, GeoTime® Uncharted Software Inc. [email protected] 416-203-3003 x250 416-708-6677 Company Proprietary Notice: This proposal includes data that shall not be disclosed outside the Government and shall not be duplicated, used, or disclosed – in whole or in part – for any purpose other than to evaluate this proposal. If, however, a contract is awarded to this offeror as a result of – or in connection with – the submission of this data, the Government shall have the right to duplicate, use, or disclose the data to the extent provided in the resulting contract. GeoTime as an Adjunct Analysis Tool for Social Media Threat Analysis and Investigations 1.

Chartmaking in England and Its Context, 1500–1660

58 • Chartmaking in England and Its Context, 1500–1660. Sarah Tyacke.
Introduction: The introduction of chartmaking was part of the professionalization of English navigation in this period, but the making of charts did not emerge inevitably. Mariners distrusted them, and their reluctance to use charts at all, of any sort, continued until at least the 1580s. Before the 1530s, chartmaking in any sense does not seem to have been practiced by the English, or indeed the Scots, Irish, or Welsh.1 At that time, however, coastal views and plans in connection with the defense of the country began to be made and, at the same time, measured land surveys were introduced into England by the Italians and others.2 This lack of domestic production does not mean that charts and other navigational aids were unknown, but that they
was necessary to challenge the Dutch carrying trade. In this transitional period, charts were an additional tool for the navigator, who continued to use his own experience, written notes, rutters, and human pilots when he could acquire them, sometimes by force. Where the navigators could not obtain up-to-date or even basic chart information from foreign sources, they had to make charts themselves. Consequently, by the 1590s, a number of shipmasters and other practitioners had begun to make and sell hand-drawn charts in London. In this chapter the focus is on charts as artifacts and not on navigational methods and instruments.4 We are
I acknowledge the assistance of Catherine Delano-Smith, Francis Herbert, Tony Campbell, Andrew Cook, and Peter Barber, who have kindly commented on the text and provided references and corrections.

Arcgis® + Geotime®: GIS Technology to Support the Analysis Of

ArcGIS® + GeoTime®: GIS technology to support the analysis of telephone traffic data. Giorgio Forti, Miriam Marta, Fabrizio Pauri.
Historical mobile phone traffic billboards analysis is becoming increasingly important in investigative activities of public security organizations around the world, and leading technology companies have been trying to respond to the strong demand for the most suitable tools for supporting such activities. Originally developed as a project funded in the United
Figure 2: Sample data representation of two cellphone users in GeoTime. Other predefined analysis features are already available (automatic cluster search, who attends sites of investigation interest, mobility compatibility with participation in events, etc.), allowing considerable
In many application areas, this is not enough: Geospatial and temporal correlations between the data should be studied, so that, on the basis of this insight, the available data - more and more numerous - can be translated into knowledge and therefore in appropriate decisions. In the area of security, to name an example, all this results in the predictive analysis of the spatial-temporal occurrence of crimes. Lastly, a technologically advanced GIS platform must ensure data sharing and enable the world of mobile devices (system of engagement). There are several ways to share data / information, all supported by ArcGIS, such as: sharing within a single organization, according to the profiles assigned (identity); the sharing of multiple organizations that may / should share confidential data (a very common situation in both Public Security and Emergency Management); public communication, open to all (for example, to report investigative success, or to communicate to citizens unsafe areas for the frequency of criminal offenses).

Techniques for Spatial Analysis and Visualization of Benthic Mapping Data: Final Report

Techniques for spatial analysis and visualization of benthic mapping data: final report. Item Type: monograph. Authors: Andrews, Brian. Publisher: NOAA/National Ocean Service/Coastal Services Center. Download date: 29/09/2021 07:34:54. Link to Item: http://hdl.handle.net/1834/20024
TECHNIQUES FOR SPATIAL ANALYSIS AND VISUALIZATION OF BENTHIC MAPPING DATA, FINAL REPORT, April 2003. SAIC Report No. 623. Prepared for: NOAA Coastal Services Center, 2234 South Hobson Avenue, Charleston SC 29405-2413. Prepared by: Brian Andrews, Science Applications International Corporation, 221 Third Street, Newport, RI 02840.
TABLE OF CONTENTS
1.0 INTRODUCTION
1.1 Benthic Mapping Applications
1.2 Remote Sensing Platforms for Benthic Habitat Mapping
2.0 SPATIAL DATA MODELS AND GIS CONCEPTS
2.1 Vector Data Model
2.2 Raster Data Model
3.0 CONSIDERATIONS FOR EFFECTIVE BENTHIC HABITAT ANALYSIS AND VISUALIZATION
3.1 Spatial Scale
3.2 Habitat Scale

3B – a Guide to Pictograms

3b – A Guide to Pictograms A pictogram involves the use of a symbol in place of a word or statistic. Why would we use a pictogram? Pictograms can be very useful when trying to interpret data. The use of pictures allows the reader to easily see the frequency of a geographical phenomenon without having to always read labels and annotations. They are best used when the aesthetic qualities of the data presentation are more important than the ability to read the data accurately. Pictogram bar charts A normal bar chart can be made using a set of pictures to make up the required bar height. These pictures should be related to the data in question and in some cases it may not be necessary to provide a key or explanation as the pictures themselves will demonstrate the nature of the data inherently. A key may be needed if large numbers are being displayed – this may also mean that ‘half’ sized symbols may need to be used too. This project was funded by the Nuffield Foundation, but the views expressed are those of the authors and not necessarily those of the Foundation. Proportional shapes and symbols Scaling the size of the picture to represent the amount or frequency of something within a data set can be an effective way of visually representing data. The symbol should be representative of the data in question, or if the data does not lend itself to a particular symbol, a simple shape like a circle or square can be equally effective. Proportional symbols can work well with GIS, where the symbols can be placed on different sites on the map to show a geospatial connection to the data.
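The proportional-symbol idea in the excerpt above can be illustrated with a small Python sketch. It assumes, which the guide itself does not state, that circles are scaled so that their area (rather than their radius) is proportional to the data value; the function name `symbol_radius` and the `max_radius` parameter are hypothetical and chosen only for this illustration.

```python
import math


def symbol_radius(value, max_value, max_radius=20.0):
    """Return a circle radius whose AREA is proportional to `value`.

    Assumption for this sketch: the largest value in the data set is
    drawn with radius `max_radius`, and all other circles scale by area.
    """
    if max_value <= 0:
        raise ValueError("max_value must be positive")
    if value < 0:
        raise ValueError("value must be non-negative")
    # Area grows with the square of the radius, so take the square root.
    return max_radius * math.sqrt(value / max_value)


# A site with a quarter of the largest value gets half the radius.
print(symbol_radius(25, 100))   # 10.0
print(symbol_radius(100, 100))  # 20.0
```

Scaling by area is one possible design choice; scaling the radius directly would make a value four times larger look sixteen times larger in area.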

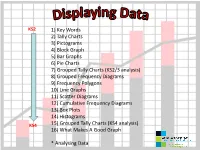

1) Key Words 2) Tally Charts 3) Pictograms 4) Block Graph 5) Bar

KS2 1) Key Words 2) Tally Charts 3) Pictograms 4) Block Graph 5) Bar Graphs 6) Pie Charts 7) Grouped Tally Charts (KS2/3 analysis) 8) Grouped Frequency Diagrams 9) Frequency Polygons 10) Line Graphs 11) Scatter Diagrams 12) Cumulative Frequency Diagrams 13) Box Plots 14) Histograms KS4 15) Grouped Tally Charts (KS4 analysis) 16) What Makes A Good Graph * Analysing Data

Key words: Axes, Linear, Continuous, Median, Correlation, Origin, Plot, Data, Discrete, Scale, Frequency, x-axis, Grouped, y-axis, Interquartile, Title, Labels, Tally

Types of data: Discrete data can only take specific values, e.g. siblings, key stage 3 levels, numbers of objects. Continuous data can take any value, e.g. height, weight, age, time, etc.

Tally Chart: A tally chart is used to organise data from a list into a table. The data shows the number of children in each of 30 families: 2, 1, 5, 0, 2, 1, 3, 0, 2, 3, 2, 4, 3, 1, 2, 3, 2, 1, 4, 0, 1, 3, 1, 2, 2, 6, 3, 2, 2, 3

| Number of children in a family | Tally | Frequency |
|---|---|---|
| 0 | | |
| 1 | | |
| 2 | | |
| 3 | | |
| 4 or more | | |

Year 3/4/5/6: represent data using lists, tally charts, tables and diagrams

Tally Chart: This data can now be represented in a Pictogram or a Bar Graph. The data shows the number of children in each family. 30 families were studied. Add up the tally. Check the total is 30. IIII = 5

| Number of children in a family | Tally | Frequency |
|---|---|---|
| 0 | III | 3 |
| 1 | IIII I | 6 |
| 2 | IIII IIII | 10 |
| 3 | IIII II | 7 |
| 4 or more | IIII | 4 |
| Total | | 30 |

Pictogram: This data could be represented by a Pictogram: Number of Tally Frequency
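As a rough illustration of the tally chart in the excerpt above, the following Python sketch counts the 30 family sizes and groups everything from 4 upward into a single "4 or more" row. The data values are copied from the excerpt; the function name `tally` and its `cap` parameter are hypothetical names introduced only for this sketch.

```python
from collections import Counter

# Number of children in each of the 30 families, copied from the excerpt.
children = [2, 1, 5, 0, 2, 1, 3, 0, 2, 3, 2, 4, 3, 1, 2,
            3, 2, 1, 4, 0, 1, 3, 1, 2, 2, 6, 3, 2, 2, 3]


def tally(values, cap=4):
    """Count each value, folding every value >= cap into one 'cap or more' row."""
    counts = Counter(min(v, cap) for v in values)
    # Return rows in order 0..cap, including rows with a zero count.
    return {k: counts.get(k, 0) for k in range(cap + 1)}


frequencies = tally(children)
for value, freq in frequencies.items():
    label = f"{value} or more" if value == 4 else str(value)
    print(f"{label:>9}: {freq}")
print(f"    Total: {sum(frequencies.values())}")
```

The final line mirrors the worksheet's "Check the total is 30" step: the frequencies should add up to the number of families surveyed.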

2021 Garmin & Navionics Cartography Catalog

2021 CARTOGRAPHY CATALOG
CONTENTS
BlueChart® Coastal Charts
LakeVü Inland Maps
Canada LakeVü G3
ActiveCaptain® App
New Chart Guarantee
How to Read Your Product ID Code
Inland Maps
Coastal Charts
United States
Canada
Caribbean
South America
Europe
Africa
Asia
Australia/New Zealand
Pacific Islands

What Is Geovisualization? to the Growing Field of Geovisualization

What is Geovisualization? by Joni Storie
From a cartography perspective, geovisualization represents a change in how knowledge is formed and represented. Traditional cartography is usually seen as a visualization (a.k.a. map) that is presented after the conclusion is reached to emphasize or complement the research conclusions. Geovisualization changes this format by incorporating spatial data into the analysis (O’Sullivan and Unwin, 2010). Spatial data, statistics and analysis are used to answer questions which contribute to the conclusion that is reached within the research.
dynamic presentation options to communicate knowledge. For example, atlases require extra planning compared to individual maps, structurally they could include hundreds of maps, and all the maps relate to each other. Dr. Danny Blair and Dr. Ian Mauro, in the Department of Geography, provide an excellent example of this integration with the Prairie Climate Atlas (http://www.climateatlas.ca/). The combination of maps with multimedia provides for better understanding as well as enriched and informative experiences of
This issue of GeoMatters is devoted to the growing field of geovisualization. Brian McGregor uses geovisualization to produce animated maps showing settlement patterns of Hutterite colonies. Dr. Marc Vachon’s students use it to produce videos about urban visualization (City Hall and Assiniboine Park), while Dr. Chris Storie shows geovisualization for retail mapping in Winnipeg. Also in this issue Honours students describe their thesis projects for the upcoming colloquium next March, Adrienne Ducharme tells us about her graduate research at ELA, we have a report about Cultivate UWinnipeg and our alumni profile features Michelle Méthot (Smith). Please feel free to pass this newsletter to anyone with an interest in geography. Individuals can also see GeoMatters at the Geography website, or keep up with us on Facebook (Department of Geog-

1. What Is a Pictogram? 2

Canonbury Home Learning, Year 2/3 Maths, Steppingstone activity, Lesson 5 – 26.06.2020. LO: To interpret and construct simple pictograms. Success Criteria: 1. Read the information about pictograms 2. Use the information in the Total column to complete the pictogram 3. Find some containers around your house and experiment with their capacity – talk through the questions with someone you live with.
1. What is a pictogram? A pictogram is a chart or graph which uses pictures to represent data. They are set out the same way as a bar chart but use pictures instead of bars. Each picture could represent one item or more than one. Today we will be drawing pictograms where each picture represents one item.
2. Complete the pictogram: Use the tally chart to help you complete the pictogram.
Canonbury Home Learning, Year 2/3 Maths, Lesson 5 – 26.06.2020. LO: To interpret and construct simple pictograms. Task: You are going to be drawing pictograms 1-1. Success Criteria: 1. Read the information about pictograms 2. Task 1: Use the information in the table to complete the pictogram 3. Task 2: Use the information in the tally chart and pictogram to complete the missing sections of each.