TECHNOLOGY for AUTONOMOUS SPACE SYSTEMS Ashley W

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Magcon White Paper V3

Magnetospheric Constellation Tracing the flow of mass and energy from the solar wind through the magnetosphere Larry Kepko and Guan Le NASA Goddard Space Flight Center 1. Executive Summary The Magnetospheric Constellation (MagCon) mission is designed to understand the transport of mass and energy across the boundaries of and within Earth’s magnetosphere using a constellation of up to 36 small satellites. Energy is input into the geospace system at the dayside and flank magnetopause, yet we still do not understand the azimuthal extent of dayside reconnection sites, nor do we have a quantifiable understanding of how much energy enters the magnetosphere during different solar wind conditions. On the nightside, impulsive flows at various spatial and temporal scales occur frequently during storms and substorms and couple to the ionosphere through still unresolved physical mechanisms. A distributed array of small satellites is the required tool for unraveling the physics of magnetospheric mass and energy transport while providing definitive determinations of how major solar events lead to specific types of space weather. MagCon will map the global circulation of magnetic fields and plasma flows within a domain extending from just above the Earth’s surface to ~22 Earth radii (RE) radius, at all local times, on spatial scales from 1-5 RE and minimum time scales of 3-10 seconds. It will reveal simultaneously for the first time both the global spatial structures and temporal evolution of the magnetotail, the dayside and flank magnetopause, and the nightside transition region, leading to the physical understanding of system dynamics and energy transport across all scales. -

Mission to Jupiter

Mission to Jupiter: A History of the Galileo Project, Michael Meltzer. This book attempts to convey the creativity, leadership, and vision that were necessary for the mission’s success. It is a book about dedicated people and their scientific and engineering achievements. The Galileo mission faced many significant problems. Some of the most brilliant accomplishments and “work-arounds” of the Galileo staff occurred precisely when these challenges arose. Throughout the mission, engineers and scientists found ways to keep the spacecraft operational from a distance of nearly half a billion miles, enabling one of the most impressive voyages of scientific discovery. Michael Meltzer is an environmental scientist who has been writing about science and technology for nearly 30 years. His books and articles have investigated topics that include designing solar houses, preventing pollution in electroplating shops, catching salmon with sonar and radar, and developing a sensor for examining Space Shuttle engines. The Galileo mission to Jupiter explored an exciting new frontier, had a major impact on planetary science, and provided invaluable lessons for the design of spacecraft. This mission amassed so many scientific firsts and key discoveries that it can truly be called one of the most impressive feats of exploration of the 20th century. In the words of John Casani, the original project manager of the mission, “Galileo was a way of demonstrating ... just what U.S. technology was capable of doing.” An engineer on the Galileo team expressed more personal sentiments when she said, “I had never been a part of something with such great scope ... To know that the whole world was watching and hoping with us that this would work. We were doing something for all mankind.” When Galileo lifted off from Kennedy Space Center on 18 October 1989, it began an interplanetary voyage that took it to Venus, to

James A. Slavin

James A. Slavin Professor of Space Physics Department of Climate and Space Science & Engineering University of Michigan, College of Engineering Climate & Space Research Building Ann Arbor, MI, 48109 Phone: 240-476-8009 [email protected] EDUCATION: 1982 - Ph.D., Space Physics, University of California at Los Angeles Dissertation: Bow Shock Studies at Mercury, Venus, Earth and Mars with Applications to the Solar – Planetary Interaction Problem; Advisor: Prof. Robert E. Holzer 1978 - M.S., Geophysics and Space Physics, University of California at Los Angeles 1976 - B.S., Physics, Case Western Reserve University APPOINTMENTS: 2011 - 2018 Chair, Department of Climate and Space Sciences & Engineering, University of Michigan 2005 - 2011 Director, Heliophysics Science Division 1990 - 2004 Head, Electrodynamics Branch 1987 - 1989 Staff Scientist, NASA/GSFC Laboratory for Extraterrestrial Physics 1986 - 1987 Discipline Scientist for Magnetospheric Physics, Space Physics Division, NASA Headquarters 1983 - 1986 Research Scientist, Astrophysics and Space Physics Section, Caltech/Jet Propulsion Laboratory HONORS: 2018 - Heliophysics Summer School Faculty, UCAR High Altitude Observatory 2017 - NASA Group Achievement Award, MESSENGER Project Team 2017 - Asia Oceania Geosciences Society 14th Annual Meeting Distinguished Lecturer in Planetary Sciences 2016 - NASA Group Achievement Award, MMS Instrument Suite 2012 - International Academy of Astronautics Laurels for Team Achievement for MESSENGER 2012 - Fellow, American Geophysical Union 2009 - NASA Group

Refactoring the Curiosity Rover's Sample Handling Architecture on Mars

Refactoring the Curiosity Rover's Sample Handling Architecture on Mars Vandi Verma Stephen Kuhn (abstracting the shared development effort of many) The research was carried out at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration. Copyright 2018 California Institute of Technology. U.S. Government sponsorship acknowledged. Introduction • The Mars Science Laboratory (“MSL”) hardware was architected to enable a fixed progression of activities that essentially enforced a campaign structure. In this structure, sample processing and analysis conflicted with any other usage of the robotic arm. • Unable to stow the arm and drive away • Unable to deploy arm instruments for in-situ engineering or science • Unable to deploy arm instruments or mechanisms on new targets • Sampling engineers developed and evolved a refactoring of this architecture in order to have it both ways: turn the hardware into a series of caches and catchments that could service all arm orientations, and enable execution of other arm activities with one “active” sample preserved such that additional portions could be delivered. Page 2 11/18/20 The Foundational Risk The Original Approach Underpinnings of the original approach • Fundamentally, human rover planners simply could not keep track of sample in CHIMRA as it sluiced, stick-slipped, fell over partitions, pooled in catchments, etc. Neither could we update the Flight Software to model this directly. • But, with some simplifying abstraction of sample state and preparation of it into confined regimes of orientation, ground tools could effectively track sample. Rover planners’ use of Software Simulator was extended to enforce a chain of custody in commanded orientation, creating a faulted breakpoint upon violation that could be used to fix it.

Guide to the Robert W. Jackson Collection, 1964-1999 PP03.02

Guide to the Robert W. Jackson Collection, 1964-1999 PP03.02 NASA Ames History Office NASA Ames Research Center Contact Information: NASA Ames Research Center NASA Ames History Office Mail-Stop 207-1 Moffett Field, CA 94035-1000 Phone: (650) 604-1032 Email: [email protected] URL: http://history.arc.nasa.gov/ Collection processed by: Leilani Marshall, March 2004 Table of Contents: Descriptive Summary; Administrative Information; Biographical Note; Scope and Content; Series Description; Indexing Terms; Container List. Descriptive Summary Title: Robert W. Jackson Collection, 1964-1999 Collection Number: PP03.02 Creator: Robert W. Jackson Dates: Inclusive: 1964-1999 Bulk: 1967-1988 Extent: Volume: 1.67 linear feet Repository: NASA Ames History

Introduction to Astronomy from Darkness to Blazing Glory

Introduction to Astronomy From Darkness to Blazing Glory Published by JAS Educational Publications Copyright Pending 2010 JAS Educational Publications All rights reserved. Including the right of reproduction in whole or in part in any form. Second Edition Author: Jeffrey Wright Scott Photographs and Diagrams: Credit NASA, Jet Propulsion Laboratory, USGS, NOAA, Ames Research Center JAS Educational Publications 2601 Oakdale Road, H2 P.O. Box 197 Modesto California 95355 1-888-586-6252 Website: http://.Introastro.com Printing by Minuteman Press, Berkley, California ISBN 978-0-9827200-0-4 Introduction to Astronomy From Darkness to Blazing Glory The moon Titan is in the forefront with the moon Tethys behind it. These are two of many of Saturn’s moons Credit: Cassini Imaging Team, ISS, JPL, ESA, NASA Introduction to Astronomy Contents in Brief Chapter 1: Astronomy Basics: Pages 1 - 6 Workbook Pages 1 - 2 Chapter 2: Time: Pages 7 - 10 Workbook Pages 3 - 4 Chapter 3: Solar System Overview: Pages 11 - 14 Workbook Pages 5 - 8 Chapter 4: Our Sun: Pages 15 - 20 Workbook Pages 9 - 16 Chapter 5: The Terrestrial Planets: Pages 21 - 39 Workbook Pages 17 - 36 Mercury: Pages 22 - 23 Venus: Pages 24 - 25 Earth: Pages 25 - 34 Mars: Pages 34 - 39 Chapter 6: Outer, Dwarf and Exoplanets: Pages 41 - 54 Workbook Pages 37 - 48 Jupiter: Pages 41 - 42 Saturn: Pages 42 - 44 Uranus: Pages 44 - 45 Neptune: Pages 45 - 46 Dwarf Planets, Plutoids and Exoplanets: Pages 47 - 54 Chapter 7: The Moons: Pages 55 - 66 Workbook Pages 49 - 56 Chapter 8: Rocks and Ice:

Autonomous Vehicles in Support of Naval Operations Committee on Autonomous Vehicles in Support of Naval Operations, National Research Council

Autonomous Vehicles in Support of Naval Operations Committee on Autonomous Vehicles in Support of Naval Operations, National Research Council ISBN: 0-309-55115-3, 256 pages, 6 x 9, (2005) This free PDF was downloaded from: http://www.nap.edu/catalog/11379.html Visit the National Academies Press online, the authoritative source for all books from the National Academy of Sciences, the National Academy of Engineering, the Institute of Medicine, and the National Research Council: • Download hundreds of free books in PDF • Read thousands of books online, free • Sign up to be notified when new books are published • Purchase printed books • Purchase PDFs • Explore with our innovative research tools Thank you for downloading this free PDF. If you have comments, questions or just want more information about the books published by the National Academies Press, you may contact our customer service department toll-free at 888-624-8373, visit us online, or send an email to [email protected]. This free book plus thousands more books are available at http://www.nap.edu. Copyright © National Academy of Sciences. Permission is granted for this material to be shared for noncommercial, educational purposes, provided that this notice appears on the reproduced materials, the Web address of the online, full authoritative version is retained, and copies are not altered. To disseminate otherwise or to republish requires written permission from the National Academies Press. Autonomous Vehicles in Support of Naval Operations http://www.nap.edu/catalog/11379.html AUTONOMOUS VEHICLES IN SUPPORT OF NAVAL OPERATIONS Committee on Autonomous Vehicles in Support of Naval Operations Naval Studies Board Division on Engineering and Physical Sciences THE NATIONAL ACADEMIES PRESS Washington, D.C. -

AI, Robots, and Swarms: Issues, Questions, and Recommended Studies

AI, Robots, and Swarms Issues, Questions, and Recommended Studies Andrew Ilachinski January 2017 Approved for Public Release; Distribution Unlimited. This document contains the best opinion of CNA at the time of issue. It does not necessarily represent the opinion of the sponsor. Distribution Approved for Public Release; Distribution Unlimited. Specific authority: N00014-11-D-0323. Copies of this document can be obtained through the Defense Technical Information Center at www.dtic.mil or contact CNA Document Control and Distribution Section at 703-824-2123. Photography Credits: http://www.darpa.mil/DDM_Gallery/Small_Gremlins_Web.jpg; http://4810-presscdn-0-38.pagely.netdna-cdn.com/wp-content/uploads/2015/01/ Robotics.jpg; http://i.kinja-img.com/gawker-edia/image/upload/18kxb5jw3e01ujpg.jpg Approved by: January 2017 Dr. David A. Broyles Special Activities and Innovation Operations Evaluation Group Copyright © 2017 CNA Abstract The military is on the cusp of a major technological revolution, in which warfare is conducted by unmanned and increasingly autonomous weapon systems. However, unlike the last “sea change,” during the Cold War, when advanced technologies were developed primarily by the Department of Defense (DoD), the key technology enablers today are being developed mostly in the commercial world. This study looks at the state-of-the-art of AI, machine-learning, and robot technologies, and their potential future military implications for autonomous (and semi-autonomous) weapon systems. While no one can predict how AI will evolve or predict its impact on the development of military autonomous systems, it is possible to anticipate many of the conceptual, technical, and operational challenges that DoD will face as it increasingly turns to AI-based technologies. -

Venus Aerobot Multisonde Mission

AIAA Balloon Technology Conference 1999 Venus Aerobot Multisonde Mission By: James A. Cutts, Viktor Kerzhanovich, J. (Bob) Balaram, Bruce Campbell, Robert Gershman, Ronald Greeley, Jeffery L. Hall, Jonathan Cameron, Kenneth Klaasen and David M. Hansen ABSTRACT Robotic exploration of Venus presents many challenges because of the thick atmosphere and the high surface temperatures. The Venus Aerobot Multisonde mission concept addresses these challenges by using a robotic balloon or aerobot to deploy a number of short lifetime probes or sondes to acquire images of the surface. A Venus aerobot is not only a good platform for precision deployment of sondes but is very effective at recovering high rate data. This paper describes the Venus Aerobot Multisonde concept and discusses a proposal to NASA's Discovery program using the concept for a Venus Exploration of Volcanoes and Atmosphere (VEVA). The status of the balloon deployment and inflation, balloon envelope, ... requires an orbital relay system that significantly increases the overall mission cost. The Venus Aerobot Multisonde (VAMuS) Mission concept (Fig. 1(b)) provides many of the scientific capabilities of the VGA, with existing technology and without requiring an orbital relay. It uses autonomous floating stations (aerobots) to deploy multiple dropsondes capable of operating for less than an hour in the hot lower atmosphere of Venus. The dropsondes, hereafter described simply as sondes, acquire high resolution observations of the Venus surface including imaging from a sufficiently close range that atmospheric obscuration is not a major concern and communicate these data to the floating stations from where they are relayed to Earth.

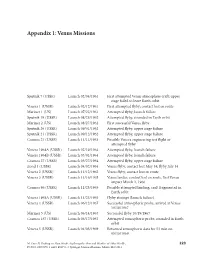

Appendix 1: Venus Missions

Appendix 1: Venus Missions

| Mission | Launch | Outcome |
| --- | --- | --- |
| Sputnik 7 (USSR) | 02/04/1961 | First attempted Venus atmosphere craft; upper stage failed to leave Earth orbit |
| Venera 1 (USSR) | 02/12/1961 | First attempted flyby; contact lost en route |
| Mariner 1 (US) | 07/22/1962 | Attempted flyby; launch failure |
| Sputnik 19 (USSR) | 08/25/1962 | Attempted flyby, stranded in Earth orbit |
| Mariner 2 (US) | 08/27/1962 | First successful Venus flyby |
| Sputnik 20 (USSR) | 09/01/1962 | Attempted flyby, upper stage failure |
| Sputnik 21 (USSR) | 09/12/1962 | Attempted flyby, upper stage failure |
| Cosmos 21 (USSR) | 11/11/1963 | Possible Venera engineering test flight or attempted flyby |
| Venera 1964A (USSR) | 02/19/1964 | Attempted flyby, launch failure |
| Venera 1964B (USSR) | 03/01/1964 | Attempted flyby, launch failure |
| Cosmos 27 (USSR) | 03/27/1964 | Attempted flyby, upper stage failure |
| Zond 1 (USSR) | 04/02/1964 | Venus flyby, contact lost May 14; flyby July 14 |
| Venera 2 (USSR) | 11/12/1965 | Venus flyby, contact lost en route |
| Venera 3 (USSR) | 11/16/1965 | Venus lander, contact lost en route, first Venus impact March 1, 1966 |
| Cosmos 96 (USSR) | 11/23/1965 | Possible attempted landing, craft fragmented in Earth orbit |
| Venera 1965A (USSR) | 11/23/1965 | Flyby attempt (launch failure) |
| Venera 4 (USSR) | 06/12/1967 | Successful atmospheric probe, arrived at Venus 10/18/1967 |
| Mariner 5 (US) | 06/14/1967 | Successful flyby 10/19/1967 |
| Cosmos 167 (USSR) | 06/17/1967 | Attempted atmospheric probe, stranded in Earth orbit |
| Venera 5 (USSR) | 01/05/1969 | Returned atmospheric data for 53 min on 05/16/1969 |

(PLEXIL) for Executable Plans and Command Sequences”, International Symposium on Artificial Intelligence, Robotics and Automation in Space (Isairas), 2005

V. Verma, T. Estlin, A. Jónsson, C. Pasareanu, R. Simmons, K. Tso, “Plan Execution Interchange Language (PLEXIL) for Executable Plans and Command Sequences”, International Symposium on Artificial Intelligence, Robotics and Automation in Space (iSAIRAS), 2005 PLAN EXECUTION INTERCHANGE LANGUAGE (PLEXIL) FOR EXECUTABLE PLANS AND COMMAND SEQUENCES Vandi Verma(1), Tara Estlin(2), Ari Jónsson(3), Corina Pasareanu(4), Reid Simmons(5), Kam Tso(6) (1)QSS Inc. at NASA Ames Research Center, MS 269-1 Moffett Field CA 94035, USA, Email:[email protected] (2)Jet Propulsion Laboratory, M/S 126-347, 4800 Oak Grove Drive, Pasadena CA 9110, USA, Email:[email protected] (3)USRA-RIACS at NASA Ames Research Center, MS 269-1 Moffett Field CA 94035, USA, Email:[email protected] (4)QSS Inc. at NASA Ames Research Center, MS 269-1 Moffett Field CA 94035, USA, Email:[email protected] (5)Robotics Institute, Carnegie Mellon University, Pittsburgh PA 15213, USA, Email:[email protected] (6)IA Tech Inc., 10501 Kinnard Avenue, Los Angeles, CA 9002, USA, Email: [email protected] ABSTRACT Space mission operations require flexible, efficient and reliable plan execution. In typical operations command sequences (which are a simple subset of general executable plans) are generated on the ground, either manually or with assistance from automated planning, ... this task since it is impossible for a small team to manually keep track of all sub-systems in real time. Complex manipulators and instruments used in human missions must also execute sequences of commands to perform complex tasks. Similarly, a rover operating on Mars must autonomously execute commands sent from Earth and keep the rover safe in abnormal situations.

Deep Space 2: the Mars Microprobe Project and Beyond

First International Conference on Mars Polar Science 3039.pdf DEEP SPACE 2: THE MARS MICROPROBE PROJECT AND BEYOND. S. E. Smrekar and S. A. Gavit, Mail Stop 183-501, Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, Pasadena CA 91109, USA ([email protected]). Mission Overview: The Mars Microprobe Project, or Deep Space 2 (DS2), is the second of the New Millennium Program planetary missions and is designed to enable future space science network missions through flight validation of new technologies. A secondary goal is the collection of meaningful science data. Two micropenetrators will be deployed to carry out surface and subsurface science. The penetrators are being carried as a piggyback payload on the Mars Polar Lander cruise ring and will be launched in January of 1999. The Microprobe has no active control, attitude determination, or propulsive systems. It is a single stage from separation until landing and will passively orient itself due to its aerodynamic design (Fig. ... System Design, Technologies, and Instruments: Telecommunications. The DS2 telecom system, which is mounted on the aftbody electronics plate, relays data back to Earth via the Mars Global Surveyor spacecraft, which passes overhead approximately once every 2 hours. The receiver and transmitter operate in the Ultra High Frequency (UHF) range and data is returned at a rate of 7 Kbits/second. Ultra-low-temperature lithium primary battery. One challenging aspect of the microprobe design is the thermal environment. The batteries are likely to stay no warmer than -78° C. A lithium-thionyl primary battery was developed to survive the extreme temperature, with a 6 to 14 V range and a 3-year shelf