Large Group Musical Interaction Using Disposable Wireless Motion Sensors, by Mark Christopher Feldmeier

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Music (And More) 2019 Quarter 3 Report

The Music (and More) 2019 Quarter 3 Report Report covers the time period of July 1st to Kieran Robbins & Chief - "Sway" [glam rock] September 30th, 2019. I inadvertently missed Troy a few before that time period, which were brought to my attention by fans, bands & Moriah Formica - "I Don't Care What You others. The missing are listed at the end, along with an Think" (single) [hard rock] Albany End Note… Nine Votes Short - "NVS: 09/03/2019" [punk rock] RECORDINGS: Albany Hard Rock / Metal / Punk Psychomanteum - "Mortal Extremis" (single track) Attica - "Resurected" (EP) [hardcore metal] Albany [thrash prog metal industrial] Albany Between Now and Forever - "Happy" (single - Silversyde - "In The Dark" [christian gospel hard rock] Mudvayne cover) [melodic metal rock] Albany Troy/Toledo OH Black Electric - "Black Electric" [heavy stoner blues rock] Scotchka - "Back on the Liquor" | "Save" (single tracks) Voorheesville [emo pop punk] Albany Blood Blood Blood - "Stranglers” (single) [darkwave Somewhere To Call Home - "Somewhere To Call Home" horror synthpunk] Troy [nu-metalcore] Albany Broken Field Runner – "Lay My Head Down" [emo pop Untaymed - "Lady" (single track) [british hard rock] punk] Albany / LA Colonie Brookline - "Live From the Bunker - Acoustic" (EP) We’re History - "We’re History" | "Pop Tarts" - [acoustic pop-punk alt rock] Greenwich "Avalanche" (singles) [punk rock pop] Saratoga Springs Candy Ambulance - "Traumantic" [alternative grunge Wet Specimens - "Haunted Flesh" (EP) [hardcore punk] rock] Saratoga Springs Albany Craig Relyea - "Between the Rain" (single track) Rock / Pop [modern post-rock] Schenectady Achille - "Superman (A Song for Mora)" (single) [alternative pop rock] Albany Dead-Lift - "Take It or Leave It" (single) [metal hard rock fusion] Schenectady Caramel Snow - "Wheels Are Meant To Roll Away" (single track) [shoegaze dreampop] Delmar Deep Slut - "u up?" (3-song) [punk slutcore rap] Albany Cassandra Kubinski - "DREAMS (feat. -

KOMUNITAS MUSIK HARDCORE STRAIGHT EDGE DI KABUPATEN BATANG Kajian Tentang Analisis Bentuk Musik Dan Aktivitasnya JURUSAN PENDIDI

KOMUNITAS MUSIK HARDCORE STRAIGHT EDGE DI KABUPATEN BATANG: Kajian Tentang Analisis Bentuk Musik dan Aktivitasnya [The Straight Edge Hardcore Music Community in Batang Regency: A Study of Its Musical Form and Activities] Undergraduate thesis presented as one of the requirements for the degree of Bachelor of Education, Music Education Study Program, by Dadang Dwi Septiyan 2501409036 DEPARTMENT OF DRAMA, DANCE, AND MUSIC EDUCATION, FACULTY OF LANGUAGES AND ARTS, UNIVERSITAS NEGERI SEMARANG 2013 SUPERVISOR APPROVAL This thesis has been approved by the supervisors for submission to the Thesis Examination Committee of the Department of Drama, Dance, and Music Education, Faculty of Languages and Arts, Universitas Negeri Semarang. Day: Monday Date: 25 February 2013 Approved by Supervisor I Supervisor II Drs. Suharto, S.Pd, M.Hum Drs. Eko Raharjo, M.Hum NIP 196510181990031002 NIP. 196510181992031001 Acknowledged by, Head of Department Joko Wiyoso, S.Kar.M.Hum. iii ENDORSEMENT PAGE The thesis entitled “KOMUNITAS MUSIK HARDCORE STRAIGHT EDGE DI KABUPATEN BATANG: Kajian Analisis Bentuk Musik dan Aktivitasnya” was defended before the Thesis Examination Committee of FBS UNNES on 4 March 2013. Thesis Examination Committee Chair Secretary Prof. Dr. Agus Nuryatin, M. Hum Dra. Siti Aesijah, M.Pd NIP... 196008031989011001 NIP...196512191991032003 Examiner I Drs. Bagus Susetyo, M.Hum NIP. 196200910199011101 Examiner II Examiner III Drs. Suharto, S.Pd, M.Hum Drs. Eko Raharjo, M.Hum NIP 196510181990031002 NIP 196510181992031001 iii iv ABSTRACT Septiyan, Dadang Dwi. 2013. Komunitas Musik Hardcore Straight Edge Di Kabupaten Batang: Kajian Tentang Analisis Bentuk Musik dan Aktivitasnya. Undergraduate thesis. Drama, Dance, and Music Education, Faculty of Languages and Arts, Universitas Negeri Semarang. Supervisor I: Drs. Suharto, S.Pd, M. Hum., Supervisor II: Drs. Eko Raharjo, M. Hum. Keywords: straight edge hardcore music, musical form, and activities The straight edge hardcore music community is a music community whose music and activities were initially viewed negatively by the public. -

Atom and His Package Possibly the Smallest Band on the List, Atom & His

Atom and His Package Possibly the smallest band on the list, Atom & his Package consists of Adam Goren (Atom) and his package (a Yamaha music sequencer), who make funny punk songs utilising many of the package's hundreds of instruments about the metric system, the lead singer of Judas Priest and what Jewish people do on Christmas. Now moved on to other projects, Atom will remain the person who told the world Anarchy Means That I Litter, The Palestinians Are Not the Same Thing as the Rebel Alliance Jackass, and If You Own The Washington Redskins, You're a ****. Ghost Mice With only two members in this folk/punk band their voices and their music can be heard along with such pride. This band is one of the greatest to come out of the scene because of their abrasive acoustic style. The band consists of a male guitarist (I don't know his name) and Hannah who plays the violin. They are successful and very well should be because it's hard to 99 when you have such little to work with. This band is off Plan It X records and they put on a fantastic show. Not only is the band one of the leaders of the new genre called folk/punk but I'm sure there are going to be very big things to come from them and it will always be from the heart. Defiance, Ohio Defiance, Ohio are perhaps one of the most compassionate and energetic leaders of the "folk/punk" movement. Their clever lyrics accompanied by fast, melodic, acoustic guitars make them very enjoyable to listen to. -

The Bands of Detroit

IT’S FREE! TAKE ONE! DETROIT PUNK ROCK SCENE REPORT It seems that the arsenal of democracy has been raided, pillaged, and ultimately, neglected. A city once teeming with nearly two million residents has seemingly emptied to 720,000 in half a century’s time (the actual number is likely around 770,000 residing citizens, including those who aren’t registered), leaving numerous plots of land vacant and unused. Unfortunately, those areas are seldom filled with proactive squatters or off-the-grid residents; most are not even occupied at all. The majority of the east and northwest sides of the city are examples of this urban blight. Detroit has lost its base of income in its taxpaying residents, simultaneously retaining an anchor of burdensome (whether it’s voluntary or not) poverty-stricken, government-dependent citizens. Just across the Detroit city borders are the gated communities of xenophobic suburban families, who turn their collective noses at all that does not beckon to their will and their wallet. Somewhere, in the narrow cracks between these two aforementioned sets of undesirables, is the single best punk rock scene you’ve heard nary a tale of, the one that everyone in the U.S. and abroad tends to overlook. Despite receiving regular touring acts (Subhumans, Terror, Common Enemy, Star Fucking Hipsters, Entombed, GBH, the Adicts, Millions of Dead Cops, Mouth Sewn Shut, DRI, DOA, etc), Detroit doesn’t seem to get any recognition for homegrown punk rock, even though we were the ones who got the ball rolling in the late 60s. Some of the city’s naysayers are little more than punk rock Glenn Becks or Charlie Sheens, while others have had genuinely bad experiences; however, if the world is willing to listen to what we as Detroiters have to say with an unbiased ear, we are willing to speak, candidly and coherently. -

Anarchism, Hardcore Music, and Counterculture

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2016 Don't Let the World Rot: Anarchism, Hardcore Music, and Counterculture Pearson Bolt University of Central Florida Part of the Critical and Cultural Studies Commons, and the Ethnomusicology Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Masters Thesis (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Bolt, Pearson, "Don't Let the World Rot: Anarchism, Hardcore Music, and Counterculture" (2016). Electronic Theses and Dissertations, 2004-2019. 5128. https://stars.library.ucf.edu/etd/5128 DON’T LET THE WORLD ROT: ANARCHISM, HARDCORE, AND COUNTERCULTURE by PEARSON L. BOLT B.A. University of Central Florida, 2013 A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts in the Department of English in the College of Arts and Humanities at the University of Central Florida Orlando, Florida Summer Term 2016 Major Professor: Anthony Grajeda © Pearson Bolt 2016 ii ABSTRACT Hardcore music is intrinsically anarchistic. The hardcore music scene represents a radical departure from contemporary society. Rejecting the materialism, militarism, and hedonism of the mainstream music scene—and, by extension, modern culture—hardcore music presents an alternative lifestyle rooted in solidarity, equality, and liberty. Indeed, the culture of the hardcore scene approaches a transitive, nomadic model of an anarchistic commune built on resistance as a way of life. -

Zombies No Presskit 2019 EN

PUNK ROCK FROM EVERYWHERE BIOGRAPHY FESTIVALS Multicultural punk rock band, formed in Venezuela in 2010 and now UNION ROCK SHOW - CARACAS, VENEZUELA based in Paris since end of 2017. A music at the borders of TU VOZ ES TU PODER - CARACAS, VENEZUELA Skatepunk, HxC and Metal, in favor of social awareness, thoughts FESTIVAL NUEVAS BANDAS - CARACAS, VENEZUELA and freedom of speech. FOD AND FRIENDS - LONDON, ENGLAND POLITE RIOT FEST - LONDON, ENGLAND We’ve shared stage with Dischord and TheZeta, from our original SAVE THE SCENE FEST - LEISNIG, GERMANY hometown, Caracas. 69 Enfermos from Colombia, DobleD from Mexico, Play Attenchon from Ecuador. Also, with Lagwagon, A Wilhelm Scream, Adolescents, Darko, Drones, Eat Dirt, White dog Suicide, SMF, F.O.D, ... We’ve performed in Venezuela, Colombia, ON THE PRESS Peru, Ecuador. United Kingdom, Germany, The Netherlands, THE PUNKMONGER Switzerland, Italy, Slovakia, Hungary, Bosnia, Croatia, Austria... There are some strong fist in the air chorus lines and a great energy throughout the album. I have no doubt The band won best album art for La única culpa que tengo, in the that they would fit easily into a punk/metal line up and Union Rock Show Awards, a prominent festival on the Venezuelan be great to see live. scene. Also, for the same festival, nominee as best band several times. The single “Hoy” (entitled Now in the English version) qualified MUSICALLY FRESH the band among 2000 other bands to participate in the Tu Voz es Tu Zombies No can’t decide whether they want to pursue Poder festival and album. old-school US west-coast Hardcore Punk, melodic and tuneful Punk or jagged contemporary European Skate Punk and this three-fold indecision is their greatest strength. -

Documenting Destruction: an Examination of the Nashville Hardcore Punk Scene

Documenting Destruction: An Examination of the Nashville Hardcore Punk Scene by Nicholas R. Cummings A thesis presented to the Honors College of Middle Tennessee State University in partial fulfillment of the requirements for graduation from the University Honors College Fall 2019 Documenting Destruction: An Examination of the Nashville Hardcore Punk Scene by Nicholas R. Cummings Approved: _______________________________ Dr. John Dougan Department of Recording Industry _______________________________ Beverly Keel Department of Recording Industry _______________________________ Dr. Philip E. Philips, Associate Dean University Honors College i Table of Contents: I. Introduction Pg. 1 II. Background Pg. 2 III. Nashville Pg. 7 IV. Venues Pg. 8 V. Labels Pg. 10 VI. Producers Pg. 12 VII. Bands Pg. 13 VIII. Conclusion Pg. 17 IX. Appendix Pg. 18 ii Abstract: While known mainly for country music, Nashville houses a thriving hardcore punk scene. This thesis serves as an exploration of both hardcore as a genre and the Nashville scene itself. It explores the venues, record labels, producers, and some of the most important bands of the Nashville scene. It is the sister work to a photo book created by the author. The photos were taken over the course of a year, from October 2018 to October 2019, utilizing both film and digital photography as well as a variety of techniques. It is meant to document the current state of Nashville’s hardcore scene, with its reader base consisting mainly of members of the scene. I. Introduction: Nashville is known around the world as a Mecca for country music, but if one ventures a few miles away from the bright lights of Broadway one will find an entirely different type of music; hidden in dive bars and dingy basements is one of the most thriving hardcore punk scenes in the country. -

Universitá Degli Studi Di Milano Facoltà Di Scienze Matematiche, Fisiche E Naturali Dipartimento Di Tecnologie Dell'informazione

UNIVERSITÀ DEGLI STUDI DI MILANO Faculty of Mathematical, Physical and Natural Sciences, Department of Information Technologies, Doctoral School in Computer Science, Disciplinary sector INF/01 Doctoral research thesis, Cycle XXIII SERENDIPITOUS MENTORSHIP IN MUSIC RECOMMENDER SYSTEMS Eugenio Tacchini Advisor: Prof. Ernesto Damiani Director of the Doctoral School: Prof. Ernesto Damiani Academic Year 2010/2011 II Acknowledgements I would like to thank all the people who helped me during my Ph.D. First of all I would like to thank Prof. Ernesto Damiani, my advisor, not only for his support and the knowledge he imparted to me but also for his capacity of understanding my needs and for having let me follow my passions; thanks also to all the other people of the SESAR Lab, in particular to Paolo Ceravolo and Gabriele Gianini. Thanks to Prof. Domenico Ferrari, who gave me the possibility to work in an inspiring context after my graduation, helping me to understand the direction I had to take. Thanks to Prof. Ken Goldberg for having hosted me in his laboratory, the Berkeley Laboratory for Automation Science and Engineering at the University of California, Berkeley, a place where I learnt a lot; thanks also to all the people of the research group and in particular to Dmitry Berenson and Timmy Siauw for the very fruitful discussions about clustering, path searching and other aspects of my work. Thanks to all the people who accepted to review my work: Prof. Richard Chbeir, Prof. Ken Goldberg, Prof. Przemysław Kazienko, Prof. Ronald Maier and Prof. Robert Tolksdorf. Thanks to 7digital, our media partner for the experimental test, and in particular to Filip Denker. -

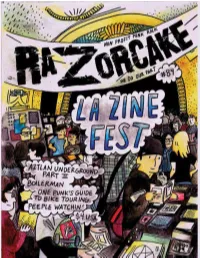

Razorcake Issue #84 As A

WHO WE ARE... Razorcake exists because of you. Whether you contributed any content that was printed in this issue, placed an ad, or are a reader: without your involvement, this magazine would not exist. We are a community that defies geographical boundaries or easy answers. Much of what you will find here is open to interpretation, and that’s how we like it. In mainstream culture the bottom line is profit. In DIY punk the bottom line is a personal decision. We operate in an economy of favors amongst ethical, life-long enthusiasts. And we’re fucking serious about it. Profitless and proud. There’s nothing more laughable than the general public’s perception of punk. Endlessly misrepresented and misunderstood. Exploited and patronized. Let the squares worry about “fitting in.” We know who we are. Within these pages you’ll find unwavering beliefs rooted in a culture that values growth and exploration over tired predictability. There is a rumbling dissonance reverberating within the inner walls of our collective skull. Thank you for contributing to it. Razorcake/Gorsky Press, Inc., a California non-profit corporation, is registered as a charitable organization with the State of California’s Secretary of State, and has been granted official tax exempt status (section 501(c)(3) of the Internal Revenue Code) from the United States IRS. RIP THIS PAGE OUT If you wish to donate through the mail, please rip this page out and send it to: Razorcake/Gorsky Press, Inc. PO Box 42129 Los Angeles, CA 90042 NAME: ADDRESS: EMAIL: DONATION AMOUNT: COMPUTER STUFF: razorcake.org/donate -

Ancient Committee Is an Emo Band! a Game Chef Entry by Dev Purkayastha

Ancient Committee is an Emo Band! a Game Chef entry by Dev Purkayastha Timing: Playable in 10 Sessions of 1 Hour each. Ingredients: ANCIENT COMMITTEE EMOTION (not: glass) See design thread at: http://www.1km1kt.net/forum/ftopic722.html Please don’t look at: http://www.myspace.com/xancientcommitteex ”Ancient Committee is an Emo Band! Play through the ten iterations of a mediocre mid-90s emo band, and use your whine-soaked rock to save the souls of the kids in the audience. Cheer up emokid – you’re on stage!” A Note to the Judges: I’d like to step out of my rules text and explain my use of the game elements. The “EMOTION” element is hopefully obvious; “emo” derives from the word “emotional”, and the genre is all about the open display of emotion. As for the use of “Ancient Committee”: the idea for this game came to me because I realized that this was almost an archetypal emo band name. (Play with the Emo Band Name Generator to see for yourself.) There are already bands like The Postal Service, British Sea Power and The Warren Commission. I feel like starting off with the right band name is a step in the right direction. Beyond this, I try to emphasize sticking to the phrases “Ancient” and “Committee”, and injecting these as epic-sized metaphors into the lyrics. There’s actually a decent precedent for this, in the form of Coheed and Cambria. I hope my choices make more sense now. In any case, thank you for reading! Dear Journal… Posted 19 Mar Mood: Apathetic It sucks that I finished writing that kick@$$ song but it looks like “Ancient Committee” isn’t going to get off the ground. -

Middle Class Music in Suburban Nowhere Land: Emo and the Performance of Masculinity

MIDDLE CLASS MUSIC IN SUBURBAN NOWHERE LAND: EMO AND THE PERFORMANCE OF MASCULINITY Matthew J. Aslaksen A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF ARTS August 2006 Committee: Jeremy Wallach, Advisor Becca Cragin Angela Nelson ii Abstract Jeremy Wallach, Advisor Emo is an emotional form that attracts a great deal of ridicule from much of the punk and indie music communities. Emo is short for “emotional punk” or “emotional hardcore,” but because of the amount of evolution it has undergone it has become a very difficult genre to define. This genre is well known for its almost whiny sound and its preoccupation with relationship problems and emotional instability. As a result, it is seen by many within the more underground music community as an inauthentic, emotionally indulgent form of music. Emo has also very recently become a form that has gained widespread mainstream media appeal with bands such as Dashboard Confessional, Taking Back Sunday, and My Chemical Romance. It is generally consumed by a younger teenaged to early college audience, and it is largely performed by suburban middle class artists. Overall, I have argued that emo represents a challenge to conventional norms of hegemonic middle-class masculinity, a challenge which has come about as a result of feelings of discontent with the emotional repression of this masculinity. In this work I have performed multiple interviews that include both performers and audience members who participate in this type of music. The questions that I ask the subjects of my ethnographic research focus on the meaning of this particular performance to both the audience and performers. -

E304 Anchord Copia Album

Release Date: 11th January 2017 Recommended Singles: 4. New Existence Plug-In 5. Plight Runaway ANCHORD AS A REAL RETURN Tracklist: The first time renowned producer Santi Garcia (head singer and guitarist of No More 1. dvtrephtxlxa Lies and person responsible for the sound of most of BCore’s catalogue, as well 2. Bad Cop as quite a few renowned Spanish and European punk/hardcore bands) saw 3. Christmas 4. New Existence Plug-In Anchord live, it reminded him of the first time he saw Aina (flagship band of BCore 5. Plight Runaway and the Spanish independent scene in the nineties) performing: a special feeling 6. From A Cage that goes further than just watching four extremely young kids that know how to 7. Gone/Everywhere play; he saw a band with hardcore roots and a special performance and creativity 8. Joy Is Lost And Therefore Your Voice that differentiated them clearly from others and that was, ultimately, contagious. 9. Lackpitalism 10. La Muntanya 11. Tambourine Prophets After over two years touring Catalonia, Spain and even Europe with their first songs, published by Saltamarges in 2014 on cassette, they are now ready to release their Selling Points: first LP, ‘As A Real Return’ (2017). If they already proved from the start that their - Recorded and mixed by Santi Garcia at composition game is hereditary of their love for nineties’ melodic hardcore and Ultramarinos Costa Brava. emo-core, this new album shows us how the band have gone straight down the path Master by Victor Garcia at Ultramarinos of genuine exploration with a one way ticket that starts at that station.