Describing Syntax and Semantics



Recommended publications


Administering UniData on UNIX Platforms

UniData: Administering UniData on UNIX Platforms. UDT-720-ADMU-1. Notices. Edition: Publication date: July, 2008. Book number: UDT-720-ADMU-1. Product version: UniData 7.2. Copyright © Rocket Software, Inc. 1988-2010. All Rights Reserved. Trademarks: The following trademarks appear in this publication (trademark, then trademark owner): Rocket Software™, Dynamic Connect®, RedBack®, SystemBuilder™, UniData®, UniVerse™, U2™, U2.NET™, U2 Web Development Environment™, and wIntegrate® (Rocket Software, Inc.); Microsoft® .NET, Microsoft® Office Excel®, Outlook®, Word, Windows®, Windows® 7, and Windows Vista® (Microsoft Corporation); Java™ and all Java-based trademarks and logos (Sun Microsystems, Inc.); UNIX® (X/Open Company Limited). The above trademarks are property of the specified companies in the United States, other countries, or both. All other products or services mentioned in this document may be covered by the trademarks, service marks, or product names as designated by the companies who own or market them. License agreement: This software and the associated documentation are proprietary and confidential to Rocket Software, Inc., are furnished under license, and may be used and copied only in accordance with the terms of such license and with the inclusion of the copyright notice. -

The GNU Binary Utilities

The GNU Binary Utilities. Version cygnus-2.7.1-96q4, May 1993. Roland H. Pesch and Jeffrey M. Osier, Cygnus Support. TEXinfo 2.122 (Cygnus+WRS). Copyright © 1991, 92, 93, 94, 95, 1996 Free Software Foundation, Inc. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions. Contents: Introduction; 1 ar; 1.1 Controlling ar on the command line; 1.2 Controlling ar with a script; 2 ld; 3 nm; 4 objcopy; 5 objdump; 6 ranlib; 7 size; 8 strings; 9 strip; 10 c++filt; 11 nlmconv -

Also Includes Slides and Contents From

The Compilation Toolchain Cross-Compilation for Embedded Systems Prof. Andrea Marongiu ([email protected]) Toolchain The toolchain is a set of development tools used in association with source code or binaries generated from the source code • Enables development in a programming language (e.g., C/C++) • It is used for a lot of operations such as a) Compilation b) Preparing Libraries Most common toolchain is the c) Reading a binary file (or part of it) GNU toolchain which is part of d) Debugging the GNU project • Normally it contains a) Compiler : Generate object files from source code files b) Linker: Link object files together to build a binary file c) Library Archiver: To group a set of object files into a library file d) Debugger: To debug the binary file while running e) And other tools The GNU Toolchain GNU (GNU’s Not Unix) The GNU toolchain has played a vital role in the development of the Linux kernel, BSD, and software for embedded systems. The GNU project produced a set of programming tools. Parts of the toolchain we will use are: -gcc: (GNU Compiler Collection): suite of compilers for many programming languages -binutils: Suite of tools including linker (ld), assembler (gas) -gdb: Code debugging tool -libc: Subset of standard C library (assuming a C compiler). -bash: free Unix shell (Bourne-again shell). Default shell on GNU/Linux systems and Mac OSX. Also ported to Microsoft Windows. -make: automation tool for compilation and build Program development tools The process of converting source code to an executable binary image requires several steps, each with its own tool. -

Riscv-Software-Stack-Tutorial-Hpca2015

Software Tools Bootcamp RISC-V ISA Tutorial — HPCA-21 08 February 2015 Albert Ou UC Berkeley [email protected] Preliminaries To follow along, download these slides at http://riscv.org/tutorial-hpca2015.html 2 Preliminaries . Shell commands are prefixed by a “$” prompt. Due to time constraints, we will not be building everything from source in real-time. - Binaries have been prepared for you in the VM image. - Detailed build steps are documented here for completeness but are not necessary if using the VM. Interactive portions of this tutorial are denoted with: $ echo 'Hello world' . Also as a reminder, these slides are marked with an icon in the upper-right corner: 3 Software Stack . Many possible combinations (and growing) . But here we will focus on the most common workflows for RISC-V software development 4 Agenda 1. riscv-tools infrastructure 2. First Steps 3. Spike + Proxy Kernel 4. QEMU + Linux 5. Advanced Cross-Compiling 6. Yocto/OpenEmbedded 5 riscv-tools — Overview “Meta-repository” with Git submodules for every stable component of the RISC-V software toolchain Submodule Contents riscv-fesvr RISC-V Frontend Server riscv-isa-sim Functional ISA simulator (“Spike”) riscv-qemu Higher-performance ISA simulator riscv-gnu-toolchain binutils, gcc, newlib, glibc, Linux UAPI headers riscv-llvm LLVM, riscv-clang submodule riscv-pk RISC-V Proxy Kernel (riscv-linux) Linux/RISC-V kernel port riscv-tests ISA assembly tests, benchmark suite All listed submodules are hosted under the riscv GitHub organization: https://github.com/riscv 6 riscv-tools — Installation . Build riscv-gnu-toolchain (riscv*-*-elf / newlib target), riscv-fesvr, riscv-isa-sim, and riscv-pk: (pre-installed in VM) $ git clone https://github.com/riscv/riscv-tools $ cd riscv-tools $ git submodule update --init --recursive $ export RISCV=<installation path> $ export PATH=${PATH}:${RISCV}/bin $ ./build.sh . -

The Frege Programming Language (Draft)

The Frege Programming Language (Draft) by Ingo Wechsung, last changed May 14, 2014, 3.21.285. Abstract: This document describes the functional programming language Frege and its implementation for the Java virtual machine. Commonplace features of Frege are type inference, lazy evaluation, modularization and separate compile-ability, algebraic data types and type classes, pattern matching and list comprehension. Distinctive features are, first, that the type system supports higher ranked polymorphic types, and, second, that Frege code is compiled to Java. This allows for maximal interoperability with existing Java software. Any Java class may be used as an abstract data type, Java functions and methods may be called from Frege functions and vice versa. Despite this interoperability feature Frege is a pure functional language as long as impure Java functions are declared accordingly. What is or who was Frege? Friedrich Ludwig Gottlob Frege was a German mathematician, who, in the second half of the 19th century tried to establish the foundation of mathematics in pure logic. Although this attempt failed in the very moment when he was about to publish his book Grundgesetze der Arithmetik, he is nevertheless recognized as the father of modern logic among philosophers and mathematicians. In his essay Funktion und Begriff [1] Frege introduces a function that takes another function as argument and remarks: Eine solche Funktion ist offenbar grundverschieden von den bisher betrachteten; denn als ihr Argument kann nur eine Funktion auftreten. Wie nun Funktionen von Gegenständen grundverschieden sind, so sind auch Funktionen, deren Argumente Funktionen sind und sein müssen, grundverschieden von Funktionen, deren Argumente Gegenstände sind und nichts anderes sein können. -

PowerView Command Reference

PowerView Command Reference. TRACE32 Online Help; TRACE32 Directory; TRACE32 Index; TRACE32 Documents; PowerView User Interface; PowerView Command Reference. Contents: History; ABORT: Abort driver program; AREA: Message windows; AREA.CLEAR: Clear area; AREA.CLOSE: Close output file; AREA.Create: Create or modify message area; AREA.Delete: Delete message area; AREA.List: Display a detailed list of all message areas; AREA.OPEN: Open output file; AREA.PIPE: Redirect area to stdout; AREA.RESet: Reset areas; AREA.SAVE: Save AREA window contents to file; AREA.Select: Select area; AREA.STDERR: Redirect area to stderr; AREA.STDOUT: Redirect area to stdout; AREA.view: Display message area in AREA window; AutoSTOre -

Data Transformation Language (DTL)

DTL: Data Transformation Language. Phillip H. Sherrod. Copyright © 2005-2006. All rights reserved. www.dtreg.com. DTL is a full programming language built into the DTREG program. DTL makes it easy to generate new variables, transform and combine input variables and select records to be used in the analysis. Contents: Introduction; Introduction to the DTL Language; Using DTL For Data Transformations; The main() function; Global Variables; Implicit Global Variables; Explicit Global Variables; Static Global Variables -

File Management Tools

File Management Tools ● gzip and gunzip ● tar ● find ● df and du ● od ● nm and strip ● sftp and scp Gzip and Gunzip ● The gzip utility compresses a specified list of files. After compressing each specified file, it renames it to have a “.gz” extension. ● General form. gzip [filename]* ● The gunzip utility uncompresses a specified list of files that had been previously compressed with gzip. ● General form. gunzip [filename]* Tar (38.2) ● Tar is a utility for creating and extracting archives. It was originally setup for archives on tape, but it now is mostly used for archives on disk. It is very useful for sending a set of files to someone over the network. Tar is also useful for making backups. ● General form. tar options filenames Commonly Used Tar Options c # insert files into a tar file f # use the name of the tar file that is specified v # output the name of each file as it is inserted into or # extracted from a tar file x # extract the files from a tar file Creating an Archive with Tar ● Below is the typical tar command used to create an archive from a set of files. Note that each specified filename can also be a directory. Tar will insert all files in that directory and any subdirectories. tar cvf tarfilename filenames ● Examples: tar cvf proj.tar proj # insert proj directory # files into proj.tar tar cvf code.tar *.c *.h # insert *.c and *.h files # into code.tar Extracting Files from a Tar Archive ● Below is the typical tar command used to extract the files from a tar archive. -

Reference Manual for the Icon Programming Language Version 5 (C Implementation for UNIX)

Reference Manual for the Icon Programming Language Version 5 (C Implementation for UNIX). Cary A. Coutant, Ralph E. Griswold, and Stephen B. Wampler. TR 81-4a, December 1981, Corrected July 1982. Department of Computer Science, The University of Arizona, Tucson, Arizona 85721. This work was supported by the National Science Foundation under Grant MCS79-03890. Copyright © 1981 by Ralph E. Griswold. All rights reserved. No part of this work may be reproduced, transmitted, or stored in any form or by any means without the prior written consent of the copyright owner. CONTENTS. Chapter 1 Introduction: 1.1 Background; 1.2 Scope of the Manual; 1.3 An Overview of Icon; 1.4 Syntax Notation; 1.5 Organization of the Manual. Chapter 2 Basic Concepts and Operations: 2.1 Types; 2.2 Expressions; 2.2.1 Variables and Assignment; 2.2.2 Keywords; 2.2.3 Functions; 2.2.4 Operators; 2.3 Evaluation of Expressions; 2.3.1 Results; 2.3.2 Success and Failure; 2.4 Basic Control Structures; 2.5 Compound Expressions; 2.6 Loop Control; 2.7 Procedures. Chapter 3 Generators and Expression Evaluation: 3.1 Generators; 3.2 Goal-Directed Evaluation; 3.3 Evaluation of Expressions; 3.4 The Extent of Backtracking; 3.5 The Reversal of Effects. Chapter 4 Numbers and Arithmetic Operations: 4.1 Integers; 4.1.1 Literal Integers; 4.1.2 Integer Arithmetic; 4.1.3 Integer Comparison; 4.2 Real Numbers; 4.2.1 Literal Real Numbers; 4.2.2 Real Arithmetic; 4.2.3 Comparison of Real Numbers; 4.3 Mixed-Mode Arithmetic; 4.4 Arithmetic Type Conversion -

The Extent and Cause of the Pre-White Dwarf Instability Strip

The Extent and Cause of the Pre-White Dwarf Instability Strip. M. S. O'Brien, Space Telescope Science Institute, 3700 San Martin Drive, Baltimore, MD 21218; [email protected]. arXiv:astro-ph/9910495v1, 27 Oct 1999. ABSTRACT One of the least understood aspects of white dwarf evolution is the process by which they are formed. The initial stages of white dwarf evolution are characterized by high luminosity, high effective temperature, and high surface gravity, making it difficult to constrain their properties through traditional spectroscopic observations. We are aided, however, by the fact that many H- and He-deficient pre-white dwarfs (PWDs) are multiperiodic g-mode pulsators. These stars fall into two classes, the variable planetary nebula nuclei (PNNV) and the “naked” GW Vir stars. Pulsations in PWDs provide a unique opportunity to probe their interiors, which are otherwise inaccessible to direct observation. Until now, however, the nature of the pulsation mechanism, the precise boundaries of the instability strip, and the mass distribution of the PWDs were complete mysteries. These problems must be addressed before we can apply knowledge of pulsating PWDs to improve understanding of white dwarf formation. This paper lays the groundwork for future theoretical investigations of these stars. In recent years, Whole Earth Telescope observations led to determination of mass and luminosity for the majority of the GW Vir pulsators. With these observations, we identify the common properties and trends PWDs exhibit as a class. We find that pulsators of low mass have higher luminosity, suggesting the range of instability is highly mass-dependent. -

The Extent and Cause of the Pre-White Dwarf Instability Strip

THE ASTROPHYSICAL JOURNAL, 532:1078–1088, 2000 April 1. © 2000. The American Astronomical Society. All rights reserved. Printed in U.S.A. THE EXTENT AND CAUSE OF THE PRE-WHITE DWARF INSTABILITY STRIP. M. S. O'BRIEN, Space Telescope Science Institute, 3700 San Martin Drive, Baltimore, MD 21218; obrien@stsci.edu. Received 1999 July 14; accepted 1999 November 17. ABSTRACT One of the least understood aspects of white dwarf evolution is the process by which they are formed. The initial stages of white dwarf evolution are characterized by high luminosity, high effective temperature, and high surface gravity, making it difficult to constrain their properties through traditional spectroscopic observations. We are aided, however, by the fact that many H- and He-deficient pre-white dwarfs (PWDs) are multiperiodic g-mode pulsators. These stars fall into two classes: the variable planetary nebula nuclei (PNNV) and the “naked” GW Vir stars. Pulsations in PWDs provide a unique opportunity to probe their interiors, which are otherwise inaccessible to direct observation. Until now, however, the nature of the pulsation mechanism, the precise boundaries of the instability strip, and the mass distribution of the PWDs were complete mysteries. These problems must be addressed before we can apply knowledge of pulsating PWDs to improve understanding of white dwarf formation. This paper lays the groundwork for future theoretical investigations of these stars. In recent years, Whole Earth Telescope observations led to determination of mass and luminosity for the majority of the GW Vir pulsators. With these observations, we identify the common properties and trends PWDs exhibit as a class. -

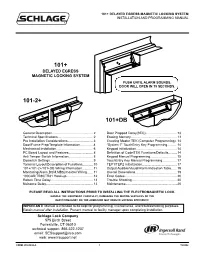

Installation Instructions: Schlage 101 Delayed Egress Magnetic Locking

101+ DELAYED EGRESS MAGNETIC LOCKING SYSTEM INSTALLATION AND PROGRAMMING MANUAL. PUSH UNTIL ALARM SOUNDS. DOOR WILL OPEN IN 15 SECONDS. 101-2+ 101+DB. Contents: General Description; Door Propped Delay (SEC); Technical Specifications; Erasing Memory; Pre Installation Considerations; Creating Master TEK (Computer Programming); Door/Frame Prep/Template Information; “System 7” TouchEntry Key Programming; Mechanical Installation; Keypad Initialization; PC Board Layout and Features; Definition of Code/TEK Functions/Defaults; Anti Tamper Switch Information; Keypad Manual Programming; Dipswitch Settings; TouchEntry Key Manual Programming; Terminal Layout/Description of Functions; TEP1/TEP2 Initialization; 101+/101-2+/101+DB Wiring Information; Output Audible/Visual/Alarm Indication Table; Monitoring (Alarm, DSM, MBS)/Control Wiring; Overall Dimensions; 100CAB/TR80/TR81 Hook-up; Error