Leading One Prediction - Implementation, Generalization, and Application

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Bash Guide for Beginners

Bash Guide for Beginners. Machtelt Garrels, Garrels BVBA <tille wants no spam _at_ garrels dot be>. Version 1.11, last updated 20081227 Edition. Table of Contents: Introduction: 1. Why this guide? 2. Who should read this book? 3. New versions, translations and availability 4. Revision History 5. Contributions 6. Feedback 7. Copyright information 8. What do you need? 9. Conventions used in this -

L#07: PHP Date & Time

L#07: PHP Date & Time. Using Date & Time in PHP, Lecture 7. March 21, 2007 ©2007, I.Sarah Al-Shareef

Table of Content: Overview; Date and Time Functions; Definitions; Displaying Date and Time; Getting Date as Timestamp; Displaying Readable Date; Building Formatting String; Special Formatting Characters for date(); Convert from Readable to Timestamp; Getting Files Dates; Displaying Dates in other languages; Formatting Characters for strftime()

Overview: Date & Time Functions

| Function | Description |
| --- | --- |
| checkdate() | Validates set of Gregorian year, month, and day values (for example, 2005, 3, 17). |
| date_sunrise() | Returns time of sunrise for a given day and location (new in PHP 5). |
| date_sunset() | Returns time of sunset for a given day and location (new in PHP 5). |
| date() | Formats a local date/time, given a Unix timestamp (for example, 1111035030000 from the introduction to this chapter) and a formatting string. |
| getdate() | Given a Unix timestamp, returns an associative array containing date and time information (defaults to current time). |
| gettimeofday() | Returns an associative array containing information about the current system time. In PHP 5.1, it is possible for this function to return a float as well. |
| gmdate() | Formats a GMT/UTC date/time. Uses the same formatting characters as the date() function. |
| gmmktime() | Converts a set of GMT date/time values into a Unix timestamp (analogous to mktime()). |
| gmstrftime() | Formats a GMT/UTC date/time according to locale settings (similar to strftime() except the time used is GMT/UTC). |

A Novel High Speed Leading Zero Counter for Floating Point Units

Satish Paidi, Rohit Sreerama, K.Neelima / International Journal of Engineering Research and Applications (IJERA) ISSN: 2248-9622 www.ijera.com Vol. 2, Issue 2, Mar-Apr 2012, pp.1103-1105

A Novel High Speed Leading Zero Counter For Floating Point Units. Satish Paidi*, Rohit Sreerama*, K.Neelima** *(M.Tech VLSI, Sree Vidyanikethan Engineering College, Tirupathi,) ** (Assistant Professor, Sree Vidyanikethan Engineering College, Tirupathi,)

ABSTRACT: This paper mainly focuses on leading zero counting. In this paper a novel leading zero counter is proposed. New Boolean expressions are derived for the new leading zero counter. We can explore various designs of leading zero counting unit by using the proposed approach. When compared with the known architectures the new circuits can be implemented effectively in both static and dynamic logic and also requires less energy per operation. The proposed leading zero counter can also be integrated with a leading zero anticipation logic for better and fast results. Finally, a simple and effective Leading Zero Counter was proposed and the occupancy rate and the delay characteristics are investigated.

Keywords - Floating Point Unit, Normalization, Leading

... derived Boolean relations that describes the proposed leading zero counter. In section 4 the implementation results and the comparison with the existing method are discussed and section 5 concludes the paper follows by the references.

II. FUNCTIONALITY OF EXISTING LEADING ZERO COUNTING METHOD

This section discusses the method which is used in leading zero counting, their implementation and functionality. A leading zero is any zero bit that leads a number string in positional notation. In binary representation consider the number of consecutive zeros that appear in a word before the first more significant bit that equal to one. -
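The definition of a leading zero quoted in the excerpt above can be illustrated in software. The following is only a minimal sketch, not the circuit proposed in the paper: the function names and the `width` parameter are hypothetical, and the word is assumed to be an unsigned value of fixed width.

```python
def count_leading_zeros(value: int, width: int = 32) -> int:
    """Count the zero bits above the most significant 1 in a fixed-width word.

    Assumption: `value` is an unsigned integer that fits in `width` bits.
    """
    if not 0 <= value < (1 << width):
        raise ValueError("value does not fit in the given width")
    # int.bit_length() gives the number of bits needed to represent the value
    # (0 for value 0), so the remaining high bits of the word are all zeros.
    return width - value.bit_length()


def count_leading_zeros_scan(value: int, width: int = 32) -> int:
    """Same count, obtained by scanning from the most significant bit down."""
    if not 0 <= value < (1 << width):
        raise ValueError("value does not fit in the given width")
    count = 0
    for position in range(width - 1, -1, -1):
        if (value >> position) & 1:
            break  # first 1 bit found: every bit above it was a leading zero
        count += 1
    return count
```

For the 8-bit word `00010110`, both functions report three leading zeros. An all-zero word is treated here as having `width` leading zeros, which is a convention chosen for this sketch.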

The C Programming Language

The C Programming Language, by Brian W. Kernighan and Dennis M. Ritchie. Published by Prentice-Hall in 1988. ISBN 0-13-110362-8 (paperback), ISBN 0-13-110370-9.

Contents: Preface; Preface to the first edition; Introduction.

Chapter 1: A Tutorial Introduction: 1. Getting Started 2. Variables and Arithmetic Expressions 3. The for statement 4. Symbolic Constants 5. Character Input and Output (File Copying, Character Counting, Line Counting, Word Counting) 6. Arrays 7. Functions 8. Arguments - Call by Value 9. Character Arrays 10. External Variables and Scope

Chapter 2: Types, Operators and Expressions: 1. Variable Names 2. Data Types and Sizes 3. Constants 4. Declarations 5. Arithmetic Operators 6. Relational and Logical Operators 7. Type Conversions 8. Increment and Decrement Operators 9. Bitwise Operators 10. Assignment Operators and Expressions 11. Conditional Expressions 12. Precedence and Order of Evaluation

Chapter 3: Control Flow: 1. Statements and Blocks 2. If-Else 3. Else-If 4. Switch 5. Loops - While and For 6. Loops - Do-While 7. Break and Continue 8. Goto and labels

Chapter 4: Functions and Program Structure: 1. Basics of Functions 2. Functions Returning Non-integers 3. External Variables 4. Scope Rules 5. Header Files 6. Static Variables 7. Register Variables 8. Block Structure 9. Initialization 10. Recursion 11. The C Preprocessor (File Inclusion, Macro Substitution, Conditional Inclusion)

Chapter 5: Pointers and Arrays: 1. -

Decimal Fractions

Decimal Fractions. Curriculum Ready. www.mathletics.com. Mathletics Passport © 3P Learning

Decimal fractions allow us to be more accurate with our calculations and measurements. Because most of us have ten fingers, it is thought that this is the reason the decimal fraction system is based around the number 10! So we can think of decimal fractions as being fractions with powers of 10 in the denominator.

Write in this space EVERYTHING you already know about decimal fractions.

Give this a go! Q: To make dark-green coloured paint, you can mix yellow and blue together, using exactly 0.5 (half) as much yellow as you do blue. How much dark-green paint will you make if you use all of the 12.5 mL of blue paint you have? Work through the book for a great way to do this.

How does it work? Place value of decimal fractions. Decimal fractions represent parts of a whole number or object.

[Place value chart: WHOLE places (ten thousands, thousands, hundreds, tens, ones), decimal point, DECIMAL places (tenths, hundredths, thousandths, ten thousandths, hundred thousandths, millionths); neighbouring places differ by a factor of 10.]

1st decimal place: × 1/10 = one tenth. 2nd decimal place: × 1/100 = one hundredth. 3rd decimal place: × 1/1000 = one thousandth. 4th decimal place: × 1/10 000 = one ten thousandth, etc. Add 'th' to the name for decimal place values.

Write the place value of each digit in the number 465.2703 (multiply by multiples of 10 for the integer parts, divide by multiples of 10 for the decimal parts). Expanded forms and place values: 4: 4 × 100 = 400 = 4 hundred. 6: 6 × 10 = 60 = 6 tens (or sixty). Integer parts -

Programming in C

Programming in C. This is a set of notes on Programming in C. They were originally prepared as plain ASCII files using a private set of nroff macros and have now been converted to HTML. Most of the small programming examples are available on-line. Just select the link about the program and then use your WWW browser's ability to save pages in a local file.

Chapter 1 Introduction; Chapter 2 Programming with Integers; Chapter 3 Arithmetic; Chapter 4 Data Types; Chapter 5 Loops and Conditions; Chapter 6 Addresses, Arrays, Pointers and Strings; Chapter 7 Functions and Storage Management; Chapter 8 The Pre-Processor and Standard Libraries; Chapter 9 Command Line Arguments and File Handling; Chapter 10 Structures, Unions and Typedefs; Chapter 11 Separate Compilation of C Modules; Chapter 12 Efficiency, Economy and Portability.

There are various other sources of information available on-line. If you are really interested in the C programming language you should 1. Read the comp.lang.c newsgroup 2. Obtain and read the comp.lang.c FAQ 3. Study the submissions to the International Obfuscated C Code Contest. If you are interested in systems programming especially in the Unix context there are further notes on a wide range of topics. Peter Burden, http://www.scit.wlv.ac.uk/~jphb/cbook/html/

Introduction to C Programming - Introduction. Chapter chap1 section 1. By time-honoured convention the first C program anybody writes is known as the "hello world" program. -

Bits and Bit Sequences Integers

Data
● (Some repeating CS1083, ECE course)
● bits and bit sequences
● integers (signed and unsigned)
● bit vectors
● strings and characters
● floating point numbers
● hexadecimal and octal notations

Bits and Bit Sequences
● Fundamentally, we have the binary digit, 0 or 1.
● More interesting forms of data can be encoded into a bit sequence.
● 00100 = “drop the secret package by the park entrance” 00111 = “Keel Meester Bond”
● A given bit sequence has no meaning unless you know how it has been encoded.
● Common things to encode: integers, doubles, chars. And machine instructions.

Encoding things in bit sequences (From textbook)
● Floats
● Machine Instructions

How Many Bit Patterns?
● With k bits, you can have 2^k different patterns
● 00..00, 00..01, 00..10, … , 11..10, 11..11
● Remember this! It explains much...
● E.g., if you represent numbers with 8 bits, you can represent only 256 different numbers.

Names for Groups of Bits
● nibble or nybble: 4 bits
● octet: 8 bits, always. Seems pedantic.
● byte: 8 bits except with some legacy systems. In this course, byte == octet.
● after that, it gets fuzzy (platform dependent). For 32-bit ARM,
  – halfword: 16 bits
  – word: 32 bits

Unsigned Binary (review)
● We can encode non-negative integers in unsigned binary. (base 2)
● 10110 = 1*2^4 + 0*2^3 + 1*2^2 + 1*2^1 + 0*2^0 represents the mathematical concept of “twenty-two”. In decimal, this same concept is written as 22 = 2*10^1 + 2*10^0.
● Converting binary to decimal is just a matter of adding up powers of 2, and writing the result in decimal.
● Going from decimal to binary is trickier. -
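The two conversions mentioned in the excerpt above can be sketched in a few lines. This is an illustrative sketch only: the function names are hypothetical and not taken from the course notes, and the decimal-to-binary direction uses the common repeated-division-by-2 method, which the excerpt itself does not spell out.

```python
def binary_to_decimal(bits: str) -> int:
    """Add up the powers of 2 selected by the 1 bits of an unsigned bit string."""
    total = 0
    for position, bit in enumerate(reversed(bits)):
        if bit not in ("0", "1"):
            raise ValueError("bit strings may contain only 0 and 1")
        total += int(bit) * 2 ** position
    return total


def decimal_to_binary(number: int) -> str:
    """Repeatedly divide by 2; the remainders are the bits, least significant first."""
    if number < 0:
        raise ValueError("unsigned binary encodes only non-negative integers")
    if number == 0:
        return "0"
    digits = []
    while number > 0:
        digits.append(str(number % 2))
        number //= 2
    return "".join(reversed(digits))


def pattern_count(k: int) -> int:
    """Number of distinct bit patterns that k bits can hold."""
    return 2 ** k
```

With these definitions, `binary_to_decimal("10110")` evaluates to 22, `decimal_to_binary(22)` gives back `"10110"`, and `pattern_count(8)` is 256, matching the 8-bit example in the notes.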

Safe Prescribing

Special issue: PRESCRIBING. THE ROLE OF SAFE PRESCRIBING IN THE PREVENTION OF MEDICATION ERRORS

Medications are the most common cause of adverse events in hospitalized patients [1,2]. Adverse drug events (ADEs) occur during 6.5% of hospital admissions and 1% of patients suffer disabling injuries as a result of an ADE [1,2,3]. Common causes of medication errors are listed in Table 1 [4]. Because adverse reactions to medications can be serious or fatal, it is extremely important that drug allergies and reactions are documented. Additionally, medications can be easily confused as a result of look-alike or sound-alike names and abbreviations (e.g., Toradol and tramadol, O.D. can be interpreted as once daily or right eye, and AZT could represent azathioprine or zidovudine). It is important that everyone utilize strategies to decrease medication errors. Several tips for safe prescribing of medications are reviewed in Table 2 and should be incorporated into daily practice [4]. When writing orders for patients in the hospital, the same tips are useful.

... order is written, flag the patient’s chart and place it in the rack by the clerical associate to ensure that the nurse and the pharmacist are aware of the order. This process is essential because the omission of medication administration, usually first time doses, is the most frequently reported medication error type at Shands Jacksonville. Obviously, no one group of healthcare professionals is responsible for all errors. Therefore, preventing the medication errors must be a multi-disciplinary effort. For any further questions, please contact your liaison pharmacist or the Drug Information Service at 244-4185. (Continued on page 2)

"Preventing medication errors must be a multi-disciplinary effort."

Table 1. Common Causes of Medication Errors: • Ambiguous strength designation • Look-alike or sound-alike names or use of -

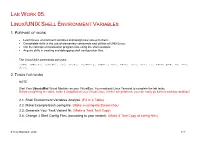

Lab Work 05. Linux/UNIX Shell Environment Variables

LAB WORK 05. LINUX/UNIX SHELL ENVIRONMENT VARIABLES. © Yuriy Shamshin, 2020

1. PURPOSE OF WORK
• Learn to use environment variables and assign new values to them;
• Consolidate skills in the use of elementary commands and utilities of UNIX/Linux;
• Get the concept of initialization program files using the shell example;
• Acquire skills in creating and debugging shell configuration files.

The Linux/UNIX commands are used: sudo, adduser, userdel, set, unset, readonly, export, env, more, less, man, ls, echo, pwd, wc, who, date, ...

2. TASKS FOR WORK
NOTE. Start Your UbuntuMini Virtual Machine on your VirtualBox. You need only Linux Terminal to complete the lab tasks. Before completing the tasks, make a SnapShot of your Virtual Linux. If there are problems, you can easily go back to working condition!
2.1. Shell Environment Variables Analyze. (Fill in a Table)
2.2. Make Example bash config file. (Make a config-file Screenshot)
2.3. Generate Your Task Variant Nr. (Make a Task Text Copy)
2.4. Change 3 Shell Config Files (according to your variant). (Make 3 Text Copy of config-files)

3. REPORT
Make a report about this work and send it to the teacher’s email (use a docx Report Blank).
REPORT FOR LAB WORK 05: LINUX/UNIX SHELL ENVIRONMENT VARIABLES. Student Name Surname, Student ID (nV), Date.
3.1. Insert Completing Shell Environment Variables Analyze Table,
3.2. Insert Screenshot with Your Config-file and working Clock,
3.3. Insert Text Copy of Task for Your Variant,
3.4. Insert Text Copy of Your Modification for 3 Configuration Files with the necessary comments: ~/.bash_profile, ~/.bashrc, ~/.bash_logout. -

The Concept of Zero Continued…

International Journal of Scientific & Engineering Research Volume 3, Issue 12, December-2012, ISSN 2229-5518. Research Topic: The Concept of Zero Continued…

Abstract: 0 (zero; /ˈziːroʊ/ ZEER-oh) is both a number[1] and the numerical digit used to represent that number in numerals. It plays a central role in mathematics as the additive identity of the integers, real numbers, and many other algebraic structures. As a digit, 0 is used as a placeholder in place value systems. In the English language, 0 may be called zero, nought or (US) naught (/ˈnɔːt/), nil, or "o". Informal or slang terms for zero include zilch and zip. Ought or aught (/ˈɔːt/), have also been used.

0 is the integer immediately preceding 1. In most cultures, 0 was identified before the idea of negative things that go lower than zero was accepted.

In the BC calendar era, the year 1 BC is the first year before AD 1; no room is reserved for a year zero. By contrast, in astronomical year numbering, the year 1 BC is numbered 0, the year 2 BC is numbered −1, and so on.

The oldest known text to use a decimal place-value system, including a zero, is the Jain text from India entitled the Lokavibhâga, dated 458 AD. This text uses Sanskrit numeral words for the digits, with words such as the Sanskrit word for void for zero.

The first known use of special glyphs for the decimal digits that includes the indubitable appearance of a symbol for the digit zero, a small circle, appears on a stone inscription found at the Chaturbhuja Temple -
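The relationship between BC years and astronomical year numbering stated in the excerpt above (1 BC is year 0, 2 BC is year −1) amounts to a one-line formula. The sketch below is illustrative only; the function names are hypothetical and do not come from the cited article.

```python
def bc_to_astronomical(year_bc: int) -> int:
    """Convert a BC year (1 BC, 2 BC, ...) to astronomical year numbering."""
    if year_bc < 1:
        raise ValueError("BC years start at 1; there is no year 0 BC")
    # 1 BC maps to 0, 2 BC to -1, and so on.
    return 1 - year_bc


def ad_to_astronomical(year_ad: int) -> int:
    """AD years keep their number in astronomical year numbering."""
    if year_ad < 1:
        raise ValueError("AD years start at 1")
    return year_ad
```

Because the astronomical scale includes a year 0, the interval between two years is a plain subtraction: from 2 BC to AD 1 is `ad_to_astronomical(1) - bc_to_astronomical(2)`, that is, 2 years.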

The Science of Computing II Living with Cyber Lesson 1: Number Systems and Binary Arithmetic Pillar: Computer Architecture the M

The Science of Computing II Living with Cyber Lesson 1: Number Systems and Binary Arithmetic Pillar: Computer Architecture The most basic unit of storage is the bit. At any point in time, a bit can be in only one of two states: “0” or “1.” Bits are generally implemented as two-state electronic devices (e.g., a current is flowing or not flowing, a voltage is high or low, a magnetic field is polarized in one direction or the opposite direction, etc). The symbol “0” is used to represent one of these states and the symbol “1” is used to represent the other. It really doesn’t matter which symbol (the “0” or the “1”) represents which physical state (e.g., “high” or “low”). All that is important is that the symbols be assigned consistently and that the two states be clearly distinguishable from each other. Sequences (or “patterns”) of bit values can be used to represent numbers (both positive and negative, integer and real), alphanumeric characters, images, sounds, and even program instructions. In fact, anything that can be stored in a computer must ultimately be stored as a pattern of bit values. The binary number system Today, virtually all civilizations use a base ten counting system. However, this has not always been so. In primitive tally systems, for example, there is one stroke for each object being counted. For example, the following tally pattern represents twelve: Some tally systems group strokes together. The one illustrated above places five strokes in each group. Most early systems attached little or no meaning to the order of the symbols used to represent a number. -

Regular Expressions: the Complete Tutorial

Regular Expressions: The Complete Tutorial. Jan Goyvaerts. Copyright © 2006, 2007 Jan Goyvaerts. All rights reserved. Last updated July 2007. No part of this book shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without written permission from the author. This book is published exclusively at http://www.regular-expressions.info/print.html Every effort has been made to make this book as complete and as accurate as possible, but no warranty or fitness is implied. The information is provided on an “as is” basis. The author and the publisher shall have neither liability nor responsibility to any person or entity with respect to any loss or damages arising from the information contained in this book.

Table of Contents. Tutorial: 1. Regular Expression Tutorial 2. Literal Characters 3. First Look at How a Regex Engine Works Internally 4. Character Classes or Character Sets