An Analysis of the Duckworth Lewis Method in the Context of English County Cricket

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Tournament Rules Match Rules Net Run Rate

Tournament Rules

- Only employees nominated by member AMCs and holding a valid employment card shall be allowed to participate.
- The organizing committee is providing all teams with 15 color kits. No one will be allowed to wear any other kit. Extra kits (on request) will cost PKR 2,000 per kit. Teams may name a maximum of 18 players.
- The tournament will consist of 12 teams in total, divided into 2 groups, with each team playing 5 group matches.
- At the end of the league matches, the top 2 teams from each group will qualify for the semi-finals.
- Points shall be awarded on the following system: win/walkover (3pts), tie/washout (1pt), lost (0pts).
- In case the points are equal, the team with the better net run rate (NRR) will qualify for the semi-finals (the formula is given below).
- The reporting time for the morning match will be 9:00am sharp (toss at 9:15am, match starting at 9:30am), and for the afternoon match the reporting time will be 1:00pm sharp (toss at 1:15pm, match starting at 1:30pm).
- A walkover will be awarded if a team (minimum of 7 players) fails to appear within 30 minutes of the scheduled time.
- In the case of a tie in a knockout match, the result will be decided by a super-over.
- The team's captain is responsible for maintaining discipline and a healthy atmosphere during the matches; any grievances should be brought to the committee's notice by the captain only.
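The points system and NRR tie-break in the rules above can be sketched in Python. The excerpt is cut off before the tournament's own NRR formula appears, so the sketch assumes the commonly used definition (runs scored per over faced, minus runs conceded per over bowled). The names `TeamRecord` and `rank_group` are hypothetical, and special cases such as a side being bowled out early are not handled.

```python
from dataclasses import dataclass, field

# Points per result, as listed in the rules: win/walkover 3, tie/washout 1, lost 0.
POINTS = {"win": 3, "walkover": 3, "tie": 1, "washout": 1, "lost": 0}


@dataclass
class TeamRecord:
    """Group-stage totals for one team (hypothetical structure)."""
    name: str
    results: list = field(default_factory=list)  # e.g. ["win", "lost", "tie"]
    runs_scored: int = 0
    balls_faced: int = 0      # legal deliveries faced across all matches
    runs_conceded: int = 0
    balls_bowled: int = 0     # legal deliveries bowled across all matches

    def points(self) -> int:
        return sum(POINTS[result] for result in self.results)

    def net_run_rate(self) -> float:
        # Assumed standard definition: run rate for minus run rate against.
        # Both ball counts must be non-zero.
        rate_for = self.runs_scored / (self.balls_faced / 6)
        rate_against = self.runs_conceded / (self.balls_bowled / 6)
        return rate_for - rate_against


def rank_group(teams):
    """Order teams by points, breaking ties on net run rate."""
    return sorted(teams, key=lambda t: (t.points(), t.net_run_rate()), reverse=True)


# Made-up figures for illustration only.
group = [
    TeamRecord("B", ["win", "win", "lost"], 540, 360, 600, 360),
    TeamRecord("A", ["win", "win", "lost"], 600, 360, 540, 360),
    TeamRecord("C", ["win", "tie", "tie"], 500, 360, 500, 360),
]
for team in rank_group(group):
    print(team.name, team.points(), round(team.net_run_rate(), 3))
```

Counting balls rather than overs avoids the ambiguity of cricket's "19.3 overs" notation, where the digit after the point is a ball count and not a decimal fraction.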

Issue 43: Summer 2010/11

Journal of the Melbourne Cricket Club Library, Issue 43, Summer 2010/2011

Croſse: f. A Croſier, or Biſhops ſtaffe; also, a crooked ſtaffe wherewith boyes play at cricket.

This Issue: Celebrating the 400th anniversary of our oldest item, Ashes to Ashes, Some notes on the Long Room, and Mollydookers in Australian Test Cricket

Library News

“How do you celebrate a Quadricentennial?” With an exhibition celebrating four centuries of cricket in print

A range of articles in this edition of The Yorker complement the new exhibition commemorating the 400th anniversary of the publication of the oldest book in the MCC Library, Randle Cotgrave’s Dictionarie of the French and English tongues, published in London in 1611, the same year as the King James Bible and the premiere of Shakespeare’s last solo play, The Tempest. The Dictionarie is a scarce book, but not especially rare.

• The famous Ashes obituaries published in Cricket, a weekly record of the game, and Sporting Times in 1882 and the verse pasted on to the Darnley Ashes Urn printed in Melbourne Punch in 1883.
• The large paper edition of W.G. Grace’s book that he presented to the Melbourne Cricket Club during his tour in

The new cataloguing team has swung into action. This year has seen a commitment

MCC Library visits MCC Library

From December 6, 2010 to February 4, 2010, staff in the MCC Library will be hosting a colleague from our reciprocal club in London, Neil Robinson, research officer at the Marylebone Cricket Club’s Arts and Library Department. This visit will be an important opportunity for both Neil’s professional development, as he observes the weekday and event day

Regulations Governing the Qualification and Registration of Cricketers

REGULATIONS GOVERNING THE QUALIFICATION AND REGISTRATION OF CRICKETERS

1 DEFINITIONS

In these Regulations:

1.1 “Appeal Panel” means the Appeal Panel, appointed pursuant to Regulation 11.

1.2 “Approved Cricket” means a Domestic Cricket Event as defined by the ICC in Regulation 32 of the ICC Regulations.

1.3 “Arbitration Panel” means the Arbitration Panel, appointed pursuant to Regulation 8.

1.4 “Competition” means each of the Specsavers County Championship, the Vitality Blast and the Royal London One-Day Cup.

1.5 “Competitive County Cricket” means: (a) the Specsavers County Championship and the Unicorns Championship; (b) the Royal London One-Day Cup, Vitality Blast, Unicorns Trophy and the Unicorns T20; and (c) any other similar competition authorised by and designated as Competitive County Cricket by the ECB which for the avoidance of doubt shall include matches between First Class Counties and MCC Universities and matches between a First Class County or the Unicorns and a representative side of a Full or Associate Member Country.

1.6 “County”, except where the context may otherwise require, means any one of the County Cricket Clubs from time to time playing in the County Championship or the Minor Counties Championship.

1.7 “CDC” means the Cricket Discipline Commission of the ECB.

1.8 “Cricketer” means a cricketer who is or seeks to be qualified and/or registered in accordance with these Regulations.

1.9 “ECB” means the England and Wales Cricket Board, or a duly appointed committee thereof.

1.10 “ECB Regulations” means any ECB rules, regulations, codes or policies as are in force from time to time.

Fifty Years of Surrey Championship Cricket

Fifty Years of Surrey Championship Cricket: History, Memories, Facts and Figures

• How it all started
• How the League has grown
• A League Chairman’s season
• How it might look in 2043?
• Top performances across fifty years

Surrey Championship History 1968 - 2018. April 2018. President: Roland Walton.

Contents

- Diary of anniversary activities and special events
- Foreword by Peter Murphy (Chairman)
- The Surrey Championship – Micky Stewart
- Message from Richard Thompson
- The beginning - memories
- President of Surrey Championship
- Reflections and observations on the 1968 season
- SCCCA - final 1968 tables
- The first match - Saturday May 4th 1968
- Ten years of league cricket (1968 - 1977)
- The first twenty years - some personal memories
- Message from Martin Bicknell
- The history of the Surrey Championship 1968 to 1989
- The umpires panel
- The second 25 years
- Restructuring and the Premier League 1994 - 2005
- The evolution of the Surrey Championship
- Today’s ECB perspective of league cricket
- Normandy - from grass roots to the top
- Diary of a league chairman’s season
- Surrey Championship competition
- Expansion and where are they now?
- Old grounds ... and new!
- Sponsors of the Surrey Championship
- What might the league be like in 25 years?
- Surrey Championship capped Surrey players
- History

Matador BBQs One-Day Cup

06. MATADOR BBQS ONE-DAY CUP, Playing Handbook | 2015-16

| Match | Date | Home Team | Away Team | Venue | Start Time (AEDT) | Broadcaster |
|---|---|---|---|---|---|---|
| 1 | Monday, 5 October 15 | New South Wales | CA XI | Bankstown Oval | 10:00 am | None |
| 2 | Monday, 5 October 15 | Queensland | Tasmania | North Sydney Oval | 10:00 am | None |
| 3 | Monday, 5 October 15 | South Australia | Western Australia | Hurstville Oval | 10:00 am | None |
| 4 | Wednesday, 7 October 15 | CA XI | Victoria | Hurstville Oval | 10:00 am | None |
| 5 | Thursday, 8 October 15 | New South Wales | South Australia | North Sydney Oval | 10:00 am | GEM |
| 6 | Friday, 9 October 15 | Victoria | Queensland | Blacktown International Sportspark 1 | 2:00 pm | GEM |
| 7 | Saturday, 10 October 15 | CA XI | Tasmania | Bankstown Oval | 10:00 am | None |
| 8 | Saturday, 10 October 15 | Western Australia | New South Wales | Blacktown International Sportspark 1 | 2:00 pm | GEM |
| 9 | Sunday, 11 October 15 | South Australia | Queensland | North Sydney Oval | 10:00 am | GEM |
| 10 | Monday, 12 October 15 | Victoria | Western Australia | Blacktown International Sportspark 1 | 10:00 am | GEM |
| 11 | Monday, 12 October 15 | Tasmania | New South Wales | Hurstville Oval | 10:00 am | None |
| 12 | Wednesday, 14 October 15 | South Australia | Tasmania | Blacktown International Sportspark 1 | 10:00 am | GEM |
| 13 | Thursday, 15 October 15 | Western Australia | CA XI | North Sydney Oval | 10:00 am | GEM |
| 14 | Friday, 16 October 15 | Victoria | South Australia | Bankstown Oval | 10:00 am | None |
| 15 | Friday, 16 October 15 | Queensland | New South Wales | Drummoyne Oval | 2:00 pm | |

Arxiv:1908.07372V1 [Physics.Soc-Ph] 12 Aug 2019 We Introduce the Concept of Using SDE for the Game of Cricket Using a Very Rudimentary Model in Subsection 2.1

Stochastic differential theory of cricket

Santosh Kumar Radha, Department of Physics, Case Western Reserve University

Abstract

A new formalism for analyzing the progression of a cricket game using a stochastic differential equation (SDE) is introduced. This theory enables a quantitative way of representing every team using three key variables which have physical meaning associated with them. This is in contrast with the traditional system of rating/ranking teams based on a combination of different statistical cumulants. Furthermore, using this formalism, a new method to calculate the winning probability as a function of the number of balls bowled is given.

Keywords: Stochastic Differential Equation, Cricket, Sports, Math, Physics

1. Introduction

Sports, as a social entertainer, exist because of the unpredictable nature of their outcomes. The recent World Cup final between England and New Zealand serves as a prime example of this unpredictability. Even when one team is heavily favoured, there is a chance that the other team will win, and that likelihood varies by the particular sport as well as the teams involved. There have been many studies showing that unpredictability is an unavoidable fact of sports [1], despite which there have been numerous attempts at predicting the outcome of sports [5]. Though different, there have been considerable efforts directed towards predicting the future of other fields, including financial markets [4], arts and entertainment award events [6], and politics [7]. There has been a wide range of statistical analysis performed on sports, primarily baseball and football [8, 2, 3], but very little of it has been applied to understanding cricket. Complex system analysis [9], machine learning models [10, 12] and various statistical data analyses [13, 14, 15, 16, 17] have been used in previous studies to describe and analyze cricket.

WWCC Official Dodgeball Rules

WWCC OFFICIAL DODGEBALL RULES

PLAY AREA: The game is played on the basketball court. Center Line: A player may not step on or over the center line. They may reach over to retrieve a ball.

EQUIPMENT: a) Players must wear proper attire (tennis shoes, shirts etc.). b) An “official” WWCC dodgeball is used. c) With 6 players, 5 dodgeballs will be used per court.

TEAMS: A team consists of 6 players on the court. A team may play with fewer than 6 (that would be a disadvantage as there are fewer players to eliminate). Extra Players: No more than 6 players per team may be on the court at a time. If a team has additional players, they may rotate in at the conclusion of a game.

TIME: a) Best of three games. b) Teams will play for 3 minutes on their side of the court. Once those 3 minutes are over, players from either team will be able to enter the opposing team's side of the court.

PLAY: a) To start the game each team has 2 dodgeballs. There will be one dodgeball placed on the center line. b) If a player is hit by a “fly ball”, before it hits the floor and after being thrown by a player on the opposing team, that player is out. c) If a player catches a “fly ball”, the thrower is out. ALSO: The other team returns an eliminated player to their team.

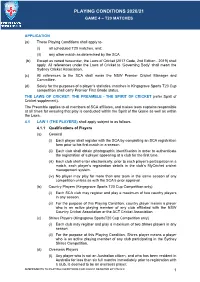

Game 4 Playing Conditions 2020-21

PLAYING CONDITIONS 2020/21 GAME 4 – T20 MATCHES

APPLICATION (a) These Playing Conditions shall apply to: (i) all scheduled T20 matches; and (ii) any other match as determined by the SCA. (b) Except as varied hereunder, the Laws of Cricket (2017 Code, 2nd Edition - 2019) shall apply. All references under the Laws of Cricket to ‘Governing Body’ shall mean the Sydney Cricket Association. (c) All references to the SCA shall mean the NSW Premier Cricket Manager and Committee. (d) Solely for the purposes of a player’s statistics, matches in the Kingsgrove Sports T20 Cup competition shall carry Premier First Grade status.

THE LAWS OF CRICKET: THE PREAMBLE - THE SPIRIT OF CRICKET (refer Spirit of Cricket supplement). The Preamble applies to all members of SCA affiliates, and makes team captains responsible at all times for ensuring that play is conducted within the Spirit of the Game as well as within the Laws.

4.1 LAW 1 (THE PLAYERS) shall apply subject to the following.

4.1.1 Qualifications of Players (a) General (i) Each player shall register with the SCA by completing an SCA registration form prior to his first match in a season. (ii) Each club shall obtain photographic identification in order to authenticate the registration of a player appearing at a club for the first time. (iii) Each club shall enter electronically, prior to each player’s participation in a match, each player’s registration details in the club’s MyCricket cricket management system. (iv) No player may play for more than one team in the same season of any competition unless with the SCA’s prior approval.

Tape Ball Cricket

TAPE BALL CRICKET RULES HIGHLIGHTS

There will be absolutely ZERO TOLERANCE (no use of any tobacco, no pan parag, or no non-tumbaco pan parag, or any smell of any of these items). Forfeit time is five (5) minutes after the scheduled game start time. If a team is not “Ready to Play” within five (5) minutes after the scheduled game start time, then that team will forfeit and the opposing team will be declared the winner (assuming the opposing team is ready to play). A team must have a minimum of twelve (12) players and a maximum of eighteen (18). A match will consist of two teams with eleven (11) players including a team captain. A match may not start if either team consists of fewer than eight (8) players. The blade of the bat shall have a conventional flat face. An Ihsan tennis ball covered with WHITE ELECTRICAL TAPE (TAPE TENNIS BALL) will be used for all competitions. When applying any of the above-mentioned rules OR when taking any disciplinary actions, ABSOLUTELY NO CONSIDERATION will be given to what was done in the previous tournaments. Each team is required to provide one (1) player (players can rotate) at all times to sit near or with the scorer so that he / she can record names and statistics correctly for each player.

GENERAL INFORMATION, RULES AND REGULATIONS FOR CRICKET

There will be absolutely ZERO TOLERANCE (no use of any tobacco, no pan parag, or no non-tumbaco pan parag, or any smell of any of these items). Umpire’s decision will be final during all matches.

Stumped at the Supermarket: Making Sense of Nutrition Rating Systems 2 Table of Contents

Stumped at the Supermarket: Making Sense of Nutrition Rating Systems, 2010

Kate Armstrong, JD, Public Health Law Center, William Mitchell College of Law, St. Paul, Minnesota

Commissioned by the National Policy & Legal Analysis Network to Prevent Childhood Obesity (NPLAN), nplan.org, phlpnet.org

Support for this paper was provided by a grant from the Robert Wood Johnson Foundation, through the National Policy & Legal Analysis Network to Prevent Childhood Obesity (NPLAN). NPLAN is a program of Public Health Law & Policy (PHLP). PHLP is a nonprofit organization that provides legal information on matters relating to public health. The legal information provided in this document does not constitute legal advice or legal representation. For legal advice, readers should consult a lawyer in their state.

Table of Contents

- Introduction
- Emergence of Nutrition Rating Systems in the United States
- Health Organization Labels
- Food Manufacturers’ Front-of-Package Labeling Systems (2004-2007)
- Food Retailers’ Nutrition Scoring and Rating Systems (2006-2009)
- Development and Suspension of Smart Choices (2007-2009)
- Nutrition Rating Systems: A Bad Idea, or Just Too Much of a Good Thing?
- A Critique of Nutrition Rating Systems
- Multiple Nutrition Rating Systems: Causing Consumer Confusion?
- Nutrition Rating Systems Abroad: Lessons Learned from Foreign Examples
- FDA Regulation of Point-of-Purchase Food Labeling: Implications for Nutrition Rating Systems
- Overview of FDA’s Regulatory Authority Over Food Labeling
- Past FDA Activity Surrounding Front-of-Package Labeling and Nutrition Rating Systems
- Recent and Future FDA Activity Surrounding Point-of-Purchase Food Labeling

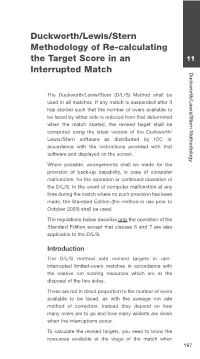

Duckworth/Lewis/Stern Methodology of Re-Calculating the Target Score in an 11

Duckworth/Lewis/Stern Methodology of Re-calculating the Target Score in an Interrupted Match

Duckworth/Lewis/Stern Methodology

The Duckworth/Lewis/Stern (D/L/S) Method shall be used in all matches. If any match is suspended after it has started such that the number of overs available to be faced by either side is reduced from that determined when the match started, the revised target shall be computed using the latest version of the Duckworth/Lewis/Stern software as distributed by ICC in accordance with the instructions provided with that software and displayed on the screen. Where possible, arrangements shall be made for the provision of back-up capability, in case of computer malfunction, for the operation or continued operation of the D/L/S. In the event of computer malfunction at any time during the match where no such provision has been made, the Standard Edition (the method in use prior to October 2003) shall be used. The regulations below describe only the operation of the Standard Edition except that clauses 6 and 7 are also applicable to the D/L/S.

Introduction

The D/L/S method sets revised targets in rain-interrupted limited-overs matches in accordance with the relative run scoring resources which are at the disposal of the two sides. These are not in direct proportion to the number of overs available to be faced, as with the average run rate method of correction. Instead they depend on how many overs are to go and how many wickets are down when the interruptions occur.
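The excerpt stops before the calculation itself, so the following is only a minimal sketch of how a resource-based revised target is commonly described for the Standard Edition, not the regulation's own text. It assumes the resource percentages `r1` and `r2` have already been looked up from the published resource table (not reproduced here), and that `G50`, the average score for an uninterrupted 50-over innings, takes an illustrative value of 245. The function name `revised_target` is hypothetical.

```python
import math

# Assumption: illustrative average 50-over total; the governing body sets the real value.
G50 = 245


def revised_target(team1_score: int, r1: float, r2: float, g50: float = G50) -> int:
    """Sketch of a Standard Edition style target for the side batting second.

    r1 and r2 are the percentage resources available to Team 1 and Team 2,
    taken from the resource table (an input here, not computed).
    """
    if r2 < r1:
        # Team 2 has fewer resources: scale Team 1's score down in proportion.
        par = team1_score * r2 / r1
    elif r2 > r1:
        # Team 2 has more resources: add the extra runs expected from them.
        par = team1_score + g50 * (r2 - r1) / 100
    else:
        par = team1_score
    # The target is one more than the par score rounded down.
    return math.floor(par) + 1


# Hypothetical resource figures, for illustration only.
print(revised_target(250, r1=100.0, r2=72.5))
print(revised_target(100, r1=60.0, r2=90.0))
```

Scaling by resources rather than by overs is the point of the passage above: two sides with the same number of overs left can hold very different resources depending on wickets in hand.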

Prediction of the Outcome of a Twenty-20 Cricket Match Arjun Singhvi, Ashish V Shenoy, Shruthi Racha, Srinivas Tunuguntla

Prediction of the outcome of a Twenty-20 Cricket Match

Arjun Singhvi, Ashish V Shenoy, Shruthi Racha, Srinivas Tunuguntla

Abstract—Twenty20 cricket, sometimes written Twenty-20, and often abbreviated to T20, is a short form of cricket. In a Twenty20 game the two teams of 11 players have a single innings each, which is restricted to a maximum of 20 overs. This version of cricket is especially unpredictable and is one of the reasons it has gained popularity over recent times. However, in this project we try four different approaches for predicting the results of T20 Cricket Matches. Specifically we take into account: previous performance statistics of the players involved in the competing teams, ratings of players obtained from reputed cricket statistics websites, clustering the players with similar performance statistics and using an ELO based approach to rate players.

performance is a big priority for ICC and also for the channels broadcasting the matches. Hence solving an exciting problem such as determining the features of players and teams that determine the outcome of a T20 match would have considerable impact on the way cricket analytics is done today. The following are some of the terminologies used in cricket:

1) Over: In the sport of cricket, an over is a set of six balls bowled from one end of a cricket pitch.
2) Average Runs: In cricket, a player’s batting average is the total number of runs they have scored, divided by the number of times they have been out. Since the number of runs a player scores