New Gradient Methods for Bandwidth Selection in Bivariate Kernel Density Estimation (Siloko, I


Recommended publications

-

Journal Abbreviations

Abbreviations of Names of Serials This list gives the form of references used in Mathematical Reviews (MR). not previously listed ⇤ The abbreviation is followed by the complete title, the place of publication journal indexed cover-to-cover § and other pertinent information. † monographic series Update date: July 1, 2016 4OR 4OR. A Quarterly Journal of Operations Research. Springer, Berlin. ISSN Acta Math. Hungar. Acta Mathematica Hungarica. Akad. Kiad´o,Budapest. § 1619-4500. ISSN 0236-5294. 29o Col´oq. Bras. Mat. 29o Col´oquio Brasileiro de Matem´atica. [29th Brazilian Acta Math. Sci. Ser. A Chin. Ed. Acta Mathematica Scientia. Series A. Shuxue † § Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro. Wuli Xuebao. Chinese Edition. Kexue Chubanshe (Science Press), Beijing. ISSN o o † 30 Col´oq. Bras. Mat. 30 Col´oquio Brasileiro de Matem´atica. [30th Brazilian 1003-3998. ⇤ Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro. Acta Math. Sci. Ser. B Engl. Ed. Acta Mathematica Scientia. Series B. English § Edition. Sci. Press Beijing, Beijing. ISSN 0252-9602. † Aastaraam. Eesti Mat. Selts Aastaraamat. Eesti Matemaatika Selts. [Annual. Estonian Mathematical Society] Eesti Mat. Selts, Tartu. ISSN 1406-4316. Acta Math. Sin. (Engl. Ser.) Acta Mathematica Sinica (English Series). § Springer, Berlin. ISSN 1439-8516. † Abel Symp. Abel Symposia. Springer, Heidelberg. ISSN 2193-2808. Abh. Akad. Wiss. G¨ottingen Neue Folge Abhandlungen der Akademie der Acta Math. Sinica (Chin. Ser.) Acta Mathematica Sinica. Chinese Series. † § Wissenschaften zu G¨ottingen. Neue Folge. [Papers of the Academy of Sciences Chinese Math. Soc., Acta Math. Sinica Ed. Comm., Beijing. ISSN 0583-1431. -

Elenco Delle Riviste Scientifiche Dell'area 13 - Valido a Partire Dal 4 Luglio 2018 TITOLO ISSN 900 2036-8836 1989

Elenco delle riviste Scientifiche dell'Area 13 - Valido a partire dal 4 luglio 2018 TITOLO ISSN 900 2036-8836 1989. RIVISTA DI DIRITTO PUBBLICO E SCIENZE POLITICHE 1720-4240 4OR 1619-4500 4OR 1614-2411 A.W.R. BULLETIN 0001-2947 AAA TAC, ACOUSTICAL ARTS AND ARTIFACTS. TECHNOLOGY, AESTHETICS, COMMUNICATION. 1824-6176 AAPS PHARMSCITECH 1530-9932 ABACUS 0001-3072 ABRUZZO CONTEMPORANEO 1127-5995 ABSTRACT AND APPLIED ANALYSIS 1085-3375 ACADEMIC EMERGENCY MEDICINE 1069-6563 ACADEMIC EXCHANGE QUARTERLY 1096-1453 ACADEMIC MEDICINE 1040-2446 ACADEMICUS 2079-3715 ACADEMY OF ACCOUNTING AND FINANCIAL STUDIES JOURNAL 1096-3685 ACADEMY OF BANKING STUDIES JOURNAL 1939-2230 ACADEMY OF MANAGEMENT LEARNING & EDUCATION 1537-260X ACADEMY OF MANAGEMENT PERSPECTIVES 1558-9080 ACADEMY OF MANAGEMENT REVIEW 0363-7425 ACCIDENT ANALYSIS AND PREVENTION 0001-4575 ACCOUNTING & TAXATION 1944-592X ACCOUNTING AND BUSINESS RESEARCH 0001-4788 ACCOUNTING AND FINANCE 0810-5391 ACCOUNTING AND FINANCE 2249-3964 ACCOUNTING AND FINANCE RESEARCH 1927-5994 ACCOUNTING AND FINANCE RESEARCH 1927-5986 ACCOUNTING AND THE PUBLIC INTEREST 1530-9320 ACCOUNTING AUDITING & ACCOUNTABILITY 0951-3574 ACCOUNTING EDUCATION 0963-9284 ACCOUNTING FORUM 0155-9982 ACCOUNTING HISTORIANS JOURNAL 0148-4184 ACCOUNTING HISTORY 1749-3374 ACCOUNTING HISTORY 1032-3732 ACCOUNTING HISTORY REVIEW 2155-2851 ACCOUNTING HISTORY REVIEW 2155-286X ACCOUNTING HORIZONS 0888-7993 ACCOUNTING HORIZONS 1558-7975 ACCOUNTING IN EUROPE 1744-9480 ACCOUNTING REVIEW 0001-4826 ACCOUNTING, AUDITING & ACCOUNTABILITY JOURNAL 1368-0668 -

Wykaz Czasopism Naukowych Dla Dyscypliny MATEMATYKA

www.irsw.pl Wykaz czasopism naukowych dla dyscypliny MATEMATYKA (wg Komunikatu Ministra Nauki i Szkolnictwa Wyższego z dnia 31 lipca 2019 r. w sprawie wykazu czasopism naukowych i recenzowanych materiałów z konferencji międzynarodowych wraz z przypisaną liczbą punktów) Stan prawny na 20.12.2019 r. Unikatowy Identyfikator Tytuł 1 issn e-issn Tytuł 2 issn e-issn Punkty Czasopisma ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER Abhandlungen aus dem Mathematischen Seminar der Universitat 29 0025-5858 1865-8784 0025-5858 70 UNIVERSITAT HAMBURG Hamburg 76 ACM Communications in Computer Algebra 1932-2232 1932-2240 ACM Communications in Computer Algebra 1932-2232 20 83 ACM Transactions on Algorithms 1549-6325 1549-6333 ACM Transactions on Algorithms 1549-6325 140 106 ACM Transactions on Modeling and Computer Simulation 1049-3301 1558-1195 ACM Transactions on Modeling and Computer Simulation 1049-3301 70 115 ACM Transactions on Spatial Algorithms and Systems 2374-0353 2374-0361 ACM Transactions on Spatial Algorithms and Systems 2374-0353 2374-0361 70 154 ACTA APPLICANDAE MATHEMATICAE 0167-8019 1572-9036 Acta Applicandae Mathematicae 0167-8019 1572-9036 70 156 ACTA ARITHMETICA 0065-1036 1730-6264 Acta Arithmetica 0065-1036 70 204 Acta et Commentationes Universitatis Tartuensis de Mathematica 1406-2283 2228-4699 Acta et Commentationes Universitatis Tartuensis de Mathematica 1406-2283 2228-4699 20 233 ACTA MATHEMATICA 0001-5962 1871-2509 Acta Mathematica 0001-5962 200 234 ACTA MATHEMATICA HUNGARICA 0236-5294 1588-2632 Acta Mathematica Hungarica 0236-5294 -

Abbreviations of Names of Serials

Abbreviations of Names of Serials This list gives the form of references used in Mathematical Reviews (MR). ∗ not previously listed E available electronically The abbreviation is followed by the complete title, the place of publication § journal reviewed cover-to-cover V videocassette series and other pertinent information. † monographic series ¶ bibliographic journal E 4OR 4OR. Quarterly Journal of the Belgian, French and Italian Operations Research ISSN 1211-4774. Societies. Springer, Berlin. ISSN 1619-4500. §Acta Math. Sci. Ser. A Chin. Ed. Acta Mathematica Scientia. Series A. Shuxue Wuli † 19o Col´oq. Bras. Mat. 19o Col´oquio Brasileiro de Matem´atica. [19th Brazilian Xuebao. Chinese Edition. Kexue Chubanshe (Science Press), Beijing. (See also Acta Mathematics Colloquium] Inst. Mat. Pura Apl. (IMPA), Rio de Janeiro. Math.Sci.Ser.BEngl.Ed.) ISSN 1003-3998. † 24o Col´oq. Bras. Mat. 24o Col´oquio Brasileiro de Matem´atica. [24th Brazilian §ActaMath.Sci.Ser.BEngl.Ed. Acta Mathematica Scientia. Series B. English Edition. Mathematics Colloquium] Inst. Mat. Pura Apl. (IMPA), Rio de Janeiro. Science Press, Beijing. (See also Acta Math. Sci. Ser. A Chin. Ed.) ISSN 0252- † 25o Col´oq. Bras. Mat. 25o Col´oquio Brasileiro de Matem´atica. [25th Brazilian 9602. Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro. § E Acta Math. Sin. (Engl. Ser.) Acta Mathematica Sinica (English Series). Springer, † Aastaraam. Eesti Mat. Selts Aastaraamat. Eesti Matemaatika Selts. [Annual. Estonian Heidelberg. ISSN 1439-8516. Mathematical Society] Eesti Mat. Selts, Tartu. ISSN 1406-4316. § E Acta Math. Sinica (Chin. Ser.) Acta Mathematica Sinica. Chinese Series. Chinese Math. Abh. Braunschw. Wiss. Ges. Abhandlungen der Braunschweigischen Wissenschaftlichen Soc., Acta Math. -

Mathematical Combinatorics

ISSN 1937 - 1055 VOLUME 1, 2013 INTERNATIONAL JOURNAL OF MATHEMATICAL COMBINATORICS EDITED BY THE MADIS OF CHINESE ACADEMY OF SCIENCES AND BEIJING UNIVERSITY OF CIVIL ENGINEERING AND ARCHITECTURE March, 2013 Vol.1, 2013 ISSN 1937-1055 International Journal of Mathematical Combinatorics Edited By The Madis of Chinese Academy of Sciences and Beijing University of Civil Engineering and Architecture March, 2013 Aims and Scope: The International J.Mathematical Combinatorics (ISSN 1937-1055) is a fully refereed international journal, sponsored by the MADIS of Chinese Academy of Sci- ences and published in USA quarterly comprising 100-150 pages approx. per volume, which publishes original research papers and survey articles in all aspects of Smarandache multi-spaces, Smarandache geometries, mathematical combinatorics, non-euclidean geometry and topology and their applications to other sciences. Topics in detail to be covered are: Smarandache multi-spaces with applications to other sciences, such as those of algebraic multi-systems, multi-metric spaces, , etc.. Smarandache geometries; · · · Differential Geometry; Geometry on manifolds; Topological graphs; Algebraic graphs; Random graphs; Combinatorial maps; Graph and map enumeration; Combinatorial designs; Combinatorial enumeration; Low Dimensional Topology; Differential Topology; Topology of Manifolds; Geometrical aspects of Mathematical Physics and Relations with Manifold Topology; Applications of Smarandache multi-spaces to theoretical physics; Applications of Combi- natorics to mathematics and theoretical physics; Mathematical theory on gravitational fields; Mathematical theory on parallel universes; Other applications of Smarandache multi-space and combinatorics. Generally, papers on mathematics with its applications not including in above topics are also welcome. It is also available from the below international databases: Serials Group/Editorial Department of EBSCO Publishing 10 Estes St. -

11 Maggio 1958 [email protected]; [email protected]

Soci corrispondenti Vicenţiu RĂDULESCU Caracal (Romania), 11 Maggio 1958 [email protected]; [email protected] Full Professor at the Department of Mathematics, University of Craiova (Romania). I Classe: socio corrispondente (2016-). Attività e cariche pubbliche o Attività culturali . Professorial Fellow, Institute of Mathematics “Simion Stoilow" of the Romanian Academy, Bucharest, Romania (2007 - ) . Reviewer activity for: Mathematical Reviews (since 1993) Zentralblatt für Mathematik (since 1995) Applied Mechanics Review (since 1999) MAA Reviews (since 2007) . Editorial activities: Acquisition Editor, de Gruyter-Versita Book Publishing Program in Mathematics Editor in Chief of Advances in Nonlinear Analysis (Walter de Gruyter) Associate Editor of Nonlinear Analysis: Theory, Methods & Applications (Elsevier) Associate Editor of the Journal of Mathematical Analysis and Applications (Elsevier Member of the Editorial Board of Complex Variables and Elliptic Equations (Taylor & Francis) Associate Editor of Boundary Value Problems (Springer) Associate Editor of the Electronic Journal of Differential Equations Editor of Advances in Pure and Applied Mathematics (Walter de Gruyter) Associate Editor of Discrete and Continuous Dynamical Systems, Series S (American Institute of Mathematical Sciences) Member of the Editorial Committee of Opuscula Mathematica (Krakow University) Member of the Editorial Board of “MATHlics Research Paper Series Applied MATHematics Journal for Economics" (edited by MEDAlics-Research -

Abbreviations of Names of Serials

Abbreviations of Names of Serials This list gives the form of references used in Mathematical Reviews (MR). ∗ not previously listed The abbreviation is followed by the complete title, the place of publication x journal indexed cover-to-cover and other pertinent information. y monographic series Update date: January 30, 2018 4OR 4OR. A Quarterly Journal of Operations Research. Springer, Berlin. ISSN xActa Math. Appl. Sin. Engl. Ser. Acta Mathematicae Applicatae Sinica. English 1619-4500. Series. Springer, Heidelberg. ISSN 0168-9673. y 30o Col´oq.Bras. Mat. 30o Col´oquioBrasileiro de Matem´atica. [30th Brazilian xActa Math. Hungar. Acta Mathematica Hungarica. Akad. Kiad´o,Budapest. Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro. ISSN 0236-5294. y Aastaraam. Eesti Mat. Selts Aastaraamat. Eesti Matemaatika Selts. [Annual. xActa Math. Sci. Ser. A Chin. Ed. Acta Mathematica Scientia. Series A. Shuxue Estonian Mathematical Society] Eesti Mat. Selts, Tartu. ISSN 1406-4316. Wuli Xuebao. Chinese Edition. Kexue Chubanshe (Science Press), Beijing. ISSN y Abel Symp. Abel Symposia. Springer, Heidelberg. ISSN 2193-2808. 1003-3998. y Abh. Akad. Wiss. G¨ottingenNeue Folge Abhandlungen der Akademie der xActa Math. Sci. Ser. B Engl. Ed. Acta Mathematica Scientia. Series B. English Wissenschaften zu G¨ottingen.Neue Folge. [Papers of the Academy of Sciences Edition. Sci. Press Beijing, Beijing. ISSN 0252-9602. in G¨ottingen.New Series] De Gruyter/Akademie Forschung, Berlin. ISSN 0930- xActa Math. Sin. (Engl. Ser.) Acta Mathematica Sinica (English Series). 4304. Springer, Berlin. ISSN 1439-8516. y Abh. Akad. Wiss. Hamburg Abhandlungen der Akademie der Wissenschaften xActa Math. Sinica (Chin. Ser.) Acta Mathematica Sinica. -

Stan Praktyk Publikacyjnych Polskich Czasopism Naukowych W 2017 Roku

STAN PRAKTYK PUBLIKACYJNYCH POLSKICH CZASOPISM NAUKOWYCH W 2017 ROKU EMANUEL KULCZYCKI STYCZEŃ 2018 Publikacja finansowana jest w ramach projektu „Badanie wzorców doskonałości w nauce i sztuce” w ramach programu Ministra Nauki i Szkolnictwa Wyższego pod nazwą „DIALOG” w latach 2017-2019. STRONA PROJEKTU http://excellence-project.zut.edu.pl JAK CYTOWAĆ RAPORT Kulczycki, E. (2018). Stan praktyk publikacyjnych polskich czasopism naukowych w 2017 roku. DOI: 10.6084/m9.figshare.5683813 Chciałbym podziękować Anecie Drabek, Markowi Hołowieckiemu, Przemysławowi Korytkowskiemu oraz Ewie A. Rozkosz, którzy przyczynili się do powstania tego raportu zarówno poprzez gromadzenie danych jak i przekazane uwagi do pierwszych wersji niniejszego dokumentu. SPIS TREŚCI PODSTAWOWE INFORMACJE O POLSKICH CZASOPISMACH 1 1. Cel raportu .........................................................................................................6 2. Przedmiot analiz ................................................................................................ 7 3. Charakterystyka tematyczna polskich czasopism naukowych .........................9 4. Miejsce wydawania – podział geograficzny .....................................................17 5. Częstotliwość wydawania ................................................................................ 18 6. Czasopisma z wyliczonym wskaźnikiem Impact Factor ..................................24 7. Czasopisma indeksowane na listach European Reference Index for the Humanities ......................................................................................... -

Wykaz Czasopism Naukowych Lp. Tytuł 1 Tytuł 2 Punkty 29 ACTA

Wykaz czasopism naukowych Lp. Tytuł 1 Tytuł 2 Punkty 29 ACTA MATHEMATICA Acta Mathematica 200 76 ACTA NUMERICA Acta Numerica 200 83 ADVANCES IN MATHEMATICS Advances in Mathematics 200 106 AMERICAN JOURNAL OF MATHEMATICS American Journal of Mathematics 200 115 Analysis & PDE Analysis and PDE 200 154 ANNALES SCIENTIFIQUES DE L ECOLE NORMALE SUPERIEURE Annales Scientifiques de l'Ecole Normale Superieure 200 156 ANNALS OF MATHEMATICS Annals of Mathematics 200 204 ANNALS OF STATISTICS Annals of Statistics 200 233 BIOINFORMATICS Bioinformatics 200 234 BULLETIN OF THE AMERICAN MATHEMATICAL SOCIETY Bulletin of the American Mathematical Society 200 235 CALCULUS OF VARIATIONS AND PARTIAL DIFFERENTIAL EQUATIONS Calculus of Variations and Partial Differential Equations 200 236 COMMUNICATIONS ON PURE AND APPLIED MATHEMATICS Communications on Pure and Applied Mathematics 200 237 COMPOSITIO MATHEMATICA Compositio Mathematica 200 238 DUKE MATHEMATICAL JOURNAL Duke Mathematical Journal 200 239 FOUNDATIONS OF COMPUTATIONAL MATHEMATICS Foundations of Computational Mathematics 200 267 GEOMETRIC AND FUNCTIONAL ANALYSIS Geometric and Functional Analysis 200 320 GEOMETRY & TOPOLOGY Geometry and Topology 200 338 INVENTIONES MATHEMATICAE Inventiones Mathematicae 200 434 JOURNAL DE MATHEMATIQUES PURES ET APPLIQUEES Journal des Mathematiques Pures et Appliquees 200 447 JOURNAL FUR DIE REINE UND ANGEWANDTE MATHEMATIK Journal für die Reine und Angewandte Mathematik 200 448 JOURNAL OF ALGEBRAIC GEOMETRY Journal of Algebraic Geometry 200 449 JOURNAL OF DIFFERENTIAL GEOMETRY Journal of Differential Geometry 200 455 Journal of Mathematical Logic Journal of Mathematical Logic 200 456 JOURNAL OF THE AMERICAN MATHEMATICAL SOCIETY Journal of the American Mathematical Society 200 457 JOURNAL OF THE AMERICAN STATISTICAL ASSOCIATION Journal of the American Statistical Association 200 458 JOURNAL OF THE EUROPEAN MATHEMATICAL SOCIETY Journal of the European Mathematical Society 200 JOURNAL OF THE ROYAL 
STATISTICAL SOCIETY SERIES B-STATISTICAL Journal of the Royal Statistical Society. -

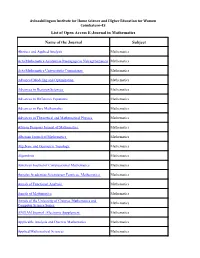

Name of the Journal Subject List of Open Access E-Journal In

Avinashilingam Institute for Home Science and Higher Education for Women Coimbatore-43 List of Open Access E-Journal in Mathematics Name of the Journal Subject Abstract and Applied Analysis Mathematics Acta Mathematica Academiae Paedagogicae Nyiregyhaziensis Mathematics Acta Mathematica Universitatis Comenianae Mathematics Advanced Modeling and Optimization Mathematics Advances in Decision Sciences Mathematics Advances in Difference Equations Mathematics Advances in Pure Mathematics Mathematics Advances in Theoretical and Mathematical Physics Mathematics African Diaspora Journal of Mathematics Mathematics Albanian Journal of Mathematics Mathematics Algebraic and Geometric Topology Mathematics Algorithms Mathematics American Journal of Computational Mathematics Mathematics Annales Academiae Scientiarum Fennicae. Mathematica Mathematics Annals of Functional Analysis Mathematics Annals of Mathematics Mathematics Annals of the University of Craiova. Mathematics and Mathematics Computer Science Series ANZIAM Journal : Electronic Supplement Mathematics Applicable Analysis and Discrete Mathematics Mathematics Applied Mathematical Sciences Mathematics Applied Mathematics Mathematics Applied Mathematics E - Notes Mathematics Applied Sciences Mathematics Archivum Mathematicum Mathematics Armenian Journal of Mathematics Mathematics Asian Journal of Algebra Mathematics Asian Journal of Mathematics & Statistics Mathematics Australian & New Zealand Industrial and Applied Mathematics Mathematics Journal Austrian Journal of Statistics Mathematics Azerbaijan -

Mat 02 Issn 1 Titolo

MAT 02 % MCQ ISSN 1 TITOLO 2011 2012 2013 2014 ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER 0025-5858 UNIVERSITAT HAMBURG 29.2% 58.5% 48.2% 67.6% 0065-1036 ACTA ARITHMETICA 71.5% 60.9% 64.1% 63.0% ACTA ET COMMENTATIONES UNIVERSITATIS TARTUENSIS DE 1406-2283 MATHEMATICA 8.8% 16.2% 3.5% 7.7% 0001-5962 ACTA MATHEMATICA 100.0% 100.0% 100.0% 99.3% 0236-5294 ACTA MATHEMATICA HUNGARICA 56.7% 53.9% 50.0% 41.5% 0252-9602 ACTA MATHEMATICA SCIENTIA 31.7% 27.1% 40.1% 39.8% ACTA MATHEMATICA SINICA, CHINESE 0583-1431 SERIES 23.6% 19.0% 19.4% 16.5% ACTA MATHEMATICA SINICA, ENGLISH 1439-8516 SERIES 56.7% 52.5% 41.9% 45.1% ACTA MATHEMATICA UNIVERSITATIS 0862-9544 COMENIANAE 23.6% 23.9% 18.3% 21.1% 0251-4184 ACTA MATHEMATICA VIETNAMICA 29.2% 32.4% 33.1% 32.0% ADVANCED STUDIES IN CONTEMPORARY MATHEMATICS 1229-3067 (KYUNGSHANG) 45.4% 77.8% 71.8% 38.7% 0196-8858 ADVANCES IN APPLIED MATHEMATICS 85.6% 87.0% 87.0% 81.7% 0001-8708 ADVANCES IN MATHEMATICS 96.5% 96.5% 96.5% 96.5% Advances in Mathematics of 1930-5346 Communications 42.6% 52.5% 52.1% 69.7% ADVANCES IN PURE AND APPLIED 1867-1152 MATHEMATICS 38.0% 28.2% 28.9% 35.9% 0001-9054 AEQUATIONES MATHEMATICAE 54.9% 50.7% 52.1% 48.6% 1012-9405 AFRIKA MATEMATIKA 3.9% 12.0% 36.6% 17.6% AKCE International Journal of Graphs and 0972-8600 Combinatorics 11.6% 21.8% 16.9% 12.0% 1937-0652 ALGEBRA & NUMBER THEORY 90.5% 91.5% 91.5% 93.0% 1726-3255 ALGEBRA AND DISCRETE MATHEMATICS 19.0% 16.2% 16.9% 18.7% 1005-3867 ALGEBRA COLLOQUIUM 29.2% 27.1% 28.2% 24.6% 0002-5240 ALGEBRA UNIVERSALIS 48.6% 43.3% 46.5% 45.1% ALGEBRAS AND REPRESENTATION 1386-923X THEORY 74.3% 63.0% 71.8% 70.8% 0002-9327 AMERICAN JOURNAL OF MATHEMATICS 95.8% 97.2% 96.8% 96.8% 0002-9890 AMERICAN MATHEMATICAL MONTHLY 43.7% 36.6% 40.1% 35.9% Analele Stiintifice ale Universitatii ``Al. -

Instructions for Authors

Instructions for authors The journal Opuscula Mathematica original research articles that are of significant importance in all areas of Discrete Mathematics, Functional Analysis, Differential Equations, Mathematical Physics, Nonlinear Analysis, Numerical Analysis, Probability Theory and Statistics, Theory of Optimal Control and Optimization, Financial Mathematics and Mathematical Economic Theory, Operations Research and other areas of Applied Mathematics. OPEN ACCESS POLICY. Opuscula Mathematica is an open access reasearch journal. This means that the full texts of articles are freely available to users who may read, download, print, and redistribute them without a subscription. SUBMISSION OF MANUSCRIPT. In order to submit a paper for publication, the authors should send a PDF file by e-mail to the address: [email protected] or two copies of the manuscript to the Editorial Office: OPUSCULA MATHEMATICA AGH University of Science and Technology Faculty of Applied Mathematics al. A. Mickiewicza 30, 30-059 Krakow, Poland tel.: +48 12 617 35 86, +48 12 617 31 68 fax: +48 12 617 31 65 http://www.opuscula.agh.edu.pl Clarity of exposition, readability, and timeliness of the contents are the prime criteria in accepting papers submitted for publication. Only original papers will be considered. Manuscripts are accepted for review with the understanding that the same work has not been and will not be and is not being currently submitted elsewhere. Submission for publication must have been approved by all of the authors and by the institution where the work was carried out. Further, that any person cited as a source of personal communications must have approved such citation.