Fast Extrinsic Calibration of a Laser Rangefinder to a Camera

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

“Digital Single Lens Reflex”

PHOTOGRAPHY GENERIC ELECTIVE SEM-II DSLR stands for “Digital Single Lens Reflex”. In simple language, a DSLR is a digital camera that uses a mirror mechanism to either reflect light from a camera lens to an optical viewfinder (which is an eyepiece on the back of the camera that one looks through to see what they are taking a picture of) or let light fully pass onto the image sensor (which captures the image) by moving the mirror out of the way. Although single lens reflex cameras have been available in various shapes and forms since the 19th century with film as the recording medium, the first commercial digital SLR with an image sensor appeared in 1991. Compared to point-and-shoot and phone cameras, DSLR cameras typically use interchangeable lenses. Take a look at the following image of an SLR cross section (image courtesy of Wikipedia): When you look through a DSLR viewfinder / eyepiece on the back of the camera, whatever you see is passed through the lens attached to the camera, which means that you could be looking at exactly what you are going to capture. Light from the scene you are attempting to capture passes through the lens into a reflex mirror (#2) that sits at a 45 degree angle inside the camera chamber, which then forwards the light vertically to an optical element called a “pentaprism” (#7). The pentaprism then converts the vertical light to horizontal by redirecting the light through two separate mirrors, right into the viewfinder (#8). When you take a picture, the reflex mirror (#2) swings upwards, blocking the vertical pathway and letting the light directly through.

Completing a Photography Exhibit Data Tag

Completing a Photography Exhibit Data Tag Current Data Tags are available at: https://unl.box.com/s/1ttnemphrd4szykl5t9xm1ofiezi86js Camera Make & Model: Indicate the brand and model of the camera, such as Google Pixel 2, Nikon Coolpix B500, or Canon EOS Rebel T7. Focus Type: • Fixed Focus means the photographer is not able to adjust the focal point. These cameras tend to have a large depth of field. This might include basic disposable cameras. • Auto Focus means the camera automatically adjusts the optics in the lens to bring the subject into focus. The camera typically selects what to focus on. However, the photographer may also be able to select the focal point, using a touch screen for example, but the camera will automatically adjust the lens. This might include digital cameras and mobile device cameras, such as phones and tablets. • Manual Focus allows the photographer to adjust and control the lens’ focus by hand, usually by turning the focus ring. Camera Type: Indicate whether the camera is digital or film. (The following questions are for Unit 2 and 3 exhibitors only.) Did you manually adjust the aperture, shutter speed, or ISO? Indicate whether you adjusted these settings to capture the photo. Note: Regardless of whether or not you adjusted these settings manually, you must still identify the image’s specific F-Stop, Shutter Speed, ISO, and Focal Length settings. “Auto” is not an acceptable answer. Digital cameras automatically record this information for each photo captured. This information, referred to as Metadata, is attached to the image file and goes with it when the image is downloaded to a computer, for example.

Session Outline: History of the Daguerreotype

Fundamentals of the Conservation of Photographs SESSION: History of the Daguerreotype INSTRUCTOR: Grant B. Romer SESSION OUTLINE ABSTRACT The daguerreotype process evolved out of the collaboration of Louis Jacques Mande Daguerre (1787-1851) and Nicephore Niepce, which began in 1827. During their experiments to invent a commercially viable system of photography, a number of photographic processes were evolved which contributed elements that led to the daguerreotype. Following Niepce’s death in 1833, Daguerre continued experimentation and discovered in 1835 the basic principle of the process. Later, investigation of the process by prominent scientists led to important understandings and improvements. By 1843 the process had reached technical perfection and remained the commercially dominant system of photography in the world until the mid-1850s. The image quality of the fine daguerreotype set the photographic standard and the photographic industry was established around it. The standardized daguerreotype process after 1843 entailed seven essential steps: plate polishing, sensitization, camera exposure, development, fixation, gilding, and drying. The daguerreotype process is explored more fully in the Technical Note: Daguerreotype. The daguerreotype image is seen as a positive to full effect through a combination of the reflection of the plate surface and the scattering of light by the imaging particles. Housings exist in great variety of style, usually following the fashion of miniature portrait presentation. The daguerreotype plate is extremely vulnerable to mechanical damage and the deteriorating influences of atmospheric pollutants. Hence, highly colored and obscuring corrosion films are commonly found on daguerreotypes. Many daguerreotypes have been damaged or destroyed by uninformed attempts to wipe these films away.

Sample Manuscript Showing Specifications and Style

Information capacity: a measure of potential image quality of a digital camera Frédéric Cao, Frédéric Guichard, Hervé Hornung DxO Labs, 3 rue Nationale, 92100 Boulogne Billancourt, FRANCE ABSTRACT The aim of the paper is to define an objective measurement for evaluating the performance of a digital camera. The challenge is to mix different flaws involving geometry (such as distortion or lateral chromatic aberrations), light (such as luminance and color shading), or statistical phenomena (such as noise). We introduce the concept of information capacity that accounts for all the main defects that can be observed in digital images, and that can be due either to the optics or to the sensor. The information capacity describes the potential of the camera to produce good images. In particular, digital processing can correct some flaws (like distortion). Our definition of information takes possible correction into account and the fact that processing can neither retrieve lost information nor create some. This paper extends some of our previous work where the information capacity was only defined for RAW sensors. The concept is extended for cameras with optical defects such as distortion, lateral and longitudinal chromatic aberration or lens shading. Keywords: digital photography, image quality evaluation, optical aberration, information capacity, camera performance database 1. INTRODUCTION The evaluation of a digital camera is a key factor for customers, whether they are vendors or final customers. It relies on many different factors such as the presence or not of some functionalities, ergonomics, price, or image quality. Each separate criterion is itself quite complex to evaluate, and depends on many different factors. The case of image quality is a good illustration of this topic.

Ground-Based Photographic Monitoring

United States Department of Agriculture, Forest Service, Pacific Northwest Research Station. General Technical Report PNW-GTR-503, May 2001. Ground-Based Photographic Monitoring. Frederick C. Hall. Author: Frederick C. Hall is senior plant ecologist, U.S. Department of Agriculture, Forest Service, Pacific Northwest Region, Natural Resources, P.O. Box 3623, Portland, Oregon 97208-3623. Paper prepared in cooperation with the Pacific Northwest Region. Abstract: Hall, Frederick C. 2001. Ground-based photographic monitoring. Gen. Tech. Rep. PNW-GTR-503. Portland, OR: U.S. Department of Agriculture, Forest Service, Pacific Northwest Research Station. 340 p. Land management professionals (foresters, wildlife biologists, range managers, and land managers such as ranchers and forest land owners) often have need to evaluate their management activities. Photographic monitoring is a fast, simple, and effective way to determine if changes made to an area have been successful. Ground-based photo monitoring means using photographs taken at a specific site to monitor conditions or change. It may be divided into two systems: (1) comparison photos, whereby a photograph is used to compare a known condition with field conditions to estimate some parameter of the field condition; and (2) repeat photographs, whereby several pictures are taken of the same tract of ground over time to detect change. Comparison systems deal with fuel loading, herbage utilization, and public reaction to scenery. Repeat photography is discussed in relation to landscape, remote, and site-specific systems. Critical attributes of repeat photography are (1) maps to find the sampling location and of the photo monitoring layout; (2) documentation of the monitoring system to include purpose, camera and film, weather, season, sampling technique, and equipment; and (3) precise replication of photographs.

Digital Photography Basics for Beginners

DIGITAL PHOTOGRAPHY BASICS FOR BEGINNERS by Robert Berdan www.canadiannaturephotographer.com These notes are free to use by anyone learning or teaching photography. 1. Choosing a camera - there are 2 main types of compact cameras A) Point and Shoot Camera (some have interchangeable lenses, most don't) - you view the scene on a liquid crystal display (LCD) screen; some cameras also offer viewfinders. B) Single Lens Reflex (SLR) - cameras with interchangeable lenses let you see the image through the lens that is attached to the camera. What you see is what you get - this feature is particularly valuable when you want to use different types of lenses. Digital SLR Camera with Interchangeable zoom lens. Point and shoot cameras are small, lightweight and can be carried in a pocket. These cameras tend to be cheaper than SLR cameras. Many of these cameras offer a built-in macro mode allowing extreme close-up pictures. Generally the quality of the images on compact cameras is not as good as that from SLR cameras, but they are capable of taking professional quality images. SLR cameras are bigger and usually more expensive. SLRs can be used with a wide variety of interchangeable lenses such as telephoto lenses and macro lenses. SLR cameras offer excellent image quality, lots of features and accessories (some might argue too many features). SLR cameras also shoot at higher frame rates than compact cameras, making them better for action photography. Their disadvantages include higher cost, larger size and weight. They are called Single Lens Reflex because you see through the lens attached to the camera: the light is reflected by a mirror through a prism and then the viewfinder.

Evaluation of the Lens Flare

https://doi.org/10.2352/ISSN.2470-1173.2021.9.IQSP-215 © 2021, Society for Imaging Science and Technology Evaluation of the Lens Flare Elodie Souksava, Thomas Corbier, Yiqi Li, François-Xavier Thomas, Laurent Chanas, Frédéric Guichard DXOMARK, Boulogne-Billancourt, France Abstract Flare, or stray light, is a visual phenomenon generally considered undesirable in photography that leads to a reduction of the image quality. In this article, we present an objective metric for quantifying the amount of flare of the lens of a camera module. This includes hardware and software tools to measure the spread of the stray light in the image. A novel measurement setup has been developed to generate flare images in a reproducible way via a bright light source, close in apparent size and color temperature to the sun, both within and outside the field of view of the device. The proposed measurement works on RAW images to characterize and measure the optical phenomenon without being affected by any non-linear processing that the device might implement. Introduction Flare is an optical phenomenon that occurs in response to very bright light sources, often when shooting outdoors. It may appear in various forms in the image, depending on the lens design; typically it appears as colored spots, ghosting, luminous halos, haze, or a veiling glare that reduces the contrast and color saturation in the picture (see Fig. 1). Figure 1. Examples of flare artifacts obtained with our flare setup: (a) veiling glare, (b) luminous halos, (c) haze, (d) colored spot and ghosting.

Food Photography Camera Bag from the Foodtography Desk

FOODTOGRAPHYSCHOOL.COM FOOD PHOTOGRAPHY CAMERA BAG FROM THE FOODTOGRAPHY DESK HEY THERE, Foodtographer! Welcome! We’re so excited you’re interested in learning more about camera gear. As a photographer, getting the right equipment can be a daunting task. Not to mention all the new vocabulary! From makes and models, to focal lengths, fixed frame, cropped sensors, megapixels, and more, things can get confusing. Like, HALP! Luckily, we’re here to help! In this guide, we’re bringing you our best recommendations for everything you need in your camera bag. We even have multiple suggestions based on different price points to help suit a variety of needs. Now go out there and assemble that perfect camera bag! Love & Brownies, Sarah *Some of the links listed are affiliate links. This means if you click on a link and purchase the item, we will receive an affiliate commission from the retailer at no cost to you. Thank you for your support! Cameras First things first, cameras! At its core, a camera is a box that lets in light. There are many different ways that cameras can let in light, all with varying degrees of accuracy when it comes to how they record color, contrast, and light itself. POINT AND SHOOT CAMERAS On the lower-quality end of things are point-and-shoot cameras. These are cameras designed to be easy to use, so much so that you can basically point your camera and shoot! They come with a built-in lens, and are quite limited in their functionality.

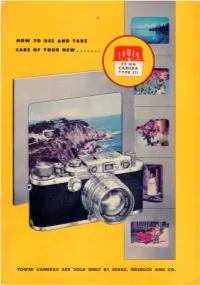

How to Use and Take Care of Your New

HOW TO USE AND TAKE CARE OF YOUR NEW ... TOWER CAMERAS ARE SOLD ONLY BY SEARS, ROEBUCK AND CO. INTRODUCTION Your TOWER 35 mm Type III Camera is a precision instrument. Sears laboratory technicians and buyers have worked with the manufacturers on this camera for more than a year and a half before offering it to you. It is a camera that will last a lifetime, if treated properly. This booklet gives detailed, but simple instructions on its use and proper care. READ BOOKLET CAREFULLY, and keep it handy for reference. We have written this manual in more detail and more technically than is necessary for the ordinary amateur photographer. However, after the amateur has progressed a little in photography, his curiosity will lead him into more advanced stages and the following detailed information is an attempt on our part to anticipate a few of his questions. On the page to the right, we have condensed the steps to be taken when adjusting camera for picture taking. This is all the beginner needs to know. Even the advanced amateur may find it well to memorize these steps and review them occasionally. NOTE: The keyed illustration (A) above is frequently referred to throughout the following pages. For that reason, the manual is bound so that you may leave this page open for handy reference. 1. Remove lens cap from lens, a simple step which is often overlooked. 2. If lens is collapsible type, pull out and lock it in position. Make sure it is firmly locked.

35mm Twin-Lens Reflex Camera

How to assemble and use the supplement: 35mm Twin-Lens Reflex Camera. Assembly time: Approx. one hour. [Diagrams: Assembled Product and Part Names; Parts in the Kit] Assembling the Body 1 Assemble the body side plate (right) 1. Install the tripod mount: Insert the nut into the tripod mount, attach the tripod mount to the side plate (right), and secure with two screws. 2 Assemble the body side plate (left) 1. Install the film advance knob: Insert the film advance knob fixing shaft into the large hole on the shaft holder, and align the shape of the film advance knob with the shape of the tip of the film advance knob fixing shaft so that the movement of the knob matches the movement of the shaft. Tighten the screw while making sure that the knob does not move.

BRAVO! Summer Employee Institute 2012 How to Buy a Digital Camera

BRAVO! Summer Employee Institute 2012 How to Buy a Digital Camera What is a Digital Camera? A digital camera is a camera that captures both still images and video. A digital camera differs from a conventional film camera in that still images and videos are recorded onto an electronic image sensor. Both conventional and digital cameras operate in a similar manner, but unlike film cameras, digital cameras can immediately produce an image that can be displayed on the device's screen. The images that digital cameras record can also be stored or deleted from memory. Digital cameras can also record moving images or videos and capture sound. The first digital camera was invented in 1975 at the Eastman Kodak company by an engineer named Steve Sasson. Steps to Buying a Digital Camera 1. What do you need from a Digital Camera: Determine the main purpose for which you are buying the digital camera. If you are going on vacation and will be using it for family photos, then you do not need a $2,000 digital camera. 2. Do you need a DSLR: A Digital Single Lens Reflex camera is a more expensive digital camera, used for more professional photography sessions, that also gives the photographer more creative control of the shoots. 3. Shutter Speed: The speed between captures is very important depending on the activity being captured. Some cameras lag in the time between captures, while others do not. 4. Image Stabilization: Automatically stabilizes the capture against a person’s shaky hands. Lower-end digital cameras may not have this feature, so it is something the purchaser must look into.

Texas 4-H Photography Project Explore Photography

Texas 4-H Photography Project Explore Photography texas4-h.tamu.edu The members of Texas A&M AgriLife will provide equal opportunities in programs and activities, education, and employment to all persons regardless of race, color, sex, religion, national origin, age, disability, genetic information, veteran status, sexual orientation or gender identity and will strive to achieve full and equal employment opportunity throughout Texas A&M AgriLife. TEXAS 4-H PHOTOGRAPHY PROJECT 4-H With a network of more than 6 million youth, 600,000 volunteers, 3,500 professionals, and more than 25 million alumni, 4-H helps shape youth to move our country and the world forward in ways that no other youth organization can. Texas 4-H Texas 4-H is like a club for kids and teens ages 5-18, and it’s BIG! It’s the largest youth development program in Texas with more than 550,000 youth involved each year. No matter where you live or what you like to do, Texas 4-H has something that lets you be a better you! You may think 4-H is only for your friends with animals, but it’s so much more! You can do activities like shooting sports, food science, healthy living, robotics, fashion, and photography. Description The Texas 4-H Explore series allows 4-H volunteers, educators, members, and youth who may be interested in learning more about 4-H to try some fun and hands-on learning experiences in a particular project or activity area. Each guide features information about important aspects of the 4-H program, and its goal of teaching young people life skills through hands-