Time Series Scenario Composition Framework in Hydroinformatics Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Practical Hydroinformatics

Water Science and Technology Library 68 Practical Hydroinformatics Computational Intelligence and Technological Developments in Water Applications Bearbeitet von Robert J Abrahart, Linda M See, Dimitri P Solomatine 1. Auflage 2008. Buch. xvi, 506 S. Hardcover ISBN 978 3 540 79880 4 Format (B x L): 15,5 x 23,5 cm Gewicht: 944 g Weitere Fachgebiete > Geologie, Geographie, Klima, Umwelt > Geologie > Hydrologie, Hydrogeologie Zu Inhaltsverzeichnis schnell und portofrei erhältlich bei Die Online-Fachbuchhandlung beck-shop.de ist spezialisiert auf Fachbücher, insbesondere Recht, Steuern und Wirtschaft. Im Sortiment finden Sie alle Medien (Bücher, Zeitschriften, CDs, eBooks, etc.) aller Verlage. Ergänzt wird das Programm durch Services wie Neuerscheinungsdienst oder Zusammenstellungen von Büchern zu Sonderpreisen. Der Shop führt mehr als 8 Millionen Produkte. Chapter 2 Data-Driven Modelling: Concepts, Approaches and Experiences D. Solomatine, L.M. See and R.J. Abrahart Abstract Data-driven modelling is the area of hydroinformatics undergoing fast development. This chapter reviews the main concepts and approaches of data-driven modelling, which is based on computational intelligence and machine-learning methods. A brief overview of the main methods – neural networks, fuzzy rule-based systems and genetic algorithms, and their combination via committee approaches – is provided along with hydrological examples and references to the rest of the book. Keywords Data-driven modelling · data mining · computational intelligence · fuzzy rule-based systems · genetic algorithms · committee approaches · hydrology 2.1 Introduction Hydrological models can be characterised as physical, mathematical (including lumped conceptual and distributed physically based models) and empirical. The lat- ter class of models, in contrast to the first two, involves mathematical equations that are not derived from physical processes in the catchment but from analysis of time series data. -

Applications of Social Media in Hydroinformatics: a Survey

Applications of Social Media in Hydroinformatics: A Survey Yufeng Yu, Yuelong Zhu, Dingsheng Wan,Qun Zhao [email protected] College of Computer and Information Hohai University Nanjing, Jiangsu, China Kai Shu, Huan Liu [email protected] School of Computing, Informatics, and Decision Systems Engineering Arizona State University Tempe, Arizona, U.S.A Abstract Floods of research and practical applications employ social media data for a wide range of public applications, including environmental monitoring, water resource managing, disaster and emergency response, etc. Hydroinformatics can benefit from the social media technologies with newly emerged data, techniques and analytical tools to handle large datasets, from which creative ideas and new values could be mined. This paper first proposes a 4W (What, Why, When, hoW) model and a methodological structure to better understand and represent the application of social media to hydroinformatics, then provides an overview of academic research of applying social media to hydroinformatics such as water environment, water resources, flood, drought and water Scarcity management. At last,some advanced topics and suggestions of water-related social media applications from data collection, data quality management, fake news detection, privacy issues , algorithms and platforms was present to hydroinformatics managers and researchers based on previous discussion. Keywords: Social Media, Big Data, Hydroinformatics, Social Media Mining, Water Resource, Data Quality, Fake News 1 Introduction In the past two -

Hydroinformatics and Its Applications at Delft Hydraulics Arthur E

83 © IWA Publishing 1999 Journal of Hydroinformatics | 01.2 | 1999 Hydroinformatics and its applications at Delft Hydraulics Arthur E. Mynett ABSTRACT Hydroinformatics concerns applications of advanced information technologies in the fields Arthur E. Mynett Department of Strategic Research and of hydro-sciences and engineering. The rapid advancement and indeed the very success of Development, hydroinformatics is directly associated with these applications. The aim of this paper is to provide Delft Hydraulics, Postbus 177, Rotterdamseweg 185, 2600 MH Delft, an overview of some recent advances and to illustrate the practical implications of hydroinformatics The Netherlands technologies. A selection of characteristic examples on various topics is presented here, demonstrating the practical use at Delft Hydraulics. Most surely they will be elaborated upon in a more detailed way in forthcoming issues of this Journal. First, a very brief historical background is outlined to characterise the emergence and evolution of hydroinformatics in hydraulic and environmental engineering practice. Recent advances in computational hydraulics are discussed next. Numerical methods are outlined whose main advantages lie in their efficiency and applicability to a very wide range of practical problems. The numerical scheme has to adhere only to the velocity Courant number and is based upon a staggered grid arrangement. Therefore the method is efficient for most free surface flows, including complex networks of rivers and canals, as well as overland flows. Examples are presented for dam break problems and inundation of polders. The latter results are presented within the setting of a Geographical Information System. In general, computational modelling can be viewed as a class of techniques very much based on, and indeed quite well described by, mathematical equations. -

Hydroinformatics Support to Flood Forecasting and Flood Management

Water in Celtic Countries: Quantity, Quality and Climate Variability (Proceedings of the Fourth InterCeltic 23 Colloquium on Hydrology and Management of Water Resources, Guimarães, Portugal, July 2005). IAHS Publ. 310, 2007. Hydroinformatics support to flood forecasting and flood management ADRI VERWEY WL | Delft Hydraulics, Delft, The Netherlands [email protected] Abstract This keynote paper describes state-of-the-art hydroinformatics support to the water sector. A few examples are worked out in some detail, whereas for other examples the reader is guided to recent literature. The focus is on flood forecasting and flood management, with a brief description of the potential of changing technologies that support studies and facilities in this area. Examples are: new data collection methods; data mining from these extensive new sources of information, e.g. the use of genetic programming; data driven modelling techniques, e.g. artificial neural networks; decision support systems; and the provision of a hydroinformatics platform for flood forecasting. Particular attention is given to advances in numerical flood modelling. Over recent years the robustness of numerical models has increased substantially, solving for example, the flooding and drying problem of flood plains and the computation of supercritical flows. In addition, the emergence of hybrid 1D2D models is discussed with their different options for linking model components of flood prone areas. Key words data mining; flood forecasting; flood management; flow resistance; flood -

Hydroinformatics for Hydrology: Data-Driven and Hybrid Techniques

Hydroinformatics for hydrology: data-driven and hybrid techniques Dimitri P. Solomatine UNESCO-IHE Institute for Water Education Hydroinformatics Chair Outline of the course Notion of data-driven modelling (DDM) Data Introduction to some methods Combining models - hybrid models Demonstration of applications – Rainfall-runoff modelling – Reservoir optimization D.P. Solomatine. Data-driven modelling (part 1). 2 •1 Quick start: rainfallrainfall--runoffrunoff modelling FLOW1: effective rainfall and discharge data Discharge [m3/s] Eff.rainfall [mm] 800 0 2 700 4 600 Effective rainfall [mm] 6 500 8 400 10 Discharge [m3/s] 12 300 14 200 16 100 18 0 20 0 500 1000 1500 2000 2500 Time [hrs] D.P. Solomatine. Data-driven modelling. Applications. 3 Quick start: how to calculate runoff one step ahead? SF – Snow RF – Rain EA – Evapotranspiration SP – Snow cover SF RF IN – Infiltration EA R – Recharge A. Lumped conceptual model SM – Soil moisture CFLUX – Capillary transport SP UZ – Storage in upper reservoir IN PERC – Percolation SM LZ – Storage in lower reservoir R CFLUX Qo – Fast runoff component Q0 Q1 – Slow runoff component UZ Q – Total runoff PERC Q1 Q=Q0+Q1 Transform LZ function B. Data-driven (regression) model, based on past data: Q (t+1) = f (REt, REt-1, REt-2, REt-3, REt-4, REt-5, Qt, Qt-1, Qt-2) FLOW1: effective rainfall and discharge data Discharge [m3/s] where f is a non-linear function Eff.rainfall [mm] 800 0 2 700 4 600 Effective rainfall [mm] 6 500 8 400 10 Discharge [m3/s] 12 300 14 200 16 100 18 0 20 0 500 1000 1500 2000 2500 4 D.P. -

The Water Informatics in Science and Engineering (WISE) Centre for Doctoral Training

Hydrol. Earth Syst. Sci., 25, 2721–2738, 2021 https://doi.org/10.5194/hess-25-2721-2021 © Author(s) 2021. This work is distributed under the Creative Commons Attribution 4.0 License. Hydroinformatics education – the Water Informatics in Science and Engineering (WISE) Centre for Doctoral Training Thorsten Wagener1,2,9, Dragan Savic3,4, David Butler4, Reza Ahmadian5, Tom Arnot6, Jonathan Dawes7, Slobodan Djordjevic4, Roger Falconer5, Raziyeh Farmani4, Debbie Ford4, Jan Hofman3,6, Zoran Kapelan4,8, Shunqi Pan5, and Ross Woods1,2 1Department of Civil Engineering, University of Bristol, Bristol, UK 2Cabot Institute, University of Bristol, Bristol, UK 3KWR Water Research Institute, Nieuwegein, the Netherlands 4Centre for Water Systems, College of Engineering, Mathematics and Physical Sciences, University of Exeter, Exeter, UK 5Hydro-environmental Research Centre, School of Engineering, Cardiff University, Cardiff, UK 6Water Innovation and Research Centre, Department of Chemical Engineering, University of Bath, Bath, UK 7Institute for Mathematical Innovation and Department of Mathematical Sciences, University of Bath, Bath, UK 8Department of Water Management, Delft University of Technology, Delft, the Netherlands 9Institute of Environmental Science and Geography, University of Potsdam, Potsdam, Germany Correspondence: Thorsten Wagener ([email protected]) Received: 15 September 2020 – Discussion started: 5 October 2020 Revised: 17 April 2021 – Accepted: 23 April 2021 – Published: 20 May 2021 Abstract. The Water Informatics in Science and Engineer- 1 Introduction ing Centre for Doctoral Training (WISE CDT) offers a post- graduate programme that fosters enhanced levels of innova- tion and collaboration by training a cohort of engineers and The global water cycle consists of a complex web of interact- scientists at the boundary of water informatics, science and ing physical, biogeochemical, ecological and human systems engineering. -

Building an Intelligent Hydroinformatics Integration Platform for Regional Flood Inundation Warning Systems

water Editorial Building an Intelligent Hydroinformatics Integration Platform for Regional Flood Inundation Warning Systems Li-Chiu Chang 1,*, Fi-John Chang 2 , Shun-Nien Yang 1, I-Feng Kao 2, Ying-Yu Ku 1, Chun-Ling Kuo 3 and Ir. Mohd Zaki bin Mat Amin 4 1 Department of Water Resources and Environmental Engineering, Tamkang University, New Taipei City 25137, Taiwan; [email protected] (S.-N.Y.); [email protected] (Y.-Y.K.) 2 Department of Bioenvironmental Systems Engineering, National Taiwan University, Taipei 10617, Taiwan; [email protected] (F.-J.C.); [email protected] (I.-F.K.) 3 Water Resources Agency, Ministry of Economic Affairs, Taipei 10617, Taiwan; [email protected] 4 Water Resources and Climatic Change Research Centre, National Hydraulic Research Institute of Malaysia, 43300 Selangor, Malaysia; [email protected] * Corresponding author: [email protected]; Tel.: +886-2-26258523 Received: 30 November 2018; Accepted: 19 December 2018; Published: 21 December 2018 Abstract: Flood disasters have had a great impact on city development. Early flood warning systems (EFWS) are promising countermeasures against flood hazards and losses. Machine learning (ML) is the kernel for building a satisfactory EFWS. This paper first summarizes the ML methods proposed in this special issue for flood forecasts and their significant advantages. Then, it develops an intelligent hydroinformatics integration platform (IHIP) to derive a user-friendly web interface system through the state-of-the-art machine learning, visualization and system developing techniques for improving online forecast capability and flood risk management. The holistic framework of the IHIP includes five layers (data access, data integration, servicer, functional subsystem, and end-user application) and one database for effectively dealing with flood disasters. -

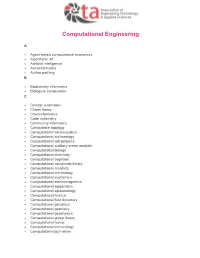

AETA-Computational-Engineering.Pdf

Computational Engineering A Agent-based computational economics Algorithmic art Artificial intelligence Astroinformatics Author profiling B Biodiversity informatics Biological computation C Cellular automaton Chaos theory Cheminformatics Code stylometry Community informatics Computable topology Computational aeroacoustics Computational archaeology Computational astrophysics Computational auditory scene analysis Computational biology Computational chemistry Computational cognition Computational complexity theory Computational creativity Computational criminology Computational economics Computational electromagnetics Computational epigenetics Computational epistemology Computational finance Computational fluid dynamics Computational genomics Computational geometry Computational geophysics Computational group theory Computational humor Computational immunology Computational journalism Computational law Computational learning theory Computational lexicology Computational linguistics Computational lithography Computational logic Computational magnetohydrodynamics Computational Materials Science Computational mechanics Computational musicology Computational neurogenetic modeling Computational neuroscience Computational number theory Computational particle physics Computational photography Computational physics Computational science Computational engineering Computational scientist Computational semantics Computational semiotics Computational social science Computational sociology Computational -

MH106748 Physiology-Based Virtual Reality Training for Social Skills In

MH106748 Physiology-based virtual reality training for social skills in schizophrenia 11/30/2018 PROTOCOL We will implement the adaptive social VR game and determine the optimal dose. After we construct each subject’s affective model with the machine-learning algorithm, we will test the effects of the adaptive VR games in 40 medicated outpatients with schizophrenia (SZ). Demographically matched control (CO) subjects (n=16) will only participate in the affective modeling and social and cognitive assessments but not in VR training (see Human Subjects section for details). To determine the optimal dose of VR training, it is necessary to conduct a valid assessment for social functioning pre- and post- treatment. Details of assessments are outlined below. Optimal dose of physiology-based, adaptive VR intervention is unknown but Tsang & Man53 reported that just ten 30-minute sessions of conventional, non-adaptive VR training was sufficient to obtain an improvement in SZ. In our study, we will examine the effects of social VR training on social and cognitive measures, pre- and post-treatment. At baseline (t1), the PI’s will use stratified randomization to assign SZ to two dose conditions: high vs. low. Stratified randomization is used to ensure that potential confounding factors (e.g. age, gender) are evenly distributed between groups. If possible, we will try to match the two groups on IQ, and the Social Functioning Scale79, a broad measure of social functioning, but if it cannot be done, we will statistically adjust for them when we test for treatment effects. Everybody will train twice a week but the low dose group will train for 30 mins and the high dose group for 2 x 30 mins per visit. -

Urban Hydroinformatics: Past, Present and Future

water Review Urban Hydroinformatics: Past, Present and Future C. Makropoulos 1,2,3 and D. A. Savi´c 1,4,* 1 KWR, Water Research Institute, Groningenhaven 7, 3433 PE Nieuwegein, The Netherlands; [email protected] 2 Department of Water Resources and Environmental Engineering, School of Civil Engineering, National Technical University of Athens, Iroon Politechniou 5, 157 80 Zografou, Athens, Greece 3 Department of Civil and Environmental Engineering, Norwegian University of Science and Technology, 7491 Trondheim, Norway 4 Centre for Water Systems, University of Exeter, Exeter EX44QF, UK * Correspondence: [email protected] Received: 20 May 2019; Accepted: 10 September 2019; Published: 20 September 2019 Abstract: Hydroinformatics, as an interdisciplinary domain that blurs boundaries between water science, data science and computer science, is constantly evolving and reinventing itself. At the heart of this evolution, lies a continuous process of critical (self) appraisal of the discipline’s past, present and potential for further evolution, that creates a positive feedback loop between legacy, reality and aspirations. The power of this process is attested by the successful story of hydroinformatics thus far, which has arguably been able to mobilize wide ranging research and development and get the water sector more in tune with the digital revolution of the past 30 years. In this context, this paper attempts to trace the evolution of the discipline, from its computational hydraulics origins to its present focus on the complete socio-technical system, by providing at the same time, a functional framework to improve the understanding and highlight the links between different strands of the state-of-art hydroinformatic research and innovation. -

New Skills in the Time of Hydroinformatics

Blokker, 8 nov 2018 1 New skills in the time of hydroinformatics drinking water quality conference 2018 KWR invests in strong links with Building the knowledge base valuable partners, in order to needed to provide drinking water build a solid knowledge base of world class quality. realise high quality reference projects 40 years of collective research covering source to tap, create marketable products institutional memory for the drinking water sector KWR Watercycle Research Institute Knowledge institute of and for Dutch & Flemish drinking water companies Blokker, 8 nov 2018 3 Hydroinformatics Hydroinformatics (…) concentrates on the application of ICTs in addressing the increasingly serious problems of the equitable and efficient use of water for many different purposes. (Wikipedia) ➔ More data ➔ More information ➔ More knowledge (?) Blokker, 8 nov 2018 4 New skills Computer skills? • Genetic algorithms • Artificial intelligence • Datamining • … Human skills? • Problem definition • System understanding • … Blokker, 8 nov 2018 5 Some examples in drinking water distribution OPTIMAL NETWORK DESIGN SENSORED NETWORK + REAL TIME MODELLING Janke, R., Uber, J., Hattchett, S., A. Gibbons, D. and Y. Wang, H. (2015). "Enhancements to the EPANET-RTX (Real-Time Analytics) Software Libraries-2015. ." Blokker, 8 nov 2018 6 van Thienen, P., Vertommen, I. and van Laarhoven, K. (2018). "Practical application of optimization techniques Optimal network design to drinking water distribution problems." Hydroinformatics International Conference, Palermo, Italy. -

Hydroinformatics for Scientific Knowledge, Informed Policy, and Effective Response AGENDA Page 4 - 11

CUAHSI Conference on Hydroinformatics July 29 - 31, 2019 Brigham Young University Provo, Utah Hydroinformatics for scientific knowledge, informed policy, and effective response AGENDA Page 4 - 11 BYU CAMPUS DIRECTIONS Page 12 KEYNOTE SPEAKERS Page 13 - 17 PLENARY LIGHTNING TALKS TABLE Page 18 SESSIONS OF Page 19 - 35 WORKSHOPS CONTENTS Page 36 - 39 TOWN HALL Page 40 POSTER PRESENTATIONS Page 41 - 54 2 ACKNOWLEDGEMENTS CUAHSI would like to acknowledge the contributions, support, and assistance from the following organizations: Conference on Hydroinformatics Program Committee Daniel P. Ames (Brigham Young University) Sara Larsen (Upper Colorado River Commission) Emilio Mayorga (University of Washington) Lauren Patterson (Duke University) Jon Pollak (CUAHSI) Jordan Read (U.S. Geological Survey) Dwane Young (U.S. Environmental Protection Agency) Conference Session Chairs, Speakers and Workshop Organizers Brigham Young University CUAHSI Member Institutions National Science Foundation 3 AGENDA Monday, July 29 7:00 - 8:30am Registration and Breakfast Engineering Building (2nd Fl) Welcome Address Engineering 8:45 - 9:00am Building Speaker: Jerad Bales (CUAHSI) (Room 204/206) 9:00 - 10:00am Keynote Engineering Building Incubating progress in hydrologic big data deep learning as a community (Room Speaker: Chaopeng Shen (Pennsylvania State University) 204/206) 10:00 - 10:30am Refreshment Break Engineering Building (2nd Fl) 10:30 - 11:00am Plenary Lightning Talks Engineering Building Leveraging water quality monitoring data through the water quality