Exploring the Effects of Higher Fidelity Display and Interaction for Virtual

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Towards Balancing Fun and Exertion in Exergames

Towards Balancing Fun and Exertion in Exergames: Exploring the Impact of Movement-Based Controller Devices, Exercise Concepts, Game Adaptivity and Player Modes on Player Experience and Training Intensity in Different Exergame Settings. Dissertation approved for the degree of Doctor of Natural Sciences (Dr. rer. nat.), by Anna Lisa Martin-Niedecken (née Martin) from Hadamar, at the Department of Human Sciences (Institute of Sport Science) of the Technische Universität Darmstadt. First reviewer: Prof. Dr. rer. medic. Josef Wiemeyer. Second reviewer: Prof. Dr. rer. nat. Frank Hänsel. Date of submission: 05.11.2020. Date of examination: 11.02.2021. Darmstadt, Technische Universität Darmstadt, D 17. Please cite this document as: URN: urn:nbn:de:tuda-tuprints-141864 URL: https://tuprints.ulb.tu-darmstadt.de/id/eprint/14186 This document is provided by tuprints, the e-publishing service of TU Darmstadt, http://tuprints.ulb.tu-darmstadt.de Year of publication of the dissertation on TUprints: 2021. The publication is licensed under the following Creative Commons license: Attribution, Share Alike 4.0 International (CC BY-SA 4.0) https://creativecommons.org/licenses/by-sa/4.0/ Declarations according to the doctoral regulations (Promotionsordnung) §8 Abs. -

Exergames Experience in Physical Education: a Review

PHYSICAL CULTURE AND SPORT. STUDIES AND RESEARCH DOI: 10.2478/pcssr-2018-0010 Exergames Experience in Physical Education: A Review. Authors: Cesar Augusto Otero Vaghetti (1, A-E), Renato Sobral Monteiro-Junior (2, A-E), Mateus David Finco (1, A-E), Eliseo Reategui (3, A-E), Silvia Silva da Costa Botelho (4, A-E). Authors’ contribution: A) conception and design of the study; B) acquisition of data; C) analysis and interpretation of data; D) manuscript preparation; E) obtaining funding. Affiliations: 1) Federal University of Pelotas, Brazil; 2) State University of Montes Carlos, Brazil; 3) Federal University of Rio Grande do Sul, Brazil; 4) Federal University of Rio Grande, USA. ABSTRACT Exergames are consoles that require a higher physical effort to play when compared to traditional video games. Active video games, active gaming, interactive games, movement-controlled video games, exertion games, and exergaming are terms used to define the kinds of video games in which the exertion interface enables a new experience. Exergames have added a component of physical activity to the otherwise motionless video game environment and have the potential to contribute to physical education classes by supplementing the current activity options and increasing student enjoyment. The use of exergames in schools has already shown positive results in the past through their potential to fight obesity. As for the pedagogical aspects of exergames, they have attracted educators’ attention due to the large number of games and activities that can be incorporated into the curriculum. In this way, the school must consider the development of a new physical education curriculum in which the key to promoting healthy physical activity in children and youth is enjoyment, using video games as a tool. -

Playstation Move Controller Oem + Sports Champions

Generated 28/09/2021 10:39. PlayStation Move Controller OEM + Sports Champions. Price: 208 zł. Availability: awaiting stock. Platform: PlayStation 3. Link: robson.pl/produkt,11668,playstation_move_controller_oem___sports_champions.html Address: ul. Powstańców Śląskich 106D/200, 01-466 Warszawa. Opening hours: Monday to Friday 9-17, Saturday 10-15. Account no.: 25 1140 2004 0000 3702 4553 9550. Phone: 224096600. Service phone: 224361966. The set contains: ● the game Sports Champions ● a PlayStation Move controller. PlayStation Move is a unique controller designed exclusively for the PlayStation 3 console. As the name suggests, the device focuses on games in which the player has to keep moving. Importantly, the image displayed on screen is available in High Definition quality. The controller is equipped with advanced motion sensors and was created to give players a previously unseen level of control precision. The device lets players be more effective in games by precisely detecting movement, angle and position in three-dimensional space. It easily reads both dynamic and subtle player movements (the swing of a tennis racket or the stroke of a paintbrush). PlayStation Move works very well with the PlayStation Eye camera, which records the player's voice and silhouette. Importantly, PlayStation Move is wireless. It has a built-in lithium-ion battery and Bluetooth technology. In Sports Champions we take part in many varied sporting events in which we have to show considerable dexterity and manual skill. Bear in mind that the game is designed for PlayStation Move controllers, so it cannot be played with a standard gamepad. -

Playstation Games

The Video Game Guy, Booths Corner Farmers Market - Garnet Valley, PA 19060 (302) 897-8115 www.thevideogameguy.com Playstation Games, system: Playstation, listed as game (genre): 007 Racing (Racing); 101 Dalmatians II Patch's London Adventure (Action & Adventure); 102 Dalmatians Puppies to the Rescue (Action & Adventure); 1Xtreme (Extreme Sports); 2Xtreme (Extreme Sports); 3D Baseball (Baseball); 3Xtreme (Extreme Sports); 40 Winks (Action & Adventure); Ace Combat 2 (Action & Adventure); Ace Combat 3 Electrosphere (Other); Aces of the Air (Other); Action Bass (Sports); Action Man Operation EXtreme (Action & Adventure); Activision Classics (Arcade); Adidas Power Soccer (Soccer); Adidas Power Soccer 98 (Soccer); Advanced Dungeons and Dragons Iron and Blood (RPG); Adventures of Lomax (Action & Adventure); Agile Warrior F-111X (Action & Adventure); Air Combat (Action & Adventure); Air Hockey (Sports); Akuji the Heartless (Action & Adventure); Aladdin in Nasiras Revenge (Action & Adventure); Alexi Lalas International Soccer (Soccer); Alien Resurrection (Action & Adventure); Alien Trilogy (Action & Adventure); Allied General (Action & Adventure); All-Star Racing (Racing); All-Star Racing 2 (Racing); All-Star Slammin D-Ball (Sports); Alone In The Dark One Eyed Jack's Revenge (Action & Adventure) -

Emerging Leaders 2011 Team G Core Genres to Collect

Emerging Leaders 2011 Team G Core Genres to Collect The fluid nature of the videogame industry renders any core genre list outdated very quickly, which makes any list extremely difficult to compile. As a result, we concentrate on offering a genre-level list of core games, with the caution to always remain flexible in offerings. We focus on the following five genres: Social, Narrative, Action, Knowledge, and Strategy. Social: Games that require some degree of socialization. o Party games: Games that require two or more players. • Wii: Mario Party, Super Monkey Ball, Rayman Raving Rabbids • PS3: Little Big Planet, Lemmings • XBOX 360: Portal o Strategy games: Games with an emphasis on problem solving. • Wii: Battalion Wars 2, Pikmin 2 • PS3: Record of Agarest War • XBOX 360: Command and Conquer, Civilization Revolution Narrative: Games that have a storyline and require a player to embody a character in context. o Role-Playing Games (RPGs): Games that use character development as the main part of the game. Combat can be turn-based or real-time action-based. A key part of the RPG is for the character to increase their inventory, wealth, or statistics as the game progresses. • Wii: Tales of Symphonia • PS3: Final Fantasy, Fallout, Dragon Age, Elder Scrolls • XBOX 360: Fable III, Dungeon Siege, Mass Effect Action: Games that require movement, quick thinking, and reflexes. o Rhythm games: Gameplay uses music as timing. • Guitar Hero, Rock Band, Dance Dance Revolution o Wii, Kinect, Move games: Games that are especially designed to use the new system. -

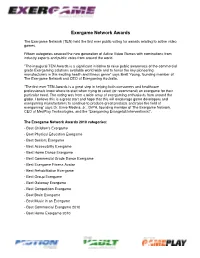

Exergame Network Awards

Exergame Network Awards The Exergame Network (TEN) held the first ever public voting for awards relating to active video games. Fifteen categories covered the new generation of Active Video Games with nominations from industry experts and public votes from around the world. “The inaugural TEN Awards is a significant initiative to raise public awareness of the commercial grade Exergaming solutions available worldwide and to honor the key pioneering manufacturers in this exciting health and fitness genre,” says Brett Young, founding member of The Exergame Network and CEO of Exergaming Australia. “The first ever TEN Awards is a great step in helping both consumers and healthcare professionals know where to start when trying to select (or recommend) an exergame for their particular need. The voting was from a wide array of exergaming enthusiasts from around the globe. I believe this is a great start and hope that this will encourage game developers and exergaming manufacturers to continue to produce great products and raise the field of exergaming,” says Dr. Ernie Medina, Jr., DrPH, founding member of The Exergame Network, CEO of MedPlay Technologies, and the “Exergaming Evangelist/Interventionist”. The Exergame Network Awards 2010 categories: - Best Children's Exergame - Best Physical Education Exergame - Best Seniors Exergame - Best Accessibility Exergame - Best Home Dance Exergame - Best Commercial Grade Dance Exergame - Best Exergame Fitness Avatar - Best Rehabilitation Exergame - Best Group Exergame - Best Gateway Exergame - Best Competition Exergame - Best Brain Exergame - Best Music in an Exergame - Best Commercial Exergame 2010 - Best Home Exergame 2010 1. Best Children’s Exergame: award for the exergame that gets younger kids moving with active video gaming. Nominees: - Dance Dance Revolution Disney Grooves by Konami - Wild Planet Hyper Dash - Atari Family Trainer - Just Dance Kids by Ubisoft - Nickelodeon Fit by 2K Play. Winner: Dance Dance Revolution Disney Grooves by Konami 2. -

Sports Champions 2

Generated 24/09/2021 19:55. SPORTS CHAMPIONS 2. Price: 58 zł. Availability: awaiting stock. Platform: PlayStation 3. Link: robson.pl/produkt,15024,sports_champions_2.html Address: ul. Powstańców Śląskich 106D/200, 01-466 Warszawa. Opening hours: Monday to Friday 9-17, Saturday 10-15. Account no.: 25 1140 2004 0000 3702 4553 9550. Phone: 224096600. Service phone: 224361966. Sports Champions 2 is the second installment of the exceptionally popular collection of arcade-style sports games. The title is intended exclusively for owners of the PlayStation Move motion controller. It was produced by the American studios Sony San Diego and Zindagi Games, whose portfolio also includes other games made for Move, such as the action-adventure Medieval Moves: Deadmund's Quest (Polish title: Medieval Moves: Wyprawa Trupazego). During play, players can test their skills in six varied sports disciplines: golf, downhill skiing, bowling, boxing, tennis and archery. Note that only the last of these events was carried over from the first game, so even Sports Champions veterans should find something for themselves in the sequel. On-screen characters are controlled with PlayStation Move. Boxing, skiing and archery support two controllers, but a second controller is not required to play. Sports Champions 2 offers four game modes, three of which are known from the previous installment. In Free Play we can throw ourselves into competition in a chosen discipline, in Challenge we try to meet increasingly difficult tasks, and in Champion Cup we take part in multi-stage competitions against the computer AI or live opponents (up to three). -

Did Too Much Wii Cause Your Patient's Injury?

Did too much Wii cause your patient’s injury? Motion-controlled game consoles like Wii may be used to play virtual sports, but the injuries associated with them are real. Here’s what to watch for, and a handy table linking specific games to particular injuries. Dorothy A. Sparks, MD; Lisa M. Coughlin, MD; Daniel M. Chase, MD. Department of General Surgery, Danbury Hospital, University of Vermont College of Medicine, Danbury, Conn (Dr. Sparks); Department of Surgery, University of Toledo Medical Center, Toledo, Ohio (Dr. Coughlin); Department of Surgery, Hoopeston Community Memorial Hospital, Hoopeston, Ill (Dr. Chase). The authors reported no potential conflict of interest relevant to this article. This article is an expansion of a poster session presented at the 12th annual Northeastern Ohio Universities College of Medicine Department of Surgery Resident Research Day in May 2009 and at the American College of Preventive Medicine annual meeting. Practice recommendations: › Ask patients with repetitive motion injuries (RMIs) whether they use interactive game consoles and, if so, how much time they spend playing virtual sports each day. The release of the Wii, Nintendo’s 4th generation gaming console, in 2006 revolutionized the video game industry. By March 31, 2010, more than 70 million units had been sold worldwide, earning Wii the title of “fastest-selling game console of all time.” (1-3) Today, there are several game consoles that, like Wii, allow the user not only to push buttons or move levers, but to control the game using physical movements (TABLE 1). And -

Sedentary Gaming Gets a Shot in the Arm with Move 16 September 2010, by RON HARRIS , Associated Press Writer

Sedentary gaming gets a shot in the arm with Move. 16 September 2010, by Ron Harris, Associated Press Writer. Photo caption: In this Tuesday, Sept. 14, 2010 photo, the Sony PlayStation Move, a new wireless controller for the PlayStation 3 video game console, is shown. The Move device allows for precise motion control over characters and objects on the screen during game play by simply waving it. (AP Photo/John Bazemore) Sony Corp. gets gamers off the couch with its new motion controller, the PlayStation Move. The Move is a sensitive device with extremely accurate character and object control. ... end of the motion controller. An internal gyroscope and accelerometer help determine the exact position you're holding the Move and how fast you're swinging it in any direction. The main "Move button" is most comfortably accessed with your thumb, and it's surrounded by the usual PlayStation controller action buttons. The placement of the buttons and the feel of the controller seem comfortable and intuitive. The Move also sports a trigger button opposite the Move button for additional control. "Sports Champions" (SCEA, $39.99, rated E10-plus) includes disc golf, table tennis, fencing, archery, beach volleyball, bocce and a gladiator duel. I compared several of the sports with my experience on the "Wii Sports Resort" title. Disc golf on the Wii is fun, but disc golf on PS3 using Move is a fuller experience. It's stunningly realistic, with challenging built-in opponents and detailed terrain. One minute I was scrambling for par through a cave entrance guarded by trees and -

A Comparison Between Professional Traditional Sports and Electronic Sports

A comparison between professional traditional sports and electronic sports. Mikko Lievetmursu. Bachelor’s Thesis, Spring 2018, Business Information Technology, Oulu University of Applied Sciences. ABSTRACT Oulu University of Applied Sciences, Business Information Technology. Author(s): Mikko Lievetmursu. Title of Bachelor’s thesis: A comparison between professional traditional sports and electronic sports. Supervisor(s): Teppo Räisänen. Term and year of completion: Spring 2018. Number of pages: 45. This research was written at Oulu University of Applied Sciences by Mikko Lievetmursu, and the thesis focuses on the differences between professional traditional sports and electronic sports. The objective of the thesis is to understand the main differences and similarities in athletes’ professional growth on both sides as well as on an organizational business level. The background for the thesis is the exponential growth of the e-sports industry in recent years, which means that e-sports is becoming a real sport industry alongside traditional sports; comparing them can provide valuable knowledge to both industries and to the client for the thesis at Oulu University of Applied Sciences. The author has also followed both scenes for years and has a great deal of interest in both sports. This research achieved a broad understanding of how professional traditional sports and professional electronic sports differ on their respective sides but also how they are very similar. It also finds additional discussion topics and problems that electronic sports are still facing and what direction electronic sports is heading. Additionally, this research shows how young electronic sports are historically and how they are still developing into a serious sport within the community. -

Games for Health IQP Final Report Draft 5, March 6th, 2014: Guidelines for Appealing Exergames

Games for Health IQP Final Report Draft 5, March 6th, 2014. Guidelines for Appealing Exergames. Interactive Qualifying Project Report completed in partial fulfillment of the Bachelor of Science degree at Worcester Polytechnic Institute, Worcester, MA. Submitted to: Professor Emmanuel Agu, Professor Bengisu Tulu. Authors: Amorn Chokchaisiripakdee, Nuttaworn Sujumnong, Latthapol Krachonkitkosol. Table of Contents: Abstract; Chapter 1: Introduction; Project objectives; Chapter 2: Background; Chapter 3: Methodology; 3.1 Phase 1: Personal game review; Selected Exergames