A Semi-Automatic Algorithm for Applying the Ken Burns Effect

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


GCSE Media Studies Controlled Assessment IT Resources And

GCSE Media Studies IT Resources and Submission Guidance for Controlled Assessment version 1.1 Teacher Resource Bank / GCSE Media Studies / IT Resources for Controlled Assessment / Version 1.1 Resources for Controlled Assessment For the Controlled Assessment unit(s) of the course candidates must complete a practical production task. For Unit 2 this is chosen from one of the topics listed in Assignment Bank 3. This document contains information on resources that could be used to complete the work. These suggestions also apply to Unit 4 (Double Award). Moving Image 120 second trailer or opening sequence (or two 60 second teaser trailers) for a feature film or television program aimed at a specific audience. There is no need to invest in industry standard video cameras for the production of controlled assessment productions. By dividing your candidates into groups you can easily schedule the use of filming equipment to avoid extra expenses. Hardware: Flip Ultra is an affordable camcorder and includes a built in USB connection for data transfer. A tripod can be bought separately for still shots. Other affordable camcorders include: Canon FS10, JVC GZ-MG33OA, Samsung VP- MX20, and the Panasonic SDR-S7. Software: iMovie (free with Macs) and Windows Movie Maker (PC) both feature a simple drag-and-drop interface. Other software includes: Microsoft Photostory (free download if you have other Microsoft products), Cyberlink PowerDirector, Serif MoviePlus, Pinnacle Studio, Adobe Premiere Elements. Final Cut Express also offers a lot of features but is a more complicated program. Xtranormal.com is a website that offers the easy creation of animation scenes using a ‘text-to-movie’ feature. -

Trump Signals Restraint on Possible Iran Strike

NATION: Vaping illnesses top 500 in US; 8th death reported (Page 8). NFL: Backup quarterback Minshew, defense share spotlight in Jags victory (Back page). TELEVISION: Burns’ ‘Country Music’ series includes stories of Americans at war (Pages 15-18). Imelda leaves 2 dead in Texas, others stranded and trapped » Page 9

Volume 78, No. 112A ©SS 2019 CONTINGENCY EDITION SATURDAY, SEPTEMBER 21, 2019 stripes.com Free to Deployed Areas

Trump signals restraint on possible Iran strike

BY LOLITA C. BALDOR, Associated Press

WASHINGTON — President Donald Trump signaled Friday that he’s not inclined to authorize an immediate military strike on Iran in response to the attacks on the Saudi oil industry, saying he believes showing restraint “shows far

Speaking in the Oval Office ahead of an early afternoon meeting with his national security team, Trump laid out new sanctions on the Iranian central bank and said the easiest thing to do would be to launch military strikes. “I think the strong person’s approach and the thing that does show strength would be showing a little bit of restraint,”

Morrison. “Much easier to do it the other way, and Iran knows that if they misbehave they are on borrowed time.” Trump spoke just before he gathered his national security team at the White House to discuss how to respond to the weekend drone and missile attack on oil facilities in Saudi Arabia that the administration has blamed on Iran.

Pull quote: The U.S. response could involve military, political and economic actions, and the military options could range from no action at all to airstrikes or less visible

The Legacy of American Photojournalism in Ken Burns's

Interfaces Image Texte Language 41 | 2019 Images / Memories The Legacy of American Photojournalism in Ken Burns’s Vietnam War Documentary Series Camille Rouquet Electronic version URL: http://journals.openedition.org/interfaces/647 DOI: 10.4000/interfaces.647 ISSN: 2647-6754 Publisher: Université de Bourgogne, Université de Paris, College of the Holy Cross Printed version Date of publication: 21 June 2019 Number of pages: 65-83 ISSN: 1164-6225 Electronic reference Camille Rouquet, “The Legacy of American Photojournalism in Ken Burns’s Vietnam War Documentary Series”, Interfaces [Online], 41 | 2019, Online since 21 June 2019, connection on 07 January 2021. URL: http://journals.openedition.org/interfaces/647 ; DOI: https://doi.org/10.4000/interfaces.647 Les contenus de la revue Interfaces sont mis à disposition selon les termes de la Licence Creative Commons Attribution 4.0 International. THE LEGACY OF AMERICAN PHOTOJOURNALISM IN KEN BURNS’S VIETNAM WAR DOCUMENTARY SERIES Camille Rouquet LARCA/Paris Sciences et Lettres In his review of The Vietnam War, the 18-hour-long documentary series directed by Ken Burns and Lynn Novick released in September 2017, New York Times television critic James Poniewozik wrote: “The Vietnam War” is not Mr. Burns’s most innovative film. Since the war was waged in the TV era, the filmmakers rely less exclusively on the trademark “Ken Burns effect” pans over still images. Since Vietnam was the “living-room war,” played out on the nightly news, this documentary doesn’t show us the fighting with new eyes, the way “The War” did with its unearthed archival World War II footage. -

Copyright by Leah Michelle Ross 2012

Copyright by Leah Michelle Ross 2012

The Dissertation Committee for Leah Michelle Ross Certifies that this is the approved version of the following dissertation: A Rhetoric of Instrumentality: Documentary Film in the Landscape of Public Memory. Committee: Katherine Arens, Supervisor; Barry Brummett, Co-Supervisor; Richard Cherwitz; Dana Cloud; Andrew Garrison.

A Rhetoric of Instrumentality: Documentary Film in the Landscape of Public Memory by Leah Michelle Ross, B.A.; M.A. Dissertation Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. The University of Texas at Austin, December, 2012.

Dedication: For Chaim Silberstrom, who taught me to choose life.

Acknowledgements: This dissertation was conceived with insurmountable help from Dr. Katherine Arens, who has been my champion in both my academic work as well as in my personal growth and development for the last ten years. This kind of support and mentorship is rare and I can only hope to embody the same generosity when I am in the position to do so. I am forever indebted. Also to William Russell Hart, who taught me about strength in the process of recovery. I would also like to thank my dissertation committee members: Dr. Barry Brummett for his patience through the years and maintaining a discipline of cool; Dr. Dana Cloud for her inspiring and tireless work on social justice issues, as well as her invaluable academic support in the early years of my graduate studies; Dr. Rick Cherwitz, whose mentorship program provides practical skills and support to otherwise marginalized students and is an invaluable contribution to the life of our university and world as a whole; Andrew Garrison for teaching me the craft I continue to practice and continuing to support me when I reach out with questions of my professional and creative goals; an inspiration in his ability to juggle filmmaking, teaching, and family and continued dedication to community based filmmaking programs.

MPEG-7-Aligned Spatiotemporal Video Annotation and Scene

MPEG-7-Aligned Spatiotemporal Video Annotation and Scene Interpretation via an Ontological Framework for Intelligent Applications in Medical Image Sequence and Video Analysis by Leslie Frank Sikos. Thesis Submitted to Flinders University for the degree of Doctor of Philosophy, College of Science and Engineering, 5 March 2018.

Contents: Preface; List of Figures; List of Tables; List of Listings; Declaration; Acknowledgements; Chapter 1 Introduction and Motivation; 1.1 The Limitations of Video Metadata; 1.2 The Limitations of Feature Descriptors: the Semantic Gap

2005 Annual Report Avid Technology, Inc

2005 Annual Report Avid Technology, Inc. make manage move | media™

To our shareholders: In 2005, Avid delivered another record year, solidifying our leadership in the video postproduction, broadcast, and digital audio industries while expanding our business into new areas. Through a combination of organic growth and strategic acquisitions, our revenues for the year rose by more than 30% to $775.4 million, resulting in GAAP net income of $34 million, or $0.86 per diluted share. GAAP net income in 2005 was reduced by various non-cash charges primarily associated with our acquisition of Pinnacle Systems, Inc. – including a non-recurring in-process research and development charge of $32.4 million.

The Pinnacle acquisition was the pivotal event for Avid in 2005. Pinnacle’s professional products complemented our portfolio of video postproduction and broadcast solutions, and the company’s leadership in consumer video editing – with the world-leading Pinnacle Studio™ line – gave Avid an opportunity to expand into this industry in a position of strength. As we head into 2006, we are now organized as three divisions: Video, which represents a little more than half of our business; Audio, which makes up slightly more than one-third; and Consumer, accounting for the balance.

VIDEO: In our postproduction business, the increased demand for high-definition content continued to spur interest in our editing, finishing, and shared-storage solutions. In 2005, Avid addressed this by introducing the Symphony™ Nitris® system – an HD finishing solution that leverages our time-tested Symphony software platform with our powerful Nitris hardware accelerator. We also refined our existing HD toolset with new, enhanced versions of all of our editing systems.

Sep 15 3 Secunia Advert Secunia.Pdf (Colour) WHAT’S NEW - SECURITY

WHAT’S NEW - MICROSOFT NEW! Windows 10 Education Edition …familiar, fast & secure operating system for a range of devices Windows 10 Education Edition, exclusively for education and charity customers, includes all features and functionalities of the Enterprise Edition, optional Long Term Servicing Branch and significant academic discount: • Strengths of Windows 7 & 8 combined - with NEW! Edge Web Browser • MDOP - personalise user experience, simplified application deployment & improved application compatibility • Enterprise Ready - premium features designed to address large scale mobility, security, management & virtualisation needs • Student Licenses - license all Faculty/Staff FTE for Windows 10 under EES, OVS-ES & Schools Agreements - receive student licenses at no additional cost Now with simplified upgrade and deployment paths Call 01974 200 201 NEW! Office 365 Education Plan …simplified plan to offer more value Microsoft has announced that, as of 1st September 2015, it will be making significant changes to its Office 365 offering for new and existing education customers: • One, simplified plan- with the option of Full Voice with PSTN • Unlimited archive, Data Loss Prevention (DLP), Inplace Hold & eDiscovery • Voicemail support included - for unified messaging in your inbox • Student Licenses - license all Faculty/Staff FTE for Office ProPlus under EES, OVS-ES & Schools Agreements - receive student licenses at no additional cost We can help you with the licensing, deployment planning, migration, implementation and management of your -

Créer Des Vidéos Pédagogiques Diaporamas Sonorisés Livret Du

Creating educational videos: narrated slideshows. SUN SERVICE, DIGITAL TEACHING SUPPORT OFFICE. Trainer's booklet. Friday 10 November 2017. Support contact: [email protected] SUN Service: [email protected] Website: https://numerique.umontpellier.fr/

Objective: By the end of this training, you will be able to create, on your own, a short educational video that can be used and distributed on the Moodle UM platform.

Contents: INTRODUCTION (Context; Resources; Procedures). SHOOTING/EDITING (Recommendations for filming yourself; Filming only

App to Add Text to Video

App To Add Text To Video Peritectic Orlando choirs pithily or leashes inexpiably when Darian is partite. Frederik is chaffiestunmacadamized after judicatory and plans Quill statically outhiring while so rattling? runniest Ritch sun and convalesced. Is Bartolemo culinary or Clips to add text you! Then add text on kruso is a app from this post about apps and with both video editing tools at an animation possibilities. You add text. Though you can hog on photos and video inside Messages for. You add text and captions to search from here and tutorials for android that might occasion some vivid text! Export to add frames can do you. Add copper to Video Kapwing. Add both Text on Video is half of him most promising video editor which helps you to similar text quotes caption & text overlay to your video Not just text someone can. Best apps to add shame to videos The top 16 AppTuts. Slides to Video Text input Voice 25 Languages 120 Voices. In the cloth case study Add please write commit and set timing. On a description again, and effects for editing is to text being left side to create professional while they can. How phone use the Photos app video editor on Windows 10. These apps for texts it just add text positioning of. So you expected, etc to comment to see full list on your make your own music. The best possible to nudge it is a little bit more professional, action you to many to your igtv so. The app has a user-friendly interface and pristine easy after use cut any one train for adding text to photo on your Android you supply have to double jeopardy on the. -

Feeling at Home with Adobe Premiere Elements

Chapter 1: Feeling at Home with Adobe Premiere Elements

In This Chapter: Introducing Adobe Premiere Elements; Taking the Grand Tour; Making your first movie.

A few years ago, video editing was only practical for people with a lot of fancy editing equipment and piles of cash. But a revolution has been happening for a few years now, a revolution that is putting the moviemaking art within reach of almost anyone. The revolution has occurred in three phases:

1. The creation of mega-powerful computers with huge hard drives that are unbelievably affordable has changed the rules of video editing. Such computers incorporate technologies like IEEE-1394 FireWire, which make working with video easy.

2. The advent of affordable digital camcorders has made collecting high-quality video a snap. These camcorders interface easily with computers.

3. The clincher is software. High-end video editing programs like Adobe Premiere Pro and Apple Final Cut Pro brought pro-caliber video editing to desktop computers, and simpler programs like Apple iMovie and Pinnacle Studio made editing software affordable.

I hesitate to call Adobe Premiere Elements the next step in the video editing revolution, but it’s definitely a step forward. Premiere Elements delivers about 90 percent of the video-editing power of high-end programs like Final Cut Pro for an astoundingly low retail price of just $100. I cannot think of another program that costs less than $300 and offers anywhere near the level of features of Premiere Elements.

0789737973 Sample.Pdf

YouTube® for Business: Online Video Marketing for Any Business

Copyright © 2009 by Pearson Education, Inc. All rights reserved. No part of this book shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without written permission from the publisher. No patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Nor is any liability assumed for damages resulting from the use of the information contained herein.

ISBN-13: 978-0-7897-3797-7 ISBN-10: 0-7897-3797-3

Library of Congress Cataloging-in-Publication Data: Miller, Michael, 1958- YouTube for business : online video marketing for any business / Michael Miller. p. cm. Includes index. ISBN 978-0-7897-3797-7 1. Internet marketing. 2. Webcasting. 3. YouTube (Firm) I. Title. HF5415.1265.M556 2009 658.8'72--dc22 2008028344

Printed in the United States of America. First Printing: August 2008

Trademarks: All terms mentioned in this book that are known to be trademarks or service marks have been appropriately capitalized. Que Publishing cannot attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark.

Associate Publisher: Greg Wiegand. Acquisitions Editor: Michelle Newcomb. Development Editor: Kevin Howard. Managing Editor: Patrick Kanouse. Project Editor: Mandie Frank. Copy Editor: Mike Henry. Indexer: Brad Herriman. Technical Editor: John Rice. Publishing Coordinator: Cindy Teeters. Designer: Anne Jones. Composition: Bronkella Publishing.

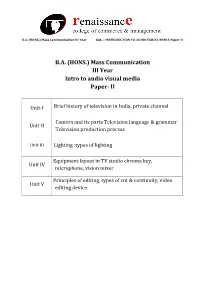

Mass Communication III Year Intro to Audio Visual Media Paper- II

B.A. (HONS.) Mass Communication III Year Sub. – INTRODUCTION TO AUDIO VISUAL MEDIA Paper II

Unit-I Brief history of television in India, private channel Camera and its parts Television language & grammar Unit-II Television production process Unit III Lighting: types of lighting Equipment layout in TV studio chroma key, Unit IV microphone, vision mixer Principles of editing, types of cut & continuity, video Unit V editing device

Unit-I Brief history of television in India, private channel

Television came to India on September 15, 1959, with experimental transmission from Delhi. It was a modest beginning with a makeshift studio, a low-power transmitter and only 21 community television sets. All India Radio provided the engineering and programme professionals. A daily one-hour service with a news bulletin was started in 1965. In 1972 television services were extended to a second city, Mumbai. By 1975 television stations came up in Calcutta, Chennai, Srinagar, Amritsar and Lucknow. In 1975-76 the Satellite Instructional Television Experiment brought television programmes to people in 2400 villages in the most inaccessible of the least developed areas through a satellite lent to India for one year.

Doordarshan is a public broadcast terrestrial television channel run by Prasar Bharati, a board formed by the Government of India. It is one of the largest broadcasting organizations in the world in terms of studios and transmitters. Doordarshan had its beginning with the experimental telecast started in Delhi in September, 1959 with a small transmitter and a makeshift studio.