Full Stack Javascript Engineering

Recommended publications

Uppy Is a Sleek, Modular JavaScript File Uploader

Uppy (npm v1.27.0)

Uppy is a sleek, modular JavaScript file uploader that integrates seamlessly with any application. It's fast, easy to use and lets you worry about more important problems than building a file uploader.

- Fetch files from local disk, remote URLs, Google Drive, Dropbox, Box, Instagram or snap and record selfies with a camera
- Preview and edit metadata with a nice interface
- Upload to the final destination, optionally process/encode

Uppy is being developed by the folks at Transloadit, a versatile file encoding service.

Example

Code used in the above example:

```js
// Core plus the plugins used in this example (Uppy 1.x, CommonJS)
const Uppy = require('@uppy/core')
const Dashboard = require('@uppy/dashboard')
const GoogleDrive = require('@uppy/google-drive')
const Instagram = require('@uppy/instagram')
const Webcam = require('@uppy/webcam')
const Tus = require('@uppy/tus')

const uppy = new Uppy({ autoProceed: false })
  // Open the Dashboard when the #select-files element is clicked
  .use(Dashboard, { trigger: '#select-files' })
  // Remote providers are proxied through a Companion server
  .use(GoogleDrive, { target: Dashboard, companionUrl: 'https://companion.uppy.io' })
  .use(Instagram, { target: Dashboard, companionUrl: 'https://companion.uppy.io' })
  .use(Webcam, { target: Dashboard })
  // Resumable uploads via the tus protocol
  .use(Tus, { endpoint: 'https://tusd.tusdemo.net/files/' })
  .on('complete', (result) => {
    console.log('Upload result:', result)
  })
```

Try it online or read the docs for more details on how to use Uppy and its plugins.

Features

- Lightweight, modular plugin-based architecture, easy on dependencies

GitMate: Let's Write Good Code!

Artwork by Ankit, published under CC0.

Vision

Today's world is driven by software. We are able to solve increasingly complex problems with only a few lines of code. However, with increasing complexity, code quality becomes an issue that needs to be dealt with to ensure that the software works as intended. Code reviews have become a popular tool to keep quality up and problems solvable. They account for at least 30% of the time spent on developing a software product.

Static code analysis and code reviews are converging areas. Still, they are treated separately, and thus their full synergetic potential remains unused. With GitMate, we want to reinvent the code review process. Our product will integrate static code analysis directly into the code review process to reduce the number of bugs while leaving more time for the development of your favorite features.

Our product, the interactive code review bot "GitMate", is not only an easily usable static code analyser but also actively supports the development process without any overhead for the developer. GitMate is as easy to use and interact with as a colleague next door, and it is unique in its ability to fix bugs by itself. It thereby reduces the reviewer's workload, allowing them to focus on semantic problems that cannot be solved automatically.

Product

GitMate is a code review bot. It uses coala [1] to perform static code analysis on GitHub Pull Requests [2]. It searches committed changes for possible problems and drops comments right in the GitHub review user interface, effectively following the same workflow as a human reviewer.
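
GitMate's own code is not shown here. As a rough illustration of commenting only on committed changes, the sketch below parses a git-style unified diff to find which new-file lines a pull request adds, then keeps only the analyser findings that point at those lines. The `{ file, line, message }` finding shape and the names `addedLines` and `commentsForChangedLines` are hypothetical, not coala's or GitMate's API.

```javascript
// Hypothetical helpers illustrating "comment only on changed lines";
// not GitMate's or coala's actual implementation.

// Map each file in a unified diff to the set of new-file line numbers it adds.
function addedLines(diffText) {
  const added = new Map();
  let file = null;
  let newLine = 0;
  for (const line of diffText.split('\n')) {
    if (line.startsWith('+++ ')) {
      // "+++ b/path" names the file as it looks after the change.
      file = line.slice(4).replace(/^b\//, '');
      added.set(file, new Set());
    } else if (line.startsWith('@@')) {
      // Hunk header "@@ -oldStart,oldLen +newStart,newLen @@".
      const match = /\+(\d+)/.exec(line);
      newLine = match ? Number(match[1]) : 0;
    } else if (file === null) {
      // Skip "diff --git", "index" and "---" lines before the first file header.
      continue;
    } else if (line.startsWith('+')) {
      added.get(file).add(newLine);
      newLine += 1;
    } else if (line.startsWith(' ')) {
      // Context line: present in both versions.
      newLine += 1;
    }
    // Lines starting with '-' exist only in the old file, so the
    // new-file counter does not move.
  }
  return added;
}

// Keep only the findings that land on lines the change adds.
function commentsForChangedLines(findings, diffText) {
  const added = addedLines(diffText);
  return findings.filter((f) => (added.get(f.file) || new Set()).has(f.line));
}
```

Dropping findings on untouched lines keeps a bot from flooding a review with pre-existing problems; whether a real bot should also report those is a separate design choice.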

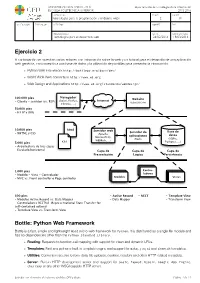

Exercise 2. Bottle: Python Web Framework

Universidad San Pablo - CEU, Department of Information Technology, Escuela Politécnica Superior, 2015-2016. Subject: Technologies for Web Programming and Design I. Year: 2. Group: 01. Web development technologies. Date: 24/02/2016. Due date: 18/03/2016.

Exercise 2

Below are several links with information about the web, and a tutorial on developing a generic web application that connects to a database and uses templates to present information.

‣ Python Web Framework http://bottlepy.org/docs/dev/
‣ World Wide Web consortium http://www.w3.org
‣ Web Design and Applications http://www.w3.org/standards/webdesign/

[Figure: the web seen from different altitudes. Components: browser (Safari, Firefox, Chrome, ...), web server (Apache, Microsoft IIS, WEBrick, ...), application server (Rack), database (SQLite, Postgres, ...); presentation, logic and persistence tiers; controllers, models and views. 100,000 ft: client-server (vs. P2P). 50,000 ft: HTTP and URIs. 10,000 ft: XHTML and CSS. 5,000 ft: three-tier architecture, horizontal scaling. 1,000 ft: Model-View-Controller; MVC vs. Front Controller or Page Controller. 500 ft: Active Record vs. Data Mapper models; RESTful controllers (Representational State Transfer for self-contained actions); Template View vs. Transform View.]

Bottle: Python Web Framework

Bottle is a fast, simple and lightweight WSGI micro web-framework for Python. It is distributed as a single-file module and has no dependencies other than the Python Standard Library.
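
The 500 ft level of the diagram contrasts Active Record and Data Mapper models. A minimal JavaScript sketch of the difference, assuming an in-memory `Map` stands in for the database and using hypothetical class names (`ActiveUser`, `User`, `UserMapper`): in Active Record the object saves and finds itself, while in Data Mapper a plain object is persisted by a separate mapper.

```javascript
// Hypothetical in-memory "table" standing in for a real database.
const activeTable = new Map();
let activeNextId = 1;

// Active Record: each object wraps one row and persists itself.
class ActiveUser {
  constructor(name, id = null) {
    this.id = id;
    this.name = name;
  }
  // Insert or update this object's own row.
  save() {
    if (this.id === null) this.id = activeNextId++;
    activeTable.set(this.id, { id: this.id, name: this.name });
    return this;
  }
  // Finders live on the class, next to the domain logic.
  static find(id) {
    const row = activeTable.get(id);
    return row ? new ActiveUser(row.name, row.id) : null;
  }
}

// Data Mapper: the domain object knows nothing about storage...
class User {
  constructor(name, id = null) {
    this.id = id;
    this.name = name;
  }
}

// ...and a separate mapper moves data between objects and the store.
class UserMapper {
  constructor(table) {
    this.table = table;
    this.nextId = 1;
  }
  save(user) {
    if (user.id === null) user.id = this.nextId++;
    this.table.set(user.id, { id: user.id, name: user.name });
    return user;
  }
  find(id) {
    const row = this.table.get(id);
    return row ? new User(row.name, row.id) : null;
  }
}
```

For example, `new ActiveUser('Ada').save()` persists itself, whereas `new UserMapper(new Map()).save(new User('Grace'))` leaves `User` free of any persistence code, which is the trade-off the slide's "Active Record vs. Data Mapper" item refers to.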

WEB2PY Enterprise Web Framework (2Nd Edition)

WEB2PY Enterprise Web Framework / 2nd Ed.
Massimo Di Pierro

Copyright ©2009 by Massimo Di Pierro. All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. Requests to the Copyright owner for permission should be addressed to: Massimo Di Pierro, School of Computing, DePaul University, 243 S Wabash Ave, Chicago, IL 60604 (USA).

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

Library of Congress Cataloging-in-Publication Data: WEB2PY: Enterprise Web Framework

Printed in the United States of America.

Mining DEV for Social and Technical Insights About Software Development

Maria Papoutsoglou (Aristotle University of Thessaloniki, Greece; University of Cyprus, Cyprus), Johannes Wachs (Vienna University of Economics and Business, Austria; Complexity Science Hub Vienna, Austria), Georgia M. Kapitsaki (University of Cyprus, Cyprus)

Abstract: Software developers are social creatures: they communicate, collaborate, and promote their work in a variety of channels. Twitter, GitHub, Stack Overflow, and other platforms offer developers opportunities to network and exchange ideas. Researchers analyze content on these sites to learn about trends and topics in software engineering. However, insight mined from the text of Stack Overflow questions or GitHub issues is highly focused on detailed and technical aspects of software development. In this paper, we present a relatively new online community for software developers called DEV. On DEV users write long-form posts about their experiences, preferences, and working life in software, zooming out from specific issues and files to reflect on broader topics.

On more socially oriented platforms like Twitter, which limits post lengths to 280 characters, discussions about software mix with an endless variety of other content. To address this gap we present a novel source of long-form text data created by people working in software called DEV (https://dev.to). DEV is “a community of software developers getting together to help one another out,” focused especially on facilitating cooperation and learning. Content on DEV resembles blog and Medium posts and, at a glance, covers everything from programming language choice to technical

Node Js Require All Files in Directory

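
Loading every module in a directory can be done with Node's built-in `fs` and `path` modules. A minimal sketch, assuming a flat (non-recursive) directory and that only `.js` and `.json` files should be loaded; the `requireAll` name is hypothetical, not an existing package's API.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: require every .js/.json file directly inside `dir`
// and return the exports keyed by file name without its extension.
function requireAll(dir) {
  const modules = {};
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    // Ignore subdirectories, symlinks to directories, sockets, etc.
    if (!entry.isFile()) continue;
    const ext = path.extname(entry.name);
    if (ext !== '.js' && ext !== '.json') continue;
    const name = path.basename(entry.name, ext);
    // path.resolve gives an absolute path; a bare "lib/a.js" would be
    // looked up as a package in node_modules instead.
    modules[name] = require(path.resolve(dir, entry.name));
  }
  return modules;
}
```

A typical call is `const handlers = requireAll(path.join(__dirname, 'handlers'))`. Because `require` caches modules, calling it twice on the same directory returns the same export objects rather than re-running each file.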

Browserify Illegal Import Declaration


Open Source Licenses: VisioNize Lab Suite

The software of VisioNize Lab Suite contains open source licenses included in this document.

| Package name | License | Website/Repository | Publisher/Author |
|---|---|---|---|
| @angular-devkit/[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular-devkit/[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular-devkit/build-optimiz[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular-devkit/build-web[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular-devkit/[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular-devkit/[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular-cli | Angular Authors |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular | |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/platform-browser-dynam[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |
| @angular/[email protected] | MIT | https://github.com/angular/angular | angular |

DRAGEN V3.2 EULA and Readme

Software Copyright Notice

Software: DRAGEN v3.2
© Illumina, Inc. 2018

This Software is licensed for use under an End User Software License Agreement:

ILLUMINA END-USER SOFTWARE LICENSE AGREEMENT

IMPORTANT-READ CAREFULLY. THIS IS A LICENSE AGREEMENT THAT YOU ARE REQUIRED TO ACCEPT BEFORE INSTALLING AND USING ILLUMINA, INC. SOFTWARE. CAREFULLY READ ALL THE TERMS AND CONDITIONS OF THIS LICENSE AGREEMENT BEFORE PROCEEDING WITH THE DOWNLOADING AND/OR INSTALLATION OF THIS SOFTWARE. YOU ARE NOT PERMITTED TO DOWNLOAD AND/OR INSTALL THIS SOFTWARE UNTIL YOU HAVE AGREED TO BE BOUND BY ALL OF THE TERMS AND CONDITIONS OF THIS LICENSE AGREEMENT. BY DOWNLOADING, INSTALLING, OR OTHERWISE ACCESSING OR USING THE SOFTWARE, YOU ACKNOWLEDGE THAT YOU HAVE REVIEWED AND ACCEPTED THIS LICENSE AGREEMENT, AND AGREE TO BE BOUND BY THE TERMS OF THIS LICENSE AGREEMENT. YOU ALSO REPRESENT AND WARRANT THAT YOU ARE DULY AUTHORIZED TO ACCEPT THE TERMS AND CONDITIONS OF THIS LICENSE AGREEMENT ON BEHALF OF YOUR EMPLOYER.

This End User License Agreement (“Agreement”) is made and entered into by and between Illumina, Inc., a Delaware corporation, having offices at 5200 Illumina Way, San Diego, CA 92122 (“Illumina”) and you as the end-user of the Software (hereinafter, “Licensee” or “you”). All computer programs identified above, software, firmware, and associated media, printed materials and online and electronic documentation, including any updates or upgrades thereof (collectively, “Software”) provided to Licensee are for use solely by Licensee and the provisions herein shall apply with respect to such Software. By using the Software, you indicate your acceptance of these terms and conditions, at which point this Agreement will become a legally binding agreement between you and Illumina.

Open Source in the Enterprise

Open Source in the Enterprise
by Andy Oram and Zaheda Bhorat

Copyright © 2018 O’Reilly Media. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938.

Editor: Michele Cronin
Production Editor: Kristen Brown
Copyeditor: Octal Publishing Services, Inc.
Interior Designer: David Futato
Cover Designer: Karen Montgomery

July 2018: First Edition

Revision History for the First Edition
2018-06-18: First Release

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Open Source in the Enterprise, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. The views expressed in this work are those of the authors, and do not represent the publisher’s views. While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

A Guide to Brazil's Oil and Oil Derivatives Compliance Requirements

A Guide to Importing Petroleum Products (Oil and Oil Derivatives) into Brazil

1. Scope
2. General View of the Brazilian Regulatory Framework
3. Regulatory Authorities for Petroleum Products
3.1 ANP’s Technical Regulations
3.2 INMETRO’s Technical Regulations
4. Standards Developing Organizations
4.1 Brazilian Association of Technical Standards (ABNT)
5. Certifications and Testing Bodies
5.1 Certification Laboratories Listed by Inmetro
5.2 Testing Laboratories Listed by Inmetro
6. Government Partners
7. Major Market Entities

1. Scope

This guide addresses all types of petroleum products regulated in Brazil.

2. General Overview of the Brazilian Regulatory Framework

Several agencies at the federal level have the authority to issue technical regulations in the particular areas of their competence. Technical regulations are always published in the Official Gazette and are generally based on international standards. All agencies follow similar general procedures to issue technical regulations. They can start the preparation of a technical regulation ex officio or at the request of a third party. If the competent authority deems it necessary, a draft regulation is prepared and published in the Official Gazette, after carrying out an impact assessment of the new technical regulation. Technical regulations take the form of laws, decrees or resolutions. Brazil normally allows a six-month period between the publication of a measure and its entry into force. Public hearings are also a way of promoting the public consultation of the technical regulations.

For Fuel 1 (BDN) at 550, 1,100 and 1,650 Bar Injection Pressures

City Research Online, City, University of London Institutional Repository

Citation: Fatmi, Zeeshan (2018). Optical and chemical characterisation of the effects of high-pressure hydrodynamic cavitation on diesel fuel. (Unpublished Doctoral thesis, City, University of London)

This is the accepted version of the paper. This version of the publication may differ from the final published version.

Permanent repository link: https://openaccess.city.ac.uk/id/eprint/22683/

Copyright: City Research Online aims to make research outputs of City, University of London available to a wider audience. Copyright and Moral Rights remain with the author(s) and/or copyright holders. URLs from City Research Online may be freely distributed and linked to.

Reuse: Copies of full items can be used for personal research or study, educational, or not-for-profit purposes without prior permission or charge, provided that the authors, title and full bibliographic details are credited, a hyperlink and/or URL is given for the original metadata page and the content is not changed in any way.

City Research Online: http://openaccess.city.ac.uk/

OPTICAL AND CHEMICAL CHARACTERISATION OF THE EFFECTS OF HIGH-PRESSURE HYDRODYNAMIC CAVITATION ON DIESEL FUEL

Zeeshan Fatmi

This thesis is submitted in fulfilment of the requirements of the degree of Doctor of Philosophy

School of Mathematics, Computer Science and Engineering
Department of Mechanical Engineering
February 2018

Table of Contents

2.1 Diesel Fuel
2.1.1 Distillation from Crude Oil and Conversion Processes
2.1.2 Diesel Fuel Components
2.1.3 Diesel Fuel Properties and Performance Parameters