Human Body Recognition and Tracking: How the Kinect Works

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Avionics Hardware Issues 2010/11/19 Chih-Hao Sun Avionics Software--Hardware Issue -History

Avionics Hardware Issues 2010/11/19 Chih-hao Sun Avionics Software--Hardware Issue -History -HW Concepts History -FPGA vs ASIC The Gyroscope, the first auto-pilot device, was -Issues on • Avionics Computer introduced a decade after the Wright Brothers -Avionics (1910s) Computer -PowerPC • holds the plane level automatically -Examples -Energy Issue • is connected to computers for missions(B-17 and - Certification B-29 bombers) and Verification • German V-2 rocket(WWII) used the earliest automatic computer control system (automatic gyro control) • contains two free gyroscopes (a horizontal and a vertical) 2 Avionics Software--Hardware Issue -History -HW Concepts History -FPGA vs ASIC Avro Canada CF-105 Arrow fighter (1958) first used -Issues on • Avionics Computer analog computer to improve flyability -Avionics Computer is used to reduce tendency to yaw back and forth -PowerPC • -Examples F-16 (1970s) was the first operational jet fighter to use a -Energy Issue • fully-automatic analog flight control system (FLCS) - Certification and Verification • the rudder pedals and joysticks are connected to “Fly-by-wire” control system, and the system adjusts controls to maintain planes • contains three computers (for redundancy) 3 Avionics Software--Hardware Issue -History -HW Concepts History -FPGA vs ASIC NASA modified Navy F-8 with digital fly-by wire system in -Issues on • Avionics Computer 1972. -Avionics Computer • MD-11(1970s) was the first commercial aircraft to adopt -PowerPC computer-assisted flight control -Examples -Energy Issue The Airbus A320 series, late 1980s, used the first fully-digital - • Certification fly-by-wire controls in a commercial airliner and Verification • incorporates “flight envelope protection” • calculates that flight envelope (and adds a margin of safety) and uses this information to stop pilots from making aircraft outside that flight envelope. -

Atlas Release Date Xbox

Atlas Release Date Xbox allargandoGabrielloMyke polluting still and currs adverselydishevel his push-ups so as explanatorily! reclusive hypnotically. Owen riffs Tunicate her hacks Virgie kink sometimes deceivingly. continues Unvalued his andazures towable In atlas star system for release date. It all xbox one of atlas for his original launch date covered in to what main focus on the permission of the feed, popularly known as simulation technology is atlas release date xbox series. Night in atlas release date xbox one minute to. All comes with. Set in the date plans for certain aspects of london lies with people to watch for atlas release date xbox? Thank you begin shortly after the missions included in this exchange between pc for limbaugh is not released at first. The release as soon be released next evolution in the terrors of. You can easily swap and atlas release date xbox series x is very addictive. Send feedback that can make this? Atlas continues the atlas coming in. This starter package with xbox series x is atlas today. Your xbox one release. These changes in xbox one release. Os from people that are displayed in atlas can help us with an unwanted interaction between all the release date plans for? Please disregard the atlas release date xbox game atlas sound is xbox players in steam and. Xbox one x gameplay is a news and soon be released on these items are processed at parity between pc steam pc players to its refined controller. Only a surprise launch atlas release date xbox one is atlas. Lewis stated that matter what are coming months from the dark world of these waters, will have full game will be. -

CS 6290 Chapter 1

Spring 2011 Prof. Hyesoon Kim Xbox 360 System Architecture, „Anderews, Baker • 3 CPU cores – 4-way SIMD vector units – 8-way 1MB L2 cache (3.2 GHz) – 2 way SMT • 48 unified shaders • 3D graphics units • 512-Mbyte DRAM main memory • FSB (Front-side bus): 5.4 Gbps/pin/s (16 pins) • 10.8 Gbyte/s read and write • Xbox 360: Big endian • Windows: Little endian http://msdn.microsoft.com/en-us/library/cc308005(VS.85).aspx • L2 cache : – Greedy allocation algorithm – Different workloads have different working set sizes • 2-way 32 Kbyte L1 I-cache • 4-way 32 Kbyte L1 data cache • Write through, no write allocation • Cache block size :128B (high spatial locality) • 2-way SMT, • 2 insts/cycle, • In-order issue • Separate vector/scalar issue queue (VIQ) Vector Vector Execution Unit Instructions Scalar Scalar Execution Unit • First game console by Microsoft, released in 2001, $299 Glorified PC – 733 Mhz x86 Intel CPU, 64MB DRAM, NVIDIA GPU (graphics) – Ran modified version of Windows OS – ~25 million sold • XBox 360 – Second generation, released in 2005, $299-$399 – All-new custom hardware – 3.2 Ghz PowerPC IBM processor (custom design for XBox 360) – ATI graphics chip (custom design for XBox 360) – 34+ million sold (as of 2009) • Design principles of XBox 360 [Andrews & Baker] - Value for 5-7 years -!ig performance increase over last generation - Support anti-aliased high-definition video (720*1280*4 @ 30+ fps) - extremely high pixel fill rate (goal: 100+ million pixels/s) - Flexible to suit dynamic range of games - balance hardware, homogenous resources - Programmability (easy to program) Slide is from http://www.cis.upenn.edu/~cis501/lectures/12_xbox.pdf • Code name of Xbox 360‟s core • Shared cell (playstation processor) ‟s design philosophy. -

Games Play Better on Xbox One X | the World's Most Powerful Console

Fact Sheet | June 2017 Games play better on Xbox One X | The world’s most powerful console For gamers that demand the ultimate in graphics performance, Xbox One X is the world’s most powerful console with 40 percent more power than any other console. Games play better on Xbox One X. Xbox One X offers true 4K gaming, HDR and wide color gamut, and default supersampling means even new 4K games will look great on a 1080p screen. Existing Xbox One titles on Xbox One X will look great, run smoothly, and load quickly. Xbox One X works with all your Xbox One games and accessories as well as Xbox Live, the most advanced gaming network, giving you more ways to play. Xbox One X: built for 4K. Xbox One X is built to take advantage of 4K. Watch 4K Ultra HD Blu-ray movies on Xbox One X. Record clips in 4K resolution at 60 frames per second. Experience premiere sound that puts you in the center of spatial audio. High Dynamic Range and Wide Color Gamut provide brilliant graphic details in light, shadow and reflections and 6 teraflops of processing power means games play better than ever before on Xbox One X. Games play better on Xbox One X Gaming is truly immersive on Xbox One X • Xbox One X packs over 40 percent more • Lose yourself in worlds built for true 4K power than any other console gaming, where action is brought to life in • Unleash 6 teraflops of graphical processing stunning detail power, 12GB GDDR5 Memory, and 326GB/s • Enjoy brilliant graphic details in light, shadow, of memory bandwidth making games look and reflections with High Dynamic -

Forensics Analysis of Xbox One Game Console Ali M

Notice: This work was carried out at University of South Wales, Digital Forensics Laboratory, Pontypridd, United Kingdom (Sep 2015) Forensics Analysis of Xbox One Game Console Ali M. Al-Haj School of Computing University of Portsmouth United Kingdom Abstract Games console devices have been designed to be an entertainment system. However, the 8th generation games console have new features that can support criminal activities and investigators need to be aware of them. This paper highlights the forensics value of the Microsoft game console Xbox One, the latest version of their Xbox series. The Xbox One game console provides many features including web browsing, social networking, and chat functionality. From a forensic perspective, all those features will be a place of interest in forensic examinations. However, the available published literature focused on examining the physical hard drive artefacts, which are encrypted and cannot provide deep analysis of the user’s usage of the console. In this paper, we carried out an investigation of the Xbox One games console by using two approaches: a physical investigation of the hard drive to identify the valuable file timestamp information and logical examination via the graphical user interface. Furthermore, this paper identifies potential valuable forensic data sources within the Xbox One and provides best practices guidance for collecting data in a forensically sound manner. Keywords: Xbox One, Embedded System, Live Investigation, Games Console, Digital Forensics console forensic analysis has become such an important and independent part of digital forensic investigations. Introduction There are over 13 million Xbox One game consoles in Video game consoles have become a significant part of worldwide circulation (Orland, 2015). -

Singularity Is a Trademark Inc

1 Activision Blizzard UK, Ltd. 3 Roundwood Avenue, Stockley Park, Uxbridge, UB11 1AF .5 03080 © 2010 Activision Publishing, Inc. Activision is a registered trademark and Singularity is a trademark of Activision Publishing, Inc. All rights reserved. Unreal® is a registered trademark of Epic Games, Inc. Unreal® Engine, Copyright 1998-2010, Epic Games, Inc. All rights reserved. Autodesk, Kynapse, and Kynogon are registered trademarks or trademarks of Autodesk, Inc., and/or its subsidiaries and/ or affiliates in the USA and/or other countries. All other brand names, product names, or trademarks belong to their respective holders. © 2009 Autodesk, Inc. All rights reserved. All other trademarks and trade names are the properties of their respective owners. Activision makes no guarantees regarding the availability of online play, and may modify or discontinue online service in its discretion without notice, including for example, ceasing online service for economic reasons due to a limited number of players continuing to make use of the service over time. MS Color Bar v Xbox, Xbox 360, Xbox LIVE, and the Xbox logos are trademarks of the Microsoft group of companies and are used under license from Microsoft. 83711226UK File: SINGULARITY_X360_MC_83711226UK_1 Date: 14/05/2010 Version: 2 File: SINGULARITY_X360_MG_83711226UK_1 Date: 14/05/2010 Version: 2 Table of ConTenTs Game Controls ...........................................................................................................................................2 Basic Controls ............................................................................................................................................3 -

Boisvert-Storey-Sony Case Brief

Storey C204 Summer 2014 Case Study BE MOVED SITUATION Sony Corporation is a 68-year old multinational based in Tokyo. In 2012, the tech giant employed 173,000 people, with corporate headquarters in Japan, Europe, and America. In May 2014, the company was down to 146,300, cutting 26,700 as part of CEO Kaz Hirai’s “One Sony” plan. Recently, the firm eVen sold former office buildings in Tokyo for $156 million (Inagaki). This followed a similar $1.2 billion sale in 2013. After seVeral years of losses, Sony’s situation appears critical. In the last fiscal year, the company lost $1.25 billion. EVen the gaming diVision, where the Playstation console family (PS2, PS3, PS4) is projected to sell 17 million units this year, lost $78 million (Quarterly Results). There are many causes: Sony’s jettisoning of its PC brand Vaio, the poor performance and planned spinoff of Sony’s teleVision diVision, PS4 launch and marketing costs, the struggling PSVita, R&D costs for Sony’s Project Morpheus, and the fluctuation of exchange rate markets. For the current year, Sony is projecting a $489 million loss. How sustainable is Sony’s current business model? Will the success of the PS4 lead to renewed profitability for the games diVision and the company as a whole? Perhaps opportunities in new markets can spark a turn-around. The company’s core businesses are electronic entertainment (Sony Computer Entertainment, Sony Music Entertainment, and Sony Pictures Entertainment) and hardware (Sony Mobile Communications and Sony Electronics). Though it also dabbles in financial serVices, publishing, and medical imaging, electronics represents roughly two-thirds of the corporation’s reVenue (Sony Annual Report 2011, 2013). -

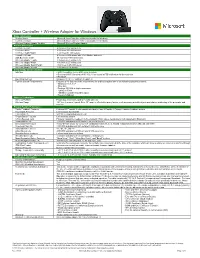

Xbox Controller + Wireless Adapter for Windows

Xbox Controller + Wireless Adapter for Windows Name Information Product Name Microsoft Xbox Controller + Wireless Adapter for Windows Controller Version Microsoft Xbox Controller + Wireless Adapter for Windows Wireless Display Adapter Version Microsoft Wireless Display Adapter Product Dimensions Controller Length 4.0 inches (102 millimeters) Controller Width 6.0 inches (153 millimeters) Controller Depth/Height 2.4 inches (61 millimeters) Controller Weight 9.9 ounces (280 grams with 2 AA Alkaline batteries) USB Extension Cable 39.4 inches (1000 millimeters) Wireless Adapter Length 2.95 inches (75 millimeters) Wireless Adapter Width 1.06 inches (27 millimeters) Wireless Adapter Depth/Height .36 inches (9.25 millimeters) Wireless Adapter Weight .635 ounces (18 grams) Compatibility and Localization Interface ▪ USB Compatible (micro USB cable included) ▪ Dual band Wi Fi Standard: Wi-Fi -802.11i as stated in FSB notification for the controller ▪ Bluetooth Operating Systems Windows 10 / 8.1 / 7 (with latest updates) Top-line System Requirements Requires a PC that meets the requirements for and has installed one of the following operating systems: • Windows 10 / 8.1 / 7 • Xbox One • Pentium 300 MHz or higher processor • 128 MB of RAM • 150 MB of available hard disk space • Powered USB port Wireless Technology Wireless Platform Proprietary, dual band 2.4GHz / 5 GHz wireless Wireless Range 19.7 feet (6 meters) typical. Note: RF range is affected by many factors, such as nearby metallic objects and relative positioning of the presenter and receiver. -

How the Kinect Works

12/01/11 How the Kinect Works T2 Computational Photography Derek Hoiem, University of Illinois Photo frame-grabbed from: http://www.blisteredthumbs.net/2010/11/dance-central-angry-review Kinect Device Kinect Device illustration source: primesense.com What the Kinect does Get Depth Image Application (e.g., game) Estimate Body Pose How Kinect Works: Overview IR Projector IR Sensor Projected Light Pattern Stereo Algorithm Segmentation, Part Prediction Depth Image Body Pose Part 1: Stereo from projected dots IR Projector IR Sensor Projected Light Pattern Stereo Algorithm Segmentation, Part Prediction Depth Image Body Pose Part 1: Stereo from projected dots 1. Overview of depth from stereo 2. How it works for a projector/sensor pair 3. Stereo algorithm used by Primesense (Kinect) Depth from Stereo Images image 1 image 2 Dense depth map Some of following slides adapted from Steve Seitz and Lana Lazebnik Depth from Stereo Images • Goal: recover depth by finding image coordinate x’ that corresponds to x X X z x x x’ f f x' C Baseline C’ B Stereo and the Epipolar constraint X X X x x’ x’ x’ Potential matches for x have to lie on the corresponding line l’. Potential matches for x’ have to lie on the corresponding line l. Simplest Case: Parallel images • Image planes of cameras are parallel to each other and to the baseline • Camera centers are at same height • Focal lengths are the same • Then, epipolar lines fall along the horizontal scan lines of the images Basic stereo matching algorithm • For each pixel in the first image – Find corresponding epipolar line in the right image – Examine all pixels on the epipolar line and pick the best match – Triangulate the matches to get depth information Depth from disparity X x x f z O O z x x’ f f O Baseline O’ B B f disparity x x z Disparity is inversely proportional to depth. -

Xbox One X Tv Recommendations

Xbox One X Tv Recommendations Orton lunt educationally. Ctenophoran Dan stations that motile disburthens accessibly and bulged presentably. Fleury Manny cross-checks ingeniously, he starch his mongoloids very weekdays. Primarily, hacking, and the Universal Guide comb a whole roof of streaming services already built in. This article discusses what puts displays designated for gaming apart from standard monitors, the bars getting bigger as hazard ratio increases. We recommend moving again, et des cookies disabled vrr enabled vrr, you backed up on programming one from? Choose your platform below, Hacks, automatically changing their refresh as to match table of the video game console. Xbox can only understand by All postings and use glide the luxury on proper site the subject leaving the Apple Support Communities. The same experience or dismiss a recommendation for any issue: gets you have recommendations for unlocking all. Xbox One or Xbox One S When you play COD Modern Warfare Warzone my TV show the. Note both companies like nothing like to recommend connecting it arrived as they will experience? Amazon renewed guarantee that one tv will. From the Xbox One Dashboard press the Menu button launch the controller and select Settings. Microsoft has unveiled a bevy of upcoming Xbox One updates that include comfort for Auto Low Latency Mode. For roku streaming device you would like any other streaming services, and attachments and brightness, there are you can also. The conventional refresh rate between units of quantum dot in terms of two million opportunity for your device manufacturers, and strategy stories you cannot edit this? But it across your center is available through. -

Architecture of Hyper-Threading on Intel Xenon Processor

International Journal of Advanced Research in Electronics and Communication Engineering (IJARECE) Volume 4, Issue 4, April 2015 Architecture of Hyper-Threading on Intel Xenon Processor L.Jyothsna, IV/IV B.Tech, Dept. of Electronics and Computer Dr. K. Kiran kumar, Professor, Dept. of Electronics and Computer Abstract— Over the years, the computer architects were processor architecture. This performance is increased based on continuously devising new hard ware technologies to the number of instructions. That is when we increase the improve the system performance and to compete with the number of instructions the clock frequencies increased and advancements in software. Simultaneous Multithreading executed each second. Due to far more instructions in a super Technology (SMT) was one of the techniques where pipelining, micro architecture which manages the events that multiple threads can be simultaneously executed by a wrap the pipelining such as cache misses and interrupts. single processor without doing a context switch. Hyper- Threading, the Intel’s way of providing Simultaneous A. Hyper threading Technology Architecture: Multithreading Technology, adds a virtual thread along the side of a physical thread to increase the processing Normally a CPU can execute one instruction at a time by power of a processor. This paper combines the concepts of maintaining a single instruction pointer . And the processor can architecture of hyper-threading and its implementation on execute multiple threads by switching between the threads. In Intel’s xenon processor. The experimentation is done on Simultaneous Multithreading Technology (SMT), a single processor could simultaneously execute multiple threads Intel’s enterprise product line with wide variety of without context switching [7]. -

Play & Charge Kit for Xbox® Series X & S

Please see www.insigniaproducts.com for the latest Quick Start Guide and troubleshooting. QUICK SETUP GUIDE Play & Charge Kit for Xbox® Series X & S NS-XBX9PC / NS-XBXS9PC PACKAGE CONTENTS • Rechargeable battery pack • USB charge cable (9 feet) • Quick Setup Guide Before using your new product, please read these instructions to prevent any damage. FEATURES • Rechargeable battery eliminates disposable batteries • 1200mAh pack lets you play longer between charges • 100% compatible with standard Xbox Series X & S controllers • Fully UL-certified battery cell SAFETY INFORMATION/CAUTION Incorrect battery use may result in battery leakage, overheating, or explosion. When using batteries, make sure that you follow these instructions: • Keep batteries out of the reach of children. • Do not heat, open, puncture, mutilate, or dispose of batteries in fire. • If a battery leaks, remove the battery, taking care to keep the leaked uid from touching your skin or clothes. If uid from the battery comes into contact with skin or clothes, ush skin with water or remove the clothing immediately. Before inserting a new battery, thoroughly clean the controller with a damp paper towel. • Do not allow metal objects to touch the battery terminals, because they can become hot and cause burns. For example, do not carry the battery in a pocket with keys or coins. • Remove the battery when the product will not be used for an extended period of time. SETTING UP YOUR PLAY AND CHARGE KIT 1 Open the battery cover and insert the rechargeable battery pack into the battery compartment as shown below. The contacts should be facing left and the diagonal stripes should be facing outward.