Post Link-Time Optimization on the Intel IA-32 Architecture

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Design and Implementation of Generics for the .NET Common Language Runtime

Design and Implementation of Generics for the .NET Common Language Runtime Andrew Kennedy Don Syme Microsoft Research, Cambridge, U.K. {akenn,dsyme}@microsoft.com Abstract The Microsoft .NET Common Language Runtime provides a shared type system, intermediate language and dynamic execution environment for the implementation and inter-operation of multiple source languages. In this paper we extend it with direct support for parametric polymorphism (also known as generics), describing the design through examples written in an extended version of the C# programming language, and explaining aspects of implementation by reference to a prototype extension to the runtime. Our design is very expressive, supporting parameterized types, polymorphic static, instance and virtual methods, “F-bounded” type parameters, instantiation at pointer and value types, polymorphic recursion, and exact run-time types. [...] cally through an interface definition language, or IDL) that is necessary for language interoperation. This paper describes the design and implementation of support for parametric polymorphism in the CLR. In its initial release, the CLR has no support for polymorphism, an omission shared by the JVM. Of course, it is always possible to “compile away” polymorphism by translation, as has been demonstrated in a number of extensions to Java [14, 4, 6, 13, 2, 16] that require no change to the JVM, and in compilers for polymorphic languages that target the JVM or CLR (MLj [3], Haskell, Eiffel, Mercury). However, such systems inevitably suffer drawbacks of some kind, whether through source language restrictions (disallowing primitive type instantiations to enable a simple erasure-based translation, as in GJ and -

Tricore Architecture Manual for a Detailed Discussion of Instruction Set Encoding and Semantics

User’s Manual, v2.3, Feb. 2007 TriCore 32-bit Unified Processor Core Embedded Applications Binary Interface (EABI) Microcontrollers Edition 2007-02 Published by Infineon Technologies AG 81726 München, Germany © Infineon Technologies AG 2007. All Rights Reserved. Legal Disclaimer The information given in this document shall in no event be regarded as a guarantee of conditions or characteristics (“Beschaffenheitsgarantie”). With respect to any examples or hints given herein, any typical values stated herein and/or any information regarding the application of the device, Infineon Technologies hereby disclaims any and all warranties and liabilities of any kind, including without limitation warranties of non-infringement of intellectual property rights of any third party. Information For further information on technology, delivery terms and conditions and prices please contact your nearest Infineon Technologies Office (www.infineon.com). Warnings Due to technical requirements components may contain dangerous substances. For information on the types in question please contact your nearest Infineon Technologies Office. Infineon Technologies Components may only be used in life-support devices or systems with the express written approval of Infineon Technologies, if a failure of such components can reasonably be expected to cause the failure of that life-support device or system, or to affect the safety or effectiveness of that device or system. Life support devices or systems are intended to be implanted in the human body, or to support and/or maintain and sustain and/or protect human life. If they fail, it is reasonable to assume that the health of the user or other persons may be endangered. -

Tribits Lifecycle Model Version

SANDIA REPORT SAND2012-0561 Unlimited Release Printed February 2012 TriBITS Lifecycle Model Version 1.0 A Lean/Agile Software Lifecycle Model for Research-based Computational Science and Engineering and Applied Mathematical Software Roscoe A. Bartlett Michael A. Heroux James M. Willenbring Prepared by Sandia National Laboratories Albuquerque, New Mexico 87185 and Livermore, California 94550 Sandia National Laboratories is a multi-program laboratory managed and operated by Sandia Corporation, a wholly owned subsidiary of Lockheed Martin Corporation, for the U.S. Department of Energy's National Nuclear Security Administration under Contract DE-AC04-94AL85000. Approved for public release; further dissemination unlimited. Issued by Sandia National Laboratories, operated for the United States Department of Energy by Sandia Corporation. NOTICE: This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government, nor any agency thereof, nor any of their employees, nor any of their contractors, subcontractors, or their employees, make any warranty, express or implied, or assume any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represent that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government, any agency thereof, or any of their contractors or subcontractors. The views and opinions expressed herein do not necessarily state or reflect those of the United States Government, any agency thereof, or any of their contractors. -

Lecture 2 — Software Correctness

Lecture 2 — Software Correctness David J. Pearce School of Engineering and Computer Science Victoria University of Wellington Software Correctness RECOMMENDATIONS To Builders and Users of Software Make the most of effective software development technologies and formal methods. A variety of modern technologies — in particular, safe programming languages, static analysis, and formal methods — are likely to reduce the cost and difficulty of producing dependable software. Elementary best practices, such as source code control and systematic defect tracking, should be universally adopted, . –Software for Dependable Systems Patriot Missile 1991, Dhahran - Iraqi Scud missile hits barracks killing 28 soldiers - Patriot system was activated, but missed target by over 600m - Floating point representation of 0.1 was cause (rounding error over time, required 100 hours of continuous operation) See: “Engineering Disasters” https://www.youtube.com/watch?v=EMVBLg2MrLs (Image by Darkone - Own work, CC BY-SA 2.5) Unintended Acceleration? (Overview) Braking Problems (Toyota Prius) Drivers reported “sudden acceleration” when braking By 2010, over seven million cars recalled NASA experts called in to investigate Specialists in static analysis Their report had significant redactions though Bookout vs Toyota, 2013 Victims awarded $1.5 million each Barr provided expert testimony See “Unintended Acceleration and Other Embedded Software bugs”, Michael Barr, 2011 See “An Update on Toyota and Unintended acceleration” Unintended Acceleration? (NASA Analysis) Throttle -

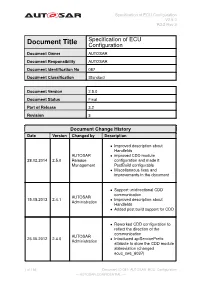

Configuration Description (And the Resulting ECU Extract of the System Configuration for the Individual ECUs)

Specification of ECU Configuration V2.5.0 R3.2 Rev 3. Document Title: Specification of ECU Configuration. Document Owner: AUTOSAR. Document Responsibility: AUTOSAR. Document Identification No: 087. Document Classification: Standard. Document Version: 2.5.0. Document Status: Final. Part of Release: 3.2. Revision: 3. Document Change History:

| Date | Version | Changed by | Description |
|---|---|---|---|
| 28.02.2014 | 2.5.0 | AUTOSAR Release Management | Improved description about HandleIds; improved CDD module configuration and made it PostBuild configurable; miscellaneous fixes and improvements in the document |
| 15.05.2013 | 2.4.1 | AUTOSAR Administration | Support unidirectional CDD communication; improved description about HandleIds; added post build support for CDD |
| 25.05.2012 | 2.4.0 | AUTOSAR Administration | Reworked CDD configuration to reflect the direction of the communication; introduced apiServicePrefix attribute to store the CDD module abbreviation (changed ecuc_sws_6037) |
| 31.03.2011 | 2.3.0 | AUTOSAR Administration | Added section on Complex Device Driver (section 4.4); clarification of PostBuildSelectable, PostBuildLoadable in VSMD (added ecuc_sws_6046, ecuc_sws_6047, ecuc_sws_6048, ecuc_sws_6049); set configuration class affection support to deprecated |
| 14.10.2009 | 2.2.0 | AUTOSAR Administration | Updated definition how symbolic names are generated from the EcuC. |
| 15.09.2008 | 2.1.0 | AUTOSAR Administration | Fixed foreign reference to PduToFrameMapping |
| 01.07.2008 | 2.0.2 | AUTOSAR Administration | Legal - |

CS5233 Components – Models and Engineering - Komponententechnologien - Master of Science (Informatik)

Prof. Dr. Th. Letschert CS5233 Components – Models and Engineering - Komponententechnologien - Master of Science (Informatik) Components in the Software Life-cycle © Th Letschert Software Lifecycle : Development 1. Development coding type checking, byte code generation 2. Linking / Loading 3. Execution Which language features support separate development in Java? Which units of (mutually dependent) code may be written, type-checked and compiled concurrently? 1. Development component in Java: Compilation Unit The notion of “program” is obsolete by now Source code : system of interdependent compilation units Compilation unit: Source code fragment, unit of work for the compiler Separate compilation: Type-checking and generation of binary code for code fragments Result Binary code Type information for compiling other fragments Compilation units in Java: Goal symbol (“top” production) of the grammar (see Java language specification) CompilationUnit ::= [PackageDeclaration] [ImportDeclarations] TypeDeclarations Types declared in different CUs can depend on each other, even circularly CUs have to be stored in files with a name that conforms to the one and only public type definition files have to be placed in directories according to the package structure Separate Compilation in Java Compile class C depending on C₁, C₂, ··· Cn . C₁, C₂, ··· must be present in source or binary form . if some Ci is not present in binary form: -

Linkers and Loaders Do?

Linkers & Loaders by John R. Levine. Table of Contents:
Chapter 0: Front Matter
- Dedication
- Introduction
- Who is this book for?
- Chapter summaries
- The project
- Acknowledgements
- Contact us
Chapter 1: Linking and Loading
- What do linkers and loaders do?
- Address binding: a historical perspective
- Linking vs. loading
- Two-pass linking
- Object code libraries
- Relocation and code modification
- Compiler Drivers -

Low-Cost Deterministic C++ Exceptions for Embedded Systems

Edinburgh Research Explorer Low-Cost Deterministic C++ Exceptions for Embedded Systems Citation for published version: Renwick, J, Spink, T & Franke, B 2019, Low-Cost Deterministic C++ Exceptions for Embedded Systems. in Proceedings of the 28th International Conference on Compiler Construction. ACM, Washington, DC, USA, pp. 76-86, 28th International Conference on Compiler Construction, Washington D.C., United States, 16/02/19. https://doi.org/10.1145/3302516.3307346 Digital Object Identifier (DOI): 10.1145/3302516.3307346 Link: Link to publication record in Edinburgh Research Explorer Document Version: Peer reviewed version Published In: Proceedings of the 28th International Conference on Compiler Construction General rights Copyright for the publications made accessible via the Edinburgh Research Explorer is retained by the author(s) and / or other copyright owners and it is a condition of accessing these publications that users recognise and abide by the legal requirements associated with these rights. Take down policy The University of Edinburgh has made every reasonable effort to ensure that Edinburgh Research Explorer content complies with UK legislation. If you believe that the public display of this file breaches copyright please contact [email protected] providing details, and we will remove access to the work immediately and investigate your claim. Download date: 25. Sep. 2021 Low-Cost Deterministic C++ Exceptions for Embedded Systems James Renwick Tom Spink Björn Franke University of Edinburgh University of Edinburgh University of Edinburgh Edinburgh, UK Edinburgh, UK Edinburgh, UK [email protected] [email protected] [email protected] ABSTRACT The C++ programming language offers a strong exception mech- [...] which their use is often associated. However, C++’s wide usage has not been without issues. -

A Link-Time Optimizer for the Compaq Alpha

alto: A Link-Time Optimizer for the Compaq Alpha* Robert Muth Saumya Debray Scott Watterson Department of Computer Science University of Arizona Tucson, AZ 85721, USA {muth, debray, saw} Koen De Bosschere Vakgroep Elektronica en Informatiesystemen Universiteit Gent B-9000 Gent, Belgium Keywords: compilers, code optimization, executable editing November 2, 1999 Abstract Traditional optimizing compilers are limited in the scope of their optimizations by the fact that only a single function, or possibly a single module, is available for analysis and optimization. In particular, this means that library routines cannot be optimized to specific calling contexts. Other optimization opportunities, exploiting information not available before link time such as addresses of variables and the final code layout, are often ignored because linkers are traditionally unsophisticated. A possible solution is to carry out whole-program optimization at link time. This paper describes alto, a link-time optimizer for the Compaq Alpha architecture. It is able to realize significant performance improvements even for programs compiled with a good optimizing compiler with a high level of optimization. The resulting code is considerably faster than that obtained using the OM link-time optimizer, even when the latter is used in conjunction with profile-guided and inter-file compile-time optimizations. * The work of Robert Muth, Saumya Debray and Scott Watterson was supported in part by the National Science Foundation under grant numbers CCR-9502826, CCR-9711166, and CDA-9500991. Koen De Bosschere is a research associate with the Fund for Scientific Research – Flanders. 1 Introduction Optimizing compilers for traditional imperative languages often limit their program analyses and optimizations to individual procedures [1]. -

Link-Time Improvement of Scheme Programs

Link-time Improvement of Scheme Programs Saumya Debray Robert Muth Scott Watterson Department of Computer Science University of Arizona Tucson, AZ 85721, U.S.A. fdebray, muth, [email protected] Technical Report 98-16 December 1998 Abstract Optimizing compilers typically limit the scope of their analyses and optimizations to individual modules. This has two drawbacks: first, library code cannot be optimized together with their callers, which implies that reusing code through libraries incurs a penalty; and second, the results of analysis and optimization cannot be propagated from an application module written in one language to a module written in another. A possible solution is to carry out additional program optimization at link time. This paper describes our experiences with such optimization using two different optimizing Scheme compilers, and several benchmark programs, via alto, a link-time optimizer we have developed for the DEC Alpha architecture. Experiments indicate that significant performance improvements are possible via link-time optimization even when the input programs have already been subjected to high levels of compile-time optimization. This work was supported in part by the National Science Foundation under grants CCR-9502826 and CCR-9711166. 1 Introduction The traditional model of compilation usually limits the scope of analysis and optimization to individual procedures, or possibly to modules. This model for code optimization does not take things as far as they could be taken, in two respects. -

Implementation and Evaluation of Link Time Optimization with Libfirm

Institut für Programmstrukturen und Datenorganisation (IPD), Chair of Prof. Dr.-Ing. Snelting. Implementation and Evaluation of Link Time Optimization with libFirm. Bachelor's thesis by Mark Weinreuter at the Department of Informatics. First reviewer: Prof. Dr.-Ing. Gregor Snelting. Second reviewer: Prof. Dr.-Ing. Jörg Henkel. Advisor: Dipl.-Inform. Manuel Mohr. Duration: 3 August 2016 – 14 November 2016. KIT – The Research University in the Helmholtz Association www.kit.edu Abstract Traditionally, source files are compiled separately. As a last step, the resulting object files are linked into an executable. Optimizations are applied during compilation but only on a single file at a time. With LTO the compiler has a holistic view of all involved files. This allows for optimizations across files, such as inlining or removal of unused code across file boundaries. In this thesis libFirm and its C frontend cparser are extended with LTO functionality. By using LTO, an average runtime improvement of 2.3% in comparison with the non-LTO variant is observed on the SPEC CPU2000 benchmarks. For the vortex benchmark, an improvement of up to 25% is measured. -

A Practical System for Intermodule Code Optimization at Link-Time

December 1992 WRL Research Report 92/6 A Practical System for Intermodule Code Optimization at Link-Time Amitabh Srivastava David W. Wall digital Western Research Laboratory 250 University Avenue Palo Alto, California 94301 USA The Western Research Laboratory (WRL) is a computer systems research group that was founded by Digital Equipment Corporation in 1982. Our focus is computer science research relevant to the design and application of high performance scientific computers. We test our ideas by designing, building, and using real systems. The systems we build are research prototypes; they are not intended to become products. There are two other research laboratories located in Palo Alto, the Network Systems Laboratory (NSL) and the Systems Research Center (SRC). Other Digital research groups are located in Paris (PRL) and in Cambridge, Massachusetts (CRL). Our research is directed towards mainstream high-performance computer systems. Our prototypes are intended to foreshadow the future computing environments used by many Digital customers. The long-term goal of WRL is to aid and accelerate the development of high-performance uni- and multi-processors. The research projects within WRL will address various aspects of high-performance computing. We believe that significant advances in computer systems do not come from any single technological advance. Technologies, both hardware and software, do not all advance at the same pace. System design is the art of composing systems which use each level of technology in an appropriate balance. A major advance in overall system performance will require reexamination of all aspects of the system.