Testing Software Tools of Potential Interest for Digital Preservation Activities at the National Library of Australia

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Virtual Disk File Virtualbox Ubuntu Download Christian Engvall

How to install Ubuntu on a VirtualBox virtual machine. It’s very simple and won’t cost you a dime. Don’t forget to install the guest additions at the end. VirtualBox. VirtualBox is free and open-source virtualization software. Start by downloading and installing it. It runs on Windows, macOS, Linux distributions, and Solaris. Ubuntu. Ubuntu is a popular Linux distribution for desktops. It’s free and open source, so go ahead and download Ubuntu. 1. Creating a virtual machine in VirtualBox. Fire up VirtualBox and click the New button in the top-left menu. When I started to type Ubuntu, VirtualBox automatically set the type to Linux and the version to Ubuntu. The next step is to set the amount of memory that will be allocated. VirtualBox will recommend a number, but you can choose anything you’d like; this can be changed later if the virtual machine runs slowly. Next, select the size of the hard disk. The 8 GB that VirtualBox recommends will work fine. Now click Create. Select VDI as the hard disk file type. Next, choose dynamically allocated when asked. Set the disk size to the standard 8 GB. 2. Installing Ubuntu on the new virtual machine. Now that the virtual machine is created, it’s time to mount the Ubuntu ISO and install it. Click Settings with the newly created virtual machine selected.

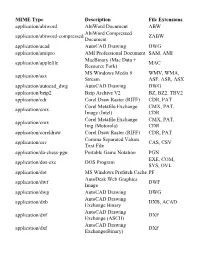

MIME Type Description File Extensions Application/Abiword

| MIME Type | Description | File Extensions |
| --- | --- | --- |
| application/abiword | AbiWord Document | ABW |
| application/abiword-compressed | AbiWord Compressed Document | ZABW |
| application/acad | AutoCAD Drawing | DWG |
| application/amipro | AMI Professional Document | SAM, AMI |
| application/applefile | MacBinary (Mac Data + Resource Fork) | MAC |
| application/asx | MS Windows Media 9 Stream | WMV, WMA, ASF, ASR, ASX |
| application/autocad_dwg | AutoCAD Drawing | DWG |
| application/bzip2 | Bzip Archive V2 | BZ, BZ2, TBV2 |
| application/cdr | Corel Draw Raster (RIFF) | CDR, PAT |
| application/cmx | Corel Metafile Exchange Image (Intel) | CMX, PAT, CDR |
| application/cmx | Corel Metafile Exchange Img (Motorola) | CMX, PAT, CDR |
| application/coreldraw | Corel Draw Raster (RIFF) | CDR, PAT |
| application/csv | Comma Separated Values Text File | CAS, CSV |
| application/da-chess-pgn | Portable Game Notation | PGN |
| application/dos-exe | DOS Program | EXE, COM, SYS, OVL |
| application/dot | MS Windows Prefetch Cache | PF |
| application/dwf | AutoDesk Web Graphics Image | DWF |
| application/dwg | AutoCAD Drawing | DWG |
| application/dxb | AutoCAD Drawing Exchange Binary | DXB, ACAD |
| application/dxf | AutoCAD Drawing Exchange (ASCII) | DXF |
| application/dxf | AutoCAD Drawing Exchange (Binary) | DXF |
| application/emf | Windows Enhanced Metafile | EMF, TMP, EMF, TMP, WMF |
| application/envoy | Envoy Document | EVY, ENV |
| application/excel | Comma Separated Values Text File | CAS, CSV |
| application/excel | MS Excel Worksheet/Add-In/Template | XLS, XLA, XLT, XLB |
| application/exe | DOS Program | EXE, COM, SYS, OVL |
| application/exe | MS Windows Driver (16 bit) | EXE, VXD, SYS, DRV, 386 |
| application/exe | MS Windows Program (16 bit) | EXE, MOD, BIN |

User Guide Laplink® Diskimage™ 7 Professional

Laplink® DiskImage™ 7 Professional User Guide. Laplink Software, Inc. Customer Service/Technical Support: Web: http://www.laplink.com/contact; E-mail: [email protected]; Tel (USA): +1 (425) 952-6001; Fax (USA): +1 (425) 952-6002; Tel (UK): +44 (0) 870-2410-983; Fax (UK): +44 (0) 870-2410-984. Laplink Software, Inc., 600 108th Ave. NE, Suite 610, Bellevue, WA 98004, U.S.A. Copyright / Trademark Notice: © Copyright 2013 Laplink Software, Inc. All rights reserved. Laplink, the Laplink logo, Connect Your World, and DiskImage are registered trademarks or trademarks of Laplink Software, Inc. in the United States and/or other countries. Other trademarks, product names, company names, and logos are the property of their respective holder(s). UG-DiskImagePro-EN-7 (REV. 5/2013). Contents. Installation and Registration: System Requirements; Installing Laplink DiskImage; Registration. Introduction to DiskImage: Overview of Important Features; Definitions; Start Laplink DiskImage - Two Methods; Windows Start; Bootable CD. DiskImage Tasks: One-Click Imaging: Create an Image of the Entire Computer .

Non-Binary Analysis

The Art of Mac Malware, Volume 1: Analysis, by P. Wardle. Chapter 0x5: Non-Binary Analysis. Note: This book is a work in progress. You are encouraged to directly comment on these pages, suggesting edits, corrections, and/or additional content! Content made possible by our Friends of Objective-See: Airo, SmugMug, Guardian Firewall, SecureMac, iVerify, Halo Privacy. In the previous chapter, we showed how the file utility [1] can be used to effectively identify a sample’s file type. File type identification is important, as the majority of static analysis tools are file type specific. Now, let’s look at various file types one commonly encounters while analyzing Mac malware. As noted, some file types (such as disk images and packages) are simply the malware’s “distribution packaging”. For these file types, the goal is to extract the malicious contents (often the malware’s installer). Of course, Mac malware itself comes in various file formats, such as scripts and binaries. For each file type, we’ll briefly discuss its purpose, as well as highlight static analysis tools that can be used to analyze the file format. Note: This chapter focuses on the analysis of non-binary file formats (such as scripts). Subsequent chapters will dive into macOS’s binary file format (Mach-O), as well as discuss both analysis tools and techniques.
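As a rough illustration of the kind of check file type identification relies on, the sketch below matches the leading bytes of a sample against a handful of well-known signatures. It is a minimal sketch only: the `SIGNATURES` table and the `identify` and `identify_file` helpers are hypothetical names introduced here, the table covers a tiny subset of formats, and it is not how the file utility itself is implemented.

```python
# Leading-byte signatures for a few formats relevant to Mac malware triage.
# Illustrative subset only; real tools consult far larger signature databases.
SIGNATURES = [
    (b"\xcf\xfa\xed\xfe", "Mach-O 64-bit (little-endian)"),
    (b"\xce\xfa\xed\xfe", "Mach-O 32-bit (little-endian)"),
    (b"\xca\xfe\xba\xbe", "universal (fat) binary or Java class file"),
    (b"xar!", "xar archive (container used by flat .pkg installers)"),
    (b"#!", "script with a shebang line"),
]


def identify(header: bytes) -> str:
    """Return a label for the first signature that matches the header bytes."""
    for magic, label in SIGNATURES:
        if header.startswith(magic):
            return label
    return "unknown"


def identify_file(path: str) -> str:
    """Read the first few bytes of a file and classify them."""
    with open(path, "rb") as fh:
        return identify(fh.read(8))
```

A signature match is only a hint. Some signatures are ambiguous (the `0xcafebabe` value is shared by two formats), so a match should be confirmed with a format-specific tool.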

![Adobe's Extensible Metadata Platform (XMP): Background [DRAFT -- Caroline Arms, 2011-11-30]](https://docslib.b-cdn.net/cover/4654/adobes-extensible-metadata-platform-xmp-background-draft-caroline-arms-2011-11-30-104654.webp)

Adobe's Extensible Metadata Platform (XMP): Background [DRAFT -- Caroline Arms, 2011-11-30]

Contents • Introduction • Adobe's XMP Toolkits • Links to Adobe Web Pages on XMP Adoption • Appendix A: Mapping of PDF Document Info (basic metadata) to XMP properties • Appendix B: Software applications that can read or write XMP metadata in PDFs • Appendix C: Creating Custom Info Panels for embedding XMP metadata Introduction Adobe's XMP (Extensible Metadata Platform: http://www.adobe.com/products/xmp/) is a mechanism for embedding metadata into content files. For example, an XMP "packet" can be embedded in PDF documents, in HTML, and in image files such as TIFF and JPEG2000, as well as in Adobe's own PSD format native to Photoshop. In September 2011, XMP was approved as an ISO standard. [ISO 16684-1: Graphic technology -- Extensible metadata platform (XMP) specification -- Part 1: Data model, serialization and core properties] XMP is an application of the XML-based Resource Description Framework (RDF; http://www.w3.org/TR/2004/REC-rdf-primer-20040210/), which is a generic way to encode metadata from any scheme. RDF is designed to make it easy to use elements from any namespace. An important application area is in publication workflows, particularly to support submission of pictures and advertisements for inclusion in publications. The use of RDF allows elements from different schemes (e.g., EXIF and IPTC for photographs) to be held in a common framework during processing workflows. There are two ways to get XMP metadata into PDF documents: • manually via a customized File Info panel (or equivalent for products from vendors other than Adobe).
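To make the idea of an embedded XMP "packet" concrete, the sketch below scans raw file bytes for the `<?xpacket begin ... ?>` and `<?xpacket end ... ?>` processing instructions that wrap a packet. This is a minimal sketch under stated assumptions: `find_xmp_packets` is a hypothetical helper introduced here, it only finds packets stored uncompressed in an 8-bit encoding such as UTF-8, and it is not a substitute for Adobe's XMP Toolkit or a format-aware parser.

```python
from typing import List


def find_xmp_packets(data: bytes) -> List[bytes]:
    """Return every byte range that looks like a complete XMP packet."""
    packets = []
    pos = 0
    while True:
        # A packet opens with an xpacket "begin" processing instruction ...
        start = data.find(b"<?xpacket begin", pos)
        if start == -1:
            break
        # ... and closes with an xpacket "end" processing instruction.
        end_pi = data.find(b"<?xpacket end", start)
        if end_pi == -1:
            break
        close = data.find(b"?>", end_pi)
        if close == -1:
            break
        packets.append(data[start:close + 2])
        pos = close + 2
    return packets


def find_xmp_in_file(path: str) -> List[bytes]:
    """Read a whole file into memory and scan it for XMP packets."""
    with open(path, "rb") as fh:
        return find_xmp_packets(fh.read())
```

Each returned packet can then be handed to an XML or RDF parser. A file that yields no packets may still carry XMP inside a compressed stream, so an empty result is not proof that metadata is absent.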

Generating File Format Identification and Checksums with DROID

Electronic Records Modules. Electronic Records Committee, Congressional Papers Roundtable, Society of American Archivists. Generating File Format Identification and Checksums with DROID. Brandon Hirsch, Center for Legislative Studies, [email protected]. Date Published: July 2016. Module#: ERCM001. Created 2016-07, CPR Electronic Records Committee. File Format Identification & Checksum Generation with DROID, May 2016, for Congressional Papers Roundtable Electronic Records Committee. Table of Contents: Overview and Rationale; Procedural Assumptions; Hardware and Software Requirements; Workflow; Configuring DROID; Configuring DROID in Mac OS X; Configuring DROID in Windows; Starting DROID; Starting DROID in Mac OS X; Starting DROID in Windows; What Do These Results Mean?; Checksums; Further Evaluation; Exporting Results; Filtering Reports. Overview and Rationale: File format identification is a critical component of digital preservation activities because it provides a reliable method for determining exactly what types of files are stored in your institution’s holdings. Understanding the contents of one’s holdings provides a foundation upon which additional preservation decisions are made. Additionally, generating checksums provides a reliable method for evaluating the identity and integrity of the specific files and objects in an institution’s digital holdings throughout the preservation lifecycle. The UK National Archives’ Digital Record Object IDentifier (DROID) is one tool that can meet both of these needs. DROID’s primary function is to generate file format identification in compliance with the PRONOM registry, and to provide reports and/or exported results that can be used to interpret the files within a data set. The exported results (i.e. exported to .csv) can also be used to enhance preservation information for a collection, accession, data set, etc.
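The role checksums play in evaluating file integrity can be sketched with Python's standard `hashlib` module. This is a minimal sketch, not DROID's implementation: `file_checksum` and `verify` are hypothetical helper names introduced here, and the choice of SHA-256 as the default algorithm is an assumption for illustration.

```python
import hashlib


def file_checksum(path: str, algorithm: str = "sha256",
                  chunk_size: int = 1024 * 1024) -> str:
    """Compute a hex digest of a file, reading it in fixed-size chunks."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as fh:
        # Chunked reads keep memory use flat even for very large files.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: str, expected: str, algorithm: str = "sha256") -> bool:
    """Return True when the file still matches a previously recorded digest."""
    return file_checksum(path, algorithm) == expected.strip().lower()
```

In a preservation workflow, the digest would be recorded when a file is accessioned and recomputed later. A mismatch indicates that the bytes have changed since the digest was recorded.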

Tools Used by CERP

COLLABORATIVE ELECTRONIC RECORDS PROJECT: EVALUATION OF TOOLS. In order to process and preserve email collections for the pilot, tools were needed for format conversion, format detection, file comparison, and file extraction. One goal of the project was to address the realities that small to mid-sized institutions face with limited funding and technical staffing. During the project, various software applications (some free), metadata formats, and guides were used and evaluated. The summary below includes product information and the results of our trials. This report should not be considered an official endorsement of any product, nor is it a comprehensive list of every applicable product. Note: See glossary for format definitions. Product: ABC Amber Outlook Converter. Description: ProcessText Group application that converts email into different formats such as PDF, HTML, and TXT. Trial version available. Vendor information: “ABC Amber Outlook Converter is intended to help you keep your important emails, newsletters, other important messages organized in one file. It is a useful tool that converts your emails from MS Outlook to any document format (PDF, DOC, HTML, CHM, RTF, HLP, TXT, DBF, CSV, XML, MDB, etc.) easily and quickly. It generates the contents with bookmarks (in PDF, DOC, RTF and HTML), keeping hyperlinks. Also you can use this tool as MSG Converter. Currently our software supports more than 50 languages.” Intended CERP Use: SIA tried it for some XML conversion of email before the XML parser-schema work was started by the CERP technical consultant. It can produce a report indicating the number of unread items within the folders of an email account.

'DIY' Digital Preservation for Audio Identify · Appraise · Organise · Preserve · Access Plan + Housekeeping

'DIY' Digital Preservation for Audio: Identify · Appraise · Organise · Preserve · Access. Plan + housekeeping. Part 1 (10:00am-10:50am): 10:00am-10:10am Intros and housekeeping; 10:10am-10:30am Digital Preservation for audio (What material do you care about and hope to keep?); 10:30am-10:50am Locate, Appraise, Identify (What kind of files are you working with?). 10:50am-11:00am [10-minute comfort break]. Part 2 (11:00am-11:45am): 11:00am-11:15am Organise (Migrate); 11:15am-11:45am Store, Maintain, Access; Discussion & Questions. Using Zoom: Feel free to swap to ‘Grid View’ when slides are not in use, to gauge ‘the room’. Please use the chat function to ask questions while slides are in use. Please keep your mic on ‘mute’ when not speaking. Who are we? • Bridging the Digital Gap traineeship scheme • UK National Archives (National Lottery Heritage Fund) • bringing ‘digital’ skills into the archives sector Why are we doing these workshops? • agitate the cultural record to reflect lived experience • embrace tools that support historical self-determination among non-specialists • raise awareness, share skills, share knowledge What is digital preservation? • digital material is vulnerable in different ways to analog material • digital preservation = “a series of managed activities undertaken to ensure continued access to digital materials for as long as necessary.” Audio practices and technological dependency. Image credits: Tarje Sælen Lavik, Don Shall, Mk2010, Stahlkocher, JuneAugust. Digital preservation and ‘born-digital’ audio: Bitstream, File(s), Digital Content, Rendered Content • codecs (e.g. LPCM) • formats and containers (e.g.

A Forensic Database for Digital Audio, Video, and Image Media

THE “DENVER MULTIMEDIA DATABASE”: A FORENSIC DATABASE FOR DIGITAL AUDIO, VIDEO, AND IMAGE MEDIA, by CRAIG ANDREW JANSON, B.A., University of Richmond, 1997. A thesis submitted to the Faculty of the Graduate School of the University of Colorado Denver in partial fulfillment of the requirements for the degree of Master of Science, Recording Arts Program, 2019. This thesis for the Master of Science degree by Craig Andrew Janson has been approved for the Recording Arts Program by Catalin Grigoras, Chair; Jeff M. Smith; Cole Whitecotton. Date: May 18, 2019. Thesis directed by Associate Professor Catalin Grigoras. ABSTRACT To date, there have been multiple databases developed for use in many forensic disciplines. There are very large and well-known databases like CODIS (DNA), IAFIS (fingerprints), and IBIS (ballistics). There are databases for paint, shoeprint, glass, and even ink, all of which catalog and maintain information on all the variations of their specific subject matter. Somewhat recently, the “Dresden Image Database” was introduced; it is designed to provide a digital image database for forensic study and contains images that are generic in nature, royalty free, and created specifically for this database. However, a central repository is needed for the collection and study of digital audio, video, and images. This kind of database would permit researchers, students, and investigators to explore the data from various media and various sources, and to compare an unknown with knowns, with the possibility of discovering the likely source of the unknown.

Digital Forensics Tool Testing – Image Metadata in the Cloud

Philip Clark. Master’s Thesis, Master of Science in Information Security, 30 ECTS. Department of Computer Science and Media Technology, Gjøvik University College, 2011. Department of Computer Science and Media Technology, Gjøvik University College, Box 191, N-2802 Gjøvik, Norway. Abstract: As cloud-based services are becoming a common way for users to store and share images on the internet, this adds a new layer to the traditional digital forensics examination, which could introduce additional errors into the investigation. Courtroom forensics evidence has historically been criticised for lacking a scientific basis. This thesis aims to present an approach for testing to what extent cloud-based services alter or remove metadata in the images stored through such services. To exemplify what sensitive information could potentially be revealed through image metadata, an overview of what information is publicly shared will be presented, by looking at a selection of images published on the internet through image-sharing services in the cloud. The main contributions of this thesis will be to provide an overview of what information regular users give away, either willingly or unwittingly, while publishing images through sharing services on the internet, as well as to provide an overview of how cloud-based services handle Exif metadata today, along with how a forensic practitioner can verify to what extent information obtained through a given cloud-based service is reliable.

Autodesk White Paper

AutoCAD® Raster Design 2010 Features and Benefits. Make the most of rasterized scanned drawings, maps, aerial photos, satellite imagery, and digital elevation models. Get more out of your raster data and enhance your designs, plans, presentations, and maps with AutoCAD® Raster Design software. Contents: Introduction; New Features and Enhancements; Image Display; Image Editing and Cleanup; Vectorization Tools with SmartCorrect; Raster Entity Manipulation (REM) with SmartPick; Georeferenced Image Display and Analysis; Image Transformations. www.autodesk.com/rasterdesign Introduction: Extend the power of AutoCAD® and AutoCAD-based software by using AutoCAD Raster Design software for a wide range of applications. Get more out of your raster

Metadefender Core V4.12.2

MetaDefender Core v4.12.2 © 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners. Table of Contents: About This Guide; Key Features of Metadefender Core; 1. Quick Start with Metadefender Core; 1.1. Installation; Operating system invariant initial steps; Basic setup; 1.1.1. Configuration wizard; 1.2. License Activation; 1.3. Scan Files with Metadefender Core; 2. Installing or Upgrading Metadefender Core; 2.1. Recommended System Requirements; System Requirements For Server; Browser Requirements for the Metadefender Core Management Console; 2.2. Installing Metadefender; Installation; Installation notes; 2.2.1. Installing Metadefender Core using command line; 2.2.2. Installing Metadefender Core using the Install Wizard; 2.3. Upgrading MetaDefender Core; Upgrading from MetaDefender Core 3.x; Upgrading from MetaDefender Core 4.x; 2.4. Metadefender Core Licensing; 2.4.1. Activating Metadefender Licenses; 2.4.2. Checking Your Metadefender Core License; 2.5. Performance and Load Estimation; What to know before reading the results: Some factors that affect performance; How test results are calculated; Test Reports; Performance Report - Multi-Scanning On Linux; Performance Report - Multi-Scanning On Windows; 2.6. Special installation options; Use RAMDISK for the temp directory; 3. Configuring Metadefender Core; 3.1. Management Console; 3.2.