Download a Computer File, Your Mind Can Add New Lines of Code and Even Be Uploaded Onto the Cloud

Recommended publications

17. Idealism, Or Something Near Enough Susan Schneider (In

17. Idealism, or Something Near Enough Susan Schneider (In Pearce and Goldsmidt, OUP, in press.) Some observations: 1. Mathematical entities do not come for free. There is a field, philosophy of mathematics, and it studies their nature. In this domain, many theories of the nature of mathematical entities require ontological commitments (e.g., Platonism). 2. Fundamental physics (e.g., string theory, quantum mechanics) is highly mathematical. Mathematical entities purport to be abstract—they purport to be non-spatial, atemporal, immutable, acausal and non-mental. (Whether they truly are abstract is a matter of debate, of course.) 3. Physicalism, to a first approximation, holds that everything is physical, or is composed of physical entities. 1 As such, physicalism is a metaphysical hypothesis about the fundamental nature of reality. In Section 1 of this paper, I urge that it is reasonable to ask the physicalist to inquire into the nature of mathematical entities, for they are doing heavy lifting in the physicalist’s approach, describing and identifying the fundamental physical entities that everything that exists is said to reduce to (supervene on, etc.). I then ask whether the physicalist in fact has the resources to provide a satisfying account of the nature of these mathematical entities, given existing work in philosophy of mathematics on nominalism and Platonism that she would likely draw from. And the answer will be: she does not. I then argue that this deflates the physicalist program, in significant ways: physicalism lacks the traditional advantages of being the most economical theory, and having a superior story about mental causation, relative to competing theories. -

Comment Attachment

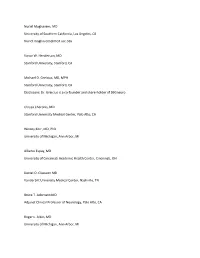

Nuriel Moghavem, MD University of Southern California, Los Angeles, CA [email protected] Victor W. Henderson, MD Stanford University, Stanford, CA Michael D. Greicius, MD, MPH Stanford University, Stanford, CA Disclosure: Dr. Greicius is a co-founder and share-holder of SBGneuro Chrysa Cheronis, MD Stanford University Medical Center, Palo Alto, CA Wesley Kerr, MD, PhD University of Michigan, Ann Arbor, MI Alberto Espay, MD University of Cincinnati Academic Health Center, Cincinnati, OH Daniel O. Claassen MD Vanderbilt University Medical Center, Nashville, TN Bruce T. Adornato MD Adjunct Clinical Professor of Neurology, Palo Alto, CA Roger L. Albin, MD University of Michigan, Ann Arbor, MI R. Ryan Darby, MD Vanderbilt University Medical Center, Nashville, TN Graham Garrison, MD University of Cincinnati, Cincinnati, Ohio Lina Chervak, MD University of Cincinnati Medical Center, Cincinnati, OH Abhimanyu Mahajan, MD, MHS Rush University Medical Center, Chicago, IL Richard Mayeux, MD Columbia University, New York, NY Lon Schneider MD Keck School of Medicine of the University of Southern California, Los Angeles, CA Sami Barmada, MD PhD University of Michigan, Ann Arbor, MI Evan Seevak, MD Alameda Health System, Oakland, CA Will Mantyh MD University of Minnesota, Minneapolis, MN Bryan M. Spann, DO, PhD Consultant, Cognitive and Neurobehavior Disorders, Scottsdale, AZ Kevin Duque, MD University of Cincinnati, Cincinnati, OH Lealani Mae Acosta, MD, MPH Vanderbilt University Medical Center, Nashville, TN Marie Adachi, MD Alameda Health System, Oakland, CA Marina Martin, MD, MPH Stanford University School of Medicine, Palo Alto, CA Matthew Schrag MD, PhD Vanderbilt University Medical Center, Nashville, TN Matthew L. Flaherty, MD University of Cincinnati, Cincinnati, OH Stephanie Reyes, MD Durham, NC Hani Kushlaf, MD University of Cincinnati, Cincinnati, OH David B Clifford, MD Washington University in St Louis, St Louis, Missouri Marc Diamond, MD UT Southwestern Medical Center, Dallas, TX Disclosure: Dr. -

An Evolutionary Heuristic for Human Enhancement

18 The Wisdom of Nature: An Evolutionary Heuristic for Human Enhancement Nick Bostrom and Anders Sandberg∗ Abstract Human beings are a marvel of evolved complexity. Such systems can be difficult to enhance. When we manipulate complex evolved systems, which are poorly understood, our interventions often fail or backfire. It can appear as if there is a “wisdom of nature” which we ignore at our peril. Sometimes the belief in nature’s wisdom (and corresponding doubts about the prudence of tampering with nature, especially human nature) manifest as diffusely moral objections against enhancement. Such objections may be expressed as intuitions about the superiority of the natural or the troublesomeness of hubris, or as an evaluative bias in favor of the status quo. This chapter explores the extent to which such prudence-derived anti-enhancement sentiments are justified. We develop a heuristic, inspired by the field of evolutionary medicine, for identifying promising human enhancement interventions. The heuristic incorporates the grains of truth contained in “nature knows best” attitudes while providing criteria for the special cases where we have reason to believe that it is feasible for us to improve on nature. 1. Introduction 1.1. The wisdom of nature, and the special problem of enhancement We marvel at the complexity of the human organism, how its various parts have evolved to solve intricate problems: the eye to collect and pre-process visual information, the immune system to fight infection and cancer, the lungs to oxygenate the blood. ∗ Oxford Future of Humanity Institute, Faculty of Philosophy and James Martin 21st Century School, Oxford University. Forthcoming in Enhancing Humans, ed. Julian Savulescu and Nick Bostrom (Oxford: Oxford University Press). -

The Transhumanist Reader Is an Important, Provocative Compendium Critically Exploring the History, Philosophy, and Ethics of Transhumanism

The Transhumanist Reader: Classical and Contemporary Essays on the Science, Technology, and Philosophy of the Human Future

“We are in the process of upgrading the human species, so we might as well do it with deliberation and foresight. A good first step is this book, which collects the smartest thinking available concerning the inevitable conflicts, challenges and opportunities arising as we re-invent ourselves. It’s a core text for anyone making the future.” - Kevin Kelly, Senior Maverick for Wired

“Transhumanism has moved from a fringe concern to a mainstream academic movement with real intellectual credibility. This is a great taster of some of the best emerging work. In the last 10 years, transhumanism has spread not as a religion but as a creative rational endeavor.” - Julian Savulescu, Uehiro Chair in Practical Ethics, University of Oxford

“The Transhumanist Reader is an important, provocative compendium critically exploring the history, philosophy, and ethics of transhumanism. The contributors anticipate crucial biopolitical, ecological and planetary implications of a radically technologically enhanced population.” - Edward Keller, Director, Center for Transformative Media, Parsons The New School for Design

“This important book contains essays by many of the top thinkers in the field of transhumanism. It’s a must-read for anyone interested in the future of humankind.” - Sonia Arrison, Best-selling author of 100 Plus: How The Coming Age of Longevity Will Change Everything

The rapid pace of emerging technologies is playing an increasingly important role in overcoming fundamental human limitations. The Transhumanist Reader presents the first authoritative and comprehensive survey of the origins and current state of transhumanist thinking regarding technology’s impact on the future of humanity. -

Global Catastrophic Risks Survey

GLOBAL CATASTROPHIC RISKS SURVEY (2008) Technical Report 2008/1 Published by Future of Humanity Institute, Oxford University Anders Sandberg and Nick Bostrom

At the Global Catastrophic Risk Conference in Oxford (17-20 July, 2008) an informal survey was circulated among participants, asking them to make their best guess at the chance that there will be disasters of different types before 2100. This report summarizes the main results. The median extinction risk estimates were:

| Risk | At least 1 million dead | At least 1 billion dead | Human extinction |
| --- | --- | --- | --- |
| Number killed by molecular nanotech weapons | 25% | 10% | 5% |
| Total killed by superintelligent AI | 10% | 5% | 5% |
| Total killed in all wars (including civil wars) | 98% | 30% | 4% |
| Number killed in the single biggest engineered pandemic | 30% | 10% | 2% |
| Total killed in all nuclear wars | 30% | 10% | 1% |
| Number killed in the single biggest nanotech accident | 5% | 1% | 0.5% |
| Number killed in the single biggest natural pandemic | 60% | 5% | 0.05% |
| Total killed in all acts of nuclear terrorism | 15% | 1% | 0.03% |
| Overall risk of extinction prior to 2100 | n/a | n/a | 19% |

These results should be taken with a grain of salt. Non-responses have been omitted, although some might represent a statement of zero probability rather than no opinion. There are likely to be many cognitive biases that affect the result, such as unpacking bias and the availability heuristic, as well as old-fashioned optimism and pessimism. In appendix A the results are plotted with individual response distributions visible. Other Risks The list of risks was not intended to be inclusive of all the biggest risks. -

Living in a Matrix, She Thinks to Herself

LIVING IN A MATRIX: A Philosophy Professor’s Life in a Web of Questions, by Peter Nichols

By most accounts, Susan Schneider lives a pretty normal life. An assistant professor of philosophy, she teaches classes, writes academic papers and travels occasionally to speak at a philosophy or science lecture. At home, she shuttles her daughter to play dates, cooks dinner and goes mountain biking. Then suddenly, right in the middle of this ordinary American existence, it happens. Maybe we’re all living in a Matrix, she thinks to herself. It’s like in the movie when the question, What is the Matrix? keeps popping up on Neo’s computer screen and sets him to wondering. Schneider starts considering all the what-ifs and the maybes that now pop up all around her. Maybe the warm sun on my face, the trees swishing by and the car I’m driving down the road are actually code streaming through the circuits of a great computer. What if this world, which feels so compellingly real, is only a simulation thrown up by a complex and clever computer game? If I’m in it, how could I even know? What if my real-seeming self is nothing more than an algorithm on a computational network? What if everything is a computer-generated dream world – a momentary assemblage of information clicking and switching and running away on a grid laid down by some advanced but unknown intelligence? It’s like that time, after earning an economics degree as an undergrad at Berkeley, she suddenly switched to philosophy. -

What Is the Upper Limit of Value?

WHAT IS THE UPPER LIMIT OF VALUE? Anders Sandberg∗ David Manheim∗ Future of Humanity Institute 1DaySooner University of Oxford Delaware, United States, Suite 1, Littlegate House [email protected] 16/17 St. Ebbe’s Street, Oxford OX1 1PT [email protected] January 27, 2021 ABSTRACT How much value can our decisions create? We argue that unless our current understanding of physics is wrong in fairly fundamental ways, there exists an upper limit of value relevant to our decisions. First, due to the speed of light and the definition and conception of economic growth, the limit to economic growth is a restrictive one. Additionally, a related far larger but still finite limit exists for value in a much broader sense due to the physics of information and the ability of physical beings to place value on outcomes. We discuss how this argument can handle lexicographic preferences, probabilities, and the implications for infinite ethics and ethical uncertainty. Keywords Value · Physics of Information · Ethics Acknowledgements: We are grateful to the Global Priorities Institute for highlighting these issues and hosting the conference where this paper was conceived, and to Will MacAskill for the presentation that prompted the paper. Thanks to Hilary Greaves, Toby Ord, and Anthony DiGiovanni, as well as to Adam Brown, Evan Ryan Gunter, and Scott Aaronson, for feedback on the philosophy and the physics, respectively. David Manheim also thanks the late George Koleszarik for initially pointing out Wei Dai’s related work in 2015, and an early discussion of related issues with Scott Garrabrant and others on asymptotic logical uncertainty, both of which informed much of his thinking in conceiving the paper. -

A Diatribe on Jerry Fodor's The Mind Doesn't Work That Way

PSYCHE: http://psyche.cs.monash.edu.au/ Yes, It Does: A Diatribe on Jerry Fodor’s The Mind Doesn’t Work that Way1 Susan Schneider Department of Philosophy University of Pennsylvania 423 Logan Hall 249 S. 36th Street Philadelphia, PA 19104-6304 [email protected] © S. Schneider 2007 PSYCHE 13 (1), April 2007 REVIEW OF: J. Fodor, 2000. The Mind Doesn’t Work that Way. Cambridge, MA: MIT Press. 144 pages, ISBN-10: 0262062127 Abstract: The Mind Doesn’t Work That Way is an expose of certain theoretical problems in cognitive science, and in particular, problems that concern the Classical Computational Theory of Mind (CTM). The problems that Fodor worries plague CTM divide into two kinds, and both purport to show that the success of cognitive science will likely be limited to the modules. The first sort of problem concerns what Fodor has called “global properties”; features that a mental sentence has which depend on how the sentence interacts with a larger plan (i.e., set of sentences), rather than the type identity of the sentence alone. The second problem concerns what many have called, “The Relevance Problem”: the problem of whether and how humans determine what is relevant in a computational manner. However, I argue that the problem that Fodor believes global properties pose for CTM is a non-problem, and that further, while the relevance problem is a serious research issue, it does not justify the grim view that cognitive science, and CTM in particular, will likely fail to explain cognition. 1. Introduction The current project, it seems to me, is to understand what various sorts of theories of mind can and can't do, and what it is about them in virtue of which they can or can't do it. -

Program Guide

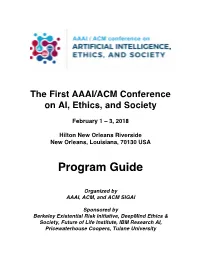

The First AAAI/ACM Conference on AI, Ethics, and Society February 1 – 3, 2018 Hilton New Orleans Riverside New Orleans, Louisiana, 70130 USA Program Guide Organized by AAAI, ACM, and ACM SIGAI Sponsored by Berkeley Existential Risk Initiative, DeepMind Ethics & Society, Future of Life Institute, IBM Research AI, Pricewaterhouse Coopers, Tulane University AIES 2018 Conference Overview Thursday, February Friday, Saturday, 1st February 2nd February 3rd Tulane University Hilton Riverside Hilton Riverside 8:30-9:00 Opening 9:00-10:00 Invited talk, AI: Invited talk, AI Iyad Rahwan and jobs: and Edmond Richard Awad, MIT Freeman, Harvard 10:00-10:15 Coffee Break Coffee Break 10:15-11:15 AI session 1 AI session 3 11:15-12:15 AI session 2 AI session 4 12:15-2:00 Lunch Break Lunch Break 2:00-3:00 AI and law AI and session 1 philosophy session 1 3:00-4:00 AI and law AI and session 2 philosophy session 2 4:00-4:30 Coffee break Coffee Break 4:30-5:30 Invited talk, AI Invited talk, AI and law: and philosophy: Carol Rose, Patrick Lin, ACLU Cal Poly 5:30-6:30 6:00 – Panel 2: Invited talk: Panel 1: Prioritizing The Venerable What will Artificial Ethical Tenzin Intelligence bring? Considerations Priyadarshi, in AI: Who MIT Sets the Standards? 7:00 Reception Conference Closing reception Acknowledgments AAAI and ACM acknowledges and thanks the following individuals for their generous contributions of time and energy in the successful creation and planning of AIES 2018: Program Chairs: AI and jobs: Jason Furman (Harvard University) AI and law: Gary Marchant -

Intergalactic Spreading of Intelligent Life and Sharpening the Fermi Paradox

Eternity in six hours: intergalactic spreading of intelligent life and sharpening the Fermi paradox Stuart Armstrong∗, Anders Sandberg. Future of Humanity Institute, Philosophy Department, Oxford University, Suite 8, Littlegate House, 16/17 St. Ebbe's Street, Oxford, OX1 1PT UK. ∗Corresponding author. Email addresses: [email protected] (Stuart Armstrong), [email protected] (Anders Sandberg). Preprint submitted to Acta Astronautica March 12, 2013. Abstract The Fermi paradox is the discrepancy between the strong likelihood of alien intelligent life emerging (under a wide variety of assumptions), and the absence of any visible evidence for such emergence. In this paper, we extend the Fermi paradox to not only life in this galaxy, but to other galaxies as well. We do this by demonstrating that traveling between galaxies (indeed even launching a colonisation project for the entire reachable universe) is a relatively simple task for a star-spanning civilization, requiring modest amounts of energy and resources. We start by demonstrating that humanity itself could likely accomplish such a colonisation project in the foreseeable future, should we want to, and then demonstrate that there are millions of galaxies that could have reached us by now, using similar methods. This results in a considerable sharpening of the Fermi paradox. Keywords: Fermi paradox, interstellar travel, intergalactic travel, Dyson shell, SETI, exploratory engineering 1. Introduction 1.1. The classical Fermi paradox The Fermi paradox, or more properly the Fermi question, consists of the apparent discrepancy between assigning a non-negligible probability for intelligent life emerging, the size and age of the universe, the relative rapidity with which intelligent life could expand across space or otherwise make itself visible, and the lack of observations of any alien intelligence. -

Transhumanism

Transhumanism - Wikipedia, the free encyclopedia http://en.wikipedia.org/w/index.php?title=Transhum... Transhumanism From Wikipedia, the free encyclopedia See also: Outline of transhumanism

Transhumanism is an international intellectual and cultural movement supporting the use of science and technology to improve human mental and physical characteristics and capacities. The movement regards aspects of the human condition, such as disability, suffering, disease, aging, and involuntary death as unnecessary and undesirable. Transhumanists look to biotechnologies and other emerging technologies for these purposes. Dangers, as well as benefits, are also of concern to the transhumanist movement.[1]

The term "transhumanism" is symbolized by H+ or h+ and is often used as a synonym for "human enhancement".[2] Although the first known use of the term dates from 1957, the contemporary meaning is a product of the 1980s when futurists in the United States began to organize what has since grown into the transhumanist movement. Transhumanist thinkers predict that human beings may eventually be able to transform themselves into beings with such greatly expanded abilities as to merit the label "posthuman".[1] Transhumanism is therefore sometimes referred to as "posthumanism" or a form of transformational activism influenced by posthumanist ideals.[3] The transhumanist vision of a transformed future humanity has attracted many supporters and detractors from a wide range of perspectives.

Part of Ideology series on Transhumanism. Ideologies: Abolitionism, Democratic transhumanism, Extropianism, Immortalism, Libertarian transhumanism, Postgenderism, Singularitarianism, Technogaianism. Related articles: Transhumanism in fiction, Transhumanist art. Organizations: Applied Foresight Network, Alcor Life Extension Foundation, American Cryonics Society, Cryonics Institute, Foresight Institute, Humanity+, Immortality Institute, Singularity Institute for Artificial Intelligence. Transhumanism Portal -

Existential Risk Prevention As Global Priority

Global Policy Volume 4, Issue 1, February 2013. Existential Risk Prevention as Global Priority. Nick Bostrom, University of Oxford. Research Article.

Abstract Existential risks are those that threaten the entire future of humanity. Many theories of value imply that even relatively small reductions in net existential risk have enormous expected value. Despite their importance, issues surrounding human-extinction risks and related hazards remain poorly understood. In this article, I clarify the concept of existential risk and develop an improved classification scheme. I discuss the relation between existential risks and basic issues in axiology, and show how existential risk reduction (via the maxipok rule) can serve as a strongly action-guiding principle for utilitarian concerns. I also show how the notion of existential risk suggests a new way of thinking about the ideal of sustainability.

Policy Implications
• Existential risk is a concept that can focus long-term global efforts and sustainability concerns.
• The biggest existential risks are anthropogenic and related to potential future technologies.
• A moral case can be made that existential risk reduction is strictly more important than any other global public good.
• Sustainability should be reconceptualised in dynamic terms, as aiming for a sustainable trajectory rather than a sustainable state.
• Some small existential risks can be mitigated today directly (e.g. asteroids) or indirectly (by building resilience and reserves to increase survivability in a range of extreme scenarios) but it is more important to build capacity to improve humanity’s ability to deal with the larger existential risks that will arise later in this century. This will require collective wisdom, technology foresight, and the ability when necessary to mobilise a strong global coordinated response to anticipated existential risks.