A Study of Luby-Rackoff Ciphers Zulfikar Amin Ramzan

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Tuto Documentation Release 0.1.0

Tuto Documentation Release 0.1.0 DevOps people 2020-05-09 09H16 CONTENTS 1 Documentation news 3 1.1 Documentation news 2020........................................3 1.1.1 New features of sphinx.ext.autodoc (typing) in sphinx 2.4.0 (2020-02-09)..........3 1.1.2 Hypermodern Python Chapter 5: Documentation (2020-01-29) by https://twitter.com/cjolowicz/..................................3 1.2 Documentation news 2018........................................4 1.2.1 Pratical sphinx (2018-05-12, pycon2018)...........................4 1.2.2 Markdown Descriptions on PyPI (2018-03-16)........................4 1.2.3 Bringing interactive examples to MDN.............................5 1.3 Documentation news 2017........................................5 1.3.1 Autodoc-style extraction into Sphinx for your JS project...................5 1.4 Documentation news 2016........................................5 1.4.1 La documentation linux utilise sphinx.............................5 2 Documentation Advices 7 2.1 You are what you document (Monday, May 5, 2014)..........................8 2.2 Rédaction technique...........................................8 2.2.1 Libérez vos informations de leurs silos.............................8 2.2.2 Intégrer la documentation aux processus de développement..................8 2.3 13 Things People Hate about Your Open Source Docs.........................9 2.4 Beautiful docs.............................................. 10 2.5 Designing Great API Docs (11 Jan 2012)................................ 10 2.6 Docness................................................. -

Cryptanalysis of Substitution-Permutation Networks Using Key-Dependent Degeneracy*

Cryptanalysis of Substitution-Permutation Networks Using Key-Dependent Degeneracy* Howard M. Heys Electrical Engineering, Faculty of Engineering and Applied Science Memorial University of Newfoundland St. John’s, Newfoundland, Canada A1B 3X5 Stafford E.Tavares Department of Electrical and Computer Engineering Queen’s University Kingston, Ontario, Canada K7L 3N6 * This research was supported by the Natural Sciences and Engineering Research Council of Canada and the Telecommunications Research Institute of Ontario and was completed during the first author’s doctoral studies at Queen’s University. Cryptanalysis of Substitution-Permutation Networks Using Key-Dependent Degeneracy Keywords Ð Cryptanalysis, Substitution-Permutation Network, S-box Abstract Ð This paper presents a novel cryptanalysis of Substitution- Permutation Networks using a chosen plaintext approach. The attack is based on the highly probable occurrence of key-dependent degeneracies within the network and is applicable regardless of the method of S-box keying. It is shown that a large number of rounds are required before a network is re- sistant to the attack. Experimental results have found 64-bit networks to be cryptanalyzable for as many as 8 to 12 rounds depending on the S-box properties. ¡ . Introduction The concept of Substitution-Permutation Networks (SPNs) for use in block cryp- tosystem design originates from the “confusion” and “diffusion” principles in- troduced by Shannon [1]. The SPN architecture considered in this paper was first suggested by Feistel [2] and consists of rounds of non-linear substitutions (S-boxes) connected by bit permutations. Such a cryptosystem structure, referred 1 to as LUCIFER1 by Feistel, is a simple, efficient implementation of Shannon’s concepts. -

Two Practical and Provably Secure Block Ciphers: BEAR and LION

Two Practical and Provably Secure Block Ciphers: BEAR and LION Ross Anderson 1 and Eh Biham 2 Cambridge University, England; emall rja14~cl.cmn.ar .uk 2 Technion, Haifa, Israel; email biham~cs .teclmion.ac.il Abstract. In this paper we suggest two new provably secure block ci- phers, called BEAR and LION. They both have large block sizes, and are based on the Luby-Rackoff construction. Their underlying components are a hash function and a stream cipher, and they are provably secure in the sense that attacks which find their keys would yield attacks on one or both of the underlying components. They also have the potential to be much faster than existing block ciphers in many applications. 1 Introduction There are a number of ways in which cryptographic primitives can be trans- formed by composition, such as output feedback mode which transforms a block cipher into a stream cipher, and feedforward mode which transforms it into a hash function [P]. A number of these reductions are provable, in the sense that certain kinds of attack on the composite function would yield an attack on the underlying primitive; a recent example is the proof that the ANSI message au- thentication code is as secure as the underlying block cipher [BKR]. One construction which has been missing so far is a means of building a block cipher out of a stream cipher. In this paper, we show provably secure ways to construct a block cipher from a stream cipher and a hash function. Given that ways of constructing keyed hash functions from stream ciphers already exist [LRW] [K], this enables block ciphers to be constructed from stream ciphers. -

An Introduction to Cryptography Copyright © 1990-1999 Network Associates, Inc

An Introduction to Cryptography Copyright © 1990-1999 Network Associates, Inc. and its Affiliated Companies. All Rights Reserved. PGP*, Version 6.5.1 6-99. Printed in the United States of America. PGP, Pretty Good, and Pretty Good Privacy are registered trademarks of Network Associates, Inc. and/or its Affiliated Companies in the US and other countries. All other registered and unregistered trademarks in this document are the sole property of their respective owners. Portions of this software may use public key algorithms described in U.S. Patent numbers 4,200,770, 4,218,582, 4,405,829, and 4,424,414, licensed exclusively by Public Key Partners; the IDEA(tm) cryptographic cipher described in U.S. patent number 5,214,703, licensed from Ascom Tech AG; and the Northern Telecom Ltd., CAST Encryption Algorithm, licensed from Northern Telecom, Ltd. IDEA is a trademark of Ascom Tech AG. Network Associates Inc. may have patents and/or pending patent applications covering subject matter in this software or its documentation; the furnishing of this software or documentation does not give you any license to these patents. The compression code in PGP is by Mark Adler and Jean-Loup Gailly, used with permission from the free Info-ZIP implementation. LDAP software provided courtesy University of Michigan at Ann Arbor, Copyright © 1992-1996 Regents of the University of Michigan. All rights reserved. This product includes software developed by the Apache Group for use in the Apache HTTP server project (http://www.apache.org/). Copyright © 1995-1999 The Apache Group. All rights reserved. See text files included with the software or the PGP web site for further information. -

David Wong Snefru

SHA-3 vs the world David Wong Snefru MD4 Snefru MD4 Snefru MD4 MD5 Merkle–Damgård SHA-1 SHA-2 Snefru MD4 MD5 Merkle–Damgård SHA-1 SHA-2 Snefru MD4 MD5 Merkle–Damgård SHA-1 SHA-2 Snefru MD4 MD5 Merkle–Damgård SHA-1 SHA-2 Keccak BLAKE, Grøstl, JH, Skein Outline 1.SHA-3 2.derived functions 3.derived protocols f permutation-based cryptography AES is a permutation input AES output AES is a permutation 0 input 0 0 0 0 0 0 0 key 0 AES 0 0 0 0 0 0 output 0 Sponge Construction f Sponge Construction 0 0 0 1 0 0 0 1 f 0 1 0 0 0 0 0 1 Sponge Construction 0 0 0 1 r 0 0 0 1 f 0 1 0 0 c 0 0 0 1 Sponge Construction 0 0 0 1 r 0 0 0 1 f r c 0 1 0 0 0 0 0 0 c 0 0 0 0 0 1 0 0 key 0 AES 0 0 0 0 0 0 0 Sponge Construction message 0 1 0 1 0 ⊕ 1 0 0 f 0 0 0 0 0 1 0 0 Sponge Construction message 0 0 0 ⊕ ⊕ 0 f 0 0 0 0 Sponge Construction message 0 0 0 ⊕ ⊕ 0 f f 0 0 0 0 Sponge Construction message 0 0 0 ⊕ ⊕ ⊕ 0 f f 0 0 0 0 Sponge Construction message 0 0 0 ⊕ ⊕ ⊕ 0 f f f 0 0 0 0 Sponge Construction message 0 0 0 ⊕ ⊕ ⊕ 0 f f f 0 0 0 0 absorbing Sponge Construction message output 0 0 0 ⊕ ⊕ ⊕ 0 f f f 0 0 0 0 absorbing Sponge Construction message output 0 0 0 ⊕ ⊕ ⊕ 0 f f f f 0 0 0 0 absorbing Sponge Construction message output 0 0 0 ⊕ ⊕ ⊕ 0 f f f f 0 0 0 0 absorbing Sponge Construction message output 0 0 0 ⊕ ⊕ ⊕ 0 f f f f f 0 0 0 0 absorbing Sponge Construction message output 0 0 0 ⊕ ⊕ ⊕ 0 f f f f f 0 0 0 0 absorbing squeezing Keccak Guido Bertoni, Joan Daemen, Michaël Peeters and Gilles Van Assche 2007 SHA-3 competition 2012 2007 SHA-3 competition 2012 SHA-3 standard -

NISTIR 7620 Status Report on the First Round of the SHA-3

NISTIR 7620 Status Report on the First Round of the SHA-3 Cryptographic Hash Algorithm Competition Andrew Regenscheid Ray Perlner Shu-jen Chang John Kelsey Mridul Nandi Souradyuti Paul NISTIR 7620 Status Report on the First Round of the SHA-3 Cryptographic Hash Algorithm Competition Andrew Regenscheid Ray Perlner Shu-jen Chang John Kelsey Mridul Nandi Souradyuti Paul Information Technology Laboratory National Institute of Standards and Technology Gaithersburg, MD 20899-8930 September 2009 U.S. Department of Commerce Gary Locke, Secretary National Institute of Standards and Technology Patrick D. Gallagher, Deputy Director NISTIR 7620: Status Report on the First Round of the SHA-3 Cryptographic Hash Algorithm Competition Abstract The National Institute of Standards and Technology is in the process of selecting a new cryptographic hash algorithm through a public competition. The new hash algorithm will be referred to as “SHA-3” and will complement the SHA-2 hash algorithms currently specified in FIPS 180-3, Secure Hash Standard. In October, 2008, 64 candidate algorithms were submitted to NIST for consideration. Among these, 51 met the minimum acceptance criteria and were accepted as First-Round Candidates on Dec. 10, 2008, marking the beginning of the First Round of the SHA-3 cryptographic hash algorithm competition. This report describes the evaluation criteria and selection process, based on public feedback and internal review of the first-round candidates, and summarizes the 14 candidate algorithms announced on July 24, 2009 for moving forward to the second round of the competition. The 14 Second-Round Candidates are BLAKE, BLUE MIDNIGHT WISH, CubeHash, ECHO, Fugue, Grøstl, Hamsi, JH, Keccak, Luffa, Shabal, SHAvite-3, SIMD, and Skein. -

Identifying Open Research Problems in Cryptography by Surveying Cryptographic Functions and Operations 1

International Journal of Grid and Distributed Computing Vol. 10, No. 11 (2017), pp.79-98 http://dx.doi.org/10.14257/ijgdc.2017.10.11.08 Identifying Open Research Problems in Cryptography by Surveying Cryptographic Functions and Operations 1 Rahul Saha1, G. Geetha2, Gulshan Kumar3 and Hye-Jim Kim4 1,3School of Computer Science and Engineering, Lovely Professional University, Punjab, India 2Division of Research and Development, Lovely Professional University, Punjab, India 4Business Administration Research Institute, Sungshin W. University, 2 Bomun-ro 34da gil, Seongbuk-gu, Seoul, Republic of Korea Abstract Cryptography has always been a core component of security domain. Different security services such as confidentiality, integrity, availability, authentication, non-repudiation and access control, are provided by a number of cryptographic algorithms including block ciphers, stream ciphers and hash functions. Though the algorithms are public and cryptographic strength depends on the usage of the keys, the ciphertext analysis using different functions and operations used in the algorithms can lead to the path of revealing a key completely or partially. It is hard to find any survey till date which identifies different operations and functions used in cryptography. In this paper, we have categorized our survey of cryptographic functions and operations in the algorithms in three categories: block ciphers, stream ciphers and cryptanalysis attacks which are executable in different parts of the algorithms. This survey will help the budding researchers in the society of crypto for identifying different operations and functions in cryptographic algorithms. Keywords: cryptography; block; stream; cipher; plaintext; ciphertext; functions; research problems 1. Introduction Cryptography [1] in the previous time was analogous to encryption where the main task was to convert the readable message to an unreadable format. -

Data Intensive Dynamic Scheduling Model and Algorithm for Cloud Computing Security Md

1796 JOURNAL OF COMPUTERS, VOL. 9, NO. 8, AUGUST 2014 Data Intensive Dynamic Scheduling Model and Algorithm for Cloud Computing Security Md. Rafiqul Islam1,2 1Computer Science Department, American International University Bangladesh, Dhaka, Bangladesh 2Computer Science and Engineering Discipline, Khulna University, Khulna, Bangladesh Email: [email protected] Mansura Habiba Computer Science Department, American International University Bangladesh, Dhaka, Bangladesh Email: [email protected] Abstract—As cloud is growing immensely, different point cloud computing has gained widespread acceptance. types of data are getting more and more dynamic in However, without appropriate security and privacy terms of security. Ensuring high level of security for solutions designed for clouds, cloud computing becomes all data in storages is highly expensive and time a huge failure [2, 3]. Amazon provides a centralized consuming. Unsecured services on data are also cloud computing consisting simple storage services (S3) becoming vulnerable for malicious threats and data and elastic compute cloud (EC2). Google App Engine is leakage. The main reason for this is that, the also an example of cloud computing. While these traditional scheduling algorithms for executing internet-based online services do provide huge amounts different services on data stored in cloud usually of storage space and customizable computing resources. sacrifice security privilege in order to achieve It is eliminating the responsibility of local machines for deadline. To provide adequate security without storing and maintenance of data. Considering various sacrificing cost and deadline for real time data- kinds of data for each user stored in the cloud and the intensive cloud system, security aware scheduling demand of long term continuous assurance of their data algorithm has become an effective and important safety, security is one of the prime concerns for the feature. -

Performance Analysis of Cryptographic Hash Functions Suitable for Use in Blockchain

I. J. Computer Network and Information Security, 2021, 2, 1-15 Published Online April 2021 in MECS (http://www.mecs-press.org/) DOI: 10.5815/ijcnis.2021.02.01 Performance Analysis of Cryptographic Hash Functions Suitable for Use in Blockchain Alexandr Kuznetsov1 , Inna Oleshko2, Vladyslav Tymchenko3, Konstantin Lisitsky4, Mariia Rodinko5 and Andrii Kolhatin6 1,3,4,5,6 V. N. Karazin Kharkiv National University, Svobody sq., 4, Kharkiv, 61022, Ukraine E-mail: [email protected], [email protected], [email protected], [email protected], [email protected] 2 Kharkiv National University of Radio Electronics, Nauky Ave. 14, Kharkiv, 61166, Ukraine E-mail: [email protected] Received: 30 June 2020; Accepted: 21 October 2020; Published: 08 April 2021 Abstract: A blockchain, or in other words a chain of transaction blocks, is a distributed database that maintains an ordered chain of blocks that reliably connect the information contained in them. Copies of chain blocks are usually stored on multiple computers and synchronized in accordance with the rules of building a chain of blocks, which provides secure and change-resistant storage of information. To build linked lists of blocks hashing is used. Hashing is a special cryptographic primitive that provides one-way, resistance to collisions and search for prototypes computation of hash value (hash or message digest). In this paper a comparative analysis of the performance of hashing algorithms that can be used in modern decentralized blockchain networks are conducted. Specifically, the hash performance on different desktop systems, the number of cycles per byte (Cycles/byte), the amount of hashed message per second (MB/s) and the hash rate (KHash/s) are investigated. -

All-Or-Nothing Encryption and the Package Transform

All-or-Nothing Encryption and the Package Transform Ronald L. Rivest MIT Laboratory for Computer Science 545 Technology Square, Cambridge, Mass. 02139 rivest@theory, ics.mit, edu Abstract. We present a new mode of encryption for block ciphers, which we call all-or-nothing encryption. This mode has the interesting defining property that one must decrypt the entire ciphertext before one can de- termine even one message block. This means that brute-force searches against all-or-nothing encryption are slowed down by a factor equal to the number of blocks in the ciphertext. We give a specific way of im- plementing all-or-nothing encryption using a "package transform" as a pre-processing step to an ordinary encryption mode. A package trans- form followed by ordinary codebook encryption also has the interest- ing property that it is very efficiently implemented in parallel. All-or- nothing encryption can also provide protection against chosen-plaintext and related-message attacks. 1 Introduction One way in which a cryptosystem may be attacked is by brute-force search: an adversary tries decrypting an intercepted ciphertext with all possible keys until the plaintext "makes sense" or until it matches a known target plaintext. Our primary motivation is to devise means to make brute-force search more difficult, by appropriately pre-processing a message before encrypting it. In this paper, we assume that the cipher under discussion is a block cipher with fixed-length input/output blocks, although our remarks generalize to other kinds of ciphers. An "encryption mode" is used to extend the encryption function to arbitrary length messages (see, for example, Schneier [9] and Biham [3]). -

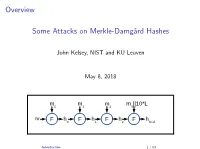

Some Attacks on Merkle-Damgård Hashes

Overview Some Attacks on Merkle-Damg˚ardHashes John Kelsey, NIST and KU Leuven May 8, 2018 m m m m ||10*L 0 1 2 3 iv F h F h F h F h 0 1 2 final Introduction 1 / 63 Overview I Cryptographic Hash Functions I Thinking About Collisions I Merkle-Damg˚ardhashing I Joux Multicollisions[2004] I Long-Message Second Preimage Attacks[1999,2004] I Herding and the Nostradamus Attack[2005] Introduction 2 / 63 Why Talk About These Results? I These are very visual results{looking at the diagram often explains the idea. I The results are pretty accessible. I Help you think about what's going on inside hashing constructions. Introduction 3 / 63 Part I: Preliminaries/Review I Hash function basics I Thinking about collisions I Merkle-Damg˚ardhash functions Introduction 4 / 63 Cryptographic Hash Functions I Today, they're the workhorse of crypto. I Originally: Needed for digital signatures I You can't sign 100 MB message{need to sign something short. I \Message fingerprint" or \message digest" I Need a way to condense long message to short string. I We need a stand-in for the original message. I Take a long, variable-length message... I ...and map it to a short string (say, 128, 256, or 512 bits). Cryptographic Hash Functions 5 / 63 Properties What do we need from a hash function? I Collision resistance I Preimage resistance I Second preimage resistance I Many other properties may be important for other applications Note: cryptographic hash functions are designed to behave randomly. Cryptographic Hash Functions 6 / 63 Collision Resistance The core property we need. -

Block Cipher Based Hashed Functions

ANALYSIS OF THREE BLOCK CIPHER BASED HASH FUNCTIONS: WHIRLPOOL, GRØSTL AND GRINDAHL A THESIS SUBMITTED TO THE GRADUATE SCHOOL OF APPLIED MATHEMATICS OF MIDDLE EAST TECHNICAL UNIVERSITY BY RITA ISMAILOVA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHYLOSOPHY IN CRYPTOGRAPHY SEPTEMBER 2012 Approval of the thesis: ANALYSIS OF THREE BLOCK CIPHER BASED HASH FUNCTIONS: WHIRLPOOL, GRØSTL AND GRINDAHL submitted by RITA ISMAILOVA in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Department of Cryptography, Middle East Technical University by, Prof. Dr. Bülent Karasözen ____________ Director, Graduate School of Applied Mathematics Prof. Dr. Ferruh Özbudak ____________ Head of Department, Cryptography Assoc. Prof. Dr. Melek Diker Yücel ____________ Supervisor, Department of Electrical and Electronics Engineering Examining Committee Members: Prof. Dr. Ersan Akyıldız ____________ Department of Mathematics, METU Assoc. Prof. Dr. Melek Diker Yücel ____________ Department of Electrical and Electronics Engineering, METU Assoc. Prof. Dr. Ali Doğanaksoy ____________ Department of Mathematics, METU Assist. Prof. Dr. Zülfükar Saygı ____________ Department of Mathematics, TOBB ETU Dr. Hamdi Murat Yıldırım ____________ Department of Computer Technology and Information Systems, Bilkent University Date: ____________ I hereby declare that all information in this document has been obtained and presented in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all material and results that are not original to this work. Name, Last Name: RITA ISMAILOVA Signature : iii ABSTRACT ANALYSIS OF THREE BLOCK CIPHER BASED HASH FUNCTIONS: WHIRLPOOL, GRØSTL AND GRINDAHL Ismailova, Rita Ph.D., Department of Cryptography Supervisor : Assoc.