A General Statistic Framework for Genome-Based Disease Risk Prediction

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

Genetic Variation Across the Human Olfactory Receptor Repertoire Alters Odor Perception

bioRxiv preprint doi: https://doi.org/10.1101/212431; this version posted November 1, 2017. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY 4.0 International license. Genetic variation across the human olfactory receptor repertoire alters odor perception. Casey Trimmer1,*, Andreas Keller2, Nicolle R. Murphy1, Lindsey L. Snyder1, Jason R. Willer3, Maira Nagai4,5, Nicholas Katsanis3, Leslie B. Vosshall2,6,7, Hiroaki Matsunami4,8, and Joel D. Mainland1,9. 1Monell Chemical Senses Center, Philadelphia, Pennsylvania, USA; 2Laboratory of Neurogenetics and Behavior, The Rockefeller University, New York, New York, USA; 3Center for Human Disease Modeling, Duke University Medical Center, Durham, North Carolina, USA; 4Department of Molecular Genetics and Microbiology, Duke University Medical Center, Durham, North Carolina, USA; 5Department of Biochemistry, University of Sao Paulo, Sao Paulo, Brazil; 6Howard Hughes Medical Institute, New York, New York, USA; 7Kavli Neural Systems Institute, New York, New York, USA; 8Department of Neurobiology and Duke Institute for Brain Sciences, Duke University Medical Center, Durham, North Carolina, USA; 9Department of Neuroscience, University of Pennsylvania School of Medicine, Philadelphia, Pennsylvania, USA. *[email protected] ABSTRACT: The human olfactory receptor repertoire is characterized by an abundance of genetic variation that affects receptor response, but the perceptual effects of this variation are unclear. To address this issue, we sequenced the OR repertoire in 332 individuals and examined the relationship between genetic variation and 276 olfactory phenotypes, including the perceived intensity and pleasantness of 68 odorants at two concentrations, detection thresholds of three odorants, and general olfactory acuity.

Database Tool the Systematic Annotation of the Three Main GPCR

Database, Vol. 2010, Article ID baq018, doi:10.1093/database/baq018. Database tool. The systematic annotation of the three main GPCR families in Reactome. Bijay Jassal1, Steven Jupe1, Michael Caudy2, Ewan Birney1, Lincoln Stein2, Henning Hermjakob1 and Peter D’Eustachio3,*. 1European Bioinformatics Institute, Hinxton, Cambridge, CB10 1SD, UK, 2Ontario Institute for Cancer Research, Toronto, ON M5G 0A3, Canada and 3New York University School of Medicine, New York, NY 10016, USA. *Corresponding author: Tel: +212 263 5779; Fax: +212 263 8166; Email: [email protected] Submitted 14 April 2010; Revised 14 June 2010; Accepted 13 July 2010. Reactome is an open-source, freely available database of human biological pathways and processes. A major goal of our work is to provide an integrated view of cellular signalling processes that spans from ligand–receptor

Table S1 the Four Gene Sets Derived from Gene Expression Profiles of Escs and Differentiated Cells

Table S1. The four gene sets derived from gene expression profiles of ESCs and differentiated cells.

| Uniform High EntrezID | Uniform High GeneSymbol | Uniform Low EntrezID | Uniform Low GeneSymbol | ES Up EntrezID | ES Up GeneSymbol | ES Down EntrezID | ES Down GeneSymbol |
|---|---|---|---|---|---|---|---|
| 269261 | Rpl12 | 11354 | Abpa | 68239 | Krt42 | 15132 | Hbb-bh1 |
| 67891 | Rpl4 | 11537 | Cfd | 26380 | Esrrb | 15126 | Hba-x |
| 55949 | Eef1b2 | 11698 | Ambn | 73703 | Dppa2 | 15111 | Hand2 |
| 18148 | Npm1 | 11730 | Ang3 | 67374 | Jam2 | 65255 | Asb4 |
| 67427 | Rps20 | 11731 | Ang2 | 22702 | Zfp42 | 17292 | Mesp1 |
| 15481 | Hspa8 | 11807 | Apoa2 | 58865 | Tdh | 19737 | Rgs5 |
| 100041686 | LOC100041686 | 11814 | Apoc3 | 26388 | Ifi202b | 225518 | Prdm6 |
| 11983 | Atpif1 | 11945 | Atp4b | 11614 | Nr0b1 | 20378 | Frzb |
| 19241 | Tmsb4x | 12007 | Azgp1 | 76815 | Calcoco2 | 12767 | Cxcr4 |
| 20116 | Rps8 | 12044 | Bcl2a1a | 219132 | D14Ertd668e | 103889 | Hoxb2 |
| 20103 | Rps5 | 12047 | Bcl2a1d | 381411 | Gm1967 | 17701 | Msx1 |
| 14694 | Gnb2l1 | 12049 | Bcl2l10 | 20899 | Stra8 | 23796 | Aplnr |
| 19941 | Rpl26 | 12096 | Bglap1 | 78625 | 1700061G19Rik | 12627 | Cfc1 |
| 12070 | Ngfrap1 | 12097 | Bglap2 | 21816 | Tgm1 | 12622 | Cer1 |
| 19989 | Rpl7 | 12267 | C3ar1 | 67405 | Nts | 21385 | Tbx2 |
| 19896 | Rpl10a | 12279 | C9 | 435337 | EG435337 | 56720 | Tdo2 |
| 20044 | Rps14 | 12391 | Cav3 | 545913 | Zscan4d | 16869 | Lhx1 |
| 19175 | Psmb6 | 12409 | Cbr2 | 244448 | Triml1 | 22253 | Unc5c |
| 22627 | Ywhae | 12477 | Ctla4 | 69134 | 2200001I15Rik | 14174 | Fgf3 |
| 19951 | Rpl32 | 12523 | Cd84 | 66065 | Hsd17b14 | 16542 | Kdr |
| 66152 | 1110020P15Rik | 12524 | Cd86 | 81879 | Tcfcp2l1 | 15122 | Hba-a1 |
| 66489 | Rpl35 | 12640 | Cga | 17907 | Mylpf | 15414 | Hoxb6 |
| 15519 | Hsp90aa1 | 12642 | Ch25h | 26424 | Nr5a2 | 210530 | Leprel1 |
| 66483 | Rpl36al | 12655 | Chi3l3 | 83560 | Tex14 | 12338 | Capn6 |
| 27370 | Rps26 | 12796 | Camp | 17450 | Morc1 | 20671 | Sox17 |
| 66576 | Uqcrh | 12869 | Cox8b | 79455 | Pdcl2 | 20613 | Snai1 |
| 22154 | Tubb5 | 12959 | Cryba4 | 231821 | Centa1 | 17897 | |

Human Artificial Chromosome (Hac) Vector

(19) Europäisches Patentamt / European Patent Office / Office européen des brevets
(11) EP 1 559 782 A1
(12) EUROPEAN PATENT APPLICATION published in accordance with Art. 158(3) EPC
(43) Date of publication: 03.08.2005 Bulletin 2005/31
(51) Int Cl.7: C12N 15/09, C12N 1/15, C12N 1/19, C12N 1/21, C12N 5/10, C12P 21/02
(21) Application number: 03751334.8
(22) Date of filing: 03.10.2003
(86) International application number: PCT/JP2003/012734
(87) International publication number: WO 2004/031385 (15.04.2004 Gazette 2004/16)
(84) Designated Contracting States: AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HU IE IT LI LU MC NL PT RO SE SI SK TR
Designated Extension States: AL LT LV MK
(30) Priority: 04.10.2002 JP 2002292853
(71) Applicant: KIRIN BEER KABUSHIKI KAISHA, Tokyo 104-8288 (JP)
(72) Inventors:
• OSHIMURA, Mitsuo, Tottori University, Yonago-shi, Tottori 683-8503 (JP)
• KATOH, Motonobu, Tottori University, Yonago-shi, Tottori 683-8503 (JP)
• TOMIZUKA, Kazuma, Kirin Beer Kabushiki Kaisha, Takasaki-shi, Gunma 370-1295 (JP)
• KUROIWA, Yoshimi, Kirin Beer Kabushiki Kaisha, Takasaki-shi, Gunma 370-1295 (JP)
• KAKEDA, Minoru, Kirin Beer Kabushiki Kaisha, Takasaki-shi, Gunma 370-1295 (JP)
(74) Representative: HOFFMANN - EITLE, Patent- und Rechtsanwälte, Arabellastrasse 4, 81925 München (DE)
(54) HUMAN ARTIFICIAL CHROMOSOME (HAC) VECTOR
(57) The present invention relates to a human artificial chromosome (HAC) vector and a method for producing the same. [...] ing a cell which expresses foreign DNA. Furthermore, the present invention relates to a method for producing [...]

HMGB1 in Health and Disease R

Donald and Barbara Zucker School of Medicine, Journal Articles, Academic Works, 2014. HMGB1 in health and disease. R. Kang, R. C. Chen, Q. H. Zhang, W. Hou, S. Wu. See next page for additional authors. Follow this and additional works at: https://academicworks.medicine.hofstra.edu/articles Part of the Emergency Medicine Commons. Recommended Citation: Kang R, Chen R, Zhang Q, Hou W, Wu S, Fan X, Yan Z, Sun X, Wang H, Tang D, . HMGB1 in health and disease. 2014 Jan 01; 40():Article 533 [ p.]. Available from: https://academicworks.medicine.hofstra.edu/articles/533. Free full text article. This Article is brought to you for free and open access by Donald and Barbara Zucker School of Medicine Academic Works. It has been accepted for inclusion in Journal Articles by an authorized administrator of Donald and Barbara Zucker School of Medicine Academic Works. Authors: R. Kang, R. C. Chen, Q. H. Zhang, W. Hou, S. Wu, X. G. Fan, Z. W. Yan, X. F. Sun, H. C. Wang, D. L. Tang, and +8 additional authors. This article is available at Donald and Barbara Zucker School of Medicine Academic Works: https://academicworks.medicine.hofstra.edu/articles/533 NIH Public Access Author Manuscript. Mol Aspects Med. Author manuscript; available in PMC 2015 December 01. Published in final edited form as: Mol Aspects Med. 2014 December ; 0: 1–116. doi:10.1016/j.mam.2014.05.001. HMGB1 in Health and Disease. Rui Kang1,*, Ruochan Chen1, Qiuhong Zhang1, Wen Hou1, Sha Wu1, Lizhi Cao2, Jin Huang3, Yan Yu2, Xue-gong Fan4, Zhengwen Yan1,5, Xiaofang Sun6, Haichao Wang7, Qingde Wang1, Allan Tsung1, Timothy R.

DNA Supercoiling with a Twist Edwin Kamau Louisiana State University and Agricultural and Mechanical College, [email protected]

Louisiana State University, LSU Digital Commons. LSU Doctoral Dissertations, Graduate School, 2005. DNA supercoiling with a twist. Edwin Kamau, Louisiana State University and Agricultural and Mechanical College, [email protected] Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Recommended Citation: Kamau, Edwin, "DNA supercoiling with a twist" (2005). LSU Doctoral Dissertations. 999. https://digitalcommons.lsu.edu/gradschool_dissertations/999 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please [email protected]. DNA SUPERCOILING WITH A TWIST. A Dissertation Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Philosophy in The Department of Biological Sciences. By Edwin Kamau, B.S. Horticulture, Egerton University, Kenya, 1996. May, 2005. DEDICATIONS: I would like to dedicate this dissertation to my son, Ashford Kimani Kamau. Your age marks the life of my graduate school career. I have been a weekend dad, broke and with enormous financial responsibilities; it kills me to see how sometimes you cry your heart out on Sunday evening when our weekend time together is over. I miss you too every moment, you have encouraged me to be a better person, a better dad so that I can make you proud. At your tender age, I have learned a lot from you. We will soon spend all the time in the world we need together; travel, sports, music, pre-historic discoveries and adventures; the road is wide open.

Supplementary Methods

Heterogeneous Contribution of Microdeletions in the Development of Common Generalized and Focal Epilepsies. SUPPLEMENTARY METHODS. Epilepsy subtype extended description. Genetic Generalized Epilepsy (GGE): Features unprovoked tonic and/or clonic seizures that originate inconsistently at some focal point within the brain and rapidly generalize, producing bilaterally distributed spike-and-wave discharges on the electroencephalogram. This generalization can include cortical and subcortical structures but not necessarily the entire cortex[1]. GGE is the most common group of epilepsies, accounting for 20% of all cases[2]. It is characterized by an age-related onset and by strong familial aggregation and heritability, which suggest a genetic cause. Although genetic associations have been identified, a broad spectrum of causes is acknowledged and remains largely unresolved[3]. Rolandic Epilepsy (RE): Also commonly known as Benign Epilepsy with Centrotemporal Spikes (BECTS), it is marked by early onset (mean onset = 7 years old) with brief, focal hemifacial or oropharyngeal sensorimotor seizures alongside speech arrest and secondarily generalized tonic–clonic seizures, which mainly occur during sleep[4]. Rolandic epilepsy features a broad spectrum of less benign related syndromes called atypical Rolandic epilepsy (ARE), including atypical benign partial epilepsy (ABPE), Landau–Kleffner syndrome (LKS) and epileptic encephalopathy with continuous spike-and-waves during sleep (CSWSS)[5]. Together they are the most common childhood epilepsy, with a prevalence of 0.2–0.73/1000 (i.e. ~1/2500)[6]. Adult Focal Epilepsy (AFE): Focal epilepsy is characterized by sporadic seizures that originate within a specific brain region and are restricted to one hemisphere. Although they can exhibit more than one network of wave discharges on the electroencephalogram, and different degrees of spreading, they feature a consistent site of origin.

Greg's Awesome Thesis

Analysis of alignment error and sitewise constraint in mammalian comparative genomics. Gregory Jordan, European Bioinformatics Institute, University of Cambridge. A dissertation submitted for the degree of Doctor of Philosophy, November 30, 2011. To my parents, who kept us thinking and playing. This dissertation is the result of my own work and includes nothing which is the outcome of work done in collaboration except where specifically indicated in the text and acknowledgements. This dissertation is not substantially the same as any I have submitted for a degree, diploma or other qualification at any other university, and no part has already been, or is currently being submitted for any degree, diploma or other qualification. This dissertation does not exceed the specified length limit of 60,000 words as defined by the Biology Degree Committee. Summary (Gregory Jordan, Darwin College, November 30, 2011): Insight into the evolution of protein-coding genes can be gained from the use of phylogenetic codon models. Recently sequenced mammalian genomes and powerful analysis methods developed over the past decade provide the potential to globally measure the impact of natural selection on protein sequences at a fine scale. The detection of positive selection in particular is of great interest, with relevance to the study of host-parasite conflicts, immune system evolution and adaptive differences between species. This thesis examines the performance of methods for detecting positive selection first with a series of simulation experiments, and then with two empirical studies in mammals and primates.

European Patent Office of Opposition to That Patent, in Accordance with the Implementing Regulations

(11) EP 2 884 280 B1
(12) EUROPEAN PATENT SPECIFICATION
(45) Date of publication and mention of the grant of the patent: 09.05.2018 Bulletin 2018/19
(51) Int Cl.: G01N 33/566 (2006.01)
(21) Application number: 13197310.9
(22) Date of filing: 15.12.2013
(54) Method for evaluating the scent performance of perfumes and perfume mixtures
Verfahren zur Bewertung des Duftverhaltens von Duftstoffen und Duftstoffmischungen
Procédé d’evaluation de senteur performance du parfums et mixtures de parfums
(84) Designated Contracting States: AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR
(43) Date of publication of application: 17.06.2015 Bulletin 2015/25
(73) Proprietor: Symrise AG, 37603 Holzminden (DE)
(72) Inventors:
• Hatt, Hanns, 44789 Bochum (DE)
• Gisselmann, Günter, 58456 Witten (DE)
• Ashtibaghaei, Kaveh, 44801 Bochum (DE)
• Panten, Johannes, 37671 Höxter (DE)
(56) References cited:
• WO-A2-03/091388
• BAGHAEI KAVEH A: "Deorphanization of human olfactory receptors by luciferase and Ca-imaging methods.", METHODS IN MOLECULAR BIOLOGY (CLIFTON, N.J.) 2013, vol. 1003, 19 June 2013 (2013-06-19), pages 229-238, XP008168583, ISSN: 1940-6029
• KAVEH BAGHAEI ET AL: "Olfactory receptors coded by segregating pseudo genes and odorants with known specific anosmia.", 33RD ANNUAL MEETING OF THE ASSOCIATION FOR CHEMORECEPTION, 1 April 2011 (2011-04-01), XP055111507
• TOUHARA ET AL: "Deorphanizing vertebrate olfactory receptors: Recent advances in odorant-response assays", NEUROCHEMISTRY INTERNATIONAL, PERGAMON PRESS, OXFORD, GB, vol.

Misexpression of Cancer/Testis (Ct) Genes in Tumor Cells and the Potential Role of Dream Complex and the Retinoblastoma Protein Rb in Soma-To-Germline Transformation

Michigan Technological University, Digital Commons @ Michigan Tech. Dissertations, Master's Theses and Master's Reports, 2019. MISEXPRESSION OF CANCER/TESTIS (CT) GENES IN TUMOR CELLS AND THE POTENTIAL ROLE OF DREAM COMPLEX AND THE RETINOBLASTOMA PROTEIN RB IN SOMA-TO-GERMLINE TRANSFORMATION. SABHA M. ALHEWAT, Michigan Technological University, [email protected] Copyright 2019 SABHA M. ALHEWAT. Recommended Citation: ALHEWAT, SABHA M., "MISEXPRESSION OF CANCER/TESTIS (CT) GENES IN TUMOR CELLS AND THE POTENTIAL ROLE OF DREAM COMPLEX AND THE RETINOBLASTOMA PROTEIN RB IN SOMA-TO-GERMLINE TRANSFORMATION", Open Access Master's Thesis, Michigan Technological University, 2019. https://doi.org/10.37099/mtu.dc.etdr/933 Follow this and additional works at: https://digitalcommons.mtu.edu/etdr Part of the Cancer Biology Commons, and the Cell Biology Commons. By Sabha Salem Alhewati. A THESIS Submitted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE In Biological Sciences. MICHIGAN TECHNOLOGICAL UNIVERSITY, 2019. © 2019 Sabha Alhewati. This thesis has been approved in partial fulfillment of the requirements for the Degree of MASTER OF SCIENCE in Biological Sciences. Department of Biological Sciences. Thesis Advisor: Paul Goetsch. Committee Member: Ebenezer Tumban. Committee Member: Zhiying Shan. Department Chair: Chandrashekhar Joshi. Table of Contents: List of figures

WO 2019/068007 A1 Figure 2

(12) INTERNATIONAL APPLICATION PUBLISHED UNDER THE PATENT COOPERATION TREATY (PCT)
(19) World Intellectual Property Organization, International Bureau
(10) International Publication Number: WO 2019/068007 A1
(43) International Publication Date: 04 April 2019 (04.04.2019)
(51) International Patent Classification: C12N 15/10 (2006.01), C07K 16/28 (2006.01), C12N 5/10 (2006.01), C12Q 1/6809 (2018.01), C07K 14/705 (2006.01), A61P 35/00 (2006.01), C07K 14/725 (2006.01)
(21) International Application Number: PCT/US2018/053583
(22) International Filing Date: 28 September 2018 (28.09.2018)
(25) Filing Language: English
(26) Publication Language: English
(30) Priority Data: 62/564,454, 28 September 2017 (28.09.2017), US; 62/649,429, 28 March 2018 (28.03.2018), US
(72) Inventors; and (71) Applicants:
• GROSS, Gideon [IL/IL]; IE-1-5 Address M.P. Korazim, 1292200 Moshav Almagor (IL)
• GIBSON, Will [US/US]; c/o ImmPACT-Bio Ltd., 2 Ilan Ramon St., P.O. Box 4044, 7403635 Ness Ziona (IL)
• DAHARY, Dvir [IL/IL]; c/o ImmPACT-Bio Ltd., 2 Ilan Ramon St., P.O. Box 4044, 7403635 Ness Ziona (IL)
• BEIMAN, Merav [IL/IL]; c/o ImmPACT-Bio Ltd., 2 Ilan Ramon St., P.O. Box 4044, 7403635 Ness Ziona (IL)
(74) Agent: MACDOUGALL, Christina, A. et al.; Morgan, Lewis & Bockius LLP, One Market, Spear Tower, San Francisco, CA 94105 (US)
(81) Designated States (unless otherwise indicated, for every kind of national protection available): AE, AG, AL, AM, AO, AT, AU, AZ, BA, BB, BG, BH, BN, BR, BW, BY, BZ, CA, CH, CL, CN, CO, CR, CU, CZ, DE, DJ, DK, DM, DO,
(71) Applicant: IMMPACT-BIO LTD.

Sean Raspet – Molecules

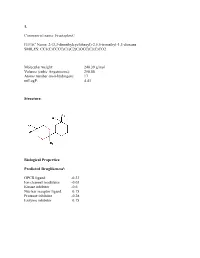

1. Commercial name: Fructaplex©
   - IUPAC name: 2-(3,3-dimethylcyclohexyl)-2,5,5-trimethyl-1,3-dioxane
   - SMILES: CC1(C)CCCC(C1)C2(C)OCC(C)(C)CO2
   - Molecular weight: 240.39 g/mol
   - Volume (cubic ångströms): 258.88
   - Number of atoms (non-hydrogen): 17
   - miLogP: 4.43
   - Structure: [structure image]
   - Biological properties, predicted druglikeness [i]: GPCR ligand -0.23; ion channel modulator -0.03; kinase inhibitor -0.6; nuclear receptor ligand 0.15; protease inhibitor -0.28; enzyme inhibitor 0.15
   - Predicted olfactory receptor activity [ii]: OR2L13 83.715%; OR1G1 82.761%; OR10J5 80.569%; OR2W1 78.180%; OR7A2 77.696%
2. Commercial name: Sylvoxime©
   - IUPAC name: N-[4-(1-ethoxyethenyl)-3,3,5,5-tetramethylcyclohexylidene]hydroxylamine
   - SMILES: CCOC(=C)C1C(C)(C)CC(CC1(C)C)=NO
   - Molecular weight: 239.36 g/mol
   - Volume (cubic ångströms): 252.83
   - Number of atoms (non-hydrogen): 17
   - miLogP: 4.33
   - Structure: [structure image]
   - Biological properties, predicted druglikeness: GPCR ligand -0.6; ion channel modulator -0.41; kinase inhibitor -0.93; nuclear receptor ligand -0.17; protease inhibitor -0.39; enzyme inhibitor 0.01
   - Predicted olfactory receptor activity: OR52D1 71.900%; OR1G1 70.394%; OR52I2 70.392%; OR52I1 70.390%; OR2Y1 70.378%
3. Commercial name: Hyperflor©
   - IUPAC name: 2-benzyl-1,3-dioxan-5-one
   - SMILES: O=C1COC(CC2=CC=CC=C2)OC1
   - Molecular weight: 192.21 g/mol
   - Volume