The Tree Data Model

Recommended publications

-

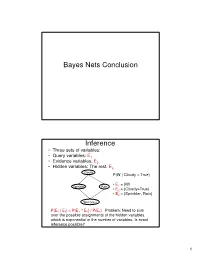

Bayes Nets Conclusion Inference

Bayes Nets Conclusion Inference

• Three sets of variables:
• Query variables: $E_1$
• Evidence variables: $E_2$
• Hidden variables: the rest, $E_3$

Example (network over Cloudy, Sprinkler, Rain, Wet Grass): for the query $P(W \mid \text{Cloudy} = \text{True})$,
• $E_1 = \{W\}$
• $E_2 = \{\text{Cloudy} = \text{True}\}$
• $E_3 = \{\text{Sprinkler}, \text{Rain}\}$

$$P(E_1 \mid E_2) = \frac{P(E_1 \wedge E_2)}{P(E_2)}$$

Problem: Need to sum over the possible assignments of the hidden variables, which is exponential in the number of variables. Is exact inference possible?

A Simple Case (network over A, B, C, D)

• Suppose that we want to compute $P(D = d)$ from this network.
• Compute $P(D = d)$ by summing the joint probability over all possible values of the remaining variables A, B, and C:

$$P(D = d) = \sum_{a,b,c} P(A = a, B = b, C = c, D = d)$$

• Decompose the joint by using the fact that it is the product of terms of the form $P(X \mid \mathrm{Parents}(X))$:

$$P(D = d) = \sum_{a,b,c} P(D = d \mid C = c)\, P(C = c \mid B = b)\, P(B = b \mid A = a)\, P(A = a)$$

• We can avoid computing the sum for all possible triplets (A, B, C) by distributing the sums inside the product:

$$P(D = d) = \sum_{c} P(D = d \mid C = c) \sum_{b} P(C = c \mid B = b) \sum_{a} P(B = b \mid A = a)\, P(A = a)$$

• The innermost term $\sum_{a} P(B = b \mid A = a)\, P(A = a)$ depends only on b and can be written as a 2-valued function $f_A(b)$.
• The term $\sum_{b} P(C = c \mid B = b)\, f_A(b)$ depends only on c and can be written as a 2-valued function $f_B(c)$:

$$P(D = d) = \sum_{c} P(D = d \mid C = c) \sum_{b} P(C = c \mid B = b)\, f_A(b)$$

…
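The summation trick in the excerpt above can be checked numerically. The following is a minimal sketch, assuming a chain-shaped network A → B → C → D with binary variables (this shape is what the factorization implies). The probability tables and the names `brute_force` and `eliminate` are hypothetical, chosen only for illustration.

```python
from itertools import product

# Hypothetical conditional probability tables for a chain A -> B -> C -> D.
# Each variable takes the values 0 or 1. Rows are indexed by the parent value.
p_a = [0.6, 0.4]                          # P(A = a)
p_b_given_a = [[0.7, 0.3], [0.2, 0.8]]    # P(B = b | A = a) as [a][b]
p_c_given_b = [[0.9, 0.1], [0.5, 0.5]]    # P(C = c | B = b) as [b][c]
p_d_given_c = [[0.8, 0.2], [0.3, 0.7]]    # P(D = d | C = c) as [c][d]


def brute_force(d):
    """Sum the full joint over every (a, b, c) triplet."""
    return sum(
        p_d_given_c[c][d] * p_c_given_b[b][c] * p_b_given_a[a][b] * p_a[a]
        for a, b, c in product(range(2), repeat=3)
    )


def eliminate(d):
    """Push each sum inside the product, eliminating A, then B, then C."""
    # f_A(b) = sum_a P(B = b | A = a) P(A = a)
    f_a = [sum(p_b_given_a[a][b] * p_a[a] for a in range(2)) for b in range(2)]
    # f_B(c) = sum_b P(C = c | B = b) f_A(b)
    f_b = [sum(p_c_given_b[b][c] * f_a[b] for b in range(2)) for c in range(2)]
    # P(D = d) = sum_c P(D = d | C = c) f_B(c)
    return sum(p_d_given_c[c][d] * f_b[c] for c in range(2))


for d in range(2):
    print(d, brute_force(d), eliminate(d))
```

Both functions return the same value for each d. The brute-force version enumerates all combinations of the hidden variables, while the elimination version does a small fixed amount of work per variable, which is the point the excerpt makes about avoiding the exponential sum on this chain.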

Disk Clone Industrial

Disk Clone Industrial USER MANUAL Ver. 1.0.0 Updated: 9 June 2020

Contents: Legal Statement; Introduction (Cloning Data, Erasing Confidential Data); Disk Clone Overview (System Requirements, Software Licensing, Software Updates); Getting Started (Disk Clone Installation and Distribution, Launching and Initial Configuration, Navigating Disk Clone)

Tree-Combined Trie: a Compressed Data Structure for Fast IP Address Lookup

(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 6, No. 12, 2015

Tree-Combined Trie: A Compressed Data Structure for Fast IP Address Lookup

Muhammad Tahir and Shakil Ahmed, Department of Computer Engineering, Sir Syed University of Engineering and Technology, Karachi

Abstract—For meeting the requirements of the high-speed Internet and satisfying the Internet users, building fast routers with high-speed IP address lookup engine is inevitable. Regarding the unpredictable variations occurred in the forwarding information during the time and space, the IP lookup algorithm should be able to customize itself with temporal and spatial conditions. This paper proposes a new dynamic data structure for fast IP address lookup. This novel data structure is a dynamic mixture of trees and tries which is called Tree-Combined Trie or simply TC-Trie. Binary sorted trees are more advantageous than tries for representing a sparse population while multibit tries have better performance than trees when a population is dense.

… impact their forwarding capacity. In order to resolve two main issues there are two possible solutions one is IPv6 IP addressing scheme and second is Classless Inter-domain Routing or CIDR.

Finding a high-speed, memory-efficient and scalable IP address lookup method has been a great challenge especially in the last decade (i.e. after introducing Classless Inter-Domain Routing, CIDR, in 1994). In this paper, we will discuss only CIDR. In addition to these desirable features, reconfigurability is also of great importance; true because different points of this huge heterogeneous structure of …

Heaps: A Heap Is a Complete Binary Tree. A Max-Heap Is a…

Heaps

A heap is a complete binary tree. A max-heap is a complete binary tree in which the value in each internal node is greater than or equal to the values in the children of that node. A min-heap is defined similarly.

Mapping the elements of a heap into an array is trivial: if a node is stored at index k, then its left child is stored at index 2k+1 and its right child at index 2k+2.

Example array (indices 0 to 11): 97 93 84 90 79 83 81 42 55 73 21 83

CS@VT Data Structures & Algorithms ©2000-2009 McQuain

Building a Heap

The fact that a heap is a complete binary tree allows it to be efficiently represented using a simple array. Given an array of N values, a heap containing those values can be built, in situ, by simply “sifting” each internal node down to its proper location:
- start with the last internal node
- swap the current internal node with its larger child, if necessary
- then follow the swapped node down
- continue until all internal nodes are done

[Figure: step-by-step sift-down on an example tree holding the values 73, 74, 81, 79, 90, 93]

Heap Class Interface

We will consider a somewhat minimal maxheap class:

public class BinaryHeap<T extends Comparable<? super T>> {
private static final int DEFCAP = 10; // default array size
private int size; // # elems in array
private T [] elems; // array of elems
public BinaryHeap() { …
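The array mapping and the in-place build described in the heap excerpt above can be sketched as follows. This is an illustrative Python version, not the `BinaryHeap` class from the excerpt; the function names `sift_down`, `build_max_heap`, and `is_max_heap` are assumptions made for this sketch.

```python
def sift_down(a, k, n):
    """Move a[k] down until neither child (within the first n items) is larger."""
    while True:
        left = 2 * k + 1          # left child index, as in the excerpt
        if left >= n:
            return                # a[k] is a leaf
        right = left + 1          # right child index, 2k + 2
        larger = left
        if right < n and a[right] > a[left]:
            larger = right
        if a[k] >= a[larger]:
            return                # heap property already holds here
        a[k], a[larger] = a[larger], a[k]
        k = larger                # follow the swapped node down


def build_max_heap(a):
    """Build a max-heap in place, starting with the last internal node."""
    n = len(a)
    for k in range(n // 2 - 1, -1, -1):
        sift_down(a, k, n)


def is_max_heap(a):
    """Check that every node is >= each of its children."""
    n = len(a)
    return all(
        a[k] >= a[c]
        for k in range(n)
        for c in (2 * k + 1, 2 * k + 2)
        if c < n
    )


values = [73, 74, 81, 79, 90, 93]
build_max_heap(values)
print(values, is_max_heap(values))
```

The last internal node sits at index `n // 2 - 1` because every index from `n // 2` onward has no left child inside the array.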

Tree Structures

Tree Structures

Definitions:
o A tree is a connected acyclic graph.
o A disconnected acyclic graph is called a forest.
o A tree is a connected digraph with these properties:
  . There is exactly one node (Root) with in-degree = 0
  . All other nodes have in-degree = 1
  . A leaf is a node with out-degree = 0
  . There is exactly one path from the root to any leaf
o The degree of a tree is the maximum out-degree of the nodes in the tree.
o If (X,Y) is a path: X is an ancestor of Y, and Y is a descendant of X.

[Figure: a tree with root and nodes X and Y marked]

CSci 1112 – Algorithms and Data Structures, A. Bellaachia

Level of a node:

[Figure: tree levels, numbered from either 0 or 1 at the root]

Height or depth:
o The depth of a node is the number of edges from the root to the node.
o The root node has depth zero.
o The height of a node is the number of edges from the node to the deepest leaf.
o The height of a tree is the height of its root.
o Leaf nodes have height zero.
o A tree with only a single node (hence both a root and leaf) has depth and height zero.
o An empty tree (tree with no nodes) has depth and height −1.
o The height of a tree is the maximum level of any node in the tree.
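The depth, height, and degree definitions in the excerpt above translate directly into short recursive functions. The sketch below is illustrative only; the `Node` class and the function names are assumptions introduced here, and an empty tree is represented by `None`.

```python
class Node:
    """A rooted tree node with an ordered list of children."""

    def __init__(self, label, children=None):
        self.label = label
        self.children = children or []


def height(node):
    """Edges on the longest path down to a leaf; -1 for an empty tree."""
    if node is None:
        return -1
    # A leaf has no children, so max() falls back to -1 and the result is 0.
    return 1 + max((height(child) for child in node.children), default=-1)


def depths(root):
    """Map each label to its depth (number of edges from the root)."""
    result = {}
    stack = [(root, 0)] if root is not None else []
    while stack:
        node, depth = stack.pop()
        result[node.label] = depth
        stack.extend((child, depth + 1) for child in node.children)
    return result


def degree(root):
    """Maximum out-degree over all nodes of the tree (0 for an empty tree)."""
    if root is None:
        return 0
    return max([len(root.children)] + [degree(c) for c in root.children])


tree = Node("Root", [Node("X", [Node("Y")]), Node("Z")])
print(height(tree), depths(tree), degree(tree))
```

With levels numbered from 0 at the root, the height returned for the root equals the largest depth in `depths(tree)`, which matches the last definition in the list.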

A Simple Linear-Time Algorithm for Finding Path-Decompositions of Small Width

A Simple Linear-Time Algorithm for Finding Path-Decompositions of Small Width

Kevin Cattell, Michael J. Dinneen, Michael R. Fellows. Department of Computer Science, University of Victoria, Victoria, B.C., Canada V8W 3P6. Information Processing Letters 57 (1996) 197–203.

Outline
1 Introduction: Motivation; History
2 Preliminary Definitions: Boundaried graphs; Path-decompositions; Topological tree obstructions
3 Pathwidth Algorithm: Main result; Linear-time algorithm; Proof of correctness; Other results

Motivation

Pathwidth is related to several VLSI layout problems:
• vertex separation
• link gate matrix layout
• edge search number
• ...

Usefulness of bounded treewidth in:
• study of graph minors (Robertson and Seymour)
• input restrictions for many NP-complete problems (fixed-parameter complexity)

History

General problem(s) is NP-complete. Input: Graph G, integer t. Question: Is tree/path-width(G) ≤ t?

Algorithmic development (fixed t):
• $O(n^2)$ nonconstructive treewidth algorithm by Robertson and Seymour (1986)
• $O(n^{t+2})$ treewidth algorithm due to Arnborg, Corneil and Proskurowski (1987)
• $O(n \log n)$ treewidth algorithm due to Reed (1992)
• $O(2^{t^2} n)$ treewidth algorithm due to Bodlaender (1993)
• $O(n \log^2 n)$ pathwidth algorithm due to Ellis, Sudborough and Turner (1994)

Forest Quickstart Guide for Linguists

Forest Quickstart Guide for Linguists

Guido Vanden Wyngaerd [email protected] June 28, 2020

Contents: 1 Introduction; 2 Loading Forest; 3 Basic Usage; 4 Adjusting node spacing; 5 Triangles; 6 Unlabelled nodes; 7 Horizontal alignment of terminals; 8 Arrows; 9 Highlighting

1 Introduction

Forest is a package for drawing linguistic (and other) tree diagrams developed by Sašo Živanović. This manual provides a quickstart guide for linguists with just the essential things that you need to get started. More extensive documentation is available from the CTAN-archive. Forest is based on the TikZ package; more information about its commands, in particular those controlling the appearance of the nodes, the arrows, and the highlighting can be found in the TikZ documentation.

2 Loading Forest

In your preamble, put

\usepackage[linguistics]{forest}

The linguistics option makes for nice trees, in which the branches meet above the two nodes that they join; it will also align the example number (provided by linguex) with the top of the tree:

(1) [Tree diagram: CP branches to C and IP; IP branches to I and VP; VP branches to V and NP]

3 Basic Usage

Forest uses a familiar labelled brackets syntax. The code below will output the tree in (1) above (\ex. requires the linguex package and provides the example number):

\ex. \begin{forest} [CP[C][IP[I][VP[V][NP]]]] \end{forest}

Forest will parse the above code without problem, but you are likely to soon get lost in your labelled brackets with more complicated trees if you write the code this way. The better alternative is to arrange the nodes over multiple lines:

\ex. …

Metadata for Everyone a Simple, Low-Cost Methodology Timothy D

SAS Global Forum 2008, Data Integration, Paper 138-2008

Metadata for Everyone: A Simple, Low-Cost Methodology

Timothy D. Brown, Altoona, IA

ABSTRACT

In the context of Base SAS® programming, this paper uses “hardcoded” values as an introduction to “metadata” and the reasons for using it. It then describes a low cost and simple methodology for maintaining any kind of metadata.

INTRODUCTION

This discussion will take an indirect approach to defining metadata. It’ll describe the metadata which might be included, or hard-coded, in a Base SAS program and propose alternatives to storing and using the metadata. Outside of programs “data” and “code” are distinct. However within programs, the distinction gets blurred when data values, called “hardcoded” data, are included within the code. Hardcoded values include, but are not limited to:
• Text constants, literals
• Names of companies, people, organizations and places
• Directory paths and file names
• Parameters on SAS procedures such as WHERE, KEEP, DROP, RENAME, VARS, BY etc.
• Numeric constants including dates*
• Statistical constants
• Period begin and end dates
• Mixed text and numeric values
• Expressions in IF and WHERE clauses
• What-if scenarios

(* excluding dates which are derived logically using SAS functions such as TODAY(), DATETIME(), INTNX and NXTPD)

In addition, many small conversion, cross-reference and look-up tables, which might be hardcoded as SAS formats or read into a program from many different sources, work well as metadata and fit into this framework. Obviously, some hardcoded values might never change in the life of a program. So it might be prudent to leave some hardcoded values in the code.

Binary Search Tree

ADT Binary Search Tree

Ellen Walker, CPSC 201 Data Structures, Hiram College

Binary Search Tree
• Value-based storage of information
  – Data is stored in order
  – Data can be retrieved by value efficiently
• Is a binary tree
  – Everything in left subtree is < root
  – Everything in right subtree is > root
  – Both left and right subtrees are also BSTs

Operations on BST
• Some can be inherited from binary tree
  – Constructor (for empty tree)
  – Inorder, Preorder, and Postorder traversal
• Some must be defined
  – Insert item
  – Delete item
  – Retrieve item

The Node<E> Class
• Just as for a linked list, a node consists of a data part and links to successor nodes
• The data part is a reference to type E
• A binary tree node must have links to both its left and right subtrees

The BinaryTree<E> Class

Overview of a Binary Search Tree
• Binary search tree definition
  – A set of nodes T is a binary search tree if either of the following is true
    • T is empty
    • Its root has two subtrees such that each is a binary search tree and the value in the root is greater than all values of the left subtree but less than all values in the right subtree

Searching a Binary Tree

Class TreeSet and Interface Search Tree

BinarySearchTree Class

BST Algorithms
• Search
• Insert
• Delete
• Print values in order
  – We already know this, it's inorder traversal
  – That's why it's called “in order”

Searching the Binary Tree
• If the tree is …
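The binary search tree definition and the search, insert, and in-order operations listed in the excerpt above can be sketched as follows. This is an illustrative Python version, not the `Node<E>` or `BinarySearchTree` classes the slides refer to; the names `BSTNode`, `insert`, `contains`, and `inorder` are assumptions, and ignoring duplicate values is a choice made here because the definition uses strict inequalities.

```python
class BSTNode:
    """A node with a data part and links to its left and right subtrees."""

    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def insert(root, value):
    """Insert value and return the (possibly new) root of the subtree."""
    if root is None:
        return BSTNode(value)
    if value < root.value:
        root.left = insert(root.left, value)
    elif value > root.value:
        root.right = insert(root.right, value)
    # Equal values are ignored so that left < root < right always holds.
    return root


def contains(root, value):
    """Search by value, going left or right at each node."""
    while root is not None:
        if value == root.value:
            return True
        root = root.left if value < root.value else root.right
    return False


def inorder(root):
    """Left subtree, then root, then right subtree: values come out sorted."""
    if root is None:
        return []
    return inorder(root.left) + [root.value] + inorder(root.right)


root = None
for v in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, v)
print(inorder(root), contains(root, 60), contains(root, 65))
```

Deletion is omitted to keep the sketch short; it needs extra handling for a node that has two children.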

Four Ways to Reorder Your Variables, Ranked by Elegance and Efficiency Louise S

Order, Order! Four Ways To Reorder Your Variables, Ranked by Elegance and Efficiency Louise S. Hadden, Abt Associates Inc. ABSTRACT SAS® practitioners are frequently required to present variables in an output data file in a particular order, or standards may require variables in a production data file to be in a particular order. This paper and presentation offer several methods for reordering variables in a data file, encompassing both DATA step and procedural methods. Relative efficiency and elegance of the solutions will be discussed. INTRODUCTION SAS provides us with numerous methods to control all types of SAS output, including SAS data files, data tables in other formats, and ODS output. This paper focuses solely on output SAS data files (which may then be used to generate SAS data files and other types of output from SAS processes), and specifically on DATA step and PROC SQL methods. This short paper and presentation is suitable for all SAS practitioners at all levels of expertise. Attendees will gain a greater understanding of the processes by which SAS assigns variable attributes, including variable/column order within a data file, and how to obtain information on variable attributes – and in the process, learn how to reorder variables within a SAS data file. KNOW THY DATA It is always important to understand fully and explore the inputs to SAS-created output. SAS has provided users with a variety of possibilities in terms of determining locations of variables or columns (and other important details comprising metadata). These possibilities include, but are not limited to: 1. The CONTENTS Procedure 2. -

6.172 Lecture 19 : Cache-Oblivious B-Tree (Tokudb)

How TokuDB Fractal Tree™ Indexes Work

Bradley C. Kuszmaul. Guest Lecture in MIT 6.172 Performance Engineering, 18 November 2010.

6.172 - How Fractal Trees Work

My Background
• I’m an MIT alum: MIT Degrees = 2 × S.B. + S.M. + Ph.D.
• I was a principal architect of the Connection Machine CM-5 supercomputer at Thinking Machines.
• I was Assistant Professor at Yale.
• I was at Akamai working on network mapping and billing.
• I am research faculty in the SuperTech group, working with Charles.

Tokutek

A few years ago I started collaborating with Michael Bender and Martin Farach-Colton on how to store data on disk to achieve high performance. We started Tokutek to commercialize the research.

I/O is a Big Bottleneck

Systems include sensors and storage, and want to perform queries on recent data.

[Figure: sensors feed a disk that serves queries. Millions of data elements arrive per second. Query recently arrived data using indexes.]

The Data Indexing Problem
• Data arrives in one order (say, sorted by the time of the observation).
• Data is queried in another order (say, by URL or location).

Why Not Simply Sort?
• This is what data warehouses do.
• The problem is that you must wait to sort the data before querying it: typically an overnight delay.

[Figure: Data Sorted by Time, then Sort, then Data Sorted by URL]

Lowest Common Ancestors in Trees and Directed Acyclic Graphs

Lowest Common Ancestors in Trees and Directed Acyclic Graphs

Michael A. Bender, Martín Farach-Colton, Giridhar Pemmasani, Steven Skiena, Pavel Sumazin

We study the problem of finding lowest common ancestors (LCA) in trees and directed acyclic graphs (DAGs). Specifically, we extend the LCA problem to DAGs and study the LCA variants that arise in this general setting. We begin with a clear exposition of Berkman and Vishkin’s simple optimal algorithm for LCA in trees. The ideas presented are not novel theoretical contributions, but they lay the foundation for our work on LCA problems in DAGs. We present an algorithm that finds all-pairs-representative LCA in DAGs in $\tilde{O}(n^{2.688})$ operations, provide a transitive-closure lower bound for the all-pairs-representative-LCA problem, and develop an LCA-existence algorithm that preprocesses the DAG in transitive-closure time. We also present a suboptimal but practical $O(n^3)$ algorithm for all-pairs-representative LCA in DAGs that uses ideas from the optimal algorithms in trees and DAGs. Our results reveal a close relationship between the LCA, all-pairs-shortest-path, and transitive-closure problems. We conclude the paper with a short experimental study of LCA algorithms in trees and DAGs. Our experiments and source code demonstrate the elegance of the preprocessing-query algorithms for LCA in trees. We show that for most trees the suboptimal $\Theta(n \log n)$-preprocessing $\Theta(1)$-query algorithm should be preferred, and demonstrate that our proposed $O(n^3)$ algorithm for all-pairs-representative LCA in DAGs performs well in both low and high density DAGs.