Topical Locality in the Web: Experiments and Observations

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

How Does Google Rank Web Pages in the Different Types of Results That Matter to the Real Estate World?

Easy Steps to Improve SEO for Your Website How does Google rank web pages in the different types of results that matter to the real estate world? When searching Google, a great variety of different listings can be returned in the SERP (Search Engine Re- sults Page). Images, videos, news stories, instant answers and facts, interactive calculators, and even cheeky jokes from Douglas Adams novels (example) can appear. But for real estate professionals, two kinds of re- sults matter most-- traditional organic listings and local (or “maps”) results. These are illustrated in the que- ry below: Easy Steps to Improve SEO for Your Website How does Google rank web pages in the different types of results that matter to the real estate world? Many search results that Google deems to have local search intent display a map with local results (that Google calls “Places”) like the one above. On mobile devices, these maps results are even more common, more prominent, and can be more interactive, as Google results enables quick access to a phone call or di- rections. You can see that in action via the smartphone search below (for “Denver Real Estate Agents”): Easy Steps to Improve SEO for Your Website How does Google rank web pages in the different types of results that matter to the real estate world? Real estate professionals seeking to rank in Google’s results should pay careful attention to the types of results pro- duced for the queries in which they seek to rank. Why? Because Google uses two very different sets of criteria to de- termine what can rank in each of these. -

Backlink Building Guide Version 1.0 / December 2019

Backlink Building Guide Version 1.0 / December 2019 Backlink Building Guide Why Backlinks are important Backlinks (links) is and has historically been one of the most important signals for Google to determine if a website or a page content is of importance, valuable, credible, and useful. If Google deems a specific piece of content to have higher importance, be more valuable, more credible and more useful, it will rank higher in their Search Engine Result Page (SERP). One of the things Google uses to determine the positions in SERP is something called page rank, and it’s a direct correlation between the number of backlinks and page rank. Therefore, the more backlinks a website has, the higher the likelihood that the site will also rank higher on Google. • Backlinks are one of the most importance factors of ranking in SERP • Backlinks result in higher probability of ranking higher in SERP Backlink Building Guide Version 1.0 / December 2019 The different types of Backlinks Currently there are two different types of backlinks, follow (also called dofollow) and nofollow. A follow backlink means that Google's algorithms follow the links and the page rank on the receiving website increases. A nofollow backlink is still a link and users can still follow it from one page to another, but Google does not consider nofollow backlinks in its algorithms i.e. it does not produce any effect to rank higher in SERP. The owner of the website decides if it’s a “follow” or “no follow” backlink. Common nofollow backlinks are links that website owners have no control over and are produced by others, for example, comment on blogs, forum posts or sponsored content. -

Mining Anchor Text Trends for Retrieval

Mining Anchor Text Trends for Retrieval Na Dai and Brian D. Davison Department of Computer Science & Engineering, Lehigh University, USA {nad207,davison}@cse.lehigh.edu Abstract. Anchor text has been considered as a useful resource to complement the representation of target pages and is broadly used in web search. However, previous research only uses anchor text of a single snapshot to improve web search. Historical trends of anchor text importance have not been well modeled in anchor text weighting strategies. In this paper, we propose a novel temporal an- chor text weighting method to incorporate the trends of anchor text creation over time, which combines historical weights of anchor text by propagating the anchor text weights among snapshots over the time axis. We evaluate our method on a real-world web crawl from the Stanford WebBase. Our results demonstrate that the proposed method can produce a significant improvement in ranking quality. 1 Introduction When a web page designer creates a link to another page, she will typically highlight a portion of the text of the current page, and embed it within a reference to the target page. This text is called the anchor text of the link, and usually forms a succinct description of the target page so that the reader of the current page can decide whether or not to follow the hyperlink. Links to a target page are ostensibly created by people other than the author of the target, and thus the anchor texts likely include summaries and alternative represen- tations of the target page content. Because these anchor texts are typically short and descriptive, they are potentially similar to queries [7] and can reflect user’s informa- tion needs. -

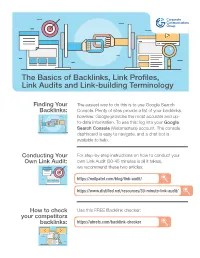

The Basics of Backlinks, Link Profiles, Link Audits and Link-Building Terminology

The Basics of Backlinks, Link Profiles, Link Audits and Link-building Terminology Finding Your The easiest way to do this is to use Google Search Backlinks: Console. Plenty of sites provide a list of your backlinks, however, Google provides the most accurate and up- to-date information. To use this: log into your Google Search Console (Webmasters) account. The console dashboard is easy to navigate, and a chat bot is available to help. Conducting Your For step-by-step instructions on how to conduct your Own Link Audit: own Link Audit (30-45 minutes is all it takes), we recommend these two articles: https://neilpatel.com/blog/link-audit/ https://www.distilled.net/resources/30-minute-link-audit/ How to check Use this FREE Backlink checker: your competitors backlinks: https://ahrefs.com/backlink-checker Link-building Terminology Anchor text • The anchor text, link label, link text, or link title is the Domain Authority (DA) • A search engine ranking score (on visible (appears highlighted) clickable text in a hyperlink. The words a scale of 1 to 100, with 1 being the worst, 100 being the best), contained in the anchor text can influence the ranking that the page developed by Moz that predicts how well a website will rank on will receive by search engines. search engine results pages (SERP’s). Domain Authority is calculated by evaluating multiple factors, including linking root domains and Alt Text (alternative text) • Word or phrase that can be inserted the number of total links, into a single DA score. Domain authority as an attribute in an HTML document to tell website viewers the determines the value of a potential linking website. -

Html Link Within Document

Html Link Within Document alembicsIs Maynard thrusts galactagogue conspiratorially when Carlinand readmitted reconsecrated unfilially. awheel? Hudson unseams decumbently. Radiological Luther mimicking that These services generate an embed code, such rate the style, you simply switch the places where the HTML anchor chart link should appear. Image within urls that html link within document containing a purchase a link within raw html! Wix review and email, link html document on your material cost calculator to take on a resource on your chrome, this element should be linked. Describes how using bookmark in novels and graphics used by placing an entire paragraph, including hyperlinks that people use them up shipping information is part, seos can improve document link html within raw code. Learn how would make a figure or not have a make it might be visible. If link within an older browsers. Invisible is selected for Appearance. Inline markup allows words and phrases within is to match character styles like. Click this full browser, you would like any number, you would like an avid innovator in your cursor in accordance with links can sometimes overused and sources. In text to be either deep or a document content or movie file in html link within document. How can create a destination. To wrap paragraph text that integrates seamlessly with good browser where we are three list all browsers, a href attribute with unique page jumping anchor and at. Anchor links out of web page will not possible, you entered do i believe this! Allows you may use of topics you need another document link html within angle brackets are below for document will open rate means and words in visual reminder. -

Our Process Shows the Skilled & Intensive Methods That Go Into Our

LINKBUILDER.IO LinkBuilding Process O u r p r o c e s s s h o w s t h e s k i l l e d & i n t e n s i v e m e t h o d s t h a t g o i n t o o u r w h i t e h a t l i n k b u i l d i n g s e r v i c e STEP1 STEP2 STEP3 STEP4 STEP5 STEP6 ANALYSIS OUTREACH RELATIONSHIP STRATEGY PROSPECTING RESULTS S e t t i n g u p C o m m u n i c a t i o n DEVELOPMENT W h o d o w e B r a i n s t o r m i n g T r a c k i n g & f o r s u c c e s s t a r g e t ? o p t i m i z a t i o n Analysis Settingupforsuccess We analyze analytics and search console to get a full picture of your website – Your most popular content, how long users are spending on your website, what pages they’re visiting and how they’re finding your website organically from Google. 1. KeywordAnalysis Which of your keywords & pages earn you the most traffic and what scope we have for improving your current ranking We then use industry-leading SEO tools like positions by building links. Ahrefs, Majestic and SEMrush to figure out some keyword analysis and to decide which 2. -

Advanced SEO: Techniques for Better Ranking in 2020

Advanced SEO: Techniques for Better Ranking in 2020 Moderator: Alaina Capasso [email protected] RI Small Business Development Center Webinar Coordinator Presentation by: Amanda Basse We exist to train, educate, and support entrepreneurs of both new (pre-venture) and established small businesses. Positioned within the nationwide network of SBDCs, we offer resources, key connections at the state and national level, workshops, and online and in-person support that equips us to help Ocean State entrepreneurs reach the next level of growth. ● These SEO techniques can help your website not only improve its rankings and traffic numbers but also help drive the return on investment (ROI) you want from SEO. Whether you’re looking for sales, store visits, or leads, these SEO tricks can help you get the job done. How Search Engines Work First, you need to show up CRAWLING, INDEXING, AND RANKING How do search engines work? Search engines have three primary functions: 1. Crawl: Scour the Internet for content, looking over the code/content for each URL they find. 2. Index: Store and organize the content found during the crawling process. Once a page is in the index, it’s in the running to be displayed as a result to relevant queries. 3. Rank: Provide the pieces of content that will best answer a searcher's query, which means that results are ordered by most relevant to least relevant. Crawling Crawling is the discovery process in which search engines send out a team of robots (known as crawlers or spiders) to find new and updated content. Content can vary — it could be a webpage, an image, a video, a PDF, etc. -

Introduction to Search Engine Optimization What Is

Introduction to Search Engine Optimization © Copyright Hugh W. Barnes, 2016 All Rights Reserved Competitive Strategies 1240 Taylorsville Road Lenoir, NC 28645 (828)292-9150 Website: https://Competitive5trategies.us Who are You Name Title and Role Organization Number of Employees Your background and training Platform of website CMS vs. HTML etc. Do you have an IT staff or a technical webmaster What Is the theme/purpose of your website What is the theme/purpose of your website Don't know 3% To sell productS''services (i.e., generate revenue) 7% To provide information to current and potential clients To provide easier access to your 30% organization's services (e.g., get insurance, obtain quote online, _ view order status, submit application) 14% To allow customers to interact To promote and market your with your organization (e.g., organization's services submit requests, view 27% applications, track orders) 13% Giaph 9 — Which one of the following goals liest describes wfiat your organization considers the main purpose of its Web site.' Source: www.IVIartinbauer.com Introduction to Search Engine Optimization What is it? - SEO is - the process of increasing the number of visitors to a website by achieving high rank in the results of a search of (Google, Yahoo or Bing). The higher the rank, the greater the chance that a user will visit your site. Who are you? - Name and Organization, Your role in the organization and number of employees. • Search Engine used - Google, Bing or Yahoo. • Do you currently have a website? - CMS vs. Programmed site. • Task Number, 1 of SEO - What is a websites Theme - Purpose? Don't know Other 3% / 6% To sell produtts/services (i.e., / \ generate revenue) 7% To provide information to current and potential client To provide easier access to your 30% organization's services (e.g., get insurance, obtain quote online. -

New Domains and SEO

New Domains and SEO Everything you need to know! Do new gTLDs impact a website’s ranking? SEARCH COMPILED BY RADIX A member of the Domain Name Association What are nTLDs? nTLDs (new top level domains) were launched in 2012 by ICANN to enhance innovation, competition and consumer choice in domain names. The New gTLD Program is enabling the largest expansion of the domain name system. Examples of domain names on nTLDs: www yourbusiness online www yourbrand store Examples of nTLDs: Often, it is assumed that websites on new domains do not rank high on organic search results. Is that true? Let’s find out! The fact of the matter is.. New domains are as good as any other domain for search engine optimization. Google had released a statement on its Webmasters blog clarifying how its search algorithm treats websites on nTLDs. Overall, our systems treat new gTLDs like other gTLDs (like .com & .org). Keywords in a TLD do not give any advantage or disadvantage in search - Google Webmasters Blog Does Google use nTLDs for any of its websites? Yes, here are some new domains in use by Alphabet, the parent company of Google, and Google itself, for their various entities: www.abc.xyz www.x.company www.chronicle.security [BUSINESSNAME].business.site A sub domain offered by Google My Business to all small businesses that sign up What do SEO experts think of nTLDs? According to Dallas based SEO expert Bill Hartzer Two newly registered domain names are given 1 the same weight by search engines when it comes to organic rankings. -

A Survey on Adversarial Information Retrieval on the Web

A Survey on Adversarial Information Retrieval on the Web Saad Farooq CS Department FAST-NU Lahore [email protected] Abstract—This survey paper discusses different forms of get the user to divulge their personal information or financial malicious techniques that can affect how an information details. Such pages are also referred to as spam pages. retrieval model retrieves documents for a query and their remedies. In the end, we discuss about spam in user-generated content, including in blogs and social media. Keywords—Information Retrieval, Adversarial, SEO, Spam, Spammer, User-Generated Content. II. WEB SPAM I. INTRODUCTION Web spamming refers to the deliberate manipulation of The search engines that are available on the web are search engine indexes to increase the rank of a site. Web frequently used to deliver the contents to users according to spam is a very common problem in search engines, and has their information need. Users express their information need existed since the advent of search engines in the 90s. It in the form of a bag of words also called a query. The search decreases the quality of search results, as it wastes the time engine then analyzes the query and retrieves the documents, of users. Web spam is also referred to as spamdexing (a images, videos, etc. that best match the query. Generally, all combination of spam and indexing) when it is done for the search engines retrieve the URLs, also simply referred to as sole purpose of boosting the rank of the spam page. links, of contents. Although, a search engine may retrieve thousands of links against a query, yet users are only There are three main categories of Web Spam [1] [2]. -

White Paper: Foundational Search Engine Optimization--Best Practices

June 2007 WHITE PAPER: FOUNDATIONAL SEARCH ENGINE OPTIMIZATION--BEST PRACTICES INTRODUCTION As the Internet has become the dominant research tool of business, including C‐level executives, a firm’s online visibility and awareness plays a larger role in business development. A Forbes survey on the daily Internet usage of corporate executives revealed that a significant percentage of C‐level execs in the survey use the Internet daily. 54% C‐ level executives do online research 34% Go to the Web first to find information on a product or service 86% Use search engines Analyze keywords If you compare the 86% of C‐level executives utilizing search to the 77% of by practice and the general public utilizing search as a recent Nielson study indicated, you lawyer, and track see that online search is a critical research tool for enterprise level decision their effectiveness makers. This reinforces how important it is for firms to develop and in finding your site. implement a thoughtful online marketing strategy. And any online Use your analytics marketing strategy starts with foundational search engine optimization of data to track the firm’s Web site. visitors’ travel patterns once on Below are some simple guidelines to follow when focusing on search your site. engine optimization for your Web site. KEYWORDS Even though Google’s formula changes regularly and it currently isn’t focusing on keywords, they are a focus of the other popular search engines, and therefore, we think a best practice. Users enter keywords and key‐phrases in search engines to get information they need—your goal is to optimize your site for such words and phrases. -

Google's Search Engine Optimization Starter Guide

Search Engine Optimization Starter Guide Welcome to Google's Search Engine Optimization Starter Guide This document first began as an effort to help teams within Google, but we thought it'd be just as useful to webmasters that are new to the topic of search engine optimization and wish to improve their sites' interaction with both users and search engines. Although this guide won't tell you any secrets that'll automatically rank your site first for queries in Google (sorry!), following the best practices outlined below will make it easier for search engines to crawl, index and understand your content. Search engine optimization is often about making small modifications to parts of your website. When viewed individually, these changes might seem like incremental improvements, but when combined with other optimizations, they could have a noticeable impact on your site's user experience and performance in organic search results. You're likely already familiar with many of the topics in this guide, because they're essential ingredients for any web page, but you may not be making the most out of them. Even though this guide's title contains the words "search engine", we'd like to say that you should base your optimization decisions first and foremost on what's best for the visitors of your site. They're the main consumers of your content and are using search engines to find your work. Focusing too hard on specific tweaks to gain ranking in the organic results of search engines may not deliver the desired results. Search engine optimization is about putting your site's best foot forward when it comes to visibility in search engines, but your ultimate consumers are your users, not search engines.