Understanding Streaming Music Popularity Using Machine Learning Models

Recommended publications

Performed Identities: Heavy Metal Musicians Between 1984 and 1991

PERFORMED IDENTITIES: HEAVY METAL MUSICIANS BETWEEN 1984 AND 1991 Bradley C. Klypchak A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2007 Committee: Dr. Jeffrey A. Brown, Advisor Dr. John Makay Graduate Faculty Representative Dr. Ron E. Shields Dr. Don McQuarie © 2007 Bradley C. Klypchak All Rights Reserved ABSTRACT Dr. Jeffrey A. Brown, Advisor Between 1984 and 1991, heavy metal became one of the most publicly popular and commercially successful rock music subgenres. The focus of this dissertation is to explore the following research questions: How did the subculture of heavy metal music between 1984 and 1991 evolve and what meanings can be derived from this ongoing process? How did the contextual circumstances surrounding heavy metal music during this period impact the performative choices exhibited by artists, and from a position of retrospection, what lasting significance does this particular era of heavy metal merit today? A textual analysis of metal-related materials fostered the development of themes relating to the selective choices made and performances enacted by metal artists. These themes were then considered in terms of gender, sexuality, race, and age constructions as well as the ongoing negotiations of the metal artist within multiple performative realms. Occurring at the juncture of art and commerce, heavy metal music is a purposeful construction. Metal musicians made performative choices for serving particular aims, be it fame, wealth, or art. These same individuals worked within a greater system of influence. Metal bands were the contracted employees of record labels whose own corporate aims needed to be recognized.

Special Issue

ISSUE 750 / 19 OCTOBER 2017 15 TOP 5 MUST-READ ARTICLES record of the week } Post Malone scored Leave A Light On Billboard Hot 100 No. 1 with “sneaky” Tom Walker YouTube scheme. Relentless Records (Fader) out now Tom Walker is enjoying a meteoric rise. His new single Leave } Spotify moves A Light On, released last Friday, is a brilliant emotional piano to formalise pitch led song which builds to a crescendo of skittering drums and process for slots in pitched-up synths. Co-written and produced by Steve Mac 1 as part of the Brit List. Streaming support is big too, with top CONTENTS its Browse section. (Ed Sheeran, Clean Bandit, P!nk, Rita Ora, Liam Payne), we placement on Spotify, Apple and others helping to generate (MusicAlly) love the deliberate sense of space and depth within the mix over 50 million plays across his repertoire so far. Active on which allows Tom’s powerful vocals to resonate with strength. the road, he is currently supporting The Script in the US and P2 Editorial: Paul Scaife, } Universal Music Support for the Glasgow-born, Manchester-raised singer has will embark on an eight date UK headline tour next month RotD at 15 years announces been building all year with TV performances at Glastonbury including a London show at The Garage on 29 November P8 Special feature: ‘accelerator Treehouse on BBC2 and on the Today Show in the US. before hotfooting across Europe with Hurts. With the quality Happy Birthday engagement network’. Recent press includes Sunday Times Culture “Breaking Act”, of this single, Tom’s on the edge of the big time and we’re Record of the Day! (PRNewswire) The Sun (Bizarre), Pigeons & Planes, Clash, Shortlist and certain to see him in the mix for Brits Critics’ Choice for 2018. -

2020 China Country Profile

1 China Music Industry Development Report COUNTRY China: statistics PROFILE MARKET PROFILE claiming that China’s digital music business 13.08.20 ❱China increased by 5.5% from 2017 to 2018 while the number of digital music users exceeded Population... 1.4bn 550m, a jump of 5.1% year-on-year. GDP (purchasing power parity)... $25.36tn GDP per capita (PPP)... $18,200 What, exactly, these digital music users are doing is still not entirely clear. Streaming, as 904m Internet users... you might imagine, dominates digital music Broadband connections... 407.39m consumption in China. The divide, however, Broadband - subscriptions per 100 inhabitants... 29 between ad-supported and paid users is opaque at best. The IFPI, for example, reports Mobile phone subscriptions... 1.65bn that revenue from ad-supported streaming Smartphone users... 781.7m is greater than that of subscription in China Sources: CIA World Factbook/South China Morning Post/Statista – a claim that was met with some surprise by some local Music Ally sources. “For most of the platforms, subscription income is still the largest contributor in the digital music market,” says a representative of NetEase A lack of reliable figures makes China a Cloud Music. difficult music market to understand. Even so, Simon Robson, president, Asia Region, its digital potential is vast. Warner Music, says that the ad-supported China basically lives model isn’t really set up to make money in China but is instead designed to drive traffic CHINA’S GROWING IMPORTANCE as a service NetEase Cloud Music, warned inside WeChat now... to the service. “Within China, the GDP is so digital music market is perhaps only matched that IFPI numbers for China were largely we fix problems for the different from city to city that it becomes by a fundamental lack of understanding based on advances; that means they may difficult to set standard pricing per month that exists about the country. -

Issue Date: March 22nd 2021

Issue Date: March 22nd 2021
# lw bp woc tfo artist single
1 2 1 9 9 THE WEEKND SAVE YOUR TEARS theweeknd.com. ***back to #1***/***5 weeks at #1*** album: After Hours (Republic - Universal Music)
2 3 2 10 7 TIESTO FEAT. TY DOLLA $IGN THE BUSINESS www.tiesto.com single (Atlantic - Dancing Bear)
3 1 1 16 16 AVA MAX MY HEAD & MY HEART avamax.com album: Heaven & Hell (Atlantic - Dancing Bear)
4 5 1 11 11 ED SHEERAN AFTERGLOW edsheeran.com single (Asylum - Dancing Bear)
5 4 4 5 5 SIA WITH DAVID GUETTA FLOATING THROUGH SPACE www.siamusic.net album: Music - Songs from and Inspired by the Motion Picture (Atlantic - Dancing Bear)
6 7 6 8 8 ATB X TOPIC X A7S YOUR LOVE (9PM) atb-music.com album: Positions (Virgin - Universal Music)
7 13 5 36 31 MEDUZA FEAT. DERMOT KENNEDY PARADISE www.soundofmeduza.com single (Virgin - Universal Music)
8 23 8 4 4 NATHAN EVANS WELLERMAN (SEA SHANTY/220 KID X BILLEN TED REMIX) twitter.com/NathanDawe ***greatest gainer TFO*** single (Polydor - Universal Music)
9 18 8 6 6 RAG'N'BONE MAN ALL YOU EVER WANTED www.ragnboneman.com single (Columbia - Menart)
10 6 2 8 8 OLIVIA RODRIGO DRIVERS LICENSE oliviarodrigo.com single (Geffen - Universal Music)
11 12 11 4 4 DUA LIPA WE'RE GOOD www.dualipa.com album: Future Nostalgia: The Moonlight edition (Warner - Dancing Bear)
12 14 1 23 23 DUA LIPA FEAT. DABABY LEVITATING www.dualipa.com album: Future Nostalgia (Warner - Dancing Bear)
13 17 13 6 3 RITON & NIGHTCRAWLERS FEAT.

The Changing Face of China's Music Market

THE CHANGING FACE OF CHINA'S MUSIC MARKET
MUSICDISH*CHINA, MAY 2017
CHINA’S OLD MUSIC INDUSTRY CHANGING LANDSCAPE
• 2013 estimated value of recorded music industry of US$82.6 million (5.6% increase), 21st largest (IFPI)
• 2015 estimated value of recorded music industry of US$169.7 million (63.8% increase), 14th largest (IFPI)
• 2016 value of recorded music industry grew 20.3% on 30.6% increase in streaming revenue, 12th largest (IFPI)
• Digital music industry compound annual growth rate (2011-15): +28.5% (Nielsen)
• Government policy: copyright law and enforcement
• Market consolidation & music licensing
• Shift to smartphone, lower mobile data cost, increased connectivity
[Chart (QQ, Kugou, Kuwo, Netease, Xiami, Other): Tencent: QQ + Kugou + Kuwo ~ 78%; Netease 9%; Xiami (Alibaba) 4%; Other: Baidu, Apple Music,… 9%]
STEFANIE SUN & APPLE MUSIC
• EP “RAINBOW BOT” EXCLUSIVELY ON APPLE MUSIC
• EXCLUSIVE 3 MINUTE DOCUMENTARY
• RANKED NUMBER 4 FOR “5 CAN’T-MISS APPLE MUSIC EXCLUSIVE ALBUM”
STREAMING WARS: TENCENT VS. NETEASE
• 10th most valued company, world’s largest video game company by revenue, WeChat has 889M active users
• Tencent has 600M active monthly users and over 15M paying music subscribers
• QQ Music has 200M active monthly users
• Combined with Kuwo and Kugou (CMC acquisition), control 77% of music streaming market
• NetEase Music Cloud recently joined ranks of unicorns
• Netease has over 300M active monthly users
MOBILE MUSIC LANDSCAPE
• 2015 mobile music industry US$945 million
• Estimated 2016 mobile music industry US$1.4 billion

Popular Songs and Ballads of Han China

Popular Songs and Ballads of Han China Popular Songs and Ballads of Han China ANNE BIRRELL Open Access edition funded by the National Endowment for the Humanities / Andrew W. Mellon Foundation Humanities Open Book Program. Licensed under the terms of Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0), which permits readers to freely download and share the work in print or electronic format for non-commercial purposes, so long as credit is given to the author. Derivative works and commercial uses require permission from the publisher. For details, see https://creativecommons.org/licenses/by-nc-nd/4.0/. The Creative Commons license described above does not apply to any material that is separately copyrighted. Open Access ISBNs: 9780824880347 (PDF) 9780824880354 (EPUB) This version created: 17 May, 2019 Please visit www.hawaiiopen.org for more Open Access works from University of Hawai‘i Press. © 1988, 1993 Anne Birrell All rights reserved To Hans H. Frankel, pioneer of yüeh-fu studies Acknowledgements I wish to thank The British Academy for their generous Fellowship which assisted my research on this book. I would also like to take this opportunity of thanking the University of Michigan for enabling me to commence my degree programme some years ago by awarding me a National Defense Foreign Language Fellowship. I am indebted to my former publisher, Mr. Rayner S. Unwin, now retired, for his helpful advice in producing the first edition. For this revised edition, I wish to thank sincerely my colleagues whose useful corrections and comments have been incorporated into my text.

Songs by Artist

Songs by Artist Title Title (Hed) Planet Earth 2 Live Crew Bartender We Want Some Pussy Blackout 2 Pistols Other Side She Got It +44 You Know Me When Your Heart Stops Beating 20 Fingers 10 Years Short Dick Man Beautiful 21 Demands Through The Iris Give Me A Minute Wasteland 3 Doors Down 10,000 Maniacs Away From The Sun Because The Night Be Like That Candy Everybody Wants Behind Those Eyes More Than This Better Life, The These Are The Days Citizen Soldier Trouble Me Duck & Run 100 Proof Aged In Soul Every Time You Go Somebody's Been Sleeping Here By Me 10CC Here Without You I'm Not In Love It's Not My Time Things We Do For Love, The Kryptonite 112 Landing In London Come See Me Let Me Be Myself Cupid Let Me Go Dance With Me Live For Today Hot & Wet Loser It's Over Now Road I'm On, The Na Na Na So I Need You Peaches & Cream Train Right Here For You When I'm Gone U Already Know When You're Young 12 Gauge 3 Of Hearts Dunkie Butt Arizona Rain 12 Stones Love Is Enough Far Away 30 Seconds To Mars Way I Fell, The Closer To The Edge We Are One Kill, The 1910 Fruitgum Co. 
Kings And Queens 1, 2, 3 Red Light This Is War Simon Says Up In The Air (Explicit) 2 Chainz Yesterday Birthday Song (Explicit) 311 I'm Different (Explicit) All Mixed Up Spend It Amber 2 Live Crew Beyond The Grey Sky Doo Wah Diddy Creatures (For A While) Me So Horny Don't Tread On Me Song List Generator® Printed 5/12/2021 Page 1 of 334 Licensed to Chris Avis Songs by Artist Title Title 311 4Him First Straw Sacred Hideaway Hey You Where There Is Faith I'll Be Here Awhile Who You Are Love Song 5 Stairsteps, The You Wouldn't Believe O-O-H Child 38 Special 50 Cent Back Where You Belong 21 Questions Caught Up In You Baby By Me Hold On Loosely Best Friend If I'd Been The One Candy Shop Rockin' Into The Night Disco Inferno Second Chance Hustler's Ambition Teacher, Teacher If I Can't Wild-Eyed Southern Boys In Da Club 3LW Just A Lil' Bit I Do (Wanna Get Close To You) Outlaw No More (Baby I'ma Do Right) Outta Control Playas Gon' Play Outta Control (Remix Version) 3OH!3 P.I.M.P. -

Dynamics of Musical Success: a Bayesian Nonparametric Approach

Dynamics of Musical Success: A Bayesian Nonparametric Approach Khaled Boughanmi, Asim Ansari, Rajeev Kohli (Preliminary Draft) October 01, 2018 This paper is based on the first essay of Khaled Boughanmi's dissertation. Khaled Boughanmi is a PhD candidate in Marketing, Columbia Business School. Asim Ansari is the William T. Dillard Professor of Marketing, Columbia Business School. Rajeev Kohli is the Ira Leon Rennert Professor of Business, Columbia Business School. We thank Prof. Michael Mauskapf for his insightful suggestions about the music industry. We also thank the Deming Center, Columbia University, for generous funding to support this research. Abstract We model the dynamics of musical success of albums over the last half century with a view towards constructing musically well-balanced playlists. We develop a novel nonparametric Bayesian modeling framework that combines data of different modalities (e.g. metadata, acoustic and textual data) to infer the correlates of album success. We then show how artists, music platforms, and label houses can use the model estimates to compile new albums and playlists. Our empirical investigation uses a unique dataset which we collected using different online sources. The modeling framework integrates different types of nonparametrics. One component uses a supervised hierarchical Dirichlet process to summarize the perceptual information in crowd-sourced textual tags and another time-varying component uses dynamic penalized splines to capture how different acoustical features of music have shaped album success over the years. Our results illuminate the broad patterns in the rise and decline of different musical genres, and in the emergence of new forms of music. They also characterize how various subjective and objective acoustic measures have waxed and waned in importance over the years.

Songs by Artist

Andromeda II DJ Entertainment Songs by Artist www.adj2.com Title Title Title 10,000 Maniacs 50 Cent AC DC Because The Night Disco Inferno Stiff Upper Lip Trouble Me Just A Lil Bit You Shook Me All Night Long 10Cc P.I.M.P. Ace Of Base I'm Not In Love Straight To The Bank All That She Wants 112 50 Cent & Eminen Beautiful Life Dance With Me Patiently Waiting Cruel Summer 112 & Ludacris 50 Cent & The Game Don't Turn Around Hot & Wet Hate It Or Love It Living In Danger 112 & Supercat 50 Cent Feat. Eminem And Adam Levine Sign, The Na Na Na My Life (Clean) Adam Gregory 1975 50 Cent Feat. Snoop Dogg And Young Crazy Days City Jeezy Adam Lambert Love Me Major Distribution (Clean) Never Close Our Eyes Robbers 69 Boyz Adam Levine The Sound Tootsee Roll Lost Stars UGH 702 Adam Sandler 2 Pac Where My Girls At What The Hell Happened To Me California Love 8 Ball & MJG Adams Family 2 Unlimited You Don't Want Drama The Addams Family Theme Song No Limits 98 Degrees Addams Family 20 Fingers Because Of You The Addams Family Theme Short Dick Man Give Me Just One Night Adele 21 Savage Hardest Thing Chasing Pavements Bank Account I Do Cherish You Cold Shoulder 3 Degrees, The My Everything Hello Woman In Love A Chorus Line Make You Feel My Love 3 Doors Down What I Did For Love One And Only Here Without You a ha Promise This Its Not My Time Take On Me Rolling In The Deep Kryptonite A Taste Of Honey Rumour Has It Loser Boogie Oogie Oogie Set Fire To The Rain 30 Seconds To Mars Sukiyaki Skyfall Kill, The (Bury Me) Aah Someone Like You Kings & Queens Kho Meh Terri -

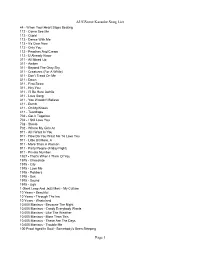

AUGSome Karaoke Song List

AUGSome Karaoke Song List
44 - When Your Heart Stops Beating
112 - Come See Me
112 - Cupid
112 - Dance With Me
112 - It's Over Now
112 - Only You
112 - Peaches And Cream
112 - U Already Know
311 - All Mixed Up
311 - Amber
311 - Beyond The Gray Sky
311 - Creatures (For A While)
311 - Don't Tread On Me
311 - Down
311 - First Straw
311 - Hey You
311 - I'll Be Here Awhile
311 - Love Song
311 - You Wouldn't Believe
411 - Dumb
411 - On My Knees
411 - Teardrops
702 - Get It Together
702 - I Still Love You
702 - Steelo
702 - Where My Girls At
911 - All I Want Is You
911 - How Do You Want Me To Love You
911 - Little Bit More, A
911 - More Than A Woman
911 - Party People (Friday Night)
911 - Private Number
1927 - That's When I Think Of You
1975 - Chocolate
1975 - City
1975 - Love Me
1975 - Robbers
1975 - Sex
1975 - Sound
1975 - Ugh
1 Giant Leap And Jazz Maxi - My Culture
10 Years - Beautiful
10 Years - Through The Iris
10 Years - Wasteland
10,000 Maniacs - Because The Night
10,000 Maniacs - Candy Everybody Wants
10,000 Maniacs - Like The Weather
10,000 Maniacs - More Than This
10,000 Maniacs - These Are The Days
10,000 Maniacs - Trouble Me
100 Proof Aged In Soul - Somebody's Been Sleeping
101 Dalmations - Cruella de Vil
10Cc - Donna
10Cc - Dreadlock Holiday
10Cc - I'm Mandy
10Cc - I'm Not In Love
10Cc - Rubber Bullets
10Cc - Things We Do For Love, The
10Cc - Wall Street Shuffle
112 And Ludacris - Hot And Wet
12 Gauge - Dunkie Butt
12 Stones - Crash
12 Stones - We Are One
1910 Fruitgum Co. -

Factors Affecting Popularity of Netease Cloud Music App

FACTORS AFFECTING POPULARITY OF NETEASE CLOUD MUSIC APP FACTORS AFFECTING POPULARITY OF NETEASE CLOUD MUSIC APP Wu Xinyu This Independent Study Manuscript Presented to The Graduated School of Bangkok University in Partial Fulfillment of the Requirements for the Degree Master of degree in Communication Arts 2018 © 2018 Wu Xinyu All Rights Reserved Wu. X., M.C.A., August 2018, Graduate School, Bangkok University. Factors Affecting Popularity of NetEase Cloud Music App (86 pp.) Advisor: Assoc. Prof. Rose Chongporn Komolsevin, Ph.D. ABSTRACT In recent years, with the build-out of the internet infrastructure and the consistent growth of the economy, music app as a convenient and easy app attracts more and more people. However, with the development of digital technique, music industry faces challenges. The system of music industry in China is not impeccable, such as the weak originality, profit model of music app cannot adapt to the market, there is no unique character of interfacial design and functional feature. These kinds issues limit the progress of music industry. This study aims at the factors for the popularity of NCM can better inform people about music social networking. The result of the NCM can be borrowed from more merchants and make greater contribution to the development of network technology and music app market. Keywords: Music app, Music app industry, Market, Ncm ACKNOWLEDGEMENT This research was fully supported by Dr. Rose Chongporn Komolsevin who was my project advisor. I would like to express my gratitude to her for all of her patience, suggestion, review, and valuable times to my study. After the topic selected, the research process and knowledge were suggested and guided by advisor.

Love As a Love Song

WHEN LOVE CRIES: POPULAR 1980s LOVE SONGS EXAMINED THROUGH INTIMATE PARTNER VIOLENCE A Thesis Presented to The Graduate Faculty of The University of Akron In Partial Fulfillment of the Requirements for the Degree Master of Arts Megan Ward May, 2014 WHEN LOVE CRIES: POPULAR 1980s LOVE SONGS EXAMINED THROUGH INTIMATE PARTNER VIOLENCE Megan Ward Thesis Approved: Advisor: Dr. Therese Lueck; Committee Member: Dr. Kathleen Endres; Committee Member: Dr. Tang Tang; School Director: Dr. Elizabeth Graham. Accepted: Dean of College: Dr. Chand Midha; Dean of Graduate School: Dr. George R. Newkome. ABSTRACT Romantic love and intimate partner relationships are themes that dominate popular music. As cultivation theory suggests, music cultivates attitudes and beliefs regarding romantic love and relationships modeled by the narratives evident in music. Women are particularly drawn to popular music and, thus, romantic love narratives. However, what happens when a real-life romantic love narrative is afflicted by abuse? What do such narratives cultivate in regards to the acceptance of intimate partner violence (IPV)? Research on battering and battered women raised awareness about battering. Lenore Walker’s The Battered Woman published in 1979, perhaps the most comprehensive research to date regarding IPV, revealed the common dynamics of an abusive relationship and introduced the Cycle of Violence (COV), a repetitive cycle consisting of three phases. As such, a narrative analysis of top 1980’s love songs was conducted to first, identify common narratives of romantic love between intimate partners present in the lyrics and then examine these narratives through narratives of abuse, including the COV.