Rudiments of Simulation-Based Computer-Assisted Analysis Including a Demonstration with Steve Reich’s Clapping Music

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


THE CLEVELAND ORCHESTRA California Masterworks

California Masterworks – Program 1. Gartner Auditorium, The Cleveland Museum of Art. Wednesday evening, May 1, 2013, at 7:30 p.m. James Feddeck, conductor. HENRY COWELL Sinfonietta. Welcome to the Cleveland Museum of Art. The Cleveland Orchestra’s performances in the museum in May 2011 were a milestone event and, according to the Plain Dealer, among the year’s “high notes” in classical music. We are delighted to once again welcome The Cleveland Orchestra to the Cleveland Museum of Art as this groundbreaking collaboration between two of

Computer Music Experiences, 1961-1964 James Tenney I. Introduction I Arrived at the Bell Telephone Laboratories in September

Computer Music Experiences, 1961-1964 James Tenney I. Introduction I arrived at the Bell Telephone Laboratories in September, 1961, with the following musical and intellectual baggage: 1. numerous instrumental compositions reflecting the influence of Webern and Varèse; 2. two tape-pieces, produced in the Electronic Music Laboratory at the University of Illinois, both employing familiar, “concrete” sounds, modified in various ways; 3. a long paper (“Meta+Hodos, A Phenomenology of 20th Century Music and an Approach to the Study of Form,” June, 1961), in which a descriptive terminology and certain structural principles were developed, borrowing heavily from Gestalt psychology. The central point of the paper involves the clang, or primary aural Gestalt, and basic laws of perceptual organization of clangs, clang-elements, and sequences (a higher order Gestalt unit consisting of several clangs); 4. a dissatisfaction with all purely synthetic electronic music that I had heard up to that time, particularly with respect to timbre; 5. ideas stemming from my studies of acoustics, electronics and, especially, information theory, begun in Lejaren Hiller’s classes at the University of Illinois; and finally 6. a growing interest in the work and ideas of John Cage. I leave in March, 1964, with: 1. six tape compositions of computer-generated sounds, of which all but the first were also composed by means of the computer, and several instrumental pieces whose composition involved the computer in one way or another; 2. a far better understanding of the physical basis of timbre, and a sense of having achieved a significant extension of the range of timbres possible by synthetic means; 3.

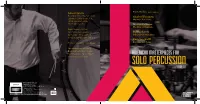

American Masterpieces for Solo Percussion, Volume II: Tom Kolor, Percussion

AMERICAN MASTERPIECES FOR SOLO PERCUSSION, VOLUME II. Tom Kolor, percussion. TROY1578.

Charles Wuorinen: 1 Marimba Variations [11:11]. Morton Feldman: 2 The King of Denmark [6:51]. Ralph Shapey, Soli for Solo Percussion: 3 A [6:14]; 4 A + B [6:14]; 5 A + B + C [6:19]. Christian Wolff, Percussionist Songs: 6 Song 1 [3:12]; 7 Song 2 [2:58]; 8 Song 3 [2:21]; 9 Song 4 [2:15]; 10 Song 5 [5:33]; 11 Song 6 [1:38]; 12 Song 7 [2:01]. Total Time = 56:48.

Acknowledgments: Recorded in Slee Hall, University at Buffalo SUNY. Engineered, edited, and mastered by Christopher Jacobs. Ralph Shapey’s Soli for Solo Percussion is published by Theodore Presser; all other works are published by CF Peters. Photo of Tom Kolor: Irene Haupt. Special thanks to my family, Raymond DesRoches, Gordon Gottlieb, and to my colleagues at University of Buffalo.

WWW.ALBANYRECORDS.COM. ALBANY RECORDS U.S. 915 BROADWAY, ALBANY, NY 12207 TEL: 518.436.8814 FAX: 518.436.0643. ALBANY RECORDS U.K. BOX 137, KENDAL, CUMBRIA LA8 0XD TEL: 01539 824008. © 2015 ALBANY RECORDS. MADE IN THE USA. DDD. WARNING: COPYRIGHT SUBSISTS IN ALL RECORDINGS ISSUED UNDER THIS LABEL.

The Philip Glass Ensemble in Downtown New York, 1966-1976 David Allen Chapman Washington University in St

Washington University in St. Louis Washington University Open Scholarship All Theses and Dissertations (ETDs) Spring 4-27-2013 Collaboration, Presence, and Community: The Philip Glass Ensemble in Downtown New York, 1966-1976 David Allen Chapman Washington University in St. Louis Follow this and additional works at: https://openscholarship.wustl.edu/etd Part of the Music Commons Recommended Citation Chapman, David Allen, "Collaboration, Presence, and Community: The Philip Glass Ensemble in Downtown New York, 1966-1976" (2013). All Theses and Dissertations (ETDs). 1098. https://openscholarship.wustl.edu/etd/1098 This Dissertation is brought to you for free and open access by Washington University Open Scholarship. It has been accepted for inclusion in All Theses and Dissertations (ETDs) by an authorized administrator of Washington University Open Scholarship. For more information, please contact [email protected]. WASHINGTON UNIVERSITY IN ST. LOUIS Department of Music Dissertation Examination Committee: Peter Schmelz, Chair Patrick Burke Pannill Camp Mary-Jean Cowell Craig Monson Paul Steinbeck Collaboration, Presence, and Community: The Philip Glass Ensemble in Downtown New York, 1966–1976 by David Allen Chapman, Jr. A dissertation presented to the Graduate School of Arts and Sciences of Washington University in partial fulfillment of the requirements for the degree of Doctor of Philosophy May 2013 St. Louis, Missouri © Copyright 2013 by David Allen Chapman, Jr. All rights reserved. CONTENTS LIST OF FIGURES

UC San Diego Electronic Theses and Dissertations

UC San Diego Electronic Theses and Dissertations Title: Experimental Music: Redefining Authenticity Permalink: https://escholarship.org/uc/item/3xw7m355 Author: Tavolacci, Christine Publication Date: 2017 Peer reviewed | Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA, SAN DIEGO Experimental Music: Redefining Authenticity A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Musical Arts in Contemporary Music Performance by Christine E. Tavolacci Committee in charge: Professor John Fonville, Chair Professor Anthony Burr Professor Lisa Porter Professor William Propp Professor Katharina Rosenberger 2017 Copyright Christine E. Tavolacci, 2017 All Rights Reserved The Dissertation of Christine E. Tavolacci is approved, and is acceptable in quality and form for publication on microfilm and electronically: Chair University of California, San Diego 2017 DEDICATION This dissertation is dedicated to my parents, Frank J. and Christine M. Tavolacci, whose love and support are with me always. TABLE OF CONTENTS Signature Page; Dedication; Table of Contents; List of Figures; Acknowledgments; Vita; Abstract of Dissertation; Introduction: A Brief History and Definition of Experimental Music

Battles Around New Music in New York in the Seventies

Presenting the New: Battles around New Music in New York in the Seventies A Dissertation SUBMITTED TO THE FACULTY OF UNIVERSITY OF MINNESOTA BY Joshua David Jurkovskis Plocher IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY David Grayson, Adviser December 2012 © Joshua David Jurkovskis Plocher 2012 Acknowledgements One of the best things about reaching the end of this process is the opportunity to publicly thank the people who have helped to make it happen. More than any other individual, thanks must go to my wife, who has had to put up with more of my rambling than anybody, and has graciously given me half of every weekend for the last several years to keep working. Thank you, too, to my adviser, David Grayson, whose steady support in a shifting institutional environment has been invaluable. To the rest of my committee: Sumanth Gopinath, Kelley Harness, and Richard Leppert, for their advice and willingness to jump back in on this project after every life-inflicted gap. Thanks also to my mother and to my kids, for different reasons. Thanks to the staff at the New York Public Library (the one on 5th Ave. with the lions) for helping me track down the SoHo Weekly News microfilm when it had apparently vanished, and to the professional staff at the New York Public Library for Performing Arts at Lincoln Center, and to the Fales Special Collections staff at Bobst Library at New York University. Special thanks to the much smaller archival operation at the Kitchen, where I was assisted at various times by John Migliore and Samara Davis.

Alan Shockley, Director

Luciano Berio in Milan and in Berlin. He has also taught for many years at the Royal Conservatory. Andriessen’s music combines such influences as American minimalism and jazz, as well as the music of Stravinsky and of Claude Vivier. He is now widely acknowledged as a central figure in contemporary European composition. Like Martin Bresnick, Andriessen is also well-known as an educator, and several of his former students are noted composers themselves. Workers Union is scored for “any loud sounding group of instruments.” The composer writes that the piece “is a combination of individual freedom and severe discipline: its rhythm is exactly fixed; the pitch, on the other hand, is indicated only approximately.” The title seems to indicate political motivations, and Andriessen says that it “is difficult to play in an ensemble and to remain in step, sort of like organizing and carrying on political action.” An ensemble of twelve musicians performed the premiere, blocking an Amsterdam street and using construction materials as percussion instruments. The composer was arrested at the performance and spent the night in jail. Depending on what repeats are taken, the work is anywhere from 15 to 20 minutes long. UPCOMING COMPOSITION STUDIES EVENTS • Sunday, November 18, 2012: Laptop Ensemble, Martin Herman, director, 8:00pm, Daniel Recital Hall, $10/7. ALAN SHOCKLEY, DIRECTOR. “WORKERS UNION”. MONDAY, NOVEMBER 5, 2012, 8:00PM, GERALD R. DANIEL RECITAL HALL. For ticket information please call 562.985.7000 or visit the web at: PLEASE SILENCE ALL ELECTRONIC MOBILE DEVICES. This concert is funded in part by the INSTRUCTIONALLY RELATED ACTIVITIES FUNDS (IRA) provided by California State University, Long Beach.

The Early History of Music Programming and Digital Synthesis, Session 20

Chapter 20. Meeting 20, Languages: The Early History of Music Programming and Digital Synthesis

20.1. Announcements
• Music Technology Case Study Final Draft due Tuesday, 24 November

20.2. Quiz
• 10 Minutes

20.3. The Early Computer: History
• 1942 to 1946: Atanasoff-Berry Computer, the Colossus, the Harvard Mark I, and the Electronic Numerical Integrator and Computer (ENIAC)
• 1942: Atanasoff-Berry Computer. Courtesy of University Archives, Library, Iowa State University of Science and Technology. Used with permission.
• 1946: ENIAC unveiled at University of Pennsylvania. Source: US Army
• Diverse and incomplete computers. © Wikimedia Foundation. License CC BY-SA. This content is excluded from our Creative Commons license. For more information, see http://ocw.mit.edu/fairuse.

20.4. The Early Computer: Interface
• Punchcards
• 1960s: card printed for Bell Labs, for the GE 600. Courtesy of Douglas W. Jones. Used with permission.
• Fortran cards. Courtesy of Douglas W. Jones. Used with permission.

20.5. The Jacquard Loom
• 1801: Joseph Jacquard invents a way of storing and recalling loom operations. Photo courtesy of Douglas W. Jones at the University of Iowa. Photo by George H. Williams, from Wikipedia (public domain).
• Multiple cards could be strung together
• Based on technologies of numerous inventors from the 1700s, including the automata of Jacques Vaucanson (Riskin 2003)

20.6. Computer Languages: Then and Now
• Low-level languages are closer to machine representation; high-level languages are closer to human abstractions
• Low-Level
  • Machine code: direct binary instruction
  • Assembly: mnemonics to machine codes
• High-Level: FORTRAN
  • 1954: John Backus at IBM designs the FORmula TRANslator System
  • 1958: Fortran II
  • 1977: ANSI Fortran
• High-Level: C
  • 1972: Dennis Ritchie at Bell Laboratories
  • Based on B
• Very High-Level: Lisp, Perl, Python, Ruby
  • 1958: Lisp by John McCarthy
  • 1987: Perl by Larry Wall
  • 1991: Python by Guido van Rossum
  • 1995: Ruby by Yukihiro “Matz” Matsumoto

20.7.

Rethinking Minimalism: at the Intersection of Music Theory and Art Criticism

Rethinking Minimalism: At the Intersection of Music Theory and Art Criticism Peter Shelley A dissertation submitted in partial fulfillment of requirements for the degree of Doctor of Philosophy University of Washington 2013 Reading Committee Jonathan Bernard, Chair Áine Heneghan Judy Tsou Program Authorized to Offer Degree: Music Theory ©Copyright 2013 Peter Shelley University of Washington Abstract Rethinking Minimalism: At the Intersection of Music Theory and Art Criticism Peter James Shelley Chair of the Supervisory Committee: Dr. Jonathan Bernard Music Theory By now most scholars are fairly sure of what minimalism is. Even if they may be reluctant to offer a precise theory, and even if they may distrust canon formation, members of the informed public have a clear idea of who the central canonical minimalist composers were or are. Sitting front and center are always four white male Americans: La Monte Young, Terry Riley, Steve Reich, and Philip Glass. This dissertation negotiates with this received wisdom, challenging the stylistic coherence among these composers implied by the term minimalism and scrutinizing the presumed neutrality of their music. This dissertation is based in the acceptance of the aesthetic similarities between minimalist sculpture and music. Michael Fried’s essay “Art and Objecthood,” which occupies a central role in the history of minimalist sculptural criticism, serves as the point of departure for three excursions into minimalist music. The first excursion deals with the question of time in minimalism, arguing that, contrary to received wisdom, minimalist music is not always well understood as static or, in Jonathan Kramer’s terminology, vertical. The second excursion addresses anthropomorphism in minimalist music, borrowing from Fried’s concept of (bodily) presence. -

JAMES TENNEY Saxony Pieces Is Often Much More Precisely Notated Than May David Mott, Saxophone Be Usual

JOHN MELBY Concerto for Violin, English Horn and Computer-Synthesized Tape. Gregory Fulkerson, violin; Thomas Stacy, English horn; David Liptak, conductor. JAMES TENNEY Saxony. David Mott, saxophone.

pieces is often much more precisely notated than may be usual. The degree of control necessary in making a tape of the sort that allows this to happen is one of the chief attractions that the computer holds for me, since I can specify durations and attack patterns very much more precisely than it would be possible to do in an analog studio. I am also fascinated by the way in which the computer can perform certain transformational procedures which fit very well into my basically Schenker-derived method of composition. As a composer, I have a certain aversion to providing program notes about my pieces, a feeling which is shared by many, although certainly not all, of my colleagues. I feel that such notes often do more harm than good, since they sometimes predispose the listener to certain modes of listening which are inappropriate to the content of the work.

John Melby was born in 1941 in Whitehall, Wisconsin: he was educated at the Curtis Institute of Music, the University of Pennsylvania and Princeton University, which awarded him a Ph.D. in Composition in 1972. His composition teachers included Vincent Persichetti, Henry Weinberg, George Crumb, Peter Westergaard, J.K. Randall and Milton Babbitt. Melby's music, especially those works for computer-synthesized tape, both with and without live performers, has been performed widely. He is the recipient of many awards, including a Guggenheim Fellowship, First Prize in the 1979 International Electroacoustic Music Awards in Bourges,

Comprehensibility and Ben Johnston's String Quartet No. 9

Comprehensibility and Ben Johnston’s String Quartet No. 9 * Laurence Sinclair Willis NOTE: The examples for the (text-only) PDF version of this item are available online at: http://www.mtosmt.org/issues/mto.19.25.1/mto.19.25.1.willis.php KEYWORDS: just intonation, Ben Johnston, twentieth-century music, Harry Partch, string quartet ABSTRACT: Ben Johnston’s just-intonation music is of startling aural variety and presents novel solutions to age-old tuning problems. In this paper, I describe the way that Johnston reoriented his compositional practice in the 1980s as evidenced in his musical procedures. Johnston became aware of the disconnect between Western art music composers and their audiences. He therefore set about composing more accessible music that listeners could easily comprehend. His String Quartet No. 9 gives an instructive example of the negotiation between just intonation and comprehensibility. By integrating unusual triadic sonorities with background tonal relationships, Johnston reveals an evolution of just-intonation pitch structures across the work. This paper provides an example of an analytical method for exploring Johnston’s works in a way that moves beyond simply describing the structure of his system and into more musically tangible questions of form and process. Received June 2016 Volume 25, Number 1, May 2019 Copyright © 2019 Society for Music Theory [1.1] Perhaps the best way to introduce the music explored in this article is for you, the reader, to listen to some of it. So, please listen to Audio Example 1.(1) In some ways this music is very familiar: you heard a string quartet playing tonal music, and they used similar devices of phrasing that we might hear in a classical or romantic quartet.

With the Laptop Ensemble Martin Herman, Director

NEW MUSIC ENSEMBLE, ALAN SHOCKLEY, DIRECTOR, WITH THE LAPTOP ENSEMBLE, MARTIN HERMAN, DIRECTOR. 4th INTERNATIONAL CONFERENCE ON MUSIC & MINIMALISM. FRIDAY, OCTOBER 4, 2013, 8:00PM, GERALD R. DANIEL RECITAL HALL. PLEASE SILENCE ALL ELECTRONIC MOBILE DEVICES. PROGRAM: Pendulum Music (1968), Steve Reich (b. 1936); Red Arc / Blue Veil (2002), John Luther Adams (b. 1953); Vent (1990), David Lang (b. 1957); I’m worried now, but I won’t be worried long (2010), Eve Beglarian (b. 1958); Swell Piece No. 3 (1971) and Swell Piece (1967), James Tenney (1934-2006). “We