Forensics Book 2: Investigating Hard Disk and File and Operating Systems

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation. Sakis Kasampalis. Kongens Lyngby 2010, IMM-MSC-2010-63. Technical University of Denmark, Department Of Informatics, Building 321, DK-2800 Kongens Lyngby, Denmark. Phone +45 45253351, Fax +45 45882673, [email protected], www.imm.dtu.dk

Abstract: In this work I am focusing on Copy On Write based file systems. Copy On Write is used on modern file systems for providing (1) metadata and data consistency using transactional semantics, (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administrate file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks. Most computers are already based on multi-core and multiple processor architectures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

NTFS • Windows Reinstallation – Bypass ACL • Administrators Privilege – Bypass Ownership

Windows Encrypting File System (www.winitor.com, 01 March 2010)

Motivation
• Laptops are very integrated in enterprises…
• Stolen/lost computers loaded with confidential/business data
• Data Privacy Issues
• Offline Access – Bypass NTFS
• Windows reinstallation – Bypass ACL
• Administrators privilege – Bypass Ownership

Mechanism
• Principle
• A random, unique, symmetric key encrypts the data
• An asymmetric key encrypts the symmetric key used to encrypt the data
• Combination of two algorithms
• Use their strengths
• Minimize their weaknesses
• Results
• Increased performance
• Increased security
(Diagram labels: Asymmetric, Symmetric, Data)

Characteristics
• Comfortable
• Applying encryption is just a matter of assigning a file attribute
• Transparent
• Integrated into the operating system
• Transparent to (valid) users/applications
(Diagram labels: Application, Win32, Crypto Engine, NTFS, EFS)
• Flexible
• Supported at different scopes
• File, Directory, Drive (Vista?)
• Files can be shared between any number of users
• Files can be stored anywhere
• local, remote, WebDav
• Files can be offline
• Secure
• Encryption and Decryption occur in kernel mode
• Keys are never paged
• Usage of standardized cryptography services

Availability
• At the GUI, the availability

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide. Peter Jay Salzman, Michael Burian, Ori Pomerantz. Copyright © 2001 Peter Jay Salzman. 2007-05-18, ver 2.6.4.

The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP).

Active@ UNDELETE Documentation

Active@ UNDELETE Users Guide: Contents
• Legal Statement
• Active@ UNDELETE Overview
• Getting Started with Active@ UNDELETE
  • Active@ UNDELETE Views And Windows
    • Recovery Explorer View
    • Logical Drive Scan Result View
    • Physical Device Scan View
    • Search Results View
    • File Organizer view
    • Application Log
    • Welcome View
• Using

Vorlesung-Print.Pdf

Operating Systems. Prof. Dipl.-Ing. Klaus Knopper, (C) 2019 <[email protected]>. Lecture at DHBW Karlsruhe, summer semester 2019.

Organizational matters
• Lecture with exercises, Operating Systems, WWI17B2, on Mondays (individual dates) in A369
• http://knopper.net/bs/ (later moodle)

Course objectives
• Know and understand the fundamental structure of operating systems in theory and practice,
• be able to explain the basic concepts of multitasking, multi-user operation, and hardware support / resource sharing,
• analyze security issues and risks of ubiquitous and mobile computing at the operating-system level,
• work with heterogeneous operating-system environments and virtualization, and recognize and resolve compatibility problems.

Topics (top-down)
• Overview of operating systems and applications, differences in structure and use, licenses, distributions,
• GNU/Linux as an OSS learning system for the lecture, tracing and analysis of the boot process,
• User interface(s),
• File system: VFS, real implementations,
• Multitasking: scheduler, interrupts, memory management (VM), process management (timesharing),
• Multi-user: user management, permission system,
• Hardware support: kernel and modules vs. the "driver" concept,
• Compatibility, API emulation, virtualization, software development,
• Security aspects of operating systems, "malware", and forensic analysis after a compromise or data loss.

A Large-Scale Study of File-System Contents

A Large-Scale Study of File-System Contents. John R. Douceur and William J. Bolosky, Microsoft Research, Redmond, WA 98052, {johndo, bolosky}@microsoft.com

ABSTRACT: We collect and analyze a snapshot of data from 10,568 file systems of 4801 Windows personal computers in a commercial environment. The file systems contain 140 million files totaling 10.5 TB of data. We develop analytical approximations for distributions of file size, file age, file functional lifetime, directory size, and directory depth, and we compare them to previously derived distributions. We find that file and directory sizes are fairly consistent across file systems, but file lifetimes vary widely and are significantly affected by the job function of the user. Larger files tend to be composed of blocks sized in powers of two, which noticeably affects their size distribution.

[...] sizes are fairly consistent across file systems, but file lifetimes and file-name extensions vary with the job function of the user. We also found that file-name extension is a good predictor of file size but a poor predictor of file age or lifetime, that most large files are composed of records sized in powers of two, and that file systems are only half full on average. File-system designers require usage data to test hypotheses [8, 10], to drive simulations [6, 15, 17, 29], to validate benchmarks [33], and to stimulate insights that inspire new features [22]. File-system access requirements have been quantified by a number of empirical studies of dynamic trace data [e.g. 1, 3, 7, 8, 10, 14, 23, 24, 26]. However, the details of applications’ and users’ storage

Active @ UNDELETE Users Guide | TOC | 2

Active@ UNDELETE Users Guide: Contents
• Legal Statement
• Active@ UNDELETE Overview
• Getting Started with Active@ UNDELETE
  • Active@ UNDELETE Views And Windows
    • Recovery Explorer View
    • Logical Drive Scan Result View
    • Physical Device Scan View
    • Search Results View
    • Application Log
    • Welcome View
• Using Active@ UNDELETE Overview
  • Recover deleted Files and Folders
    • Scan a Volume (Logical Drive) for deleted files

GlobalFS: A Strongly Consistent Multi-Site File System

GlobalFS: A Strongly Consistent Multi-Site File System. Leandro Pacheco (University of Lugano), Raluca Halalai (University of Neuchâtel), Valerio Schiavoni (University of Neuchâtel), Fernando Pedone (University of Lugano), Etienne Rivière (University of Neuchâtel), Pascal Felber (University of Neuchâtel)

Abstract: This paper introduces GlobalFS, a POSIX-compliant geographically distributed file system. GlobalFS builds on two fundamental building blocks, an atomic multicast group communication abstraction and multiple instances of a single-site data store. We define four execution modes and show how all file system operations can be implemented with these modes while ensuring strong consistency and tolerating failures. We describe the GlobalFS prototype in detail and report on an extensive performance assessment. We have deployed GlobalFS across all EC2 regions and show that the system scales geographically, providing performance comparable to other state-of-the-art distributed file systems for local commands and allowing for strongly consistent operations over the whole system.

[...] consistency, availability, and tolerance to partitions. Our goal is to ensure strongly consistent file system operations despite node failures, at the price of possibly reduced availability in the event of a network partition. Weak consistency is suitable for domain-specific applications where programmers can anticipate and provide resolution methods for conflicts, or work with last-writer-wins resolution methods. Our rationale is that for general-purpose services such as a file system, strong consistency is more appropriate as it is both more intuitive for the users and does not require human intervention in case of conflicts. Strong consistency requires ordering commands across replicas, which needs coordination among nodes at geographically distributed sites (i.e., regions). Designing strongly consistent distributed systems that provide good

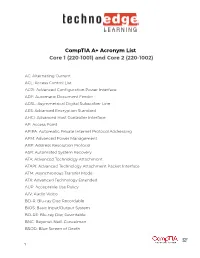

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List, Core 1 (220-1001) and Core 2 (220-1002)

AC: Alternating Current; ACL: Access Control List; ACPI: Advanced Configuration Power Interface; ADF: Automatic Document Feeder; ADSL: Asymmetrical Digital Subscriber Line; AES: Advanced Encryption Standard; AHCI: Advanced Host Controller Interface; AP: Access Point; APIPA: Automatic Private Internet Protocol Addressing; APM: Advanced Power Management; ARP: Address Resolution Protocol; ASR: Automated System Recovery; ATA: Advanced Technology Attachment; ATAPI: Advanced Technology Attachment Packet Interface; ATM: Asynchronous Transfer Mode; ATX: Advanced Technology Extended; AUP: Acceptable Use Policy; A/V: Audio Video; BD-R: Blu-ray Disc Recordable; BIOS: Basic Input/Output System; BD-RE: Blu-ray Disc Rewritable; BNC: Bayonet-Neill-Concelman; BSOD: Blue Screen of Death; BYOD: Bring Your Own Device; CAD: Computer-Aided Design; CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart; CD: Compact Disc; CD-ROM: Compact Disc-Read-Only Memory; CD-RW: Compact Disc-Rewritable; CDFS: Compact Disc File System; CERT: Computer Emergency Response Team; CFS: Central File System, Common File System, or Command File System; CGA: Computer Graphics and Applications; CIDR: Classless Inter-Domain Routing; CIFS: Common Internet File System; CMOS: Complementary Metal-Oxide Semiconductor; CNR: Communications and Networking Riser; COMx: Communication port (x = port number); CPU: Central Processing Unit; CRT: Cathode-Ray Tube; DaaS: Data as a Service; DAC: Discretionary Access Control; DB-25: Serial Communications

Latency-Aware, Inline Data Deduplication for Primary Storage

iDedup: Latency-aware, inline data deduplication for primary storage. Kiran Srinivasan, Tim Bisson, Garth Goodson, Kaladhar Voruganti, NetApp, Inc. fskiran, tbisson, goodson, [email protected]

Abstract: Deduplication technologies are increasingly being deployed to reduce cost and increase space-efficiency in corporate data centers. However, prior research has not applied deduplication techniques inline to the request path for latency sensitive, primary workloads. This is primarily due to the extra latency these techniques introduce. Inherently, deduplicating data on disk causes fragmentation that increases seeks for subsequent sequential reads of the same data, thus, increasing latency. In addition, deduplicating data requires extra disk IOs to access on-disk deduplication metadata. In this paper, we pro-

[...] systems exist that deduplicate inline with client requests for latency sensitive primary workloads. All prior deduplication work focuses on either: i) throughput sensitive archival and backup systems [8, 9, 15, 21, 26, 39, 41]; or ii) latency sensitive primary systems that deduplicate data offline during idle time [1, 11, 16]; or iii) file systems with inline deduplication, but agnostic to performance [3, 36]. This paper introduces two novel insights that enable latency-aware, inline, primary deduplication. Many primary storage workloads (e.g., email, user directories, databases) are currently unable to leverage the benefits of deduplication, due to the associated latency costs. Since offline deduplication systems impact la-

Using the Andrew File System on *BSD [email protected], Bsdcan, 2006 Why Another Network Filesystem

Using the Andrew File System on *BSD. [email protected], BSDCan, 2006

• why another network filesystem
• 1-slide history of Andrew File System
• user view
• admin view
• OpenAFS
• Arla
• AFS on OpenBSD, FreeBSD and NetBSD

Filesharing on the Internet
• use FTP or link to HTTP
• file interface through WebDAV
• use insecure protocol over vpn

History of AFS
• 1984: developed at Carnegie Mellon
• 1989: TransArc Corporation
• 1994: over to IBM
• 1997: Arla, aimed at Linux and BSD
• 2000: IBM releases source
• 2000: foundation of OpenAFS

User view <1>
• global filesystem rooted at /afs
• /afs/cern.ch/...
• /afs/cmu.edu/...
• /afs/gorlaeus.net/users/h/hugo/...

User view <2>
• authentication through Kerberos
• #>kinit <username> obtain krbtgt/<realm>@<realm>
• #>afslog obtain afs@<realm>
• #>cd /afs/<cell>/users/<username>

User view <3>
• ACL (dir based) & Quota usage
• runs on Windows, OS X, Linux, Solaris ... and *BSD

Admin view <1>
(Diagram labels: <cell> <partition> <server> <volume> <volume> <server> <partition>)

Admin view <2>
/afs/gorlaeus.net/users/h/hugo/presos/afs_slides.graffle
(Diagram labels: gorlaeus.net /vicepa fwncafs1 users hugo h bram <server> /vicepb)

Admin view <2a>
/afs/gorlaeus.net/users/h/hugo/presos/afs_slides.graffle
(Diagram labels: gorlaeus.net /vicepa fwncafs1 users hugo /vicepa fwncafs2 h bram)

Admin view <3>
• servers require KeyFile ~= keytab
• procedure differs for Heimdal: ktutil copy; MIT: asetkey add

Admin view <4>
• entry in CellServDB: >gorlaeus.net #my cell name, 10.0.0.1 <dbserver host name>
• required on servers
• required on clients without DynRoot

Admin view <5>
• File locking
• no databases on AFS (requires byte range locking)

On the Performance Variation in Modern Storage Stacks

On the Performance Variation in Modern Storage Stacks. Zhen Cao1, Vasily Tarasov2, Hari Prasath Raman1, Dean Hildebrand2, and Erez Zadok1. 1Stony Brook University and 2IBM Research – Almaden. Appears in the proceedings of the 15th USENIX Conference on File and Storage Technologies (FAST’17).

Abstract: Ensuring stable performance for storage stacks is important, especially with the growth in popularity of hosted services where customers expect QoS guarantees. The same requirement arises from benchmarking settings as well. One would expect that repeated, carefully controlled experiments might yield nearly identical performance results, but we found otherwise. We therefore undertook a study to characterize the amount of variability in benchmarking modern storage stacks. In this paper we report on the techniques used and the results of this study. We conducted many experiments using several popular workloads, file systems, and storage devices, and varied many parameters across the entire

[...]tions on different machines have to compete for heavily shared resources, such as network switches [9]. In this paper we focus on characterizing and analyzing performance variations arising from benchmarking a typical modern storage stack that consists of a file system, a block layer, and storage hardware. Storage stacks have been proven to be a critical contributor to performance variation [18, 33, 40]. Furthermore, among all system components, the storage stack is the cornerstone of data-intensive applications, which become increasingly more important in the big data era [8, 21]. Although our main focus here is reporting and analyzing the variations in benchmarking processes, we believe that our observations pave the way for understanding stability issues in production systems.