In Silico Simulations for DNA Shuffling Experiments Luciana Montera

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

In Silico Tools for Splicing Defect Prediction: a Survey from the Viewpoint of End Users

© American College of Medical Genetics and Genomics REVIEW In silico tools for splicing defect prediction: a survey from the viewpoint of end users Xueqiu Jian, MPH1, Eric Boerwinkle, PhD1,2 and Xiaoming Liu, PhD1 RNA splicing is the process during which introns are excised and exons are spliced. The precise recognition of splicing signals is critical to this process, and mutations affecting splicing comprise a considerable proportion of genetic disease etiology. Analysis of RNA samples from the patient is the most straightforward and reliable method to detect splicing defects. However, currently, the technical limitation prohibits its use in routine clinical practice. In silico tools that predict potential consequences of splicing mutations may be useful in daily diagnostic activities. In this review, we provide medical geneticists with some basic insights into some of the most popular in silico tools for splicing defect prediction, from the viewpoint of end users. Bioinformaticians in relevant areas who are working on huge data sets may also benefit from this review. Specifically, we focus on those tools whose primary goal is to predict the impact of mutations within the 5′ and 3′ splicing consensus regions: the algorithms used by different tools as well as their major advantages and disadvantages are briefly introduced; the formats of their input and output are summarized; and the interpretation, evaluation, and prospection are also discussed. Genet Med advance online publication 21 November 2013 Key Words: bioinformatics; end user; in silico prediction tool; medical genetics; splicing consensus region; splicing mutation INTRODUCTION TO PRE-mRNA SPLICING AND MUTATIONS AFFECTING SPLICING Sixty years ago, the milestone discovery of the double-helix [...] small nuclear ribonucleoproteins and more than 150 proteins, serine/arginine-rich (SR) proteins, heterogeneous nuclear ribonucleoproteins, and the regulatory complex (Figure 1). -

In Silico Protein Design: a Combinatorial and Global Optimization Approach by John L

From SIAM News, Volume 37, Number 1, January/February 2004 In Silico Protein Design: A Combinatorial and Global Optimization Approach By John L. Klepeis and Christodoulos A. Floudas The use of computational techniques to create peptide- and protein-based therapeutics is an important challenge in medicine. The ultimate goal, defined about two decades ago, is to use computer algorithms to identify amino acid sequences that not only adopt particular three-dimensional structures but also perform specific functions. To those familiar with the field of structural biology, it is certainly not surprising that this problem has been described as “inverse protein folding” [16]. That is, while the grand challenge of protein folding is to understand how a particular protein, defined by its amino acid sequence, finds its unique three-dimensional structure, protein design involves the discovery of sets of amino acid sequences that form functional proteins and fold into specific target structures. Experimental, computational, and hybrid approaches have all contributed to advances in protein design. Applying mutagenesis and rational design techniques, for example, experimentalists have created enzymes with altered functionalities and increased stability. The coverage of sequence space is highly restricted for these techniques, however [4]. An approach that samples more diverse sequences, called directed protein evolution, iteratively applies the techniques of genetic recombination and in vitro functional assays [1]. These methods, although they do a better job of sampling sequence space and generating functionally diverse proteins, are still restricted to the screening of 10^3 to 10^6 sequences [22]. Challenges of Generic Computational Protein Design The limitations of experimental techniques serve to highlight the importance of computational protein design. -
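To put the screening limit quoted in this excerpt in perspective, the following minimal Python sketch compares a screening capacity of about a million sequences with the size of the full sequence space. The function names are hypothetical, and the alphabet of 20 standard amino acids and the example peptide length are assumptions made for illustration, not figures from the article.

```python
def sequence_space_size(length, alphabet_size=20):
    """Number of distinct sequences of a given length.

    Assumes each position can independently take any of `alphabet_size`
    residues (20 standard amino acids by default).
    """
    return alphabet_size ** length


def screened_fraction(n_screened, length, alphabet_size=20):
    """Fraction of the sequence space covered by screening `n_screened` variants."""
    return n_screened / sequence_space_size(length, alphabet_size)


if __name__ == "__main__":
    # Illustrative case: a 10-residue peptide and one million screened variants.
    print(sequence_space_size(10))
    print(screened_fraction(10**6, 10))
```

Even for a short peptide the screened fraction is tiny, which is the sense in which experimental coverage of sequence space is described as restricted.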

7 Systems Biotechnology: Combined in Silico and Omics Analyses for The

7 Systems Biotechnology: Combined in Silico and Omics Analyses for the Improvement of Microorganisms for Industrial Applications Sang Yup Lee, Dong-Yup Lee, Tae Yong Kim, Byung Hun Kim, & Sang Jun Lee Biotechnology plays an increasingly important role in the healthcare, pharmaceutical, chemical, food, and agricultural industries. Microorganisms have been successfully employed for the production of recombinant proteins [1–4] and various primary and secondary metabolites [5–8]. As in other engineering disciplines, one of the ultimate goals of industrial biotechnology is to develop lower-cost and higher-yield processes. Toward this goal, fermentation and downstream processes have been significantly improved thanks to the effort of biochemical engineers [9]. In addition to the effort of making these mid- to downstream processes more efficient, microbial strains have been improved by recombinant and other molecular biological methods, leading to the increase in microbial metabolic activities toward desired goals [10]. However, these conventional attempts have not always been successful owing to unexpected changes in the physiology and metabolism of host cells. Alternatively, rational metabolic and cellular engineering approaches have been tried to solve such problems in a number of cases, but they were also still limited to the manipulation of only a handful of genes encoding enzymes and regulatory proteins. In this regard, systematic approaches are indeed required not only for understanding the global context of the metabolic system but also for designing better metabolic engineering strategies. Recent advances in high-throughput experimental techniques have resulted in rapid accumulation of a wide range of biological data and information at different levels: genome, transcriptome, proteome, metabolome, and fluxome [11–17]. -

In Silico Prediction of Protein Flexibility with Local Structure Approach

Bioinformatics protein flexibility prediction Preprint – accepted for publication in Biochimie In silico prediction of protein flexibility with local structure approach. Tarun J. Narwani1,2,3,+, Catherine Etchebest1,2,3,+, Pierrick Craveur1,2,3,4, Sylvain Léonard1,2,3, Joseph Rebehmed1,2,3,5, Narayanaswamy Srinivasan6, Aurélie Bornot1,2,3,#, Jean-Christophe Gelly1,2,3 & Alexandre G. de Brevern1,2,3,4,* 1 INSERM, U 1134, DSIMB, Univ Paris, Univ de la Réunion, Univ des Antilles, F-75739 Paris, France. 2 Institut National de la Transfusion Sanguine (INTS), F-75739 Paris, France. 3 Laboratoire d'Excellence GR-Ex, F-75739 Paris, France. 4 Molecular Graphics Laboratory, Department of Integrative Structural and Computational Biology, The Scripps Research Institute, La Jolla, CA 92037, USA. 5 Department of Computer Science and Mathematics, Lebanese American University, Byblos 1h401 2010, Lebanon. 6 MBU, IISc, Bangalore, India # Present address: AstraZeneca, Discovery Sciences, Computational Biology, Alderley Park UK. Short title: bioinformatics protein flexibility prediction * Corresponding author: Mailing address: Dr. Alexandre G. de Brevern, INSERM UMR_S 1134, DSIMB, Université Paris, Institut National de Transfusion Sanguine (INTS), 6, rue Alexandre Cabanel, 75739 Paris cedex 15, France e-mail : [email protected] Abstract Flexibility is an intrinsic, essential feature of protein structures, directly linked to their functions. To this day, most prediction methods use crystallographic data (namely B-factors) as the only indicator of a protein’s inner flexibility and predict residues as rigid or flexible. PredyFlexy stands apart from other approaches as it relies on a definition of protein flexibility taken (i) not only from crystallographic data, but also (ii) from Root Mean Square Fluctuations (RMSFs) observed in Molecular Dynamics simulations. -

Bioinformatics Glossary Based Database of Biological Databases: DBD

J Biochem Tech (2009) 1(3):88-90 ISSN: 0974-2328 Bioinformatics glossary based Database of Biological Databases: DBD Siva Kiran RR, Setty MVN, Hanumatha Rao G* Received: 16 May 2009 / Received in revised form: 17 May 2009, Accepted: 18 May 2009, Published online: 6 June 2009 © Sevas Educational Society 2008 Abstract Database of Biological/Bioinformatics Databases (DBD) is a collection of 1669 databases and online resources collected from NAR Database Summary Papers (http://www.oxfordjournals.org/nar/database/a/) & Internet search engines. The database has been developed based on 437 keywords (Glossary) available in http://falcon.roswellpark.org/labweb/glossary.html. Keywords with their relevant databases are arranged in alphabetic order which enables quick accession of databases by researchers. Database description provides brief information about the database with a link to main web page. DBD is available online and can be accessed at http://www.biodbs.info. Keywords: Databases, biological databases, bioinformatics databases, bioinformatics glossary Databases of Biological Databases such as DOD – Database of Databases (http://www.progenebio.in/DoD/DoD.htm), MetaBase - The Database of Biological Database (http://biodatabase.org) and The Molecular Biology Database Collection – Updates (Baxevanis 2000), published annually by journal entitled “Nucleic Acids Research” help researchers to identify and correlate important queries beside providing a common platform for various molecular biology databases. Databases in DOD, Metabase & others have been grouped into categories as conceived by the respective authors. For instance, DOD has grouped 719 databases into 14 major categories (Galperin 2005) while Metabase has grouped 1119 databases into 21 categories. The uniqueness of the present DBD is the alphabetic listing of all databases based on technical terms (keywords) viz. -

In Silico Analysis of Protein

JSM Bioinformatics, Genomics and Proteomics Research Article In silico Analysis of Protein Nishtha Singh, Sonal Upadhyay, Ankur Jaiswar and Nidhi Mishra* Applied Science Division, Indian Institute of Information Technology, India *Corresponding author: Nidhi Mishra, CC-III, Indian Institute of Information Technology Allahabad, Devghat, Jhalwa, Allahabad-211012, Uttar Pradesh, India, Tel: 9450900033; Email: Submitted: 28 June 2016 Accepted: 31 August 2016 Published: 07 September 2016 Copyright © 2016 Mishra et al. OPEN ACCESS Keywords: Protein analysis; In Silico; Function; Sequence; Tools Abstract Proteins are inimitable as principal functional agent of living system. Therefore, comprehension of protein sequence and structure and its correlation with its function is equivalent to deciphering almost all of fundamental features of any biological/living system. A treasure of In silico tools is accessible for analysis of protein. Understanding and regeneration of protein function requires comprehension of reliance between protein sequence and its structure, its localization in cell and its interaction with other functional partners. This review provides an insight for various tools for In silico analysis of protein. ABBREVIATIONS UNIPROT: Universal Protein Resource; FASTA: FAST Alignment; BLAST: Basic Local Alignment Search Tool; Blastn: Nucleotide Basic Local Alignment Search Tool; Blastp: Protein [...] Iterated Basic Local Alignment Search Tool; SOPMA: Self [...] psi-blast (it establishes distant relationship between proteins), blastx (comparison of six-frame translation product of query sequence of nucleotide against database of protein sequence), tblastx (comparison of six-frame translation product of query sequence of nucleotide against database of nucleotide sequence) and tblastn (comparison of protein query sequence against six reading frames of database of nucleotide sequence) [2]. -
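The BLAST variants listed in this excerpt differ mainly in the molecule type of the query, the molecule type of the database, and which side is translated in six frames. A minimal Python sketch of that mapping follows; the table name and the helper function are hypothetical, and the entries for blastn and blastp reflect the usual meaning of those programs rather than text from the excerpt.

```python
# Hypothetical lookup table: program -> (query type, database type, translated side)
BLAST_PROGRAMS = {
    "blastn": ("nucleotide", "nucleotide", "none"),
    "blastp": ("protein", "protein", "none"),
    "blastx": ("nucleotide", "protein", "query"),
    "tblastn": ("protein", "nucleotide", "database"),
    "tblastx": ("nucleotide", "nucleotide", "both"),
}


def choose_program(query_type, db_type, translated_search=False):
    """Pick a BLAST program name from the query and database molecule types.

    `translated_search` only matters for nucleotide-versus-nucleotide
    searches, where both blastn (direct) and tblastx (translated) apply.
    """
    for name, (query, database, side) in BLAST_PROGRAMS.items():
        if (query, database) != (query_type, db_type):
            continue
        both_nucleotide = query == "nucleotide" and database == "nucleotide"
        if both_nucleotide and (side == "both") != translated_search:
            continue
        return name
    raise ValueError(f"no program for {query_type} query vs {db_type} database")


if __name__ == "__main__":
    print(choose_program("nucleotide", "protein"))
    print(choose_program("protein", "nucleotide"))
```

The table only records which program fits which input types; it does not run a search or model psi-blast, which iterates protein searches rather than changing the input types.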

Bioinformatics Is a New Discipline That Addresses the Need to Manage and Interpret the Data That in the Past Decade Was Massively Generated by Genomic Research

SABU M. THAMPI Assistant Professor Dept. of CSE LBS College of Engineering Kasaragod, Kerala-671542 [email protected] Introduction Bioinformatics is a new discipline that addresses the need to manage and interpret the data that in the past decade was massively generated by genomic research. This discipline represents the convergence of genomics, biotechnology and information technology, and encompasses analysis and interpretation of data, modeling of biological phenomena, and development of algorithms and statistics. Bioinformatics is by nature a cross-disciplinary field that began in the 1960s with the efforts of Margaret O. Dayhoff, Walter M. Fitch, Russell F. Doolittle and others and has matured into a fully developed discipline. However, bioinformatics is wide-encompassing and is therefore difficult to define. For many, including myself, it is still a nebulous term that encompasses molecular evolution, biological modeling, biophysics, and systems biology. For others, it is plainly computational science applied to a biological system. Bioinformatics is also a thriving field that is currently in the forefront of science and technology. Our society is investing heavily in the acquisition, transfer and exploitation of data and bioinformatics is at the center stage of activities that focus on the living world. It is currently a hot commodity, and students in bioinformatics will benefit from employment demand in government, the private sector, and academia. With the advent of computers, humans have become ‘data gatherers’, measuring every aspect of our life with inferences derived from these activities. In this new culture, everything can and will become data (from internet traffic and consumer taste to the mapping of galaxies or human behavior). -

Correction for Bell Et Al., in Silico Design and Validation of High-Affinity RNA Aptamers Targeting Epithelial Cellular Adhesion

Correction BIOPHYSICS AND COMPUTATIONAL BIOLOGY, CHEMISTRY Correction for “In silico design and validation of high-affinity RNA aptamers targeting epithelial cellular adhesion molecule dimers,” by David R. Bell, Jeffrey K. Weber, Wang Yin, Tien Huynh, Wei Duan, and Ruhong Zhou, which was first published March 31, 2020; 10.1073/pnas.1913242117 (Proc. Natl. Acad. Sci. U.S.A. 117, 8486–8493). The authors note that an additional affiliation was incorrectly identified for David R. Bell. This author’s sole affiliation should be listed as Computational Biological Center, IBM Thomas J. Watson Research Center, Yorktown Heights, NY 10598. The online version has been corrected. Published under the PNAS license. Published February 8, 2021. www.pnas.org/cgi/doi/10.1073/pnas.2100827118 PNAS 2021 Vol. 118 No. 7 e2100827118 https://doi.org/10.1073/pnas.2100827118 In silico design and validation of high-affinity RNA aptamers targeting epithelial cellular adhesion molecule dimers David R. Bell(a,1), Jeffrey K. Weber(a,1), Wang Yin(b,1), Tien Huynh(a), Wei Duan(b,2), and Ruhong Zhou(a,c,d,2) (a) Computational Biological Center, IBM Thomas J. Watson Research Center, Yorktown Heights, NY 10598; (b) School of Medicine, Deakin University, Waurn Ponds, VIC 3216, Australia; (c) Department of Chemistry, Columbia University, New York, NY 10027; and (d) Institute of Quantitative Biology, Zhejiang University, 310027 Hangzhou, China Edited by Peter Schuster, University of Vienna, Vienna, Austria, and approved March 6, 2020 (received for review August 1, 2019) Nucleic acid aptamers hold great promise for therapeutic applications due to their favorable intrinsic properties, as well as high-throughput experimental selection techniques. [...] modes to the target biomolecule. Although several groups have sought to improve protein–RNA docking (16, 17), whole-molecule -

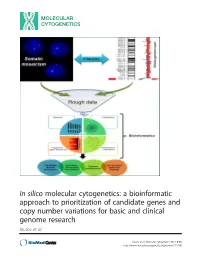

A Bioinformatic Approach to Prioritization of Candidate Genes and Copy Number Variations for Basic and Clinical Genome Research Iourov Et Al

In silico molecular cytogenetics: a bioinformatic approach to prioritization of candidate genes and copy number variations for basic and clinical genome research Iourov et al. Molecular Cytogenetics 2014, 7:98 http://www.molecularcytogenetics.org/content/7/1/98 METHODOLOGY Open Access Ivan Y Iourov1,2,3*, Svetlana G Vorsanova1,2 and Yuri B Yurov1,2 Abstract Background: The availability of multiple in silico tools for prioritizing genetic variants widens the possibilities for converting genomic data into biological knowledge. However, in molecular cytogenetics, bioinformatic analyses are generally limited to result visualization or database mining for finding similar cytogenetic data. Obviously, the potential of bioinformatics might go beyond these applications. On the other hand, the requirements for performing successful in silico analyses (i.e. deep knowledge of computer science, statistics etc.) can hinder the implementation of bioinformatics in clinical and basic molecular cytogenetic research. Here, we propose a bioinformatic approach to prioritization of genomic variations that is able to solve these problems. Results: Selecting gene expression as an initial criterion, we have proposed a bioinformatic approach combining filtering and ranking prioritization strategies, which includes analyzing metabolome and interactome data on proteins encoded by candidate genes. To finalize the prioritization of genetic variants, genomic, epigenomic, interactomic and metabolomic data fusion has been made. 
Structural abnormalities and aneuploidy revealed by array CGH and FISH have been evaluated to test the approach through determining genotype-phenotype correlations, which have been found similar to those of previous studies. -

Hydrophobic Collapse In

Computational Biology and Chemistry 30 (2006) 255–267 Hydrophobic collapse in (in silico) protein folding Michal Brylinski a,b, Leszek Konieczny c, Irena Roterman a,d,∗ a Department of Bioinformatics and Telemedicine, Collegium Medicum, Jagiellonian University, Kopernika 17, 31-501 Krakow, Poland b Faculty of Chemistry, Jagiellonian University, Ingardena 3, 30-060 Krakow, Poland c Institute of Medical Biochemistry, Collegium Medicum, Jagiellonian University, Kopernika 7, 31 034 Krakow, Poland d Faculty of Physics, Jagiellonian University, Reymonta 4, 30-060 Krakow, Poland Received 19 December 2005; received in revised form 6 April 2006; accepted 6 April 2006 Abstract A model of hydrophobic collapse, which is treated as the driving force for protein folding, is presented. This model is the superposition of three models commonly used in protein structure prediction: (1) ‘oil-drop’ model introduced by Kauzmann, (2) a lattice model introduced to decrease the number of degrees of freedom for structural changes and (3) a model of the formation of hydrophobic core as a key feature in driving the folding of proteins. These three models together helped to develop the idea of a fuzzy-oil-drop as a model for an external force field of hydrophobic character mimicking the hydrophobicity-differentiated environment for hydrophobic collapse. All amino acids in the polypeptide interact pair-wise during the folding process (energy minimization procedure) and interact with the external hydrophobic force field defined by a three-dimensional Gaussian function. The value of the Gaussian function usually interpreted as a probability distribution is treated as a normalized hydrophobicity distribution, with its maximum in the center of the ellipsoid and decreasing proportionally with the distance versus the center. -
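The external hydrophobic force field described in this abstract is a three-dimensional Gaussian whose value, normalized over the molecule, is read as a hydrophobicity distribution peaking at the center of the ellipsoid. A minimal Python sketch of such a field follows. The function name, the axis-aligned form with one standard deviation per axis, and normalization over the sampled points are assumptions made for illustration; the abstract does not give the authors' exact formula.

```python
import math


def gaussian_hydrophobicity(points, center=(0.0, 0.0, 0.0), sigmas=(1.0, 1.0, 1.0)):
    """Normalized 3D Gaussian evaluated at each (x, y, z) point.

    Assumption: an axis-aligned Gaussian with one sigma per axis, normalized
    so that the values over the supplied points sum to 1.
    """
    raw = []
    for point in points:
        exponent = sum(
            (coord - mean) ** 2 / (2.0 * sigma ** 2)
            for coord, mean, sigma in zip(point, center, sigmas)
        )
        raw.append(math.exp(-exponent))
    total = sum(raw)
    return [value / total for value in raw]


if __name__ == "__main__":
    # Three illustrative positions at increasing distance from the center.
    positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
    for position, value in zip(positions, gaussian_hydrophobicity(positions)):
        print(position, round(value, 4))
```

In this sketch the value is highest at the center and falls off with the squared distance scaled by each axis's sigma, so an elongated molecule can be modeled by giving one axis a larger sigma.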

In Silico Systems Analysis of Biopathways

In Silico Systems Analysis of Biopathways Dissertation submitted for the academic degree of Doctor of Natural Sciences at Universität Bielefeld. Submitted by Ming Chen, Bielefeld, March 2004 MSc. Ming Chen AG Bioinformatik und Medizinische Informatik Technische Fakultät Universität Bielefeld Email: [email protected] Approved dissertation for the academic degree of Doctor of Natural Sciences (Dr. rer. nat.) by Ming Chen, submitted to the Technische Fakultät of Universität Bielefeld on 18 March 2004, defended and approved on 10 August 2004 Examination committee: Prof. Dr. Ralf Hofestädt, Universität Bielefeld Prof. Dr. Thomas Dandekar, Universität Würzburg Prof. Dr. Robert Giegerich, Universität Bielefeld PD Dr. Klaus Prank, Universität Bielefeld Dr. Dieter Lorenz / Dr. Dirk Evers, Universität Bielefeld Abstract In the past decade, with the advent of high-throughput technologies, biology has migrated from a descriptive science to a predictive one. A vast amount of information on metabolism has been produced, and a number of specific genetic/metabolic databases and computational systems have been developed, which makes it possible for biologists to perform in silico analysis of metabolism. With experimental data from the laboratory, biologists wish to conduct their analysis systematically with an easy-to-use computational system. One major task is to implement molecular information systems that will allow the integration of different molecular database systems, and to design analysis tools (e.g. simulators of complex metabolic reactions). Three key problems are involved: 1) Modeling and simulation of biological processes; 2) Reconstruction of metabolic pathways, leading to predictions about the integrated function of the network; and 3) Comparison of metabolism, providing an important way to reveal the functional relationship between a set of metabolic pathways. -

Methods in Brief

RESEARCH HIGHLIGHTS METHODS IN BRIEF IMAGING Super-resolution imaging of neuronal circuits Fluorescence microscopy is a valuable tool for volumetric neural circuit reconstruction, but small structures such as synapses can be difficult to identify when imaged at diffraction-limited resolution. To address this issue and image neurons and synapses at high resolution over a large volume, Sigal et al. combined stochastic optical reconstruction microscopy (STORM) with serial ultra-thin sectioning. STORM was used to provide super-resolution images of neurons and synaptic proteins in each section, and the images were then aligned to generate 3D volumetric reconstructions using automated image-analysis tools. The authors used their platform to study on-off direction-selective ganglion cells in the mouse retina and were able to gain new insights into the structural basis of crossover inhibition in these cells. Sigal, Y.M. et al. Cell 163, 493–505 (2015). SYNTHETIC BIOLOGY Efficient in vivo mutagenesis in bacteria Introducing random mutations into DNA with in vitro techniques is easy, but getting this mutagenized DNA back into cells often is not. Performing mutagenesis directly in cells allows researchers to avoid this bottleneck, but current in vivo methods show only modest mutation rates. To improve these rates, Badran and Liu created and tested several plasmids that include genes affecting mutation frequency. The final plasmid tested, MP6, encodes dominant negative variants of a DNA-proofreading enzyme, a protein that impairs mismatch repair, a cytidine deaminase that increases base transitions and a transcriptional repressor. Escherichia coli carrying MP6 showed a 322,000-fold increase in the mutation rate of their chromosomal DNA compared to wild-type E.