A Standards-Based Framework for Test-Driven Agile Simulation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Customizing Eclipse RCP Applications: Techniques to Use with SWT and JFace

Customizing Eclipse RCP applications: Techniques to use with SWT and JFace. Skill Level: Intermediate. Scott Delap ([email protected]), Desktop/Enterprise Java Consultant. Annas Andy Maleh ([email protected]), Consultant. 27 Feb 2007. © Copyright IBM Corporation 1994, 2008. All rights reserved. Most developers think that an Eclipse Rich Client Platform (RCP) application must look similar in nature to the Eclipse integrated development environment (IDE). This isn't the case, however. This tutorial will explain a number of simple techniques you can use with the Standard Widget Toolkit (SWT) and JFace to create applications that have much more personality than the Eclipse IDE. Section 1. Before you start. About this tutorial: This tutorial will explain a number of UI elements that can be changed in Eclipse RCP, JFace, and SWT. Along the way, you will learn about basic changes you can make, such as fonts and colors. You will also learn advanced techniques, including how to create custom wizards and section headers. Used together, these should let you go from a typical-looking Eclipse RCP application to a distinctive but visually appealing one. Prerequisites: You should have a basic familiarity with SWT, JFace, and Eclipse RCP. System requirements: To run the examples, you need a computer capable of adequately running Eclipse V3.2 and 50 MB of free disk space. Section 2. Heavyweight and lightweight widgets. Before diving into techniques that can be used to modify SWT, JFace, and Eclipse RCP in general, it's important to cover the fundamental characteristics of SWT and how they apply to the appearance of the widget set. -

Recoder with Eclipse

School of Mathematics and Systems Engineering. Reports from MSI - Rapporter från MSI. Recoder with Eclipse. Saúl Díaz González, Álvaro Pariente Alonso. June 2009. MSI Report 09031. Växjö University, SE-351 95 VÄXJÖ. ISSN 1650-2647. ISRN VXU/MSI/DA/E/--09031/--SE. Abstract: RECODER is a Java framework aimed at source code analysis and metaprogramming. It works on several layers to offer a set of semi-automatic transformations and tools. These range from a source code parser and unparser offering a highly detailed syntactical model, through analysis tools that can infer types of expressions, evaluate compile-time constants and keep cross-reference information, to transformations of the Java sources themselves, with a library of common transformations and incremental analysis capabilities. Together they make up a useful set of tools that can be extended to provide the basis for more advanced refactoring and metacompiler applications in very different fields, from code beautification and simple preprocessors, through software visualization and design problem detection tools, to adaptive programming environments and invasive software composition. The core system development of RECODER started in the academic field and, as such, it was confined to a small base of users. Although a powerful tool, the RECODER framework lacks usability and requires extensive and careful configuration to work properly. To overcome these limitations, we have taken advantage of the Eclipse Integrated Development Environment (Eclipse IDE) developed by IBM, specifically its Plugin Framework Architecture, to build a tool that integrates RECODER functionality into a widely used, well-known platform and provides a semi-automated and user-friendly interface. -

Eclipse (Software)

Eclipse (software). Screenshot of Eclipse 3.6. Developer(s): Free and open source software community. Stable release: 3.6.2 Helios / 25 February 2011. Preview release: 3.7M6 / 10 March 2011. Development status: Active. Written in: Java. Operating system: Cross-platform: Linux, Mac OS X, Solaris, Windows. Platform: Java SE, Standard Widget Toolkit. Available in: Multilingual. Type: Software development. License: Eclipse Public License. Website: [1]. Eclipse is a multi-language software development environment comprising an integrated development environment (IDE) and an extensible plug-in system. It is written mostly in Java and can be used to develop applications in Java and, by means of various plug-ins, other programming languages including Ada, C, C++, COBOL, Perl, PHP, Python, Ruby (including Ruby on Rails framework), Scala, Clojure, and Scheme. The IDE is often called Eclipse ADT for Ada, Eclipse CDT for C/C++, Eclipse JDT for Java, and Eclipse PDT for PHP. The initial codebase originated from VisualAge.[2] In its default form it is meant for Java developers, consisting of the Java Development Tools (JDT). Users can extend its abilities by installing plug-ins written for the Eclipse software framework, such as development toolkits for other programming languages, and can write and contribute their own plug-in modules. Released under the terms of the Eclipse Public License, Eclipse is free and open source software. It was one of the first IDEs to run under GNU Classpath and it runs without issues under IcedTea. Architecture: Eclipse employs plug-ins in order to provide all of its functionality on top of (and including) the runtime system, in contrast to some other applications where functionality is typically hard coded. -

Virgo White Paper Introduction: The Eclipse Virgo Project Provides a Modular Java Server Runtime and Is Part of the Eclipse Runtime (EclipseRT) Umbrella Project

Eclipse Virgo: A Technical Overview. White Paper, Version 1.2, June 2012. Table of Contents: Introduction; OSGi Introduction; History; Virgo Feature Summary; Benefits; Why Choose Virgo?; Success Stories; A Warning; Technology; Virgo Runtime Deliverables -

An Empirical Evaluation of OSGi Dependencies Best Practices in the Eclipse IDE Lina Ochoa, Thomas Degueule, Jurgen Vinju

An Empirical Evaluation of OSGi Dependencies Best Practices in the Eclipse IDE. Lina Ochoa, Thomas Degueule, Jurgen Vinju. To cite this version: Lina Ochoa, Thomas Degueule, Jurgen Vinju. An Empirical Evaluation of OSGi Dependencies Best Practices in the Eclipse IDE. 15th International Conference on Mining Software Repositories, May 2018, Gothenburg, Sweden. 10.1145/3196398.3196416. hal-01740131. HAL Id: hal-01740131, https://hal.archives-ouvertes.fr/hal-01740131. Submitted on 27 Mar 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Authors: Lina Ochoa, Centrum Wiskunde & Informatica, Amsterdam, Netherlands, [email protected]; Thomas Degueule, Centrum Wiskunde & Informatica, Amsterdam, Netherlands, [email protected]; Jurgen Vinju, Centrum Wiskunde & Informatica, Amsterdam, Netherlands, and Eindhoven University of Technology, Eindhoven, Netherlands, [email protected]. ABSTRACT: OSGi is a module system and service framework that aims to fill Java’s lack of support for modular development. Using OSGi, developers divide software into multiple bundles that declare constrained ... Introduction (fragment): ... that can be implemented and tested independently. This also fosters reuse by allowing software components to be reused from one system to the other, or even to be substituted by one another provided that they satisfy the appropriate interface expected by a client. -

Flexibility at the Roots of Eclipse

Vol.6 January 2007. In this issue: Dynamic Wizard Modeling with GMF: Using GMF to Build a Dynamic Wizard Framework and a Graphical Editor; Introduction to the Generic Eclipse Modeling System: Developing a Graphical Modeling Tool for Eclipse; Deploying the BIRT Viewer to JBoss: Disseminate Report Content to an Application Server; Subversive: The Eclipse Plug-In for Subversion; Enabling Integration and Interoperability for Eclipse based Development: An Introduction to the Corona Project; Flexibility at the Roots of Eclipse: Solving the GUI Dilemma: SWTSwing and Eclipse on Swing. Table of Contents. FEATURES. 29 Flexibility at the Roots of Eclipse. Solving the GUI Dilemma: SWTSwing and Eclipse on Swing. No trench in the world of Java is deeper than that between SWT and Swing or Eclipse and Sun. Unity is only found in the knowledge that everybody suffers from this argument. But how to end this almost religious battle over the righteous GUI-toolkit? How to bang their heads together if they only know one point of view: for them or against them! The sister projects SWTSwing and Eclipse on Swing (EOS) achieve this trick. DEPARTMENT: News & Trends. -

Model-Based Development of Engine Control Systems: Experiences and Lessons Learnt

This is a repository copy of Model-Based Development of Engine Control Systems: Experiences and Lessons Learnt. White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/177763/ Version: Accepted Version. Proceedings Paper: Cooper, Justin, De La Vega, Alfonso, Paige, Richard Freeman orcid.org/0000-0002-1978-9852 et al. (5 more authors) (Accepted: 2021) Model-Based Development of Engine Control Systems: Experiences and Lessons Learnt. In: ACM/IEEE 24th International Conference on Model Driven Engineering Languages and Systems. (In Press) Reuse: Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item. Takedown: If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ Model-Based Development of Engine Control Systems: Experiences and Lessons Learnt. Justin Cooper, Alfonso de la Vega, Richard Paige, Dimitris Kolovos, Department of Computer Science; Michael Bennett, Caroline Brown, Beatriz Sanchez Piña, Horacio Hoyos Rodriguez, Rolls-Royce, -

Liberty Quarterly Update 19.0.0.7-19.0.0.9

Liberty Quarterly Update 19.0.0.7-19.0.0.9. Alasdair Nottingham, STSM, @nottycode. WebSphere Proof of Technology. Agenda: • Part 1: Liberty value and evolution • Part 2: Technical deep-dive: what’s new this quarter • Part 2a: Jakarta EE & Eclipse MicroProfile – Kevin Sutter • Part 2c: Liberty Performance Update – Vijay Sundaresan • Part 5: Q&A • For complete WAS Update presentation, see http://ibm.biz/WebSphereUpdate. Liberty value and evolution: Why would I want it? Why are customers choosing Liberty? • “We want to reduce costs, simplify, and become cloud ready” • Development efficiencies • Flexible license options – even with collective management • Rapid provisioning – small disk size – zip packages – remove the Ops team from the app update flow • Lower running costs – dynamic config – zero migration – self-tuning thread pools. Fit-for-purpose server: • You control which features are loaded into each server instance: <feature>servlet-4.0</feature> <feature>jsf-2.3</feature>. [Diagram: features jsp-2.3, jsf-2.3 (Java EE), servlet-4.0, http-1.1 and appmgr on top of the Liberty kernel.] Liberty in Docker: [Diagram: app and config + liberty and java + os config = portable container; hosted and private cloud targets shown: IBM Cloud Kubernetes Service, Azure Kubernetes Service, Google Kubernetes Engine, Amazon Elastic Kubernetes Service, Jelastic, Red Hat OpenShift Container Platform, Pivotal Kubernetes Service, Pivotal Cloud Foundry.] FROM open-liberty ADD myapp.war /config/dropins/myapp.war -
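
The per-server feature selection mentioned in the Liberty excerpt is normally declared in the `featureManager` element of a Liberty `server.xml`. A minimal sketch, assuming only the two features named in the excerpt; the `description` text is illustrative, and everything else a real configuration would need (HTTP endpoint, application entries) is left out:

```xml
<server description="illustrative sketch, not taken from the slides">
    <!-- Only the features listed here are loaded into this server instance -->
    <featureManager>
        <feature>servlet-4.0</feature>
        <feature>jsf-2.3</feature>
    </featureManager>
</server>
```

Keeping the list short is what the excerpt means by a fit-for-purpose server: features that are not listed are not loaded.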

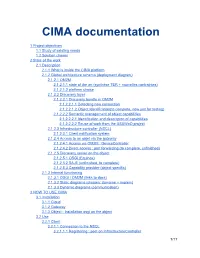

CIMA Documentation

CIMA documentation 1 Project objectives 1.1 Study of existing needs 1.2 Solution chosen 2 State of the work 2.1 Description 2.1.1 What is inside the CIMA platform 2.1.2 Global architecture schema (deployment diagram) 2.1.2.1 OM2M 2.1.2.1.1 State of the art (TER summary + new constraints) 2.1.2.1.2 Platform choice 2.1.2.2 Discovery layer 2.1.2.2.1 Discovery bundle in OM2M 2.1.2.2.1.1 Detecting new connection 2.1.2.2.1.2 Object identification (to complete, now just for testing) 2.1.2.2.2 Semantic management of object capabilities 2.1.2.2.2.1 Identification and description of capabilities 2.1.2.2.2.2 Reuse of work from the ASAWoO project 2.1.2.3 Infrastructure controller (NSCL) 2.1.2.3.1 Client notification system 2.1.2.4 Access to an object via the gateway 2.1.2.4.1 Access via OM2M: DeviceController 2.1.2.4.2 Direct access: port forwarding (to complete, unfinished) 2.1.2.5 Discovery server on the object 2.1.2.5.1 OSGi (Equinox) 2.1.2.5.2 SAJE (unfinished, to complete) 2.1.2.5.3 Capability provider (object specific) 2.1.3 Internal functioning 2.1.3.1 OSGi / OM2M (links to docs) 2.1.3.2 Static diagrams (classes: domain + implementation) 2.1.3.3 Dynamic diagrams (communication) 3 How to use CIMA 3.1 Installation 3.1.1 Cloud 3.1.2 Gateway 3.1.3 Object: installing OSGi on the object 3.2 Use 3.2.1 Client 3.2.1.1 Connection to the NSCL 3.2.1.1.1 Registering: POST on InfrastructureController 3.2.1.1.2 Notification system: several methods, OM2M-based 3.2.1.2 InfrastructureController API 3.2.2 Creating a server on the object 3.2.2.1 ServerInfos -

Using Actuate BIRT Designer Professional Information in This Document Is Subject to Change Without Notice

Using Actuate BIRT Designer Professional Information in this document is subject to change without notice. Examples provided are fictitious. No part of this document may be reproduced or transmitted in any form, or by any means, electronic or mechanical, for any purpose, in whole or in part, without the express written permission of Actuate Corporation. © 1995 - 2012 by Actuate Corporation. All rights reserved. Printed in the United States of America. Contains information proprietary to: Actuate Corporation, 951 Mariners Island Boulevard, San Mateo, CA 94404 www.actuate.com www.birt-exchange.com The software described in this manual is provided by Actuate Corporation under an Actuate License agreement. The software may be used only in accordance with the terms of the agreement. Actuate software products are protected by U.S. and International patents and patents pending. For a current list of patents, please see http://www.actuate.com/patents. Actuate Corporation trademarks and registered trademarks include: Actuate, ActuateOne, the Actuate logo, Archived Data Analytics, BIRT, BIRT 360, BIRT Data Analyzer, BIRT Performance Analytics, Collaborative Reporting Architecture, e.Analysis, e.Report, e.Reporting, e.Spreadsheet, Encyclopedia, Interactive Viewing, OnPerformance, Performancesoft, Performancesoft Track, Performancesoft Views, Report Encyclopedia, Reportlet, The people behind BIRT, X2BIRT, and XML reports. Actuate products may contain third-party products or technologies. Third-party trademarks or registered trademarks of their respective owners, companies, or organizations include: Mark Adler and Jean-loup Gailly (www.zlib.net): zLib. Adobe Systems Incorporated: Flash Player. Apache Software Foundation (www.apache.org): Axis, Axis2, Batik, Batik SVG library, Commons Command Line Interface (CLI), Commons Codec, Derby, Hive driver for Hadoop, Shindig, Struts, Tomcat, Xalan, Xerces, Xerces2 Java Parser, and Xerces-C++ XML Parser. -

CS5233 Components – Models and Engineering (Komponententechnologien) Master of Science (Informatik)

Prof. Dr. Th. Letschert. CS5233 Components – Models and Engineering (Komponententechnologien), Master of Science (Informatik). OSGi - Introduction. OSGi: what, why and who. What OSGi stands for: once, OSGi meant Open Service Gateway Initiative; now, OSGi is a name for a Dynamic Module System for Java. Slides on OSGi are based on: OSGi Alliance, OSGi Service Platform Core Specification, 2009; J. McAffer, P. Vanderlei, S. Archer, OSGi and Equinox, Addison-Wesley 2010; Wütherich/Hartmann/Kolb/Lübken, Die OSGI Service Platform, Dpunkt Verlag, 2008. What OSGi is: OSGi is about building systems from components. OSGi components work together in a system and can be managed (installed / removed) at runtime. OSGi is a component framework for Java. OSGi components are called bundles: they are units of resources, can be installed, have managed mutual dependencies, are independent units, can export services and run processes, and run in an OSGi container. OSGi is a (set of) specification(s) by the OSGi Alliance (founded 1999, http://www.osgi.org), with wide adoption outside the alliance, developed in expert groups: Core platform (CPEG); Vehicle (VEG), OSGi in vehicles; Mobile (MEG), OSGi in mobile devices; Enterprise (EEG), OSGi in enterprise solutions; Residential (REG), OSGi in home automation. It is structured into 2 documents: Core Specification (framework) and Service Compendium (standard services). Members listed: Alcatel-Lucent, Aplix Corporation, Deutsche Telekom, Ericsson Mobile Platforms AB, Hitachi, Ltd., IBM Corporation, LinkedIn, Makewave, Mitsubishi Electric Corporation, NEC Corporation, NTT, Oracle Corporation, Paremus, Ltd., Progress Software, ProSyst Software GmbH, Qualcomm, Red Hat, SAP AG, Siemens AG, Siemens Enterprise Communications, Software AG, Sonatype Inc. -

Marketing Update for June 2012 EclipseCon 2013

Marketing Update for June 2012. EclipseCon 2013: • Seaport Hotel and Trade Center, Boston, MA • March 25-28, 2012. Eclipse Training Series: Marketing program for Eclipse members that offer training courses on RCP, Modeling and BIRT. Promotion runs mid May to end of June. Juno Launch (June 2012): Juno Demo Camps for June. Focus on Eclipse 4 being the primary platform for Juno. Key highlights: Code Recommenders; Equinox support for OSGi R5; Virgo Nano release supporting very small and fast OSGi applications; Xtend introduces support for debugging and improved IDE integration. M2M Industry Working Group: Published common marketing presentation. Press release published highlighting accomplishments over the past 6 months. Accomplishments: Paho code available for an MQTT client; Lua client code proposed; Koneki part of the Juno release train; active participation from Sierra Wireless, Eurotech, IBM and Band XI. We need to do recruitment of new members. ECLIPSE OPEN SOURCE DEVELOPER REPORT JUNE 2012 COPYRIGHT © 2012 THE ECLIPSE FOUNDATION ALL RIGHTS RESERVED THIS WORK IS LICENSED UNDER THE CREATIVE COMMONS ATTRIBUTION 3.0 UNPORTED LICENSE. TO VIEW A COPY OF THIS LICENSE, VISIT HTTP://CREATIVECOMMONS.ORG/LICENSES/BY/3.0/ OR SEND A LETTER TO CREATIVE COMMONS, 171 SECOND STREET, SUITE 300, SAN FRANCISCO, CALIFORNIA, 94105, USA ABOUT THE SURVEY • Survey was open to the Eclipse community from April 23 to May 15, 2012.