Toolkit for Social Experiments in VR

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Current, August 23, 2010

University of Missouri, St. Louis IRL @ UMSL Current (2010s) Student Newspapers 8-23-2010 Current, August 23, 2010 University of Missouri-St. Louis Follow this and additional works at: https://irl.umsl.edu/current2010s Recommended Citation: University of Missouri-St. Louis, "Current, August 23, 2010" (2010). Current (2010s). 19. https://irl.umsl.edu/current2010s/19 This Newspaper is brought to you for free and open access by the Student Newspapers at IRL @ UMSL. It has been accepted for inclusion in Current (2010s) by an authorized administrator of IRL @ UMSL. For more information, please contact [email protected]. The Current, AUG. 23, 2010, VOL. 44, ISSUE 1317, WWW.THECURRENT-ONLINE.COM. ALSO INSIDE: Triton eyes PGA (5): Golf ace Matt Rau’s bright future. Scott Pilgrim (9): Best comic book movie ever. City Museum (16): The only museum made for kids. Statshot. WEEKLY WEB POLL: Did you get your free metro pass yet? 24% 6%. EDITORIAL STAFF: Editor-in-Chief: Sequita Bean. Managing Editor: Gene Doyel. Features Editor: Jen O’Hara. Sports Editor: Michael Frederick. Assoc. Sports Editor: Stephanie Benz. A&E Editor: William Kyle. Assoc. -

Videojuegos Como Forma De Repensar La Relación Entre Estética Y Política

Video games as a way of rethinking the relationship between aesthetics and politics: reflections from the periphery. Sebastián Gómez Urra. Introduction. My aim is to raise the question of treating video games as a case study that is not only important but also necessary for understanding our culture today. This work takes up the missing Latin American perspective on the subject, an absence that can be understood as both a symptom and a consequence of various economic and sociocultural motivations that have, in a sense, relegated our continent to the periphery of the structure that makes up this cultural industry. The video game industry, which the British government has for almost ten years regarded as a fundamental part of the economy within the cultural and creative industries sector [1] (Creative Industries Economic Estimates), has come to be seen by some authors (Dyer-Witheford and de Peuter; Kirkpatrick, Computer Games and the Social Imaginary) as the paradigmatic example of contemporary capitalism. From this perspective, every industry that involves some kind of intellectual labor has tended to follow the patterns established by the playful regimes of work that sustain the constant supply of information and creativity that post-Fordist cognitive capitalism requires in order to function. My intention, therefore, is to recontextualize this discourse in the Latin American reality. To do so, I will focus on two aspects proposed by Dyer-Witheford and de Peuter in Games of Empire, which present the video game industry as a vanguard strategy of the [1] By this I want to emphasize the existence of public policies and cultural funds dedicated specifically to it. -

Universidade Presbiteriana Mackenzie Érika Fernanda

UNIVERSIDADE PRESBITERIANA MACKENZIE. ÉRIKA FERNANDA CARAMELLO. EXTRA LIFE: living and learning in the Brazilian games industry. São Paulo 2019. Thesis presented to the Graduate Program in Education, Art and History of Culture of the Universidade Presbiteriana Mackenzie in partial fulfillment of the requirements for the degree of Doctor in Education, Art and History of Culture. ADVISOR: Prof. Dr. Cláudia Coelho Hardagh. C259v Caramello, Érika Fernanda. Vida extra: vivendo e aprendendo na indústria brasileira de games / Érika Fernanda Caramello. 230 leaves: ill.; 30 cm. Thesis (Doctorate in Education, Art and History of Culture), Universidade Presbiteriana Mackenzie, São Paulo, 2019. Advisor: Cláudia Coelho Hardagh. Bibliographic references: leaves 154-163. 1. Games. 2. Pedagogical praxis. 3. Professional training. 4. Cultural industry. 5. Creative industry. 6. National cultural identity. I. Hardagh, Cláudia Coelho, advisor. II. Title. CDD 794.8 Librarian in charge: Andrea Alves de Andrade - CRB 8/9204. I dedicate this to all the national agents of the games industry, especially those who are driving change, believing that their participation in its growth and recognition is fundamental. ACKNOWLEDGMENTS. To the Universidade Presbiteriana Mackenzie, which offered me a full scholarship during the program, without which this work would not have been feasible. To my mentors Silvana Seabra, Márcia Tiburi, Wilton Azevedo (in memoriam), Elcie Masini, Mírian Celeste and Regina Giora, to the other professors and to all my colleagues in the Graduate Program in Education, Art and History of Culture, for the welcome and the good times over these years. -

Eternal Coders the Veterans Making Games on Mobile

ALL FORMATS. LIFTING THE LID ON VIDEO GAMES. Eternal coders: The veterans making games on mobile. Reviling rodents: Why rats continue to infest our video games. Point Blank: Code your own shooting gallery. Issue 20 £3 wfmag.cc Stealth meets Spaghetti Western. F2P game makers have a responsibility to their players. Free-to-play has brought gaming to billions of new players across the globe: from the middle-aged Middle Americans discovering hidden object puzzles to the hundreds of millions of mobile gamers playing MOBAs in China. People who wouldn’t (or couldn’t) buy games now have an unfathomable choice, spanning military shooters to interior decorating sims. F2P is solely responsible for this dramatic expansion of our art across the borders of age, race, culture, and continents. The joy of gaming is now shared by nearly the entire globe. [...] offer: any player can close their account and receive a full refund for any spending within the last 60 days. This time limit gives parents, guardians, carers, and remorseful spenders a full billing cycle to spot errant spending and another cycle to request their funds back. It’s also a clear signal to our players and the gaming community that F2P is not predicated on one-time tricks but on building healthy, sustainable relationships with players. How such a refund policy would be publicised and adopted is a little more tricky. But faced with mounting [...] WILL LUTON: Will Luton is a veteran game designer and product manager who runs Department of Play, the games industry’s first -

A Very Happy Wireframe-Iversary!

ALL FORMATS. Revealing the secrets of video games. THE ENDLESS MISSION: Learn Unity in a genre-bending sandbox. MURDER BY NUMBERS: Solve pixel puzzles to catch a killer. Issue 26 £3 wfmag.cc ANNIVERSARY EDITION: The 25 finest games of the past 12 months. The myth of the video game auteur. Forget the breaking news. Flip past the features, the tips, tricks, and top ten lists. No, the true joy of old gaming magazines lies in the advertising. After all, video game advertisements offer a window into how the industry understands its products, its audience, and itself at a specific moment – timestamped snapshots of the medium’s prevailing attitudes and values. Of course, sometimes you stumble across a vintage ad that’s so peculiar it merits further attention. That was certainly the case when I recently encountered a 1999 advertisement for Interplay Entertainment. [...] Years earlier, Interplay had adopted the corporate philosophy of creating products “by gamers, for gamers,” and that motto appears prominently in the ad. Yet, everything else about the campaign seems to communicate precisely the opposite. JESS MORRISSETTE: Jess Morrissette is a professor of Political Science at Marshall University, where he studies the politics of -

1001 Video Games You Must Play Before You Die

1001 Video Games You Must Play Before You Die G = Completed B = Played R = Not played bold = in collection 1971-1980 (1st GENERATION) 1. The Oregon Trail Multi-platform MECC 1971 2. Pong Multi-platform Atari 1972 1976-1992 (2nd GENERATION) 3. Breakout Multi-platform Atari 1976 4. Boot Hill Arcade Midway 1977 5. Combat Atari 2600 Atari 1977 6. Space Invaders Arcade Taito 1978 7. Adventure Atari 2600 Atari 1979 8. Asteroids Arcade Atari 1979 9. Galaxian Arcade Namco 1979 10. Lunar Lander Arcade Atari 1979 11. Battle Zone Arcade Atari 1980 12. Defender Arcade Williams 1980 13. Eamon Apple II D. Brown 1980 14. Missile Command Arcade Atari 1980 15. Rogue Multi-platform M. Toy, G. Wichman, K. Arnold 1980 16. Tempest Arcade Atari 1980 17. MUD Multi-platform R. Trubshaw, R. Bartle 1980 18. Pac-Man Arcade Namco 1980 19. Phoenix Arcade Amstar Electronics 1980 20. Zork I Multi-platform Infocom 1980 21. Warlords Arcade / Atari 2600 Atari 1980 22. Centipede Arcade Atari 1980 23. Galaga Arcade Namco 1981 24. Donkey Kong Arcade Nintendo 1981 25. Qix Arcade R. Pfeiffer, S. Pfeiffer 1981 26. Scramble Arcade Konami 1981 27. Stargate Arcade Vid Kidz 1981 28. Venture Multi-platform Exidy 1981 29. Ms. Pac-Man Arcade Midway 1981 30. Frogger Arcade Konami 1981 31. Gorf Arcade Midway 1981 32. Ultima Multi-platform Origin Systems 1981 33. Gravitar Arcade Atari 1982 34. Joust Arcade Williams 1982 35. The Hobbit Multi-platform Beam Software 1982 36. Choplifter Multi-platform Brøderbund 1982 37. Robotron 2084 Arcade Williams 1982 38. -

Procedural Content Generation for Cooperative Games Bologna

Procedural Content Generation for Cooperative Games Rafael Vilela Pinheiro de Passos Ramos Thesis to obtain the Master of Science Degree in Bologna Master Degree in Information Systems and Computer Engineering Supervisors: Prof. Rui Felipe Fernandes Prada Prof. Carlos António Roque Martinho Examination Committee: Chairperson: Prof. Mário Rui Fonseca dos Santos Gomes Supervisor: Prof. Rui Felipe Fernandes Prada Member of the Committee: Prof. Luis Manuel Ferreira Fernandes Moniz November 2015 Acknowledgments I thank my supervisors Rui Prada and Carlos Martinho for the guidance and insight they provided throughout the year while I developed this work. I would also like to thank fellow student Miguel Santos for his invaluable assistance during testing, even when health wasn’t helping. I would like to thank Sofia Oliveira for her suggestions on experimental methodology. Lastly, a big thanks to my parents, my family and all my friends, who put up with me all year long, listening to and discussing whatever subject I threw at them. Abstract Procedural content generation, a more popular topic now than ever, is an interesting concept that has been applied in several areas, including game-making. Previously used mainly as a means of compacting large amounts of data, generated content is now used to create theoretically infinite worlds. Level and content generation eases the burden on game developers and artists. As more realism and greater diversity are demanded, the need for efficient and fun level generators increases. At the same time, cooperative play is becoming more and more common, with a majority of games having a cooperative mode. A noticeable amount of research has been done on both of these areas and on what makes them fun; however, little work has been done that combines the two fields. -

EDICIÓN ESPECIAL Lo Mejor De La E3 |UNA REALIZACION DE PLAYADICTOS| 03 Todojuegos Índice 04

OPINION IN HARDCORE MODE. JULY 2014. TodoJuegos. Complete guide to all the games announced. The “indies” steal the show again. How we experienced the fair “in situ” in Los Angeles. SPECIAL EDITION: The Best of E3 | A PLAYADICTOS PRODUCTION | TodoJuegos Contents: 04. An event that sets the bar high 10. How we experienced E3 16. Abzu 18. Assassin’s Creed Unity 20. Batman: Arkham Knight 22. Battlefield: Hardline 24. Bayonetta 2 26. Bloodborne 28. Captain Toad Treasure Tracker 30. Crackdown 32. D4: Dark Dreams Don’t Die 34. Dead Island 2 36. Destiny 38. Devil’s Third 40. Dragon Age: Inquisition 42. Dragon Ball Xenoverse 44. Evolve 46. Far Cry 4 48. FIFA 15 50. Final Fantasy Type-0 52. Forza Horizon 2 54. Grand Theft Auto V 56. Grim Fandango 58. Halo: Master Chief Collection 60. Hyrule Warriors 62. Inside 64. Kirby and the Rainbow Curse 72. Mario Maker 74. Metal Gear Solid V 76. Mirror’s Edge 78. Mortal Kombat X 80. No Man’s Sky 82. Ori and the Blind Forest 84. Phantom Dust 86. Pokémon Omega Ruby & Alpha Sapphire 88. Rainbow Six Siege 90. Rise of the Tomb Raider 92. Scalebound 94. Splatoon 96. Star Wars: Battlefront 98. Sunset Overdrive 100. Super Smash Bros 102. The Crew 104. The Division 106. The Legend of Zelda 108. The Order: 1886 110. The Sims 4 112. The Witcher 3: Wild Hunt 114. Uncharted 4: A Thief’s End 116. Xenoblade Chronicles 118. Yoshi’s Woolly World 120. A look at Mass Effect 124. Choza de la INDIEgencia 128. -

ISSUE #82 with Dragons in an It Is Almost Time to Be Hometown Endless Dungeon

Family Friendly Gaming: The VOICE of the FAMILY in GAMING. ISSUE #82, May 2014. Batman Tinkers with Dragons in an Endless Dungeon. Can you Tumblestone the Altitude? Get those edicts ready. It is almost time to be El Presidente in Tropic 5!! Sing of your HomeTown Story on a Journey to meet PI. CONTENTS. Links: Home Page. Section and Page(s): Editor’s Desk 4; Female Side 5; Working Man Gamer 7; Sound Off 8 - 10; Talk To Me Now 12 - 13; Devotional 14; Video Games 101 15; In The News 16 - 23; State of Gaming 24; Reviews 25 - 37; Sports 38 - 41; Developing Games 42 - 65; Recent Releases 66 - 75; Last Minute Tidbits 76 - 90. “Family Friendly Gaming” is trademarked. Contents of Family Friendly Gaming is the copyright of Paul Bury, and Yolanda Bury with the exception of trademarks and related indicia (example Digital Praise); which are property of their individual owners. Use of anything in Family Friendly Gaming that Paul and Yolanda Bury claims copyright to is a violation of federal copyright law. Contact the editor at the business address of: Family Friendly Gaming 7910 Autumn Creek Drive Cordova, TN 38018 [email protected] Trademark Notice: Nintendo, Sony, Microsoft all have trademarks on their respective machines, and games. The current seal of approval, and boy/girl pics were drawn by Elijah Hughes thanks to a wonderful donation from Tim Emmerich. Peter and Noah are inspiration to their parents. Editor’s Desk FEMALE SIDE perfect. -

Computoredge 08/01/14: a Show for the Technophile

August 1, 2014 List of ComputorEdge Sponsors San Diego ComputorEdge Sponsors Colocation and Data Center redIT With approaches like smart security, customized colocation and an extensive range of managed services, redIT helps you intelligently leverage IT. Computer Store, Full Service Chips and Memory New Systems Starting At $299 Visit Our Website or Call for Hardware, Software, Systems, or Components Laptop*Desktop*Server IT Service * Upgrades * Service Everyday Low Prices Macintosh Specialists Maximizers Serving San Diego County Since 1988 * Onsite Macintosh Service for Home and Small Office Needs * ACSP: Apple Certified Support Professional ACTC: Apple Certified Technical Coordinator Apple Consultant's Network Repair General Hi-Tech Computers Notebooks, Monitors, Computers and Printers We Buy Memory, CPU Chips, Monitors and Hard Drives Windows 7 Upgrades Phone (858) 560-8547 Colorado ComputorEdge Sponsors ComputorEdge™ Online, 08/01/14 Click to Visit ComputorEdge™ Online on the Web! A Show for the Technophile The Open Source Convention (OSCON) is all about free software. Magazine Summary List of ComputorEdge Sponsors Digital Dave by Digital Dave Digital Dave answers your tech questions. Finalize a DVD After Recording?; Unwanted Windows Programs. OSCON: A Computer Show for Nerds by Jack Dunning Open Source Enthusiasts Gather to Share Their Love of Free Software Jack takes a day to meet the people and explore the offerings of open source software. Calorie Counting Revisited with AutoHotkey by Jack Dunning After One Year, the Calorie Counting Script Comes Out of the Drawer for New Features Very few people stay on a diet for more than a couple of weeks. -

Title Brief Significance Icivics Cart Life Papers, Please

Columns: Title, Brief, Significance. iCivics. Cart Life. Papers, please. Re-Mission, Re-Mission2: Fight against Cancer. PeaceMaker: Playing two representations/perspectives of the same topic (2 games in one?); brings isolated events together; significance of leveraging Reuters. What pieces of the game experiences allow players to make choices? Some experiences within the game are intentionally provocative and some not. Depends on the target audience/player's conscious choice about game play and meaning making. Trace Effects: A time-traveling adventure that teaches English as a second language. EVOKE. EnerCities. At-Risk. Spent. SimCity Societies: Shape cultures, societal behaviors and environments. Vigilance 1.0: Protect a city from immorality while trying to maximize your score. What the Frack: Single-player, text-based decision making game. Acting as the state government for a region that has recently discovered a large deposit of shale oil and natural gas beneath its ground, the player must make decisions that impact the economy, environment, public health, and “quality of life” (a combination of the previous three plus public opinion and industry support) of the community. Macon Money. Lit to Quit: Mobile game for smoking reduction. 9 Minutes: 9 months of pregnancy in 9 minutes. Healthy birthing practices: 9 months of pregnancy in 9 minutes. Users play out the adventure of pregnancy and are rewarded for keeping both the mother-to-be and the baby inside of her happy and healthy. Worm Attack: Deworming awareness. Family Choices: Highlighting place and value of girls in the -

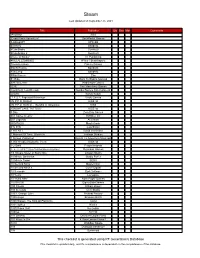

This Checklist Is Generated Using RF Generation's Database. This Checklist Is Updated Daily, and Its Completeness Is Dependent on the Completeness of the Database

Steam Last Updated on September 25, 2021 Title Publisher Qty Box Man Comments !AnyWay! SGS !Dead Pixels Adventure! DackPostal Games !LABrpgUP! UPandQ #Archery Bandello #CuteSnake Sunrise9 #CuteSnake 2 Sunrise9 #Have A Sticker VT Publishing #KILLALLZOMBIES 8Floor / Beatshapers #monstercakes Paleno Games #SelfieTennis Bandello #SkiJump Bandello #WarGames Eko $1 Ride Back To Basics Gaming √Letter Kadokawa Games .EXE Two Man Army Games .hack//G.U. Last Recode Bandai Namco Entertainment .projekt Kyrylo Kuzyk .T.E.S.T: Expected Behaviour Veslo Games //N.P.P.D. RUSH// KISS ltd //N.P.P.D. RUSH// - The Milk of Ultraviolet KISS //SNOWFLAKE TATTOO// KISS ltd 0 Day Zero Day Games 001 Game Creator SoftWeir Inc 007 Legends Activision 0RBITALIS Mastertronic 0°N 0°W Colorfiction 1 HIT KILL David Vecchione 1 Moment Of Time: Silentville Jetdogs Studios 1 Screen Platformer Return To Adventure Mountain 1,000 Heads Among the Trees KISS ltd 1-2-Swift Pitaya Network 1... 2... 3... KICK IT! (Drop That Beat Like an Ugly Baby) Dejobaan Games 1/4 Square Meter of Starry Sky Lingtan Studio 10 Minute Barbarian Studio Puffer 10 Minute Tower SEGA 10 Second Ninja Mastertronic 10 Second Ninja X Curve Digital 10 Seconds Zynk Software 10 Years Lionsgate 10 Years After Rock Paper Games 10,000,000 EightyEightGames 100 Chests William Brown 100 Seconds Cien Studio 100% Orange Juice Fruitbat Factory 1000 Amps Brandon Brizzi 1000 Stages: The King Of Platforms ltaoist 1001 Spikes Nicalis 100ft Robot Golf No Goblin 100nya .M.Y.W. 101 Secrets Devolver Digital Films 101 Ways to Die 4 Door Lemon Vision 1 1010 WalkBoy Studio 103 Dystopia Interactive 10k Dynamoid