Over Snow by DARRELL CLEM Streets and Roads

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

A Markov Game Model for Valuing Player Actions in Ice Hockey

A Markov Game Model for Valuing Player Actions in Ice Hockey Kurt Routley Oliver Schulte School of Computing Science School of Computing Science Simon Fraser University Simon Fraser University Vancouver, BC, Canada Vancouver, BC, Canada [email protected] [email protected] Abstract Markov Game model comprises over 1.3M states. Whereas most previous works on Markov Game models aim to com- A variety of advanced statistics are used to pute optimal strategies or policies [Littman, 1994] (i.e., evaluate player actions in the National Hockey minimax or equilibrium strategies), we learn a model of League, but they fail to account for the context how hockey is actually played, and do not aim to com- in which an action occurs or to look ahead to pute optimal strategies. In reinforcement learning (RL) the long-term effects of an action. We apply the terminology, we use dynamic programming to compute an action-value on policy Markov Game formalism to develop a novel ap- Q-function in the setting [Sutton and proach to valuing player actions in ice hockey Barto, 1998]. In RL notation, the expression Q(s; a) de- that incorporates context and lookahead. Dy- notes the expected reward of taking action a in state s. namic programming is used to learn Q-functions that quantify the impact of actions on goal scor- Motivation Motivation for learning a Q-function for ing resp. penalties. Learning is based on a NHL hockey dynamics includes the following. massive dataset that contains over 2.8M events Knowledge Discovery. The Markov Game model provides in the National Hockey League. -

2011-12 Rochester Americans Media Guide (.Pdf)

Rochester Americans Table of Contents Rochester Americans Personnel History Rochester Americans Staff Directory........................................................................................4 All-Time Records vs. Current AHL Clubs ..........................................................................203 Amerks 2011-12 Schedule ............................................................................................................5 All-Time Coaches .........................................................................................................................204 Amerks Executive Staff ....................................................................................................................6 Coaches Lifetime Records ......................................................................................................205 Amerks Hockey Department Staff ..........................................................................................10 Presidents & General Managers ...........................................................................................206 Amerks Front Office Personnel ................................................................................................ 17 All-Time Captains ..........................................................................................................................207 Affiliation Timeline ........................................................................................................................208 Players Amerks Firsts & Milestones -

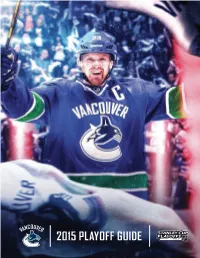

2015 Playoff Guide Table of Contents

2015 PLAYOFF GUIDE TABLE OF CONTENTS Company Directory ......................................................2 Brad Richardson. 60 Luca Sbisa ..............................................................62 PLAYOFF SCHEDULE ..................................................4 Daniel Sedin ............................................................ 64 MEDIA INFORMATION. 5 Henrik Sedin ............................................................ 66 Ryan Stanton ........................................................... 68 CANUCKS HOCKEY OPS EXECUTIVE Chris Tanev . 70 Trevor Linden, Jim Benning ................................................6 Linden Vey .............................................................72 Victor de Bonis, Laurence Gilman, Lorne Henning, Stan Smyl, Radim Vrbata ...........................................................74 John Weisbrod, TC Carling, Eric Crawford, Ron Delorme, Thomas Gradin . 7 Yannick Weber. 76 Jonathan Wall, Dan Cloutier, Ryan Johnson, Dr. Mike Wilkinson, Players in the System ....................................................78 Roger Takahashi, Eric Reneghan. 8 2014.15 Canucks Prospects Scoring ........................................ 84 COACHING STAFF Willie Desjardins .........................................................9 OPPONENTS Doug Lidster, Glen Gulutzan, Perry Pearn, Chicago Blackhawks ..................................................... 85 Roland Melanson, Ben Cooper, Glenn Carnegie. 10 St. Louis Blues .......................................................... 86 Anaheim Ducks -

2008-09 Upper Deck Collector's Choice Hockey

2008-09 Upper Deck Collector’s Choice Hockey Page 1 of 2 200 base cards 100 short prints Base Set (1‐200) 1 Ales Hemsky 55 Erik Cole 109 Martin St. Louis 2 Ales Kotalik 56 Erik Johnson 110 Marty Turco 3 Alex Kovalev 57 Evgeni Malkin 111 Mats Sundin 4 Alex Tanguay 58 Evgeni Nabokov 112 Matt Stajan 5 Alexander Edler 59 George Parros 113 Matthew Lombardi 6 Alexander Frolov 60 Gilbert Brule 114 Michael Peca 7 Alexander Ovechkin 61 Chuck Kobasew 115 Michael Ryder 8 Alexander Semin 62 Guillaume Latendresse 116 Michal Rozsival 9 Alexander Steen 63 Henrik Lundqvist 117 Miikka Kiprusoff 10 Andrei Kostitsyn 64 Henrik Sedin 118 Mike Cammalleri 11 Andrew Cogliano 65 Henrik Zetterberg 119 Mike Comrie 12 Anze Kopitar 66 Ilya Bryzgalov 120 Mike Knuble 13 Bill Guerin 67 Ilya Kovalchuk 121 Mike Modano 14 Brad Boyes 68 J.P. Dumont 122 Mike Ribeiro 15 Brad Richards 69 Jack Johnson 123 Mike Richards 16 Brendan Morrison 70 Jarome Iginla 124 Mike Smith 17 Aaron Voros 71 Jarret Stoll 125 Mikko Koivu 18 Brenden Morrow 72 Jason Arnott 126 Milan Hejduk 19 Brian Campbell 73 Jason LaBarbera 127 Milan Lucic 20 Brian Gionta 74 Jason Pominville 128 Milan Michalek 21 Brian Rolston 75 Jason Spezza 129 Miroslav Satan 22 Cam Ward 76 Jay Bouwmeester 130 Nathan Horton 23 Carey Price 77 Jean-Sebastien Giguere 131 Nicklas Backstrom 24 Chris Drury 78 Jeff Carter 132 Nicklas Lidstrom 25 Chris Higgins 79 Jere Lehtinen 133 Niklas Backstrom 26 Chris Kunitz 80 Joe Sakic 134 Nikolai Antropov 27 Chris Osgood -

Vancouver Canucks

NATIONAL POST NHL PREVIEW Aquilini Investment Group | Owner Trevor Linden | President of hockey operations General manager Head coach Jim Benning Willie Desjardins $700M 19,770 VP player personnel, ass’t GM Assistant coaches Forbes 2013 valuation Average 2013-14 attendance Lorne Henning Glen Gulutzan NHL rank: Fourth NHL rank: Fifth VP hockey operations, ass’t GM Doug Lidster Laurence Gilman Roland Melanson (goalies) Dir. of player development Ben Cooper (video) Current 2014-15 payroll Stan Smyl Roger Takahashi $66.96M NHL rank: 13th Chief amateur scout (strength and conditioning) Ron Delorme Glenn Carnegie (skills) 2014-15 VANCOUVER CANUCKS YEARBOOK FRANCHISE OUTLOOK RECORDS 2013-14 | 83 points (36-35-11), fifth in Pacific | 2.33 goals per game (28th); 2.53 goals allowed per game (14th) On April 14, with the Vancouver 0.93 5-on-5 goal ratio (21st) | 15.2% power play (26th); 83.2% penalty kill (9th) | +2.4 shot differential per game (8th) GAMES PLAYED, CAREER Canucks logo splashed across the 2013-14 post-season | Did not qualify 1. Trevor Linden | 1988-98, 2001-08 1,140 backdrop behind him, John Tortor- 2. Henrik Sedin | 2000-14 1,010 ella spoke like a man who suspected THE FRANCHISE INDEX: THE CANUCKS SINCE INCEPTION 3. Daniel Sedin | 2000-14 979 the end was near. The beleaguered NUMBER OF NUMBER OF WON STANLEY LOST STANLEY LOCKOUT coach seemed to speak with unusual POINTS WON GAMES PLAYED CUP FINAL CUP FINAL GOALS, CAREER candor, even by his standards, es- 1. Markus Naslund | 1995-2008 346 pecially when asked if the team’s 140 roster needed “freshening.” SEASON CANCELLED 140 2. -

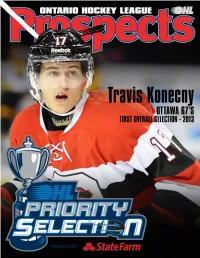

2014 Ohl Priority Selection Information Guide

2014 OHL PRIORITY SELECTION INFORMATION GUIDE 1 2 ONTARIO HOCKEY LEAGUE OHL PRIORITY SELECTION OHL Priority Selection Process In 2001, the Ontario Hockey League Selected Players in the OHL games with non-playoff teams select- Scouting Bureau with evaluations from conducted the annual Priority Selec- OHL Member Teams are permitted to ing ahead of playoff teams. their team scouting staffs to make their tion process by way of the Internet for register a maximum of four 16 year old player selections. the first time in league history. players selected in the OHL Priority Teams are permitted to trade draft Selection. Those 16 year old players choices, other than their first round The OHL Central Scouting Bureau The new process allowed for eligible that are allowed to be signed are the selection, during the trading period has been evaluating players since the players and their families, as well as first two 16 year old players selected from Monday March 31 to Thursday 1975-76 season. fans across the league to follow the and a maximum addition of two 16 April 3, 2014 at 3:00 p.m. process in real time online. year old wild carded players in any OHL Central Scouting Staff round of the OHL Priority Selection. OHL Central Scouting Chief Scout - Darrell Woodley The 2014 OHL Priority Selection pre- The Central Scouting Bureau of the GTA - Tim Cherry sented by State Farm will once again All other 16-year-old players selected Ontario Hockey League is an informa- Central Ontario - Kyle Branch be conducted online on Saturday April are eligible to be called up as an tion service and support organization Kingston and Area - John Finlay 5, 2014 beginning at 9:00 a.m. -

OHL Priority Selection Preview and Media Guide:OHL News.Qxd

OHL PRIORITY SELECTION OHL Priority Selection Process In 2001, the Ontario Hockey League Selected Players in the OHL with non-playoff teams selecting ahead Scouting Bureau with evaluations from conducted the annual Priority Selec- OHL Member Teams are permitted to of playoff teams. their team scouting staffs to make their tion process by way of the Internet for register a maximum of four 16 year old player selections. the first time in league history. players selected in the OHL Priority Teams are permitted to trade draft Selection. Those 16 year old players choices, other than their first round se- The OHL Central Scouting Bureau The new process allowed for eligible that are allowed to be signed are the lection, during the trading period from has been evaluating players since the players and their families, as well as fans first two 16 year old players selected Monday April 28 to Friday May 2, 1975-76 season. across the league to follow the process and a maximum addition of two 16 2008 at 3:00 p.m. in real time online. year old wild carded players in any OHL Central Scouting Staff round of the OHL Priority Selection. OHL Central Scouting Chief Scout - Robert Kitamura The 2008 OHL Priority Selection will The Central Scouting Bureau of the GTA - Tim Cherry once again be conducted online on All other 16-year-old players selected Ontario Hockey League is an informa- Central Ontario - Kyle Branch Saturday May 3, 2008 beginning at are eligible to be called up as an “affili- tion service and support organization Kingston and Area - John Finlay 9:00 a.m. -

Mtois F/A L/G 6’2” 199 01/08/99 Longueuil, Que./Qc Victoriaville (QMJHL) 2017 Draft/Rep

CANADA'S NATIONAL MEN'S UNDER-18 TEAM ÉQUIPE NATIONALE MASCULINE DES MOINS DE 18 ANS DU CANADA 2017 IIHF U18 WORLD CHAMPIONSHIP / CHAMPIONNAT MONDIAL DES M18 2017 DE L'IIHF APRIL 13-23, 2017 / 13-23 AVRIL 2017 POPRAD & SPISSKA NOVA VES, SLOVAKIA/SLOVAQUIE Team Canada Media Guide / Guide de presse d’Équipe Canada ROSTER FORMATION # Name P S/C Ht. Wt. Born Hometown Club Team Pro Status No Nom P T/A Gr. Pds DDN Ville d’origine Équipe de club Statut prof. 1 Ian Scott G L/G 6’3” 174 01/11/99 Calgary, Alta./Alb. Prince Albert (WHL) 2017 Draft/Rep. 29 Alexis Gravel G R/D 6’2” 210 03/21/00 Asbestos, Que./Qc Halifax (QMJHL) 2018 Draft/Rep. 30 Jacob McGrath G L/G 6’1” 158 01/07/99 Mississauga, Ont. Sudbury (OHL) 2017 Draft/Rep. 2 Josh Brook D R/D 6’1” 182 06/17/99 Roblin, Man. Moose Jaw (WHL) 2017 Draft/Rep. 3 Ian Mitchell D R/D 5’11” 171 01/18/99 Calahoo, Alta./Alb. Spruce Grove (AJHL) 2017 Draft/Rep. 4 Ty Smith D L/G 5’10” 166 03/24/00 Lloydminster, Alta./Alb. Spokane (WHL) 2018 Draft/Rep. 5 Jocktan Chainey D L/G 6’1” 194 09/08/99 Asbestos, Que./Qc Halifax (QMJHL) 2017 Draft/Rep. 8 Jett Woo D R/D 6’0” 194 07/27/00 Winnipeg, Man. Moose Jaw (WHL) 2018 Draft/Rep. 23 David Noël D L/G 6’1” 170 04/10/99 Quebec City, Que./Qc Val-d’Or (QMJHL) 2017 Draft/Rep. -

OHL Priority Selection Process

OHL PRIORITY SELECTION OHL Priority Selection Process In 2001, the Ontario Hockey League Selected Players in the OHL with non-playoff teams selecting ahead Scouting Bureau with evaluations from conducted the annual Priority Selec- OHL Member Teams are permitted to of playoff teams. their team scouting staffs to make their tion process by way of the Internet for register a maximum of four 16 year old player selections. the first time in league history. players selected in the OHL Priority Teams are permitted to trade draft Selection. Those 16 year old players choices, other than their first round se- The OHL Central Scouting Bureau The new process allowed for eligible that are allowed to be signed are the lection, during the trading period from has been evaluating players since the players and their families, as well as fans first two 16 year old players selected Monday April 26 to Friday April 30, 1975-76 season. across the league to follow the process and a maximum addition of two 16 2010 at 3:00 p.m. in real time online. year old wild carded players in any OHL Central Scouting Staff round of the OHL Priority Selection. OHL Central Scouting Chief Scout - Robert Kitamura The 2010 OHL Priority Selection will The Central Scouting Bureau of the GTA - Tim Cherry once again be conducted online on All other 16-year-old players selected Ontario Hockey League is an informa- Central Ontario - Kyle Branch Saturday May 1, 2010 beginning at are eligible to be called up as an “affili- tion service and support organization Kingston and Area - John Finlay 9:00 a.m. -

Philadelpha-Flyers-2014-15-Media

ExProvidingpe a Totrali e Entertainmentnce Comcast-Spectacor continues to establish itself as a leader in the sports and entertainment industry, providing high quality sports and entertainment to millions of fans across North America. In addition to owning the Philadelphia Flyers, Comcast- Spectacor operates arenas, convention centers, stadiums, and theaters through its subsidiary Global Spectrum; provides food, beverage, and catering services to these and other venues through its subsidiary Ovations Food Services; sells millions of tickets to events and attractions at a variety of public assembly venues through Paciolan, a leader in providing venue establishment, ticketing, fundraising and ticketing technology, ® ® and enhances revenue streams for public assembly facilities and other clients through its subsidiary Front Row Marketing Services. Comcast-Spectacor owns a series of community ice hockey rinks, Flyers Skate Zone. Comcast-Spectacor is committed to improving the quality of life for people in the communities it serves. The Comcast-Spectacor Charities, and Flyers Charities, have contributed more than $26 million to countless recipients and touched hundreds of thousands of lives. COMCAST-SPECTACOR.COM ® ® COMCAST-SPECTACOR STAFF DIRECTORY OFFICE OF THE CHAIR MARKETING/PUBLIC RELATIONS Chairman ...............................................................................Ed Snider Director of Marketing ...............................................Melissa Schaaf Vice Chairman ..................................................................Fred -

2014-15 O-Pee-Chee Hockey Set Checklist 500 Karet

2014-15 O-Pee-Chee Hockey Set Checklist 500 karet Není SP (#1-500) SP (#1-600) Paralelní karty Red Border Wrapper Redemption (5 ks v redemption balíčku), Retro (1:1 h, 1:2 r, 1:2 b, 2:1 fat balíček), Retro Blank Backs, Rainbow Foil (1:4 h, 1:8 r, 1:7 b, 1:8 fat), Rainbow Foil Black #/100 (pouze ve verzi Hobby) 1 Martin Brodeur - New Jersey Devils 2 Teemu Selanne - Anaheim Ducks 3 Jean-Sebastien Giguere - Colorado Avalanche 4 Daniel Alfredsson - Detroit Red Wings 5 Jaromir Jagr - New Jersey Devils 6 Jarret Stoll - Los Angeles Kings 7 Andrew Ference - Edmonton Oilers 8 Chris Kreider - New York Rangers 9 P.K. Subban - Montreal Canadiens 10 Brent Seabrook - Chicago Blackhawks 11 Milan Lucic - Boston Bruins 12 Ryan Garbutt - Dallas Stars 13 Bobby Ryan - Ottawa Senators 14 Dany Heatley - Minnesota Wild 15 Mark Letestu - Columbus Blue Jackets 16 Oliver Ekman-Larsson - Arizona Coyotes 17 Tyler Ennis - Buffalo Sabres 18 Sean Monahan - Calgary Flames 19 Cam Ward - Carolina Hurricanes 20 Sean Bergenheim - Florida Panthers 21 Kyle Palmieri - Anaheim Ducks 22 Craig Smith - Nashville Predators 23 Tom Sestito - Vancouver Canucks 24 Jarome Iginla - Boston Bruins 25 Olli Jokinen - Winnipeg Jets 26 Teddy Purcell - Tampa Bay Lightning 27 Mason Raymond - Toronto Maple Leafs 28 Mikkel Boedker - Arizona Coyotes 29 Jamie McGinn - Colorado Avalanche 30 Ryan McDonagh - New York Rangers 31 Rich Peverley - Dallas Stars 32 Marian Hossa - Chicago Blackhawks 33 Calvin de Haan - New York Islanders 34 Viktor Fasth - Edmonton Oilers 35 Max Pacioretty - Montreal Canadiens 36 Marcel Goc - Pittsburgh Penguins 37 Jonas Brodin - Minnesota Wild 38 Pavel Datsyuk - Detroit Red Wings 39 Luke Schenn - Philadelphia Flyers 40 Tyler Toffoli - Los Angeles Kings 41 Carl Hagelin - New York Rangers 42 Joe Thornton - San Jose Sharks 43 Andy Greene - New Jersey Devils 44 Brock Nelson - New York Islanders 45 Alexander Ovechkin - Washington Capitals 46 Elias Lindholm - Carolina Hurricanes 47 Sven Baertschi - Calgary 
Flames 48 Jimmy Hayes - Florida Panthers 49 Alex Pietrangelo - St. -

Acadie-Bathurst Titan 68 8 54 5 1 22 141 336

CONTENTS | TABLE DES MATIÈRES PR Contact Information | Coordonnées des relations médias .............................................4 2019 Memorial Cup Schedule | Horaire du tournoi ............................................................5 Competing Teams | Équipes .................................................................................................7 WHL ................................................................................................................................... 11 OHL .................................................................................................................................... 17 QMJHL | LHJMQ ................................................................................................................ 23 Halifax Mooseheads | Mooseheads d'Halifax ................................................................... 29 Rouyn-Noranda Huskies | Huskies de Rouyn-Noranda ..................................................... 59 Guelph Storm | Storm de Guelph ...................................................................................... 89 Prince Albert Raiders | Raiders de Prince Albert .............................................................. 121 History of the Memorial Cup | L’Histoire de la Coupe Memorial .................................... 150 Memorial Cup Championship Rosters | Listes de championnat...................................... 152 All-Star Teams | Équipes D’étoiles ................................................................................... 160