TUGBOAT Volume 41, Number 3 / 2020

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

LaTeX on Windows

LaTeX on Windows. Installing MiKTeX and using TeXworks, as described on the main LaTeX page, is enough to get you started using LaTeX on Windows. This page provides further information for experienced users. Tips for using TeXworks. Forward and Inverse Search: If you are working on a long document, forward and inverse searching make editing much easier. • Forward search means jumping from a point in your LaTeX source file to the corresponding line in the pdf output file. • Inverse search means jumping from a line in the pdf file back to the corresponding point in the source file. In TeXworks, forward and inverse search are easy. To do a forward search, right-click on text in the source window and choose the option "Jump to PDF". Similarly, to do an inverse search, right-click in the output window and choose "Jump to Source". Other Front End Programs: Among front ends, TeXworks has several advantages; principally, it is bundled with MiKTeX and it works without any configuration. However, you may want to try other front end programs. The most common ones are listed below. • Texmaker. Installation notes: 1. After you have installed Texmaker, go to the QuickBuild section of the Configuration menu and choose pdflatex+pdfview. 2. Before you use spell-check in Texmaker, you may need to install a dictionary; see section 1.3 of the Texmaker user manual. • Winshell. Installation notes: 1. Install Winshell after installing MiKTeX. 2. When running the Winshell Setup program, choose the pdflatex-optimized toolbar. 3. Winshell uses an external pdf viewer to display output files.

Cumberland Tech Ref.Book

Forms Printer 258x/259x Technical Reference DRAFT document - Monday, August 11, 2008 1:59 pm Please note that this is a DRAFT document. More information will be added and a final version will be released at a later date. August 2008 www.lexmark.com Lexmark and Lexmark with diamond design are trademarks of Lexmark International, Inc., registered in the United States and/or other countries. © 2008 Lexmark International, Inc. All rights reserved. 740 West New Circle Road Lexington, Kentucky 40550 Draft document Edition: August 2008 The following paragraph does not apply to any country where such provisions are inconsistent with local law: LEXMARK INTERNATIONAL, INC., PROVIDES THIS PUBLICATION “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions; therefore, this statement may not apply to you. This publication could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in later editions. Improvements or changes in the products or the programs described may be made at any time. Comments about this publication may be addressed to Lexmark International, Inc., Department F95/032-2, 740 West New Circle Road, Lexington, Kentucky 40550, U.S.A. In the United Kingdom and Eire, send to Lexmark International Ltd., Marketing and Services Department, Westhorpe House, Westhorpe, Marlow Bucks SL7 3RQ. Lexmark may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

LaTeX for Beginners

LaTeX for Beginners Workbook, Edition 5, March 2014. Document Reference: 3722-2014. Preface: This is an absolute beginner's guide to writing documents in LaTeX using TeXworks. It assumes no prior knowledge of LaTeX, or any other computing language. This workbook is designed to be used at the 'LaTeX for Beginners' student iSkills seminar, and also for self-paced study. Its aim is to introduce an absolute beginner to LaTeX and teach the basic commands, so that they can create a simple document and find out whether LaTeX will be useful to them. If you require this document in an alternative format, such as large print, please email [email protected]. Copyright © IS 2014. Permission is granted to any individual or institution to use, copy or redistribute this document whole or in part, so long as it is not sold for profit and provided that the above copyright notice and this permission notice appear in all copies. Where any part of this document is included in another document, due acknowledgement is required. Contents. 1 Introduction: 1.1 What is LaTeX?; 1.2 Before You Start. 2 Document Structure: 2.1 Essentials; 2.2 Troubleshooting; 2.3 Creating a Title; 2.4 Sections; 2.5 Labelling; 2.6 Table of Contents. 3 Typesetting Text: 3.1 Font Effects; 3.2 Coloured Text; 3.3 Font Sizes; 3.4 Lists; 3.5 Comments & Spacing; 3.6 Special Characters. 4 Tables: 4.1 Practical

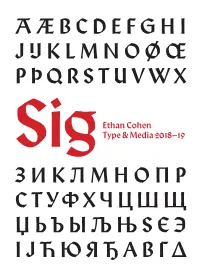

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau. Ethan Cohen, Type & Media 2018–19. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague). INTRODUCTION. “I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” RUDOLF KOCH. Project Overview: Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

SC22/WG20 N896 L2/01-476 Universal Multiple-Octet Coded Character Set International Organization for Standardization Organisation Internationale De Normalisation

SC22/WG20 N896 L2/01-476 Universal multiple-octet coded character set International organization for standardization Organisation internationale de normalisation Title: Ordering rules for Khmer Source: Kent Karlsson Date: 2001-12-19 Status: Expert contribution Document type: Working group document Action: For consideration by the UTC and JTC 1/SC 22/WG 20 1 Introduction The Khmer script in Unicode/10646 uses conjoining characters, just like the Indic scripts and Hangul. An alternative that is suitable (and much more elegant) for Khmer, but not for Indic scripts, would have been to have combining-below (and sometimes a bit to the side) consonants and combining-below independent vowels, similar to the combining-above Latin letters recently encoded, as well as how Tibetan is handled. However that is not the chosen solution, which instead uses a combining character (COENG) that makes characters conjoin (glue) like it is done for Indic (Brahmic) scripts and like Hangul Jamo, though the latter does not have a separate gluer character. In the Khmer script, using the COENG based approach, the words are formed from orthographic syllables, where an orthographic syllable has the following structure [add ligation control?]: Khmer-syllable ::= (K H)* K M* where K is a Khmer consonant (most with an inherent vowel that is pronounced only if there is no consonant, independent vowel, or dependent vowel following it in the orthographic syllable) or a Khmer independent vowel, H is the invisible Khmer conjoint former COENG, M is a combining character (including COENG, though that would be a misspelling), in particular a combining Khmer vowel (noted A below) or modifier sign.

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive University of Zurich Main Library Strickhofstrasse 39 CH-8057 Zurich www.zora.uzh.ch Year: 2017 The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles Moran, Steven ; Cysouw, Michael DOI: https://doi.org/10.5281/zenodo.290662 Posted at the Zurich Open Repository and Archive, University of Zurich ZORA URL: https://doi.org/10.5167/uzh-135400 Monograph The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. DOI: https://doi.org/10.5281/zenodo.290662 The Unicode Cookbook for Linguists Managing writing systems using orthography profiles Steven Moran & Michael Cysouw Change dedication in localmetadata.tex Preface This text is meant as a practical guide for linguists, and programmers, who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies.

Adobe Font Folio Opentype Edition 2003

Adobe Font Folio OpenType Edition 2003 The Adobe Folio 9.0 containing PostScript Type 1 fonts was replaced in 2003 by the Adobe Folio OpenType Edition ("Adobe Folio 10") containing 486 OpenType font families totaling 2209 font styles (regular, bold, italic, etc.). Adobe no longer sells PostScript Type 1 fonts and now sells OpenType fonts. OpenType fonts permit of encrypting and embedding hidden private buyer data (postal address, email address, bank account number, credit card number etc.). Embedding of your private data is now also customary with old PS Type 1 fonts, but here you can detect more easily your hidden private data. For an example of embedded private data see http://www.sanskritweb.net/forgers/lino17.pdf showing both encrypted and unencrypted private data. If you are connected to the internet, it is easy for online shops to spy out your private data by reading the fonts installed on your computer. In this way, Adobe and other online shops also obtain email addresses for spamming purposes. Therefore it is recommended to avoid buying fonts from Adobe, Linotype, Monotype etc., if you use a computer that is connected to the internet. See also Prof. Luc Devroye's notes on Adobe Store Security Breach spam emails at this site http://cgm.cs.mcgill.ca/~luc/legal.html Note 1: 80% of the fonts contained in the "Adobe" FontFolio CD are non-Adobe fonts by ITC, Linotype, and others. Note 2: Most of these "Adobe" fonts do not permit of "editing embedding" so that these fonts are practically useless. Despite these serious disadvantages, the Adobe Folio OpenType Edition retails presently (March 2005) at US$8,999.00.

Steganography in Arabic Text Using Full Diacritics Text

Steganography in Arabic Text Using Full Diacritics Text. Ammar Odeh and Khaled Elleithy, Computer Science & Engineering, University of Bridgeport, Bridgeport, CT 06604, USA. Abstract: The need for secure communications has significantly increased with the explosive growth of the internet and mobile communications. The usage of text documents has doubled several times over the past years especially with mobile devices. In this paper we propose a new Steganography algorithm for Arabic text. The algorithm employs some Arabic language characteristics, which represent as small vowel letters. Arabic Diacritics is an optional property for any text and usually is not popularly used. Many algorithms tried to employ this property to hide data in Arabic text. In our method, we use this property to hide data and reduce the probability of suspicions hiding. 1. Substitution: Exchange some small part of the carrier file by hidden message. Where middle attacker can't observe the changes in the carrier file. On the other hand, choosing replacement process it is very important to avoid any suspicion. This means to select insignificant part from the file then replace it. For instance, if a carrier file is an image (RGB) then the least significant bit (LSB) will be used as exchange bit [4]. 2. Injection: By adding hidden data into the carrier file, where the file size will increase and this will increase the probability being discovered. The main goal in this approach is how to present techniques to add hidden data and to void attacker suspicion [4].

Cygwin User's Guide

Cygwin User’s Guide. Copyright © Cygwin authors. Permission is granted to make and distribute verbatim copies of this documentation provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this documentation under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this documentation into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation. Contents. 1 Cygwin Overview: 1.1 What is it?; 1.2 Quick Start Guide for those more experienced with Windows; 1.3 Quick Start Guide for those more experienced with UNIX; 1.4 Are the Cygwin tools free software?; 1.5 A brief history of the Cygwin project; 1.6 Highlights of Cygwin Functionality; 1.6.1 Introduction; 1.6.2 Permissions and Security; 1.6.3 File Access; 1.6.4 Text Mode vs. Binary Mode; 1.6.5 ANSI C Library; 1.6.6 Process Creation; 1.6.6.1 Problems with process creation; 1.6.7 Signals; 1.6.8 Sockets; 1.6.9 Select; 1.7 What’s new and what changed in Cygwin; 1.7.1 What’s new and what changed in 3.2

CLASS: Working with Students with Dysgraphia

A Quick Guide to Working with Students with Dysgraphia. Characteristics of the Condition: This general term is used to describe any of several distinct difficulties in producing written language. These problems may be developmental in origin or may result from a brain injury or neurological disease. They often occur with other difficulties (e.g., dyslexia), but may be encountered in isolation. • Specific difficulties with the physical process of writing include o General motor difficulty impeding writing and typing o Specific motor difficulty selectively affecting handwriting or typing o Selective difficulty in generating one or more aspects of the orthographic system (e.g., print vs. cursive forms; upper case vs. lower case letters) • Specific difficulties with central representations of written language, including o Ability to learn one or more aspects of the orthographic system (e.g., print or cursive; upper case or lower case letters) o Ability to produce appropriate letter case or punctuation for the written context o Ability to acquire or retain knowledge of specific word spellings. Impact on Classroom Performance/Writing: • Student may be unable to take effective notes in class or from readings. • Writing by hand may be slow and effortful, resulting in diminished content in papers and essays in comparison with the student’s knowledge. • Legibility of written work may be poor, becoming increasingly worse from the beginning to the end of the document. • Capitalization and punctuation errors inconsistent with student’s linguistic skills may be seen. • Spelling may be inaccurate and/or inconsistent. • Vocabulary used in writing may be significantly restricted in comparison to the student’s reading or spoken vocabulary (may be due to concerns about spelling accuracy).

COMPARATIVE VIDEOGAME CRITICISM by Trung Nguyen

COMPARATIVE VIDEOGAME CRITICISM by Trung Nguyen Citation Bogost, Ian. Unit Operations: An Approach to Videogame Criticism. Cambridge, MA: MIT, 2006. Keywords: Mythical and scientific modes of thought (bricoleur vs. engineer), bricolage, cyber texts, ergodic literature, Unit operations. Games: Zork I. Argument & Perspective Ian Bogost’s “unit operations” that he mentions in the title is a method of analyzing and explaining not only video games, but work of any medium where works should be seen “as a configurative system, an arrangement of discrete, interlocking units of expressive meaning.” (Bogost x) Similarly, in this chapter, he more specifically argues that as opposed to seeing video games as hard pieces of technology to be poked and prodded within criticism, they should be seen in a more abstract manner. He states that “instead of focusing on how games work, I suggest that we turn to what they do— how they inform, change, or otherwise participate in human activity…” (Bogost 53) This comparative video game criticism is not about invalidating more concrete observances of video games, such as how they work, but weaving them into a more intuitive discussion that explores the true nature of video games. II. Ideas Unit Operations: Like I mentioned in the first section, this is a different way of approaching mediums such as poetry, literature, or videogames where works are a system of many parts rather than an overarching, singular, structured piece. Engineer vs. Bricoleur metaphor: Bogost uses this metaphor to compare the fundamentalist view of video game critique to his proposed view, saying that the “bricoleur is a skillful handy-man, a jack-of-all-trades who uses convenient implements and ad hoc strategies to achieve his ends.” Whereas the engineer is a “scientific thinker who strives to construct holistic, totalizing systems from the top down…” (Bogost 49) One being more abstract and the other set and defined.

The Video Game Industry an Industry Analysis, from a VC Perspective

The Video Game Industry: An Industry Analysis, from a VC Perspective. Nik Shah T’05, MBA Fellows Project, March 11, 2005, Hanover, NH. Authors: Nik Shah (Tuck Class of 2005) and Charles Haigh (Tuck Class of 2005). • The video game industry is poised for significant growth, but many sectors have already matured. Video games are a large and growing market. However, within it, there are only selected portions that contain venture capital investment opportunities. Our analysis highlights these sectors, which are interesting for reasons including significant technological change, high growth rates, new product development and lack of a clear market leader. • The opportunity lies in non-core products and services. We believe that the core hardware and game software markets are fairly mature and require intensive capital investment and strong technology knowledge for success. The best markets for investment are those that provide valuable new products and services to game developers, publishers and gamers themselves. These are the areas that will build out the industry as it undergoes significant growth. A Quick Snapshot of Our Identified Areas of Interest • Online Games and Platforms. Few online games have historically been venture funded and most are subject to the same “hit or miss” market adoption as console games, but as this segment grows, an opportunity for leading technology publishers and platforms will emerge. New developers will use these technologies to enable the faster and cheaper production of online games. The developers of new online games also present an opportunity as new methods of gameplay and game genres are explored.