Identifying Transcriptomic Correlates of Histology Using Deep Learning

Recommended publications

Mutations in CDK5RAP2 Cause Seckel Syndrome

ORIGINAL ARTICLE Mutations in CDK5RAP2 cause Seckel syndrome Gökhan Yigit1,2,3,a, Karen E. Brown4,a, Hülya Kayserili5, Esther Pohl1,2,3, Almuth Caliebe6, Diana Zahnleiter7, Elisabeth Rosser8, Nina Bögershausen1,2,3, Zehra Oya Uyguner5, Umut Altunoglu5, Gudrun Nürnberg2,3,9, Peter Nürnberg2,3,9, Anita Rauch10, Yun Li1,2,3, Christian Thomas Thiel7 & Bernd Wollnik1,2,3 1Institute of Human Genetics, University of Cologne, Cologne, Germany 2Center for Molecular Medicine Cologne (CMMC), University of Cologne, Cologne, Germany 3Cologne Excellence Cluster on Cellular Stress Responses in Aging-Associated Diseases (CECAD), University of Cologne, Cologne, Germany 4Chromosome Biology Group, MRC Clinical Sciences Centre, Imperial College School of Medicine, Hammersmith Hospital, London, W12 0NN, UK 5Department of Medical Genetics, Istanbul Medical Faculty, Istanbul University, Istanbul, Turkey 6Institute of Human Genetics, Christian-Albrechts-University of Kiel, Kiel, Germany 7Institute of Human Genetics, Friedrich-Alexander University Erlangen-Nuremberg, Erlangen, Germany 8Department of Clinical Genetics, Great Ormond Street Hospital for Children, London, WC1N 3EH, UK 9Cologne Center for Genomics, University of Cologne, Cologne, Germany 10Institute of Medical Genetics, University of Zurich, Schwerzenbach-Zurich, Switzerland Keywords: CDK5RAP2, CEP215, microcephaly, primordial dwarfism, Seckel syndrome. Correspondence: Bernd Wollnik, Center for Molecular Medicine Cologne (CMMC) and Institute of Human Genetics, University of Cologne, Kerpener Str. Abstract: Seckel syndrome is a heterogeneous, autosomal recessive disorder marked by prenatal proportionate short stature, severe microcephaly, intellectual disability, and characteristic facial features. Here, we describe the novel homozygous splice-site mutations c.383+1G>C and c.4005-9A>G in CDK5RAP2 in two consanguineous families with Seckel syndrome. CDK5RAP2 (CEP215) encodes a centrosomal protein which is known to be essential for centrosomal cohesion and proper spindle

Ovarian Gene Expression in the Absence of FIGLA, an Oocyte

BMC Developmental Biology BioMed Central Research article Open Access Ovarian gene expression in the absence of FIGLA, an oocyte-specific transcription factor Saurabh Joshi*1, Holly Davies1, Lauren Porter Sims2, Shawn E Levy2 and Jurrien Dean1 Address: 1Laboratory of Cellular and Developmental Biology, NIDDK, National Institutes of Health, Bethesda, MD 20892, USA and 2Department of Biomedical Informatics, Vanderbilt University Medical Center, Nashville, TN 37232, USA Email: Saurabh Joshi* - [email protected]; Holly Davies - [email protected]; Lauren Porter Sims - [email protected]; Shawn E Levy - [email protected]; Jurrien Dean - [email protected] * Corresponding author Published: 13 June 2007 Received: 11 December 2006 Accepted: 13 June 2007 BMC Developmental Biology 2007, 7:67 doi:10.1186/1471-213X-7-67 This article is available from: http://www.biomedcentral.com/1471-213X/7/67 © 2007 Joshi et al; licensee BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract Background: Ovarian folliculogenesis in mammals is a complex process involving interactions between germ and somatic cells. Carefully orchestrated expression of transcription factors, cell adhesion molecules and growth factors are required for success. We have identified a germ-cell specific, basic helix-loop-helix transcription factor, FIGLA (Factor In the GermLine, Alpha) and demonstrated its involvement in two independent developmental processes: formation of the primordial follicle and coordinate expression of zona pellucida genes. Results: Taking advantage of Figla null mouse lines, we have used a combined approach of microarray and Serial Analysis of Gene Expression (SAGE) to identify potential downstream target genes. -

Propranolol-Mediated Attenuation of MMP-9 Excretion in Infants with Hemangiomas

Supplementary Online Content Thaivalappil S, Bauman N, Saieg A, Movius E, Brown KJ, Preciado D. Propranolol-mediated attenuation of MMP-9 excretion in infants with hemangiomas. JAMA Otolaryngol Head Neck Surg. doi:10.1001/jamaoto.2013.4773 eTable. List of All of the Proteins Identified by Proteomics This supplementary material has been provided by the authors to give readers additional information about their work. © 2013 American Medical Association. All rights reserved. Downloaded From: https://jamanetwork.com/ on 10/01/2021

eTable. List of All of the Proteins Identified by Proteomics

| Protein Name | Prop 12 mo/4 mo | Pred 12 mo/4 mo | Δ Prop to Pred |
| --- | --- | --- | --- |
| Myeloperoxidase OS=Homo sapiens GN=MPO | 26.00 | 143.00 | -117.00 |
| Lactotransferrin OS=Homo sapiens GN=LTF | 114.00 | 205.50 | -91.50 |
| Matrix metalloproteinase‐9 OS=Homo sapiens GN=MMP9 | 5.00 | 36.00 | -31.00 |
| Neutrophil elastase OS=Homo sapiens GN=ELANE | 24.00 | 48.00 | -24.00 |
| Bleomycin hydrolase OS=Homo sapiens GN=BLMH | 3.00 | 25.00 | -22.00 |
| CAP7_HUMAN Azurocidin OS=Homo sapiens GN=AZU1 PE=1 SV=3 | 4.00 | 26.00 | -22.00 |
| S10A8_HUMAN Protein S100‐A8 OS=Homo sapiens GN=S100A8 PE=1 SV=1 | 14.67 | 30.50 | -15.83 |
| IL1F9_HUMAN Interleukin‐1 family member 9 OS=Homo sapiens GN=IL1F9 PE=1 SV=1 | 1.00 | 15.00 | -14.00 |
| MUC5B_HUMAN Mucin‐5B OS=Homo sapiens GN=MUC5B PE=1 SV=3 | 2.00 | 14.00 | -12.00 |
| MUC4_HUMAN Mucin‐4 OS=Homo sapiens GN=MUC4 PE=1 SV=3 | 1.00 | 12.00 | -11.00 |
| HRG_HUMAN Histidine‐rich glycoprotein OS=Homo sapiens GN=HRG PE=1 SV=1 | 1.00 | 12.00 | -11.00 |
| TKT_HUMAN Transketolase OS=Homo sapiens GN=TKT PE=1 SV=3 | 17.00 | 28.00 | -11.00 |
| CATG_HUMAN Cathepsin G OS=Homo | | | |

Autism Multiplex Family with 16P11.2P12.2 Microduplication Syndrome in Monozygotic Twins and Distal 16P11.2 Deletion in Their Brother

European Journal of Human Genetics (2012) 20, 540–546 © 2012 Macmillan Publishers Limited All rights reserved 1018-4813/12 www.nature.com/ejhg ARTICLE Autism multiplex family with 16p11.2p12.2 microduplication syndrome in monozygotic twins and distal 16p11.2 deletion in their brother Anne-Claude Tabet1,2,3,4, Marion Pilorge2,3,4, Richard Delorme5,6, Frédérique Amsellem5,6, Jean-Marc Pinard7, Marion Leboyer6,8,9, Alain Verloes10, Brigitte Benzacken1,11,12 and Catalina Betancur*,2,3,4 The pericentromeric region of chromosome 16p is rich in segmental duplications that predispose to rearrangements through non-allelic homologous recombination. Several recurrent copy number variations have been described recently in chromosome 16p. 16p11.2 rearrangements (29.5–30.1 Mb) are associated with autism, intellectual disability (ID) and other neurodevelopmental disorders. Another recognizable but less common microdeletion syndrome in 16p11.2p12.2 (21.4 to 28.5–30.1 Mb) has been described in six individuals with ID, whereas apparently reciprocal duplications, studied by standard cytogenetic and fluorescence in situ hybridization techniques, have been reported in three patients with autism spectrum disorders. Here, we report a multiplex family with three boys affected with autism, including two monozygotic twins carrying a de novo 16p11.2p12.2 duplication of 8.95 Mb (21.28–30.23 Mb) characterized by single-nucleotide polymorphism array, encompassing both the 16p11.2 and 16p11.2p12.2 regions. The twins exhibited autism, severe ID, and dysmorphic features, including a triangular face, deep-set eyes, large and prominent nasal bridge, and tall, slender build. The eldest brother presented with autism, mild ID, early-onset obesity and normal craniofacial features, and carried a smaller, overlapping 16p11.2 microdeletion of 847 kb (28.40–29.25 Mb), inherited from his apparently healthy father.

CST9L (NM 080610) Human Tagged ORF Clone – RC206646L4

OriGene Technologies, Inc. 9620 Medical Center Drive, Ste 200 Rockville, MD 20850, US Phone: +1-888-267-4436 [email protected] EU: [email protected] CN: [email protected] Product datasheet for RC206646L4 CST9L (NM_080610) Human Tagged ORF Clone Product data: Product Type: Expression Plasmids Product Name: CST9L (NM_080610) Human Tagged ORF Clone Tag: mGFP Symbol: CST9L Synonyms: bA218C14.1; CTES7B Vector: pLenti-C-mGFP-P2A-Puro (PS100093) E. coli Selection: Chloramphenicol (34 ug/mL) Cell Selection: Puromycin ORF Nucleotide Sequence: The ORF insert of this clone is exactly the same as (RC206646). Restriction Sites: SgfI-MluI Cloning Scheme: ACCN: NM_080610 ORF Size: 441 bp This product is to be used for laboratory only. Not for diagnostic or therapeutic use. ©2021 OriGene Technologies, Inc., 9620 Medical Center Drive, Ste 200, Rockville, MD 20850, US OTI Disclaimer: The molecular sequence of this clone aligns with the gene accession number as a point of reference only. However, individual transcript sequences of the same gene can differ through naturally occurring variations (e.g. polymorphisms), each with its own valid existence. This clone is substantially in agreement with the reference, but a complete review of all prevailing variants is recommended prior to use. OTI Annotation: This clone was engineered to express the complete ORF with an expression tag. Expression varies depending on the nature of the gene. RefSeq: NM_080610.1 RefSeq Size: 982 bp RefSeq ORF: 444 bp Locus ID: 128821 UniProt ID: Q9H4G1, A0A140VJH1 Protein Families: Secreted Protein, Transmembrane MW: 17.3 kDa Gene Summary: The cystatin superfamily encompasses proteins that contain multiple cystatin-like sequences.

A Computational Approach for Defining a Signature of β-Cell Golgi Stress in Diabetes Mellitus

Diabetes A Computational Approach for Defining a Signature of β-Cell Golgi Stress in Diabetes Mellitus Robert N. Bone1,6,7, Olufunmilola Oyebamiji2, Sayali Talware2, Sharmila Selvaraj2, Preethi Krishnan3,6, Farooq Syed1,6,7, Huanmei Wu2, Carmella Evans-Molina1,3,4,5,6,7,8* Departments of 1Pediatrics, 3Medicine, 4Anatomy, Cell Biology & Physiology, 5Biochemistry & Molecular Biology, the 6Center for Diabetes & Metabolic Diseases, and the 7Herman B. Wells Center for Pediatric Research, Indiana University School of Medicine, Indianapolis, IN 46202; 2Department of BioHealth Informatics, Indiana University-Purdue University Indianapolis, Indianapolis, IN, 46202; 8Roudebush VA Medical Center, Indianapolis, IN 46202. *Corresponding Author(s): Carmella Evans-Molina, MD, PhD ([email protected]) Indiana University School of Medicine, 635 Barnhill Drive, MS 2031A, Indianapolis, IN 46202, Telephone: (317) 274-4145, Fax (317) 274-4107 Running Title: Golgi Stress Response in Diabetes Word Count: 4358 Number of Figures: 6 Keywords: Golgi apparatus stress, Islets, β cell, Type 1 diabetes, Type 2 diabetes Diabetes Publish Ahead of Print, published online August 20, 2020 ABSTRACT The Golgi apparatus (GA) is an important site of insulin processing and granule maturation, but whether GA organelle dysfunction and GA stress are present in the diabetic β-cell has not been tested. We utilized an informatics-based approach to develop a transcriptional signature of β-cell GA stress using existing RNA sequencing and microarray datasets generated using human islets from donors with diabetes and islets where type 1 (T1D) and type 2 diabetes (T2D) had been modeled ex vivo. To narrow our results to GA-specific genes, we applied a filter set of 1,030 genes accepted as GA associated.

Remote Ischemic Preconditioning (RIPC) Modifies Plasma Proteome in Humans

Remote Ischemic Preconditioning (RIPC) Modifies Plasma Proteome in Humans Michele Hepponstall1,2,3,4, Vera Ignjatovic1,3, Steve Binos4, Paul Monagle1,3, Bryn Jones1,2, Michael H. H. Cheung1,2,3, Yves d’Udekem1,2, Igor E. Konstantinov1,2,3* 1 Haematology Research, Murdoch Childrens Research Institute; Melbourne, Victoria, Australia, 2 Cardiac Surgery Unit and Cardiology, Royal Children’s Hospital; Melbourne, Victoria, Australia, 3 Department of Paediatrics, The University of Melbourne; Melbourne, Victoria, Australia, 4 Bioscience Research Division, Department of Primary Industries, Melbourne, Victoria, Australia Abstract Remote Ischemic Preconditioning (RIPC) induced by brief episodes of ischemia of the limb protects against multi-organ damage by ischemia-reperfusion (IR). Although it has been demonstrated that RIPC affects gene expression, the proteomic response to RIPC has not been determined. This study aimed to examine RIPC induced changes in the plasma proteome. Five healthy adult volunteers had 4 cycles of 5 min ischemia alternating with 5 min reperfusion of the forearm. Blood samples were taken from the ipsilateral arm prior to first ischaemia, immediately after each episode of ischemia as well as, at 15 min and 24 h after the last episode of ischemia. Plasma samples from five individuals were analysed using two complementary techniques. Individual samples were analysed using 2Dimensional Difference in gel electrophoresis (2D DIGE) and mass spectrometry (MS). Pooled samples for each of the time-points underwent trypsin digestion and peptides generated were analysed in triplicate using Liquid Chromatography and MS (LC-MS). Six proteins changed in response to RIPC using 2D DIGE analysis, while 48 proteins were found to be differentially regulated using LC-MS. -

Mouse CELA3B ORF Mammalian Expression Plasmid, C-His Tag

Mouse CELA3B ORF mammalian expression plasmid, C-His tag Catalog Number: MG53134-CH General Information Gene: chymotrypsin-like elastase family, member 3B Official Symbol: CELA3B Synonym: Ela3; Ela3b; AI504000; 0910001F22Rik; 2310074F01Rik Source: Mouse cDNA Size: 810bp RefSeq: NM_026419.2 Description Lot: Please refer to the label on the tube Vector: pCMV3-C-His Shipping carrier: Each tube contains approximately 10 μg of lyophilized plasmid. Storage: The lyophilized plasmid can be stored at ambient temperature for three months. Quality control: The plasmid is confirmed by full-length sequencing with primers in the sequencing primer list. Sequencing primer list: pCMV3-F: 5’ CAGGTGTCCACTCCCAGGTCCAAG 3’ pcDNA3-R: 5’ GGCAACTAGAAGGCACAGTCGAGG 3’ Or Forward T7: 5’ TAATACGACTCACTATAGGG 3’ ReverseBGH: 5’ TAGAAGGCACAGTCGAGG 3’ pCMV3-F and pcDNA3-R are designed by Sino Biological Inc. Customers can order the primer pair from any oligonucleotide supplier. Plasmid Resuspension protocol 1. Centrifuge at 5,000×g for 5 min. 2. Carefully open the tube and add 100 μl of sterile water to dissolve the DNA. 3. Close the tube and incubate for 10 minutes at room temperature. 4. Briefly vortex the tube and then do a quick spin to concentrate the liquid at the bottom. Speed is less than 5000×g. 5. Store the plasmid at -20 ℃. The plasmid is ready for: • Restriction enzyme digestion • PCR amplification • E. coli transformation • DNA sequencing E. coli strains for transformation (recommended but not limited): Most commercially available competent cells are appropriate for the plasmid, e.g. TOP10, DH5α and TOP10F´. Manufactured By Sino Biological Inc., FOR RESEARCH USE ONLY. NOT FOR USE IN HUMANS.

Serum Concentrations of Afamin Are Elevated in Patients with Polycystic Ovary Syndrome

A Köninger et al. Afamin in patients with PCOS 1–7 3:120 Research Open Access Serum concentrations of afamin are elevated in patients with polycystic ovary syndrome Angela Köninger, Philippos Edimiris, Laura Koch, Antje Enekwe, Claudia Lamina1, Sabine Kasimir-Bauer, Rainer Kimmig and Hans Dieplinger1,2 Department of Gynecology and Obstetrics, University of Duisburg-Essen, D-45122 Essen, Germany 1Division of Genetic Epidemiology, Department of Medical Genetics, Molecular and Clinical Pharmacology, Innsbruck Medical University, Schöpfstrasse 41, A-6020 Innsbruck, Austria 2Vitateq Biotechnology GmbH, A-6020 Innsbruck, Austria Correspondence should be addressed to H Dieplinger Email [email protected] Key Words: vitamin E-binding protein, afamin, polycystic ovary syndrome, oxidative stress, insulin resistance Endocrine Connections Abstract Oxidative stress seems to be present in patients with polycystic ovary syndrome (PCOS). The aim of this study was to evaluate the correlation between characteristics of PCOS and serum concentrations of afamin, a novel binding protein for the antioxidant vitamin E. A total of 85 patients with PCOS and 76 control subjects were investigated in a pilot cross-sectional study design between 2009 and 2013 in the University Hospital of Essen, Germany. Patients with PCOS were diagnosed according to the Rotterdam ESHRE/ASRM-sponsored PCOS Consensus Workshop Group. Afamin and diagnostic parameters of PCOS were determined at early follicular phase. Afamin concentrations were significantly higher in patients with PCOS than in controls (odds ratio (OR) for a 10 mg/ml increase in afamin=1.3, 95% CI=1.08–1.58). This difference vanished in a model adjusting for age, BMI, free testosterone index (FTI), and sex hormone-binding globulin (SHBG) (OR=1.05, 95% CI=0.80–1.38).

Anatomy of the Axon

Anatomy of the Axon: Dissecting the role of microtubule plus-end tracking proteins in axons of hippocampal neurons ISBN: 978-90-393-7095-7 On the cover: Interpretation of Rembrandt van Rijn’s iconic oil painting The Anatomy Lesson of Dr. Nicolaes Tulp (1632). Instead of a human subject, a neuron is being dissected under the watchful eye of members of the Hoogenraad & Akhmanova labs circa 2018. The doctoral candidate takes on the role of Dr. Nicolaes Tulp. The year in which this thesis is published, 2019, marks the 350th anniversary of Rembrandt’s passing. It is therefore declared Year of Rembrandt. Cover photography: Jasper Landman (Bob & Fzbl Photography) Design and layout: Dieudonnée van de Willige © 2019; CC-BY-NC-ND. Printed in The Netherlands. Anatomy of the Axon: Dissecting the role of microtubule plus-end tracking proteins in axons of hippocampal neurons Anatomie van de Axon: Ontleding van de rol van microtubulus-plus-eind-bindende eiwitten in axonen van hippocampale neuronen (met een samenvatting in het Nederlands) Proefschrift ter verkrijging van de graad van doctor aan de Universiteit Utrecht op gezag van de rector magnificus, prof.dr. H.R.B.M. Kummeling, ingevolge het besluit van het college voor promoties in het openbaar te verdedigen op woensdag 22 mei 2019 des middags te 2.30 uur door Dieudonnée van de Willige geboren op 24 oktober 1990 te Antwerpen, België Promotoren: Prof. dr. C.C. Hoogenraad Prof. dr. A. Akhmanova Voor Hans & Miranda Index Preface 7 List of non-standard abbreviations 9 Microtubule plus-end tracking proteins -

Copy Number Variation in Fetal Alcohol Spectrum Disorder

Biochemistry and Cell Biology Copy number variation in fetal alcohol spectrum disorder Journal: Biochemistry and Cell Biology Manuscript ID bcb-2017-0241.R1 Manuscript Type: Article Date Submitted by the Author: 09-Nov-2017 Complete List of Authors: Zarrei, Mehdi; The Centre for Applied Genomics Hicks, Geoffrey G.; University of Manitoba College of Medicine, Regenerative Medicine Reynolds, James N.; Queen's University School of Medicine, Biomedical and Molecular Sciences Thiruvahindrapuram, Bhooma; The Centre for Applied Genomics Engchuan, Worrawat; Hospital for Sick Children SickKids Learning Institute Pind, Molly; University of Manitoba College of Medicine, Regenerative Medicine Lamoureux, Sylvia; The Centre for Applied Genomics Wei, John; The Centre for Applied Genomics Wang, Zhouzhi; The Centre for Applied Genomics Marshall, Christian R.; The Centre for Applied Genomics Wintle, Richard; The Centre for Applied Genomics Chudley, Albert; University of Manitoba Scherer, Stephen W.; The Centre for Applied Genomics Is the invited manuscript for consideration in a Special Issue? : Fetal Alcohol Spectrum Disorder Keyword: Fetal alcohol spectrum disorder, FASD, copy number variations, CNV https://mc06.manuscriptcentral.com/bcb-pubs Copy number variation in fetal alcohol spectrum disorder Mehdi Zarrei,a Geoffrey G. Hicks,b James N. Reynolds,c,d Bhooma Thiruvahindrapuram,a Worrawat Engchuan,a Molly Pind,b Sylvia Lamoureux,a John Wei,a Zhouzhi Wang,a Christian R. Marshall,a Richard F. Wintle,a Albert E. Chudley,e,f and Stephen W. Scherer,a,g aThe Centre for Applied Genomics and Program in Genetics and Genome Biology, The Hospital for Sick Children, Toronto, Ontario, Canada bRegenerative Medicine Program, University of Manitoba, Winnipeg, Canada cCentre for Neuroscience Studies, Queen's University, Kingston, Ontario, Canada.

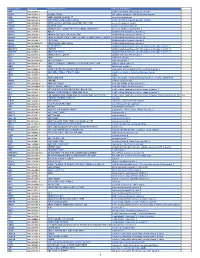

Pancancer Progression Human Vjune2017

| Gene Symbol | Accession | Alias/Prev Symbol | Official Full Name |
| --- | --- | --- | --- |
| AAMP | NM_001087.3 | - | angio-associated, migratory cell protein |
| ABI3BP | NM_015429.3 | NESHBP\|TARSH | ABI family, member 3 (NESH) binding protein |
| ACHE | NM_000665.3 | ACEE\|ARACHE\|N-ACHE\|YT | acetylcholinesterase |
| ACTG2 | NM_001615.3 | ACT\|ACTA3\|ACTE\|ACTL3\|ACTSG | actin, gamma 2, smooth muscle, enteric |
| ACVR1 | NM_001105.2 | ACTRI\|ACVR1A\|ACVRLK2\|ALK2\|FOP\|SKR1\|TSRI | activin A receptor, type I |
| ACVR1C | NM_145259.2 | ACVRLK7\|ALK7 | activin A receptor, type IC |
| ACVRL1 | NM_000020.1 | ACVRLK1\|ALK-1\|ALK1\|HHT\|HHT2\|ORW2\|SKR3\|TSR-I | activin A receptor type II-like 1 |
| ADAM15 | NM_207195.1 | MDC15 | ADAM metallopeptidase domain 15 |
| ADAM17 | NM_003183.4 | ADAM18\|CD156B\|CSVP\|NISBD\|TACE | ADAM metallopeptidase domain 17 |
| ADAM28 | NM_014265.4 | ADAM 28\|ADAM23\|MDC-L\|MDC-Lm\|MDC-Ls\|MDCL\|eMDC II\|eMDCII | ADAM metallopeptidase domain 28 |
| ADAM8 | NM_001109.4 | CD156\|MS2 | ADAM metallopeptidase domain 8 |
| ADAM9 | NM_001005845.1 | CORD9\|MCMP\|MDC9\|Mltng | ADAM metallopeptidase domain 9 |
| ADAMTS1 | NM_006988.3 | C3-C5\|METH1 | ADAM metallopeptidase with thrombospondin type 1 motif, 1 |
| ADAMTS12 | NM_030955.2 | PRO4389 | ADAM metallopeptidase with thrombospondin type 1 motif, 12 |
| ADAMTS8 | NM_007037.4 | ADAM-TS8\|METH2 | ADAM metallopeptidase with thrombospondin type 1 motif, 8 |
| ADAP1 | NM_006869.2 | CENTA1\|GCS1L\|p42IP4 | ArfGAP with dual PH domains 1 |
| ADD1 | NM_001119.4 | ADDA | adducin 1 (alpha) |
| ADM2 | NM_001253845.1 | AM2\|dJ579N16.4 | adrenomedullin 2 |
| ADRA2B | NM_000682.4 | ADRA2L1\|ADRA2RL1\|ADRARL1\|ALPHA2BAR\|alpha-2BAR | adrenoceptor alpha 2B |
| AEBP1 | NM_001129.3 | ACLP | AE binding protein 1 |
| AGGF1 | NM_018046.3 | GPATC7\|GPATCH7\|HSU84971\|HUS84971\|VG5Q | |