POSEIDON: Analyzing the Secrets of the Trident Node Monitoring Tool AUGUST 2018

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


IMPLEMENTATION of TRIDENT: a DISCOVERY-CLASS MISSION to TRITON. K. L. Mitchell1 , L. M. Prockter2, W. E. Frazier1, W. D. Smythe1, B

50th Lunar and Planetary Science Conference 2019 (LPI Contrib. No. 2132) 3200.pdf IMPLEMENTATION OF TRIDENT: A DISCOVERY-CLASS MISSION TO TRITON. K. L. Mitchell1, L. M. Prockter2, W. E. Frazier1, W. D. Smythe1, B. M. Sutin1, D. A. Bearden1, and the Trident Team. 1Jet Propulsion Laboratory ([email protected]), Pasadena, CA 91109-8099, United States, 2Lunar and Planetary Institute/USRA, Houston, TX, United States. Overview: Trident is an exciting mission concept to investigate Neptune’s large moon Triton, an exotic candidate ocean world at 30 AU (Prockter et al., this meeting). The concept is responsive to recommendations of the recent NASA Roadmap to Ocean Worlds study (Hendrix et al., 2019), and to the 2013 Planetary Decadal Survey’s habitability and workings themes (Squyres et al., 2011). A rare, low Δv trajectory (Fig. 1) enables an MMRTG-powered spacecraft fitting under the Discovery cost cap. The spacecraft has a robust design and uses high heritage instruments (table 1) with minimal development costs. Why Now?: By launching during 2026, Trident takes advantage of a rare, efficient gravity-assist alignment, to capitalize on a narrow – but closing – observational window that enables assessment of changes in Triton’s plume activity and surface characteristics since Voyager 2’s encounter one Neptune-Triton season ago. Conclusion: This mission design allows Trident to accomplish a scientifically rich yet radically cost-effective investigation of an unusual icy world, dramatically expanding the horizons of NASA’s Discovery Program. References: [1] Prockter L. M. et al. (2019) LPS L,

Lecture 9 Good Morning and Welcome to LLT121 Classical Mythology

Lecture 9 Good morning and welcome to LLT121 Classical Mythology. In our last exciting class, we were discussing the various sea gods. You’ll recall that, in the beginning, Gaia produced, all by herself, Uranus, the sky, Pontus, the sea and various mountains and whatnot. Pontus is the Greek word for “ocean.” Oceanus is another one. Oceanus is one of the Titans. Guess what his name means in ancient Greek? You got it. It means “ocean.” What we have here is animism, pure and simple. By and by, as Greek civilization develops, they come to think of the sea as ruled by this bearded god, lusty, zesty kind of god. Holds a trident. He’s seriously malformed. He has an arm growing out of his neck this morning. You get the picture. This is none other than the god Poseidon, otherwise known to the Romans as Neptune. One of the things that I’m going to be mentioning as we meet the individual Olympian gods, aside from their Roman names—Molly, do you have a problem with my drawing? Oh, that was the problem. Okay, aside from their Roman names is also their quote/unquote attributes. Here’s what I mean by attributes: recognizable features of a particular god or goddess. When you’re looking at, let’s say, ancient Greek pottery, all gods and goddesses look pretty much alike. All the gods have beards and dark hair. All the goddesses have long, flowing hair and are wearing dresses. The easiest way to tell them apart is by their attributes.

Greek Gods & Goddesses

Greek Gods & Goddesses The Greek Gods and Goddesses Myths https://greekgodsandgoddesses.net/olympians/ The Twelve Olympians In the ancient Greek world, the twelve great gods and goddesses of the Greeks were referred to as the Olympian Gods, or the Twelve Olympians. The name of this powerful group of gods comes from Mount Olympus, where the council of 12 met to discuss matters. All 12 Olympians had a home on Mount Olympus and that was where they were most commonly found. HADES, the god of the Underworld, preferred to live there, and POSEIDON often chose to stay in his palace under the sea. Most of the other Olympians would be on Mount Olympus year round unless they were travelling. HESTIA used to be one of the Olympians, but the constant fighting and bickering between the gods annoyed her and she eventually gave up her seat to the god of wine, DIONYSUS. Even though she left the council, Hestia still kept a home on Mount Olympus. APHRODITE was on the council but, in most Greek mythological stories, her husband HEPHAESTUS was not. At the famous Parthenon temple in Greece, there is a statue of each of the 12 Olympian gods. Hades does not have a statue, but Hephaestus does. The question of who the 12 Olympians are really depends on who is telling the story. Nobody is truly sure if Hades or Hephaestus can be classed as the Twelfth Olympian. So, because of the way Greek myths were told and retold in different ways, there are actually 14 gods and goddesses who can be considered an Olympian god.

Focus on Greek Gods and Goddesses

Focus on Greek Gods and Goddesses Ancient Greek gods and goddesses at The Ashmolean • The Ashmolean Museum’s collections from ancient Greece include Greek gods and goddesses represented on pottery, coins and in sculpture. • Gallery displays also include objects used in religious contexts including votive offerings, inscribed plaques, and lekythoi which once contained liquids for making offerings to the gods. • The same gods were worshipped throughout ancient Greece and formed a key part of daily life. • The Greeks believed their gods were very powerful and should be treated with reverence and respect. They honoured the gods by presenting them with gifts and sacrifices at temples. In return, they hoped that the gods would help them with different aspects of their lives. • Greek religion was polytheistic. Each god was responsible for many different areas of life and was worshipped according to the needs of the individual at the time. They were represented in human form, each with their own attributes. Galleries containing ancient Greek objects: Gallery 7: Money Gallery; Gallery 14: Cast Gallery; Gallery 16: The Greek World; Gallery 20: Aegean World; Gallery 21: Greek and Roman Sculpture. Gallery 16: The Greek World 1. Zeus Cast of a bronze statue of a man, possibly Zeus, about 470BC. The original was found in the 1920s at sea near Euboea, Greece. The nude bearded male about to hurl a now-lost object is most commonly identified as Zeus brandishing a thunderbolt, as Gallery 14: Cast Gallery 2. Nike Cast of Nike of Paionios, from Olympia, c425-421BC. Nike, the winged goddess of victory, is flying in to land on top of a tall triangular pillar as an eagle passes beneath her feet.

Ancient Thunder Study Guide 2021 1

BTE • Ancient Thunder Study Guide 2021 Ancient Thunder Study Guide In this study guide you will find all kinds of information to help you learn more about the myths that inspired BTE's original play Ancient Thunder, and about Ancient Greece and its influence on culture down to the present time. What is Mythology? The stories the actors tell in Ancient Thunder are called myths. Learn what myths are and how they are associated with world cultures. When and where was Ancient Greece? The myths in Ancient Thunder were created thousands of years ago by people who spoke Greek. Learn about where and when Ancient Greece was. What was Greek Art like? Learn about what Greek art looked like and how it inspired artists who came after them. How did the Greeks make Theatre? The ancient Greeks invented a form of theatre that has influenced storytelling for over two thousand years. Learn about what Greek theatre was like. What was Greek Music like? Music, chant, and song were important to Greek theatre and culture. Learn about how the Greeks made music. What are the Myths in Ancient Thunder? Learn more about the Greek stories that are told in Ancient Thunder. Activities. Sources. Meet the Cast. What is Mythology? Myths are stories that people tell to explain and understand how things came to be and how the world works. The word myth comes from a Greek word that means story. Mythology is the study of myths.

“How Athens Got Its Name” Retelling by Joanna Davis-Swing

How Athens Got Its Name Retelling by Joanna Davis-Swing In ancient times, when the world was young, Cecrops was born of the earth. Half man, half snake, he roamed rugged mountains, wooded valleys, and rocky shores. He traveled wide before venturing into the region of Attica in Greece. Here lived goat farmers and bee keepers in peaceful tranquility, and Cecrops became their king. He planned a beautiful city, set atop a large rock that rose from the plains. Fertile land spread out below, and the nearby sea boasted two open harbors. The gods on Olympus watched Cecrops and approved of his plans. They could foresee that his city would rise to fame and fortune in the world, and they wanted a share of the glory. Indeed, the gods fought over who was to be the patron of the city. Zeus, who deplored fighting on Mount Olympus, declared a contest between Poseidon, god of the sea, and Athena, goddess of wisdom. Each would give Cecrops a gift, and Cecrops himself would decide who would be patron of his city. On the appointed day, Poseidon and Athena gathered with Cecrops and his people on top of the rock. Poseidon carried his trident and stood proudly surveying the land. Modest Athena wore her golden helmet and carried her spear. Cecrops signaled for the contest to begin, and Poseidon immediately raised his trident high, then struck it mightily into the ground. The rock shook and the earth trembled. A great crack appeared in the rock and spread wide, filling with water.

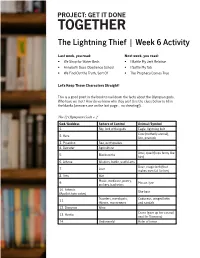

The Lightning Thief | Week 6 Activity

The Lightning Thief | Week 6 Activity

Last week, you read: • We Shop for Water Beds • Annabeth Does Obedience School • We Find Out the Truth, Sort Of

Next week, you read: • I Battle My Jerk Relative • I Settle My Tab • The Prophecy Comes True

Let’s Keep These Characters Straight! This is a good point in the book to nail down the facts about the Olympian gods. Who have we met? How do we know who they are? Use the clues below to fill in the blanks (answers are on the last page… no cheating!).

The 12 Olympian Gods + 2

| God/Goddess | Sphere of Control | Animal/Symbol |
| --- | --- | --- |
| 1. ____ | Sky, lord of the gods | Eagle, lightning bolt |
| 2. Hera | ____ | Cow (motherly animal), lion, peacock |
| 3. Poseidon | Sea, earthquakes | ____ |
| 4. Demeter | Agriculture | ____ |
| 5. ____ | Blacksmiths | Anvil, quail (hops funny like him) |
| 6. Athena | Wisdom, battle, useful arts | ____ |
| 7. ____ | Love | Dove, magic belt (that makes men fall for her) |
| 8. Ares | War | ____ |
| 9. ____ | Music, medicine, poetry, archery, bachelors | Mouse, lyre |
| 10. Artemis | ____ | She-bear (Apollo’s twin sister) |
| 11. ____ | Travelers, merchants, thieves, messengers | Caduceus, winged helm and sandals |
| 12. Dionysus | Wine | ____ |
| 13. Hestia | ____ | Crane (gave up her council seat for Dionysus) |
| 14. ____ | Underworld | Helm of terror |

Your New Neighborhood: Elysium Look through magazines or the internet for pictures of beautiful houses you’d like to live in. Make a collage of what you imagine Elysium looks like.

Answers

| God/Goddess | Sphere of Control | Animal/Symbol |
| --- | --- | --- |
| 1. Zeus | Sky, lord of the gods | Eagle, lightning bolt |
| 2. | | Cow (motherly animal), |

Greek Mythology #18—POSEIDON by Joy Journeay

Western Regional Button Association is pleased to share our educational articles with the button collecting community. This article appeared in the May 2017 WRBA Territorial News. Enjoy! WRBA gladly offers our articles for reprint, as long as credit is given to WRBA as the source, and the author. Please join WRBA! Go to www.WRBA.us Greek Mythology #18—POSEIDON by Joy Journeay God of: The Sea, Earthquakes, Storms and Horses. Home: MOUNT OLYMPUS or the Sea. Symbols: Trident, Fish, Dolphin, Horse and Bull. Parents: Cronus and Rhea. Consorts: Amphitrite. Siblings: Hestia, Hades, Hera, Zeus, Demeter. Children: Theseus, Triton, Polyphemus, Belus, Agenor, Neleus, Atlas. Roman Counterpart: Neptune. Poseidon was the second son of Cronus and Rhea. Like his other siblings, he was swallowed by Cronus at birth then later saved by his brother Zeus. Poseidon is second in power only to Zeus. Poseidon is most often shown in a chariot pulled by horses or hippocampus, beasts with the front half of a horse and the back half of a fish tail. Poseidon is associated with dolphins and his trident, a three-pronged spear. His beautiful palace on the seafloor was made of coral and gemstones. Poseidon had a strong and difficult personality, and was known for his greed. He was often at odds with other gods as his eyes often rested upon their possessions, which he wanted to make his own. (Image caption: Poseidon from Milos. 2nd Century BC.) Athena and Poseidon competed for the city of Athens, resolving to settle the issue by each offering the Athenians a gift and letting the city

Greek Gods/Mythology Notes - Information on the Greek Belief System Comes from Many Sources

Greek Gods/Mythology Notes - Information on the Greek belief system comes from many sources. Unlike followers of religions such as Christianity, Judaism, & Islam, the Greeks did not have a single sacred text, such as the Bible or Koran, from which their beliefs and religious practices derived. Instead, they generally used oral traditions, passed on by word of mouth, to relate sacred stories. Priests and priestesses of various gods would also guide people in worship in various temples across Greece. We know something about these beliefs because Greek poets such as Homer, Hesiod and Pindar, and Greek dramatists such as Euripides, Aristophanes & Sophocles mention the myths in their various works. Greek mythology, however, was not static: it was constantly changing and evolving. Thus, there are often many different versions of the various Greek myths, some of which contradict one another, and some of the example myths you read in here may differ from ones you have previously heard. That does not necessarily make either version “wrong”, simply different. - The Greeks had many gods and goddesses, over three thousand if one were to count the many minor gods and goddesses. These deities made up the Greek pantheon, a word used to mean all the gods and goddesses (from the Greek word “pan” meaning all, and “theos” meaning gods). However, throughout Greece, there were always twelve (called the Twelve Olympians) that were the most important. They are: 1. Zeus 2. Hera 3. Poseidon 4. Athena 5. Apollo 6. Artemis 7. Hephaestus 8. Ares 9. Hermes 10. Aphrodite 11. Demeter 12. Dionysus 13.

Ancient Greece

Diagram – Map of Ancient Greece

Map of Ancient Greece: Greece’s position next to the sea (there are over 1400 islands) meant Ancient Greeks were a seafaring people. Trade between the islands led to the creation of ‘city-states’ (polis). Each city-state was ruled by a powerful city, led by a ruler or (later) government. Greece is a warm country, but winds from the Mediterranean, and rains from the north, kept temperatures livable and

Places, People, and Daily Life in Ancient Greece

| Place | Description | Where? | Key Fact |
| --- | --- | --- | --- |
| The Acropolis | The Acropolis of Athens is the best known acropolis in Greece – an acropolis is a settlement built on high ground. It was built during the rule of Pericles, a golden age for Athens, as a monument to the city’s greatness. | Athens | The Acropolis is on a flat-topped rock that rises 150m above sea level |
| The Parthenon | The Parthenon is a temple in the middle of the Acropolis in Athens. It was a temple to Athena, the goddess of wisdom, and originally had a statue to her. It has now stood for nearly 2,500 years, a superb architectural achievement! | Athens | The building used 22,000 tonnes of marble! |
| Mount Olympus | Mount Olympus is the highest mountain in Greece. It was believed in Ancient Greek times that when things needed to be decided in the mystical world, the 12 main Gods would gather at Mount Olympus, and that many lived there. | 50 miles southwest of Thessaloniki | Mount Olympus rises to 2,918m |

Knossos Palace is positioned in what was the capital of Minoan Crete.

Greek God Pantheon.Pdf

Zeus Cronos, father of the gods, who gave his name to time, married his sister Rhea, goddess of earth. Now, Cronos had become king of the gods by killing his father Oranos, the First One, and the dying Oranos had prophesied, saying, “You murder me now, and steal my throne — but one of your own sons will dethrone you, for crime begets crime.” So Cronos was very careful. One by one, he swallowed his children as they were born: first, three daughters, Hestia, Demeter, and Hera; then two sons — Hades and Poseidon. One by one, he swallowed them all. Rhea was furious. She was determined that he should not eat her next child, who she felt sure would be a son. When her time came, she crept down the slope of Olympus to a dark place to have her baby. It was a son, and she named him Zeus. She hung a golden cradle from the branches of an olive tree, and put him to sleep there. Then she went back to the top of the mountain. She took a rock and wrapped it in swaddling clothes and held it to her breast, humming a lullaby. Cronos came snorting and bellowing out of his great bed, snatched the bundle from her, and swallowed it, clothes and all. Rhea stole down the mountainside to the swinging golden cradle, and took her son down into the fields. She gave him to a shepherd family to raise, promising that their sheep would never be eaten by wolves. Here Zeus grew to be a beautiful young boy, and Cronos, his father, knew nothing about him.

Athena Tritogeneia, Poseidon's Trident And

ATHENA TRITOGENEIA, POSEIDON’S TRIDENT AND EARLY SACRED TRINITY DMITRI PANCHENKO Saint-Petersburg State University; Higher School of Economics in Saint-Petersburg [email protected] ABSTRACT. The name Tritogeneia likely means ‘born of the Third’, this Third one being the supreme god, the Most High. Poseidon (at least Poseidon Helikonios) was once such a god. He was the lord of the water that descended from heaven and a deity closely associated with the celestial pole. His trident is the symbol that indicates his celestial nature, and this symbol developed from a previous one – a raised hand with three fingers. This number of fingers signified the similarity with the dwellers of the sky – the birds, with their three toes in front. KEYWORDS: Athena, Tritogeneia, Poseidon, trident, triads, mythology. Athena is repeatedly called Τριτογένεια in Homeric poems (Il. 4. 515; 8. 39; 22. 183; Od. 3. 378), in Hesiod (Theog. 895; 924) and elsewhere. One may wonder whether Homer and Hesiod were aware of the meaning of the epithet they used, but one confidently concludes that the later Greeks were not. This follows from the variety of interpretations suggested in antiquity. To be sure, there was common agreement that the second part of the word meant ‘born’. Concerning the first part, two main ideas were current. Some derived it from τρίτος, ‘third’, the others from the names Τρίτων or Τριτωνίς, identified either with a lake in Libya or with a stream in Boeotia or Thessaly or elsewhere. Both versions figured already in the authors of the fifth century BC. The catalogue of Democritus’ works composed by Thrasylus included the title Τριτογένεια, “so called because three things, on which all human things depend, come from her” (D.L.