Optimization of In-Vehicle Network Design

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Automotive Grade DO-218 Load Dump TVS Series

Issue Number 001, May 2019. New Product Announcement: DM5WxxAQ, DM6WxxAQ, DM8WxxAQ Automotive-Grade DO-218 Load Dump TVS Series. Diodes Incorporated introduces a series of new high-temperature automotive-compliant load-dump transient voltage suppressors (TVS) packaged in DO-218. The devices are designed to protect sensitive semiconductors in electronic modules from load-dump surge transients, as defined in ISO 16750-2, that are generated if the battery is disconnected from the vehicle while the alternator charges. Featuring a choice of reverse stand-off voltage from 10V to 36V (or 43V for the DM8WxxAQ series), these new automotive-compliant TVS devices are offered as unidirectional devices and are able to dissipate up to 3600W (DM5WxxAQ), 4600W (DM6WxxAQ), and 6600W (DM8WxxAQ) per 10/1000µs pulse transient. All devices are rated to +175ºC, and comply with the automotive standard ISO 7637-2 (pulses 1, 2a, 2b, 3) and load dump ISO 16750-2 (pulse A and B). The case material is composed of halogen-free “green” molding compound for protection of the … The Diodes Advantage. AEC-Q101 Qualified and PPAP Supported: These devices are qualified to high reliability qualification standards in accordance with AEC-Q101 and supported by a production part approval procedure (PPAP). ISO 16750-2 Compliance: These parts are suitable to protect sensitive automotive circuits against load-dump surge defined in ISO 16750-2 (Pulse A and B). ISO 7637-2 Compliance: These parts are suitable to protect sensitive automotive circuits against surges defined in ISO 7637-2 (pulses 1, 2a, 2b and 3). High Forward Surge Current Capability and Excellent Clamping Capability: The high forward surge overload rating ensures more rugged applications and improves device reliability.

Automotive Electronics

Automotive Electronics. Amphenol Advanced Sensors. Advanced Driver Assistance Systems (ADAS) • Automotive Ethernet • Body Control, Safety, Security, and Comfort • Telematics, Multimedia, Infotainment, GPS, Navigation, and Camera • Head & Interior LED Lighting • Battery Management Systems and EV Charging Stations. Enabling the Automotive Industry with our Connection Innovation and High Speed Technology. ABOUT AMPHENOL ICC AUTOMOTIVE: Amphenol ICC brings a broad array of innovative technology and solutions to support the growth in the automotive industry, particularly in plating, signal integrity performance, and power management. As automobiles become more ADAS-rich and head towards fully autonomous operation, the key differentiator between suppliers will be their capabilities and performance. Amphenol ICC's capable engineering team develops connector solutions according to customer needs, even during the design phase of application. INDUSTRY LEADER: • Products accommodate standards like Open Alliance, USCAR, LV214, ISO 8092, Kojiri, and SAE J 2223 • Solutions enable HS signaling technologies like LVDS and Ethernet • Leader in Automotive Ethernet and committed to Open Alliance TC2 / TC9 with 100 BASE-T1 (100 Mbps) and 1000 BASE-T1 (1 Gbps). TECHNOLOGIES & SOLUTIONS EXPERT: • Leverage in deploying new solutions for Automotive applications: signal integrity (HS Signal Transmission and EMC); power contact design and simulation capabilities; contact and connector miniaturization; PCB contact technologies. CAPABILITIES: • Automotive offerings in WTB, BTB, I/O, and FFC/FPC • Strength of existing portfolio in modular BTB & WTB • Focus on new standard IO connectors and integration of new standard consumer electronics IO • Interconnect solutions for High Speed. CUSTOMER SATISFACTION: • Meet short lead time requirements • Advocate value-added custom solutions wherever possible • Better value • Close proximity to customers’ location -

Current Catalog

York Electronics ELECTRONIC SERVICE CENTRE. Mobile Automotive Electronic Accessory Specialists. Aftermarket Product Guide. [email protected] 1-888-650-YORK (9675) www.yorkelec.com Press 1, 1 for Client Call Center. Version: MMXXI. Factory Navigation Upgrade • Available for most 2013 and up GM vehicles • Vehicles must be equipped with MyLink/Intellilink/CUE • Use factory GM parts to upgrade to Navigation NAVGM20. Kenwood Navigation • 6.8” WVGA Monitor • Apple CarPlay™ & Android Auto™ Ready • Garmin GPS built in with 3D Terrain View • Built in Bluetooth (Dual phone connection) & HD Radio • Sirius/XM ready with optional module • Dual USB, Audio/Video, mini jack inputs • 3 camera inputs • Dash Camera ready • DVD/CD/MP3/DIVX/MPEG/VCD compatible • Add or retain a backup camera, call for details NAVG20. XM Add On • Add XM (Subscription required) to your factory radio via USB • Vehicle applications may vary USBXM20. Dual USB Charger • Add USB charging to any vehicle • Charges two devices at one time • Dual 2.5A charge ports USBCH10. Add extra headphones to any video system: 1ch HP100, 2ch HP200. Advent Overhead DVD • Full colour monitor with built in DVD player • Available in black, pewter or shale colours • Play audio through vehicle stereo • LED dome lights • 2 wireless headphones • Wireless remote controls • AUX input • Side load DVD mechanism • Available in 9”, 10” or -

Vehicle Technology Trends in Electronics for the North American Market; Opportunities for the Taiwanese Automotive Industry

Vehicle Technology Trends in Electronics for the North American Market; Opportunities for the Taiwanese Automotive Industry by December 2006 The statements, findings, and conclusions herein are those of the authors and do not necessarily reflect the views of the project sponsor. Table of Contents: Table of Contents; List of Tables; List of Figures; List of Charts; Acknowledgements; Introduction; Active Safety; Safety Systems; Infotainment -

AUMT 1349 Automotive Electronics Theory Course Syllabus: Spring 2017

AUMT 1349 Automotive Electronics Theory Course Syllabus: Spring 2017 “Northeast Texas Community College exists to provide responsible, exemplary learning opportunities.” Professor: Robert Spencer Office: Carroll Shelby Automotive IT-112 Phone: 903-434-8156 Email: [email protected] Office Hours Monday Tuesday Wednesday Thursday Friday Online 9:30a - 3:00p Appointment N/A The information contained in this syllabus is subject to change without notice. Students are expected to be aware of any additional course policies presented by the instructor during the course. Catalog Course Description: AUMT 1349 - Automotive Electronics Theory 3 credit hours Lecture/Lab/Clinical: Two hours of lecture and two hours of lab each week. A course in automotive technology including electrical principles, semiconductor and integrated circuits, digital fundamentals, 5 volt binary coding, hexadecimal codes, microcomputer systems, and electrical test equipment. Note: Additional course fee(s) required. Class Schedule: M/W tba Required Textbook(s): Fundamentals of Automotive Technology – Principles & Practice CDX Automotive Jones and Bartlett Learning ISBN 978-1-4496-7108-2 Recommended Reading(s): See: Blackboard Student Provisions: Certain tools and equipment related to the topic of study will be REQUIRED of the student to provide for themselves. A tool list is available from your instructor. Your instructor may also require additional tools and/or determine certain tool specifications, for use in classes during the term. It is HIGHLY RECOMMENDED that the student furnish a lab vehicle to be used in lab and hands-on practical exercises. This vehicle is NOT the student’s daily driver. The student should understand that the vehicle can greatly enhance their learning experience. -

Silver in Printed & Flexible Electronics

Market Trend Report: Silver in Printed & Flexible Electronics. Table of Contents: Executive Summary (Chart 1: Printed & Flexible Electronics Market Silver Demand); Electronics Market Segments – Breaking It Down (Chart 2: Comparison of Electronics Markets Revenue in 2020 & 2025); Precious Metals Used in the Electronic Markets (Chart 3: Electronics Precious Metals Demand Percent of Total Supply; Chart 4: Electronics Precious Metals Demand; Chart 5: Electronics Market Segment Precious Metals Spend as a Percent of Market Revenue); Precious Metals Products (Chart 6: Silver Demand by Market Segment); The Fundamentals of Printed and Flexible Electronics (Chart 7: 2020 Printed & Flexible Electronics Market Share; Chart 8: Electronics Market Trajectory Comparison); Visualizing the Future: Printed and Flexible Electronics Roadmap; Conclusion (Chart 9: Silver Industrial Demand Forecast). Executive Summary: This Market Trend Report for the Silver Institute examines silver’s growing role in printed and flexible electronics. According to our research, 33.9% of the annual silver global supply in 2020 ended up in electronics. This is a total of 327 million troy ounces (Moz) that finds its way into various electronics markets every year. Given the projected growth of electrification, we are confident that this will continue to grow over time since silver is the world’s most conductive material. Adding to this growth will be an expansion in the solar photovoltaic segment, which already consumes 10% of the global silver supply. According to our forecasts, the amount of silver consumed in solar photovoltaics (solar PV) will climb to 15% (155 Moz) by 2025, and 19% (197 Moz) by 2030. -

2021 Rosen In-Vehicle Entertainment Catalog

2021 Rosen Product Guide: Headrest Systems | Seat-Back Systems | Overhead Systems. SMART TV SEAT-BACK SYSTEMS: DOWNLOAD YOUR FAVORITE APPS, PLAY YOUR DVD COLLECTION AND CONTROL THE SEAT-BACK SYSTEM FROM ANYWHERE IN THE VEHICLE. NOTE: ANDROID OS INCLUDES GOOGLE PLAY APP STORE TO DOWNLOAD AND ACCESS ALL APPS ON THE SYSTEM. ALL APPS SHOWN ARE TRADEMARKS OR REGISTERED TRADEMARKS OF THEIR RESPECTIVE OWNER. BUILT-IN VOLTAGE STABILIZER FOR VEHICLES WITH FUEL SAVER (START/STOP) FEATURE. ROSSBA2 DUAL 10.1” SMART TV SEAT-BACK ENTERTAINMENT SYSTEM: DUAL ANDROID, DVD, HDMI, SD, USB, AND TOUCH SCREEN INTERFACE. The ROSSBA2 Seat-back system brings vehicle entertainment to a whole new level, with a Dual Android OS touch-screen interface, DVD, SD Card reader, USB ports and HDMI ports. This Ultimate Seat-back system allows you to download your favorite Apps, play your DVD collection and control the monitors from anywhere in the vehicle using the Smart-phone App. • 10.1” Digital LCD Display (1024 x 600) • Capacitive Multi-Touch Panel • Android 8.1+ Operating System • Quad Core Process – 8GB RAM • Google Play Store • USB Input for Digital Media Playback and Smart Device Charging • Built-in DVD Player • HDMI Input to Connect an External Game System • … the Vehicle Sound System) • (2) Dual Channel Wireless Headphones Included • Universal Mounting Bracket (Does not Interfere with Factory Headrest Movement) • Specialty Mounting Brackets Available as Optional • 16GB Built-in Memory to Download Off-Line Content from Apps such as Netflix and -

Car Infotainment Systems Need Multi-Output

http://www.eetimes.com/General/PrintView/4408195 Engineers from Linear Technology outline the need for a multi-output IC that can provide a small solution footprint with a configurable number of moderately powered rails. Background: The popularity of automotive infotainment systems continues to explode. Modern technological advancements such as satellite radio, touch screens, navigation systems, Bluetooth, HDTV, integrated cell phones, media players and video game systems have enhanced the driving experience. With over 50 million cars produced each year worldwide, the majority have some type of integrated infotainment system. From a power supply perspective, a basic infotainment console may require several low voltage power supply rails with several Amps of total current, and a premium console may require even more. Traditionally, these voltage rails and current levels have been supplied by a multitude of discrete power regulator ICs or large overly-integrated power management integrated circuits (PMICs). However, these large PMICs often have more rails than are needed, require a large circuit footprint and are usually under-powered for some of the rails. As a result, there is a need for a multi-output IC that can provide a small solution footprint with a configurable number of moderately powered rails. Furthermore, wouldn’t there be further benefit if this same IC could be configured a variety of different ways to accommodate changes in power requirements that might arise during the development process? Thanks to innovative circuit design, this type of IC is now a reality. Infotainment power system design challenges: Electronic systems design for automotive applications is challenging for many reasons: space is highly restricted, the operating temperature range must be wide, noise must be minimized, battery transients must be tolerated and quality levels must be high. -

Automotive Circuit Protection Using Littelfuse Automotive TVS Diodes

Application Note: Automotive Circuit Protection using Littelfuse Automotive TVS Diodes. The Challenge: The designers of automotive electronics face many technical challenges during the system design process, including designing methods of protection against a variety of electrical hazards. The three major sources of electrical hazards in these systems are electrostatic discharge (ESD), lightning, and switching loads in power electronics circuits. Overcoming transient surges that can harm the vehicle’s electronics is one of the biggest challenges of the design process. The Solution: Protecting automotive electronics includes eliminating transient surges that can damage the control units, infotainment electronics, sensors, fuel injectors, valves, motors, 12/24/42/48 volt powertrains, and hydrolytic controllers, etc. (Note: For 48V power systems with a high power surge rating, contact Littelfuse for technical support and application testing.) What do Littelfuse Transient Voltage Suppression (TVS) Diodes Protect? As shown in Figure 1, Littelfuse TVS diodes provide protection for four main categories of vehicle systems: safety, performance and emissions, comfort and convenience, and hybrid vehicles. In modern automotive designs, all on-board electronics are connected to the battery and the alternator. As indicated in Figure 2, the output of the alternator is unstable and requires further conditioning before it can be used to power the vehicle’s other systems. Currently, most alternators have zener diodes to protect against load dump surges; however, these are still not sufficient. During the powering or switching of inductive loads, the battery is disconnected, so that unwanted spikes or transients are generated. If left uncorrected, these transients would be transmitted along the power line, causing individual electronics and sensors to malfunction or permanently damaging the vehicle’s electronic system, affecting overall reliability. -

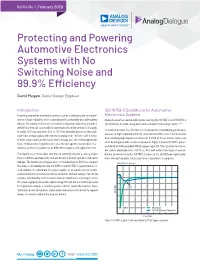

Protecting and Powering Automotive Electronics Systems with No Switching Noise and 99.9% Efficiency

Vol 54 No 1, February 2020. Protecting and Powering Automotive Electronics Systems with No Switching Noise and 99.9% Efficiency. David Megaw, Senior Design Engineer. Introduction: Powering automotive electronics systems can be challenging due to requirements of high reliability while contending with a relatively unstable battery voltage. The variety of electrical and mechanical systems that interface with a vehicle’s battery can cause wild voltage excursions in the nominal 12 V supply. In reality, 12 V can vary from –14 V to +35 V for extended periods of time and experience voltage spikes with extremes ranging from +150 V to –220 V. Some of these surges and transients arise from everyday use, others from fault conditions or human error. Regardless of cause, the damage they can produce in a vehicle’s electronics system can be difficult to diagnose and expensive to fix. The experience of automakers over the last century has led to a catalog of elec… ISO 16750-2 Conditions for Automotive Electronics Systems: Analog Devices has several publications covering the ISO 7637-2 and ISO 16750-2 specifications in detail, along with how to simulate them using LTspice®.1,2,3,4 In its latest iteration, the ISO 7637-2 electromagnetic compatibility specification focuses on high amplitude (>100 V), short duration (150 ns to 2 ms) transients from relatively high impedance sources (2 Ω to 50 Ω). These voltage spikes can often be mitigated with passive components. Figure 1 shows ISO 7637-2 pulse 1 as defined and with an added 330 µF bypass capacitor. The capacitance reduces the spike’s amplitude from –150 V to –16 V, well within the range of reverse battery protection circuitry.

CHAPTER 5 Automotive Power Electronics 421

Automotive Power Semiconductor Applications, Philips Semiconductors. CHAPTER 5 Automotive Power Electronics: 5.1 Automotive Motor Control (including selection guides); 5.2 Automotive Lamp Control (including selection guides); 5.3 The TOPFET; 5.4 Automotive Ignition. 5.1.1 Automotive Motor Control with Philips MOSFETS: The trend for comfort and convenience features in today’s cars means that more electric motors are required than ever - a glance at Table 1 will show that up to 30 motors may be used in top of the range models, and the next generation of cars will require most of these features as standard in middle of the range models. All these motors need to be activated and deactivated, usually from the dashboard; that requires a lot of copper cable in the wiring harnesses - up to 4km in overall length, weighing about 20 kg. Such a harness might contain over 1000 wires, each requiring connectors at either end and taking up to six hours to build. Not only does this represent a cost and weight penalty, it can also create major ’bottlenecks’ at locations such as door hinges, where it becomes almost impossible to physically accommodate the 70-80 wires required. Wound field DC Commutator Motors: Traditionally motors with wound stator fields, a rotor supply fed via brushes and a multi-segment commutator - see Fig. 1 - have been widely used. Recently, however, they have been largely replaced by permanent magnet motors. Characteristically they are found with square frames. They may be Series wound (with high torque at start up but tend to ’run away’ on no-load), Shunt wound (with relatively flat speed/torque characteristics) or (rarely) Compound wound. -

Fatigue Mechanisms in Al-Based Metallizations in Power Mosfets Roberta Ruffilli

Fatigue mechanisms in Al-based metallizations in power MOSFETs. Roberta Ruffilli. To cite this version: Roberta Ruffilli. Fatigue mechanisms in Al-based metallizations in power MOSFETs. Material chemistry. Université Paul Sabatier - Toulouse III, 2017. English. NNT: 2017TOU30256. tel-01666437v3. HAL Id: tel-01666437, https://tel.archives-ouvertes.fr/tel-01666437v3. Submitted on 23 Nov 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Thesis submitted for the degree of Doctorate of the Université de Toulouse, awarded by Université Toulouse 3 Paul Sabatier (UT3 Paul Sabatier). Presented and defended on 08/12/2017 by Roberta RUFFILLI. Modes de fatigue des métallisations à base d’aluminium dans les composants MOSFET de puissance (Fatigue mechanisms in Al-based metallizations in power MOSFETs). Jury: Mauro CIAPPA, ETH, Zurich (Reviewer); Josef LUTZ, TU, Chemnitz (Reviewer); Marie-Laure LOCATELLI, Laboratoire LAPLACE, Toulouse (Jury member); Michael NELHIEBEL, K.A.I - Infineon, Villach (Jury member); Stéphane LEFEBVRE, SATIE - ENS, Cachan (Jury member)