When Bad Code Comes from Good Specs

Recommended publications

-

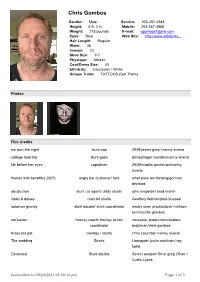

Chris Gombos

Chris Gombos Gender: Male Service: 203-257-4988 Height: 6 ft. 2 in. Mobile: 203-257-4988 Weight: 215 pounds E-mail: [email protected] Eyes: Blue Web Site: http://www.atbstunts... Hair Length: Regular Waist: 36 Inseam: 33 Shoe Size: 9.5 Physique: Athletic Coat/Dress Size: 45 Ethnicity: Caucasian / White Unique Traits: TATTOOS (Got Them) Photos Film Credits we own the night stunt cop 2929/james gray/ manny siverio college road trip stunt goon disney/roger kumble/manny siverio life before her eyes cop/driver 29/29/vladim perelman/manhy siverio friends with benefits( 2007) angry bar customer/ falls what were we thinking/gorman bechard abuduction stunt cia agent/ utility stunts john singleton/ brad martin violet & daisey man #4 stunts Geoffery fletcher/pete bucossi solomon grundy stunt double/ stunt coordinator wacky chan productions/ mattson tomlin/chris gombos nor'easter hockey coach/ hockey action noreaster productions/andrew coordinator brotzman/chris gombos brass tea pot cowboy / stunts chris columbo/ manny siverio The wedding Stunts Lionsgate/ justin zackham/ roy farfel Devoured Stunt double Secret weapon films/ greg Oliver / Curtis Lyons Generated on 09/24/2021 08:39:14 pm Page 1 of 3 Television Bored to death season 3 ep8 Stunt double Danny hoch Hbo / /Pete buccossi Law & order svu " pursuit" Stunt double Jery hewitt / Ian McLaughlin ( stunt coordinators ) jersey city goon #2/ stunts fx/daniel minahan/pete buccosi person of interest season 1 ep 2 rough dude#2 / stunts cbs/ /jeff gibson "ghost" Boardwalk empire season 2 ep Mike Griswalt/ stunts Hbo/ Timothy van patten/ steve 12 pope Onion news network season 2 ep Stunt driver/ glen the producer Comedy central / / manny Siverio 4 Onion news network season 2 ep Stunt reporter Comedy central / / manny Siverio 6 gun hill founding patriot1 / stunts bet/reggie rock / jeff ward blue bloods ep "uniforms" s2 joey sava / stunts cbs/ / cort hessler ep11 Person of interest ep firewall Stunts/ hr cop CBS / Jonah Nolan / jeff 
gibson Person of interest ep bad code Brad / stunts CBS/. -

Adam Tornhill, «Your Code as a Crime Scene»

Early praise for Your Code as a Crime Scene This book casts a surprising light on an unexpected place—my own code. I feel like I’ve found a secret treasure chest of completely unexpected methods. Useful for programmers, the book provides a powerful tool to smart testers, too. ➤ James Bach Author, Lessons Learned in Software Testing You think you know your code. After all, you and your fellow programmers have been sweating over it for years now. Adam Tornhill uses thinking in psychology together with hands-on tools to show you the bad parts. This book is a red pill. Are you ready? ➤ Björn Granvik Competence manager Adam Tornhill presents code as it exists in the real world—tightly coupled, unwieldy, and full of danger zones even when past developers had the best of intentions. His forensic techniques for analyzing and improving both the technical and the social aspects of a code base are a godsend for developers working with legacy systems. I found this book extremely useful to my own work and highly recommend it! ➤ Nell Shamrell-Harrington Lead developer, PhishMe By enlisting simple heuristics and data from everyday tools, Adam shows you how to fight bad code and its cohorts—all interleaved with intriguing anecdotes from forensic psychology. Highly recommended! ➤ Jonas Lundberg Senior programmer and team leader, Netset AB After reading this book, you will never look at code in the same way again! ➤ Patrik Falk Agile developer and coach Do you have a large pile of code, and mysterious and unpleasant bugs seem to appear out of nothing? This book lets you profile and find out the weak areas in your code and how to fight them. -

Refactoring-Javascript.Pdf

Refactoring JavaScript Turning Bad Code into Good Code Evan Burchard Refactoring JavaScript by Evan Burchard Copyright © 2017 Evan Burchard. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. • Editors: Nan Barber and Allyson MacDonald • Production Editor: Kristen Brown • Copyeditor: Rachel Monaghan • Proofreader: Rachel Head • Indexer: Ellen Troutman-Zaig • Interior Designer: David Futato • Cover Designer: Karen Montgomery • Illustrator: Rebecca Demarest • March 2017: First Edition Revision History for the First Edition • 2017-03-10: First Release See http://oreilly.com/catalog/errata.csp?isbn=9781491964927 for release details. The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Refactoring JavaScript, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights. -

1 Combinatorial Methods in Testing

1 Combinatorial Methods in Testing Developers of large software systems often notice an interesting phenomenon: if usage of an application suddenly increases, components that have been working correctly develop previously undetected failures. For example, the application may have been installed with a different OS or DBMS system from what was used previously, or newly added customers may have account records with combinations of values that have not occurred before. Some of these rare combinations trigger failures that have escaped previous testing and extensive use. Such failures are known as interaction failures, because they are only exposed when two or more input values interact to cause the program to reach an incorrect result. 1.1 Software Failures and the Interaction Rule Interaction failures are one of the primary reasons why software testing is so difficult. If failures only depended on one variable value at a time, we could simply test each value once, or for continuous-valued variables, one value from each representative range. If our application had inputs with v values each, this would only require a total of v tests – one value from each input per test. Unfortunately, the situation is much more complicated than this. Combinatorial testing can help detect problems like those described above early in the testing life cycle. The key insight underlying t-way combinatorial testing is that not every parameter contributes to every failure and most failures are triggered by a single parameter value or interactions between a relatively small number of parameters (for more on the number of parameters interacting in failures, see Appendix B). -
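The excerpt above notes that if failures depended on one value at a time, testing each value once would suffice. A minimal sketch of why that breaks down for interaction failures: it builds such a one-value-at-a-time suite and counts the two-way value combinations it never exercises. The parameter names and values are hypothetical, chosen only to echo the OS/DBMS/account example in the text, and the helper functions are illustrative rather than taken from the source.

```python
from itertools import combinations, product


def each_choice_tests(parameters):
    """Build a suite in which every value of every input appears at least once."""
    width = max(len(values) for values in parameters.values())
    return [
        {name: values[i % len(values)] for name, values in parameters.items()}
        for i in range(width)
    ]


def uncovered_pairs(parameters, tests):
    """Return the two-way value combinations that no test in the suite exercises."""
    missing = set()
    for a, b in combinations(parameters, 2):
        for va, vb in product(parameters[a], parameters[b]):
            if not any(t[a] == va and t[b] == vb for t in tests):
                missing.add(((a, va), (b, vb)))
    return missing


# Hypothetical inputs with three values each (v = 3).
parameters = {
    "os": ["linux", "windows", "macos"],
    "dbms": ["postgres", "mysql", "oracle"],
    "account": ["new", "legacy", "suspended"],
}

tests = each_choice_tests(parameters)             # v tests in total
exhaustive = list(product(*parameters.values()))  # every full combination
missing = uncovered_pairs(parameters, tests)

print(len(tests), "single-value tests vs", len(exhaustive), "exhaustive tests")
print(len(missing), "two-way combinations are never exercised")
```

With these inputs the single-value suite has 3 tests against 27 exhaustive ones, and it leaves 18 of the 27 possible value pairs untested. That gap is the space where the interaction failures described in the excerpt can hide, and it is what a t-way suite with t = 2 is meant to close.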

When and Why Your Code Starts to Smell Bad (And Whether the Smells Go Away)

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TSE.2017.2653105, IEEE Transactions on Software Engineering When and Why Your Code Starts to Smell Bad (and Whether the Smells Go Away) Michele Tufano1, Fabio Palomba2, Gabriele Bavota3, Rocco Oliveto4, Massimiliano Di Penta5, Andrea De Lucia2, Denys Poshyvanyk1 1The College of William and Mary, Williamsburg, VA, USA 2University of Salerno, Fisciano (SA), Italy, 3Università della Svizzera italiana (USI), Switzerland, 4University of Molise, Pesche (IS), Italy, 5University of Sannio, Benevento (BN), Italy [email protected], [email protected], [email protected] [email protected], [email protected], [email protected], [email protected] Abstract—Technical debt is a metaphor introduced by Cunningham to indicate “not quite right code which we postpone making it right”. One noticeable symptom of technical debt is represented by code smells, defined as symptoms of poor design and implementation choices. Previous studies showed the negative impact of code smells on the comprehensibility and maintainability of code. While the repercussions of smells on code quality have been empirically assessed, there is still only anecdotal evidence on when and why bad smells are introduced, what is their survivability, and how they are removed by developers. To empirically corroborate such anecdotal evidence, we conducted a large empirical study over the change history of 200 open source projects. This study required the development of a strategy to identify smell-introducing commits, the mining of over half a million of commits, and the manual analysis and classification of over 10K of them. -

The-Art-Of-Readable-Code.Pdf

The Art of Readable Code Dustin Boswell and Trevor Foucher Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo The Art of Readable Code by Dustin Boswell and Trevor Foucher Copyright © 2012 Dustin Boswell and Trevor Foucher. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Mary Treseler Indexer: Potomac Indexing, LLC Production Editor: Teresa Elsey Cover Designer: Susan Thompson Copyeditor: Nancy Wolfe Kotary Interior Designer: David Futato Proofreader: Teresa Elsey Illustrators: Dave Allred and Robert Romano November 2011: First Edition. Revision History for the First Edition: 2011-11-01 First release See http://oreilly.com/catalog/errata.csp?isbn=9780596802295 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. The Art of Readable Code, the image of sheet music, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and authors assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. -

The Great Methodologies Debate: Part 2

ACCESS TO THE EXPERTS The Journal of Information Technology Management January 2002 Vol. 15, No. 1 The Great Methodologies Debate: Part 2 Resolved “Today, a new debate rages: agile software Traditional methodologists development versus rigorous software are a bunch of process- development.” dependent stick-in-the-muds who’d rather produce flawless Jim Highsmith, Guest Editor documentation than a working system that meets business needs. Opening Statement Rebuttal Jim Highsmith 2 Lightweight, er, “agile” methodologists are a bunch of glorified hackers who are going to be in for a heck of a surprise Agile Software Development Joins the when they try to scale up their “Would-Be” Crowd “toys” into enterprise-level Alistair Cockburn 6 software. The Bogus War Stephen J. Mellor 13 A Resounding “Yes” to Agile Processes — But Also to More Ivar Jacobson 18 Agile or Rigorous OO Methodologies: Getting the Best of Both Worlds Brian Henderson-Sellers 25 Big and Agile? Matt Simons 34 Opening Statement by Jim Highsmith Who wants to fly a wet beer mat? rigorous (or whatever label one expect significant variations in the What is the opposite of a Bengal chooses) methodologies a others. High-level CMM organiza- tiger? For answers to these and chaordic perspective, collab- tions tout their ability to achieve other intriguing questions, read on! orative values and principles, and their planned goals most of the barely sufficient methodology. A time (99.5% was reported in one As I’ve read through the articles chaordic perspective arises from article on a new Level 5 organiza- for the two methodology debate the recognition and acceptance of tion [1]). -

Introduction to Software Development

Warwick Research Software Engineering. Introduction to Software Development. H. Ratcliffe and C.S. Brady, Senior Research Software Engineers. "The Angry Penguin", used under creative commons licence from Swantje Hess and Jannis Pohlmann. December 10, 2017.
Contents
Preface
0.1 About these Notes
0.2 Disclaimer
0.3 Example Programs
0.4 Code Snippets
0.5 Glossaries and Links
1 Introduction to Software Development
1.1 Basic Software Design
1.2 Aside - Programming Paradigms
1.3 How to Create a Program From a Blank Editor
1.4 Patterns and Red Flags
1.5 Practical Design
1.6 Documentation Strategies
1.7 Getting Data in and out of your Code
1.8 Sharing Code
Glossary - Software Development
2 Principles of Testing and Debugging
2.1 What is a bug?
2.2 Bug Catalogue
2.3 Non Bugs or "Why doesn't it just..."
2.4 Aside - History of the Bug and Debugging
2.5 Your Compiler (or Interpreter) Wants to Help You
2.6 Basic Debugging
2.7 Assertions and Preconditions
2.8 Testing Principles -

Writing Secure Code

Writing Secure Code, Second Edition. Proven techniques from the security experts to help keep hackers at bay—now updated with lessons from the Microsoft security pushes. Hackers cost countless dollars and cause endless worry every year as they attack networked applications, steal credit-card numbers, deface Web sites, and slow network traffic to a crawl. Learn techniques that can help keep the bad guys at bay with this entertaining, eye-opening book—now updated with the latest security threats plus lessons learned from the recent security pushes at Microsoft. You’ll see how to padlock your applications throughout development—from designing secure applications to writing robust code that can withstand repeated attacks to testing applications for security flaws. Easily digested chapters explain security principles, strategies, and coding techniques that can help make your code more resistant to attack. The authors—two battle-scarred veterans who have solved some of the industry’s toughest security problems—provide sample code to demonstrate specific development techniques. ”During the past two decades, computers have revolutionized the way we live. They are now part of every critical infrastructure, from telecommunications to banking to transportation, and they contain vast amounts of sensitive data, such as personal health and financial records. Building secure software is now more critical than ever to protecting our future, and every software developer must learn how to integrate security into all their projects. Writing Secure Code, which is required reading at Microsoft and which -

Hh334.Pdf (3.880Mb)

THE APPSMITHS: COMMUNITY, IDENTITY, AFFECT AND IDEOLOGY AMONG COCOA DEVELOPERS FROM NEXT TO IPHONE A Dissertation Presented to the Faculty of the Graduate School of Cornell University In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Hansen Hsu May 2015 © 2015 Hansen Hsu THE APPSMITHS: COMMUNITY, IDENTITY, AFFECT AND IDEOLOGY AMONG COCOA DEVELOPERS FROM NEXT TO IPHONE Hansen Hsu, Ph.D. Cornell University 2015 This dissertation is an ethnographic study, accomplished through semi-structured interviews and participant observation, of the cultural world of third party Apple software developers who use Apple’s Cocoa libraries to create apps. It answers the questions: what motivates Apple developers’ devotion to Cocoa technology, and why do they believe it is a superior programming environment? What does it mean to be a “good” Cocoa programmer, technically and morally, in the Cocoa community of practice, and how do people become one? I argue that in this culture, ideologies, normative values, identities, affects, and practices interact with each other and with Cocoa technology in a seamless web, which I call a “techno-cultural frame.” This frame includes the construction of a developer’s identity as a vocational craftsman, and a utopian vision of software being developed by millions of small-scale freelance developers, or “indies,” rather than corporations. This artisanal production is made possible by the productivity gains of Cocoa technology, which ironically makes indies dependent on Apple for tools. This contradiction is reconciled through quasi-religious narratives about Apple and Steve Jobs, which enrolls developers into seeing themselves as partners in a shared mission with Apple to empower users with technology. -

Arcade Documentation Release 2021

Arcade Documentation, Release 2021. Paul Vincent Craven. Sep 22, 2021.
Contents
1 Chapters
1.1 Foreword
1.2 Setting Up Your System
1.3 Version Control Systems
1.4 The Print Function
1.5 How to Draw with Your Computer
1.6 What is a Programming Language?
1.7 Variables and Expressions
1.8 Creating Functions
1.9 Drawing With Functions
1.10 If Statements
1.11 For Loops
1.12 While Loops
1.13 Random Numbers
1.14 Advanced Looping
1.15 Introduction to Lists
1.16 Classes, Constructors, and Attributes
1.17 Class Methods
1.18 Using the Window Class
1.19 User Control
1.20 Sound Effects
1.21 Sprites And Collisions -

Oreilly.Beautiful.Code.Jun.2007.Pdf

Beautiful Code. Other resources from O’Reilly. Related titles: Applied Software Project Management; The Art of Project Management; Database in Depth; Essential Business Process Modeling; Head First Design Patterns; Head First Object-Oriented Analysis & Design; Mastering Regular Expressions; The Myths of Innovation; Prefactoring; Process Improvement Essentials. oreilly.com: oreilly.com is more than a complete catalog of O’Reilly books. You’ll also find links to news, events, articles, weblogs, sample chapters, and code examples. oreillynet.com is the essential portal for developers interested in open and emerging technologies, including new platforms, programming languages, and operating systems. Conferences: O’Reilly brings diverse innovators together to nurture the ideas that spark revolutionary industries. We specialize in documenting the latest tools and systems, translating the innovator’s knowledge into useful skills for those in the trenches. Visit conferences.oreilly.com for our upcoming events. Safari Bookshelf (safari.oreilly.com) is the premier online reference library for programmers and IT professionals. Conduct searches across more than 1,000 books. Subscribers can zero in on answers to time-critical questions in a matter of seconds. Read the books on your Bookshelf from cover to cover or simply flip to the page you need. Try it today for free. Beautiful Code Edited by Andy Oram and Greg Wilson Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Beautiful Code Edited by Andy Oram and Greg Wilson Copyright © 2007 O’Reilly Media, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc. 1005 Gravenstein Highway North, Sebastopol, CA 95472 O’Reilly books may be purchased for educational, business, or sales promotional use.