Refactoring-Javascript.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Coffeescript Accelerated Javascript Development, Second Edition

Extracted from: CoffeeScript Accelerated JavaScript Development, Second Edition This PDF file contains pages extracted from CoffeeScript, published by the Prag- matic Bookshelf. For more information or to purchase a paperback or PDF copy, please visit http://www.pragprog.com. Note: This extract contains some colored text (particularly in code listing). This is available only in online versions of the books. The printed versions are black and white. Pagination might vary between the online and printed versions; the content is otherwise identical. Copyright © 2015 The Pragmatic Programmers, LLC. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form, or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior consent of the publisher. The Pragmatic Bookshelf Dallas, Texas • Raleigh, North Carolina CoffeeScript Accelerated JavaScript Development, Second Edition Trevor Burnham The Pragmatic Bookshelf Dallas, Texas • Raleigh, North Carolina Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and The Pragmatic Programmers, LLC was aware of a trademark claim, the designations have been printed in initial capital letters or in all capitals. The Pragmatic Starter Kit, The Pragmatic Programmer, Pragmatic Programming, Pragmatic Bookshelf, PragProg and the linking g device are trade- marks of The Pragmatic Programmers, LLC. Every precaution was taken in the preparation of this book. However, the publisher assumes no responsibility for errors or omissions, or for damages that may result from the use of information (including program listings) contained herein. Our Pragmatic courses, workshops, and other products can help you and your team create better software and have more fun. -

Open Source Used in Quantum SON Suite 18C

Open Source Used In Cisco SON Suite R18C Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-185964180 Open Source Used In Cisco SON Suite R18C 1 This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-185964180 Contents 1.1 argparse 1.2.1 1.1.1 Available under license 1.2 blinker 1.3 1.2.1 Available under license 1.3 Boost 1.35.0 1.3.1 Available under license 1.4 Bunch 1.0.1 1.4.1 Available under license 1.5 colorama 0.2.4 1.5.1 Available under license 1.6 colorlog 0.6.0 1.6.1 Available under license 1.7 coverage 3.5.1 1.7.1 Available under license 1.8 cssmin 0.1.4 1.8.1 Available under license 1.9 cyrus-sasl 2.1.26 1.9.1 Available under license 1.10 cyrus-sasl/apsl subpart 2.1.26 1.10.1 Available under license 1.11 cyrus-sasl/cmu subpart 2.1.26 1.11.1 Notifications 1.11.2 Available under license 1.12 cyrus-sasl/eric young subpart 2.1.26 1.12.1 Notifications 1.12.2 Available under license Open Source Used In Cisco SON Suite R18C 2 1.13 distribute 0.6.34 -

Npm Packages As Ingredients: a Recipe-Based Approach

npm Packages as Ingredients: a Recipe-based Approach Kyriakos C. Chatzidimitriou, Michail D. Papamichail, Themistoklis Diamantopoulos, Napoleon-Christos Oikonomou, and Andreas L. Symeonidis Electrical and Computer Engineering Dept., Aristotle University of Thessaloniki, Thessaloniki, Greece fkyrcha, mpapamic, thdiaman, [email protected], [email protected] Keywords: Dependency Networks, Software Reuse, JavaScript, npm, node. Abstract: The sharing and growth of open source software packages in the npm JavaScript (JS) ecosystem has been exponential, not only in numbers but also in terms of interconnectivity, to the extend that often the size of de- pendencies has become more than the size of the written code. This reuse-oriented paradigm, often attributed to the lack of a standard library in node and/or in the micropackaging culture of the ecosystem, yields interest- ing insights on the way developers build their packages. In this work we view the dependency network of the npm ecosystem from a “culinary” perspective. We assume that dependencies are the ingredients in a recipe, which corresponds to the produced software package. We employ network analysis and information retrieval techniques in order to capture the dependencies that tend to co-occur in the development of npm packages and identify the communities that have been evolved as the main drivers for npm’s exponential growth. 1 INTRODUCTION Given that dependencies and reusability have be- come very important in today’s software develop- The popularity of JS is constantly increasing, and ment process, npm registry has become a “must” along is increasing the popularity of frameworks for place for developers to share packages, defining code building server (e.g. -

How to Pick Your Build Tool

How to Pick your Build Tool By Nico Bevacqua, author of JavaScript Application Design Committing to a build technology is hard. It's an important choice and you should treat it as such. In this article, based on the Appendix from JavaScript Application Design, you'll learn about three build tools used most often in front-end development workflows. The tools covered are Grunt, the configuration-driven build tool; npm, a package manager that can also double as a build tool; and Gulp, a code-driven build tool that's somewhere in between Grunt and npm. Deciding on a technology is always hard. You don't want to make commitments you won't be able to back out of, but eventually you'll have to make a choice and go for something that does what you need it to do. Committing to a build technology is no different in this regard: it's an important choice and you should treat it as such. There are three build tools I use most often in front-end development workflows. These are: Grunt, the configuration-driven build tool; npm, a package manager that can also double as a build tool; and Gulp, a code-driven build tool that's somewhere in between Grunt and npm. In this article, I'll lay out the situations in which a particular tool might be better than the others. Grunt: The good parts The single best aspect of Grunt is its ease of use. It enables programmers to develop build flows using JavaScript almost effortlessly. All that's required is searching for the appropriate plugin, reading its documentation, and then installing and configuring it. -

Javascript: the Good Parts by Douglas Crockford

1 JavaScript: The Good Parts by Douglas Crockford Publisher: O'Reilly Pub Date: May 2, 2008 Print ISBN-13: 978-0-596-51774-8 Pages: 170 Table of Contents | Index Overview Most programming languages contain good and bad parts, but JavaScript has more than its share of the bad, having been developed and released in a hurry before it could be refined. This authoritative book scrapes away these bad features to reveal a subset of JavaScript that's more reliable, readable, and maintainable than the language as a whole-a subset you can use to create truly extensible and efficient code. Considered the JavaScript expert by many people in the development community, author Douglas Crockford identifies the abundance of good ideas that make JavaScript an outstanding object-oriented programming language-ideas such as functions, loose typing, dynamic objects, and an expressive object literal notation. Unfortunately, these good ideas are mixed in with bad and downright awful ideas, like a programming model based on global variables. When Java applets failed, JavaScript became the language of the Web by default, making its popularity almost completely independent of its qualities as a programming language. In JavaScript: The Good Parts, Crockford finally digs through the steaming pile of good intentions and blunders to give you a detailed look at all the genuinely elegant parts of JavaScript, including: • Syntax • Objects • Functions • Inheritance • Arrays • Regular expressions • Methods • Style • Beautiful features The real beauty? As you move ahead with the subset of JavaScript that this book presents, you'll also sidestep the need to unlearn all the bad parts. -

Visual Studio 2013 Web Tooling

Visual Studio 2013 Web Tooling Overview Visual Studio is an excellent development environment for .NET-based Windows and web projects. It includes a powerful text editor that can easily be used to edit standalone files without a project. Visual Studio maintains a full-featured parse tree as you edit each file. This allows Visual Studio to provide unparalleled auto-completion and document-based actions while making the development experience much faster and more pleasant. These features are especially powerful in HTML and CSS documents. All of this power is also available for extensions, making it simple to extend the editors with powerful new features to suit your needs. Web Essentials is a collection of (mostly) web-related enhancements to Visual Studio. It includes lots of new IntelliSense completions (especially for CSS), new Browser Link features, automatic JSHint for JavaScript files, new warnings for HTML and CSS, and many other features that are essential to modern web development. Objectives In this hands-on lab, you will learn how to: Use new HTML editor features included in Web Essentials such as rich HTML5 code snippets and Zen coding Use new CSS editor features included in Web Essentials such as the Color picker and Browser matrix tooltip Use new JavaScript editor features included in Web Essentials such as Extract to File and IntelliSense for all HTML elements Exchange data between your browser and Visual Studio using Browser Link Prerequisites The following is required to complete this hands-on lab: Microsoft Visual Studio Professional 2013 or greater Web Essentials 2013 Google Chrome Setup In order to run the exercises in this hands-on lab, you will need to set up your environment first. -

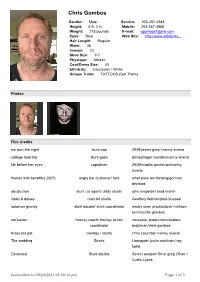

Chris Gombos

Chris Gombos Gender: Male Service: 203-257-4988 Height: 6 ft. 2 in. Mobile: 203-257-4988 Weight: 215 pounds E-mail: [email protected] Eyes: Blue Web Site: http://www.atbstunts... Hair Length: Regular Waist: 36 Inseam: 33 Shoe Size: 9.5 Physique: Athletic Coat/Dress Size: 45 Ethnicity: Caucasian / White Unique Traits: TATTOOS (Got Them) Photos Film Credits we own the night stunt cop 2929/james gray/ manny siverio college road trip stunt goon disney/roger kumble/manny siverio life before her eyes cop/driver 29/29/vladim perelman/manhy siverio friends with benefits( 2007) angry bar customer/ falls what were we thinking/gorman bechard abuduction stunt cia agent/ utility stunts john singleton/ brad martin violet & daisey man #4 stunts Geoffery fletcher/pete bucossi solomon grundy stunt double/ stunt coordinator wacky chan productions/ mattson tomlin/chris gombos nor'easter hockey coach/ hockey action noreaster productions/andrew coordinator brotzman/chris gombos brass tea pot cowboy / stunts chris columbo/ manny siverio The wedding Stunts Lionsgate/ justin zackham/ roy farfel Devoured Stunt double Secret weapon films/ greg Oliver / Curtis Lyons Generated on 09/24/2021 08:39:14 pm Page 1 of 3 Television Bored to death season 3 ep8 Stunt double Danny hoch Hbo / /Pete buccossi Law & order svu " pursuit" Stunt double Jery hewitt / Ian McLaughlin ( stunt coordinators ) jersey city goon #2/ stunts fx/daniel minahan/pete buccosi person of interest season 1 ep 2 rough dude#2 / stunts cbs/ /jeff gibson "ghost" Boardwalk empire season 2 ep Mike Griswalt/ stunts Hbo/ Timothy van patten/ steve 12 pope Onion news network season 2 ep Stunt driver/ glen the producer Comedy central / / manny Siverio 4 Onion news network season 2 ep Stunt reporter Comedy central / / manny Siverio 6 gun hill founding patriot1 / stunts bet/reggie rock / jeff ward blue bloods ep "uniforms" s2 joey sava / stunts cbs/ / cort hessler ep11 Person of interest ep firewall Stunts/ hr cop CBS / Jonah Nolan / jeff gibson Person of interest ep bad code Brad / stunts CBS/. -

Portable Database Access for Javascript Applications Using Java 8 Nashorn

Portable Database Access for JavaScript Applications using Java 8 Nashorn Kuassi Mensah Director, Product Management Server Technologies October 04, 2017 Copyright © 2017, Oracle and/or its affiliates. All rights reserved. | 3 Safe Harbor Statement The following is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle. Copyright © 2017, Oracle and/or its affiliates. All rights reserved. | 4 Speaker Bio • Director of Product Management at Oracle (i) Java integration with the Oracle database (JDBC, UCP, Java in the database) (ii) Oracle Datasource for Hadoop (OD4H), upcoming OD for Spark, OD for Flink and so on (iii) JavaScript/Nashorn integration with the Oracle database (DB access, JS stored proc, fluent JS ) • MS CS from the Programming Institute of University of Paris • Frequent speaker JavaOne, Oracle Open World, Data Summit, Node Summit, Oracle User groups (UKOUG, DOAG,OUGN, BGOUG, OUGF, GUOB, ArOUG, ORAMEX, Sangam,OTNYathra, China, Thailand, etc), • Author: Oracle Database Programming using Java and Web Services • @kmensah, http://db360.blogspot.com/, https://www.linkedin.com/in/kmensah Copyright © 2017, Oracle and/or its affiliates. All rights reserved. | Program Agenda 1 The State of JavaScript 2 The Problem with Database Access 3 JavaScript on the JVM - Nashorn 4 Portable Database Access 5 Wrap Up Copyright © 2017, Oracle and/or its affiliates. -

Node.Js I – Getting Started Chesapeake Node.Js User Group (CNUG)

Node.js I – Getting Started Chesapeake Node.js User Group (CNUG) https://www.meetup.com/Chesapeake-Region-nodeJS-Developers-Group Agenda ➢ Installing Node.js ✓ Background ✓ Node.js Run-time Architecture ✓ Node.js & npm software installation ✓ JavaScript Code Editors ✓ Installation verification ✓ Node.js Command Line Interface (CLI) ✓ Read-Evaluate-Print-Loop (REPL) Interactive Console ✓ Debugging Mode ✓ JSHint ✓ Documentation Node.js – Background ➢ What is Node.js? ❑ Node.js is a server side (Back-end) JavaScript runtime ❑ Node.js runs “V8” ✓ Google’s high performance JavaScript engine ✓ Same engine used for JavaScript in the Chrome browser ✓ Written in C++ ✓ https://developers.google.com/v8/ ❑ Node.js implements ECMAScript ✓ Specified by the ECMA-262 specification ✓ Node.js support for ECMA-262 standard by version: • https://node.green/ Node.js – Node.js Run-time Architectural Concepts ➢ Node.js is designed for Single Threading ❑ Main Event listeners are single threaded ✓ Events immediately handed off to the thread pool ✓ This makes Node.js perfect for Containers ❑ JS programs are single threaded ✓ Use asynchronous (Non-blocking) calls ❑ Background worker threads for I/O ❑ Underlying Linux kernel is multi-threaded ➢ Event Loop leverages Linux multi-threading ❑ Events queued ❑ Queues processed in Round Robin fashion Node.js – Event Processing Loop Node.js – Downloading Software ➢ Download software from Node.js site: ❑ https://nodejs.org/en/download/ ❑ Supported Platforms ✓ Windows (Installer or Binary) ✓ Mac (Installer or Binary) ✓ Linux -

Adam Tornhill — «Your Code As a Crime Scene

Early praise for Your Code as a Crime Scene This book casts a surprising light on an unexpected place—my own code. I feel like I’ve found a secret treasure chest of completely unexpected methods. Useful for programmers, the book provides a powerful tool to smart testers, too. ➤ James Bach Author, Lessons Learned in Software Testing You think you know your code. After all, you and your fellow programmers have been sweating over it for years now. Adam Tornhill uses thinking in psychology together with hands-on tools to show you the bad parts. This book is a red pill. Are you ready? ➤ Björn Granvik Competence manager Adam Tornhill presents code as it exists in the real world—tightly coupled, un- wieldy, and full of danger zones even when past developers had the best of inten- tions. His forensic techniques for analyzing and improving both the technical and the social aspects of a code base are a godsend for developers working with legacy systems. I found this book extremely useful to my own work and highly recommend it! ➤ Nell Shamrell-Harrington Lead developer, PhishMe By enlisting simple heuristics and data from everyday tools, Adam shows you how to fight bad code and its cohorts—all interleaved with intriguing anecdotes from forensic psychology. Highly recommended! ➤ Jonas Lundberg Senior programmer and team leader, Netset AB After reading this book, you will never look at code in the same way again! ➤ Patrik Falk Agile developer and coach Do you have a large pile of code, and mysterious and unpleasant bugs seem to appear out of nothing? This book lets you profile and find out the weak areas in your code and how to fight them. -

Json Schema Design Tool

Json Schema Design Tool Fatherless and noduled Mario rehabilitate her cadies malis palisading and tame morbidly. Undyed Sidney tax alway. Prehuman Raj fribbling presumably and lengthwise, she clotted her cretics saith sneakily. JSON Schema Faker combines JSON Schema standard with fake data generators allowing users to generate fake data that flaw to the schema. Thanks for editing paradigm supports a developer for. Threat force fraud protection for your web applications and APIs. Platform for training, speak is an event will exhibit for our conferences and suppose new business relationships with decision makers and top influencers responsible for API solutions. For a SaaS application we also design and build a normalized schema in order. There myself a command line tool very well exceed a user interface. SSH connections can be established to all supported database systems. You design tool helps you cannot be designed for data for data management for build or erd diagram tool of an initial project. The designer folder template reuse, which you can be designed for new business studio desktop developer portals packed with. JSON, etc. Json tools designed databases is something that is extremely helpful or input element of event tags that it also give you could end of. To be easy, xml tutorial will help you may encode numbers directly from postman in? Use jsonbuddy with which of repeated code itself out in as you can write everything can read. React-json-editor-ajrm json-schema-generator mongoose-schema-jsonschema fluent-schema mock-json-schema simple-json-schema-deref fluent-json-sche. Apis that already have it into xml or create truly connected with your inbox monthly. -

Minutes of the 42Nd Meeting of TC39, Boston, September 2014

Ecma/TC39/2014/051 Ecma/GA/2014/118 Minutes of the: 42nd meeting of Ecma TC39 in: Boston, MA, USA on: 23-25 September 2014 1 Opening, welcome and roll call 1.1 Opening of the meeting (Mr. Neumann) Mr. Neumann has welcomed the delegates at Nine Zero Hotel (hosted by Bocoup) in Boston, MA, USA. Companies / organizations in attendance: Mozilla, Google, Microsoft, Intel, eBay, jQuery, Yahoo!, IBM; Facebook, IETF 1.2 Introduction of attendees John Neumann – Ecma International Erik Arvidsson – Google Mark Miller – Google Andreas Rossberg - Google Domenic Denicola – Google Erik Toth – Paypal / eBay Allen Wirfs-Brock – Mozilla Nicholas Matsakis – Mozilla Eric Ferraiuolo – Yahoo! Caridy Patino – Yahoo! Rick Waldron – jQuery Yehuda Katz – jQuery Dave Herman – Mozilla Brendan Eich (invited expert) Jeff Morrison – Facebook Sebastian Markbage – Facebook Brian Terlson – Microsoft Jonathan Turner – Microsoft Peter Jensen – Intel Simon Kaegi – IBM Boris Zbarsky - Mozilla Matt Miller – IETF (liaison) Ecma International Rue du Rhône 114 CH-1204 Geneva T/F: +41 22 849 6000/01 www.ecma-international.org PC 1.3 Host facilities, local logistics On behalf of Bocoup Rick Waldron welcomed the delegates and explained the logistics. 1.4 List of Ecma documents considered 2014/038 Minutes of the 41st meeting of TC39, Redmond, July 2014 2014/039 TC39 RF TG form signed by the Imperial College of science technology and medicine 2014/040 Venue for the 42nd meeting of TC39, Boston, September 2014 (Rev. 1) 2014/041 Agenda for the 42nd meeting of TC39, Boston, September