Search for Neutrinos from Annihilating Dark Matter in Galaxies and Galaxy Clusters with the Icecube Detector

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Star Maps: Where Are the Black Holes?

BLACK HOLE FAQ’s 1. What is a black hole? A black hole is a region of space that has so much mass concentrated in it that there is no way for a nearby object to escape its gravitational pull. There are three kinds of black hole that we have strong evidence for: a. Stellar-mass black holes are the remaining cores of massive stars after they die in a supernova explosion. b. Mid-mass black hole in the centers of dense star clusters Credit : ESA, NASA, and F. Mirabel c. Supermassive black hole are found in the centers of many (and maybe all) galaxies. 2. Can a black hole appear anywhere? No, you need an amount of matter more than 3 times the mass of the Sun before it can collapse to create a black hole. 3. If a star dies, does it always turn into a black hole? No, smaller stars like our Sun end their lives as dense hot stars called white dwarfs. Much more massive stars end their lives in a supernova explosion. The remaining cores of only the most massive stars will form black holes. 4. Will black holes suck up all the matter in the universe? No. A black hole has a very small region around it from which you can't escape, called the “event horizon”. If you (or other matter) cross the horizon, you will be pulled in. But as long as you stay outside of the horizon, you can avoid getting pulled in if you are orbiting fast enough. 5. What happens when a spaceship you are riding in falls into a black hole? Your spaceship, along with you, would be squeezed and stretched until it was torn completely apart as it approached the center of the black hole. -

The Sky Tonight

MARCH POUTŪ-TE-RANGI HIGHLIGHTS Conjunction of Saturn and the Moon A conjunction is when two astronomical objects appear close in the sky as seen THE- SKY TONIGHT- - from Earth. The planets, along with the TE AHUA O TE RAKI I TENEI PO Sun and the Moon, appear to travel across Brightest Stars our sky roughly following a path called the At this time of the year, we can see the ecliptic. Each body travels at its own speed, three brightest stars in the night sky. sometimes entering ‘retrograde’ where they The brightness of a star, as seen from seem to move backwards for a period of time Earth, is measured as its apparent (though the backwards motion is only from magnitude. Pictured on the cover is our vantage point, and in fact the planets Sirius, the brightest star in our night sky, are still orbiting the Sun normally). which is 8.6 light-years away. Sometimes these celestial bodies will cross With an apparent magnitude of −1.46, paths along the ecliptic line and occupy the this star can be found in the constellation same space in our sky, though they are still Canis Major, high in the northern sky. millions of kilometres away from each other. Sirius is actually a binary star system, consisting of Sirius A which is twice the On March 19, the Moon and Saturn will be size of the Sun, and a faint white dwarf in conjunction. While the unaided eye will companion named Sirius B. only see Saturn as a bright star-like object (Saturn is the eighth brightest object in our Sirius is almost twice as bright as the night sky), a telescope can offer a spectacular second brightest star in the night sky, view of the ringed planet close to our Moon. -

Stargazer Vice President: James Bielaga (425) 337-4384 Jamesbielaga at Aol.Com P.O

1 - Volume MMVII. No. 1 January 2007 President: Mark Folkerts (425) 486-9733 folkerts at seanet.com The Stargazer Vice President: James Bielaga (425) 337-4384 jamesbielaga at aol.com P.O. Box 12746 Librarian: Mike Locke (425) 259-5995 mlocke at lionmts.com Everett, WA 98206 Treasurer: Carol Gore (360) 856-5135 janeway7C at aol.com Newsletter co-editor: Bill O’Neil (774) 253-0747 wonastrn at seanet.com Web assistance: Cody Gibson (425) 348-1608 sircody01 at comcast.net See EAS website at: (change ‘at’ to @ to send email) http://members.tripod.com/everett_astronomy nearby Diablo Lake. And then at night, discover the night sky like EAS BUSINESS… you've never seen it before. We hope you'll join us for a great weekend. July 13-15, North Cascades Environmental Learning Center North Cascades National Park. More information NEXT EAS MEETING – SATURDAY JANUARY 27TH including pricing, detailed program, and reservation forms available shortly, so please check back at Pacific Science AT 3:00 PM AT THE EVERETT PUBLIC LIBRARY, IN Center's website. THE AUDITORIUM (DOWNSTAIRS) http://www.pacsci.org/travel/astronomy_weekend.html People should also join and send mail to the mail list THIS MONTH'S MEETING PROGRAM: [email protected] to coordinate spur-of-the- Toby Smith, lecturer from the University of Washington moment observing get-togethers, on nights when the sky Astronomy department, will give a talk featuring a clears. We try to hold informal close-in star parties each month visualization presentation he has prepared called during the spring, summer, and fall months on a weekend near “Solar System Cinema”. -

Turning the Tides on the Ultra-Faint Dwarf Spheroidal Galaxies: Coma

Turning the Tides on the Ultra-Faint Dwarf Spheroidal Galaxies: Coma Berenices and Ursa Major II1 Ricardo R. Mu˜noz2, Marla Geha2, & Beth Willman3 ABSTRACT We present deep CFHT/MegaCam photometry of the ultra-faint Milky Way satellite galaxies Coma Berenices (ComBer) and Ursa Major II (UMa II). These data extend to r ∼ 25, corresponding to three magnitudes below the main se- quence turn-offs in these galaxies. We robustly calculate a total luminosity of MV = −3.8±0.6 for ComBer and MV = −3.9±0.5 for UMa II, in agreement with previous results. ComBer shows a fairly regular morphology with no signs of ac- tive tidal stripping down to a surface brightness limit of 32.4 mag arcsec−2. Using a maximum likelihood analysis, we calculate the half-light radius of ComBer tobe ′ rhalf = 74±4pc (5.8±0.3 ) and its ellipticity ǫ =0.36±0.04. In contrast, UMa II shows signs of on-going disruption. We map its morphology down to µV = 32.6 mag arcsec−2 and found that UMa II is larger than previously determined, ex- tending at least ∼ 700pc (1.2◦ on the sky) and it is also quite elongated with an ellipticity of ǫ = 0.50 ± 0.2. However, our estimate for the half-light radius, 123 ± 3pc (14.1 ± 0.3′) is similar to previous results. We discuss the implications of these findings in the context of potential indirect dark matter detections and galaxy formation. We conclude that while ComBer appears to be a stable dwarf galaxy, UMa II shows signs of on-going tidal interaction. -

And Ecclesiastical Cosmology

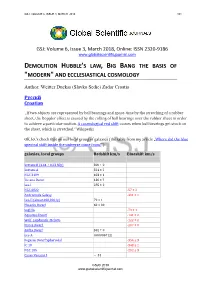

GSJ: VOLUME 6, ISSUE 3, MARCH 2018 101 GSJ: Volume 6, Issue 3, March 2018, Online: ISSN 2320-9186 www.globalscientificjournal.com DEMOLITION HUBBLE'S LAW, BIG BANG THE BASIS OF "MODERN" AND ECCLESIASTICAL COSMOLOGY Author: Weitter Duckss (Slavko Sedic) Zadar Croatia Pусскй Croatian „If two objects are represented by ball bearings and space-time by the stretching of a rubber sheet, the Doppler effect is caused by the rolling of ball bearings over the rubber sheet in order to achieve a particular motion. A cosmological red shift occurs when ball bearings get stuck on the sheet, which is stretched.“ Wikipedia OK, let's check that on our local group of galaxies (the table from my article „Where did the blue spectral shift inside the universe come from?“) galaxies, local groups Redshift km/s Blueshift km/s Sextans B (4.44 ± 0.23 Mly) 300 ± 0 Sextans A 324 ± 2 NGC 3109 403 ± 1 Tucana Dwarf 130 ± ? Leo I 285 ± 2 NGC 6822 -57 ± 2 Andromeda Galaxy -301 ± 1 Leo II (about 690,000 ly) 79 ± 1 Phoenix Dwarf 60 ± 30 SagDIG -79 ± 1 Aquarius Dwarf -141 ± 2 Wolf–Lundmark–Melotte -122 ± 2 Pisces Dwarf -287 ± 0 Antlia Dwarf 362 ± 0 Leo A 0.000067 (z) Pegasus Dwarf Spheroidal -354 ± 3 IC 10 -348 ± 1 NGC 185 -202 ± 3 Canes Venatici I ~ 31 GSJ© 2018 www.globalscientificjournal.com GSJ: VOLUME 6, ISSUE 3, MARCH 2018 102 Andromeda III -351 ± 9 Andromeda II -188 ± 3 Triangulum Galaxy -179 ± 3 Messier 110 -241 ± 3 NGC 147 (2.53 ± 0.11 Mly) -193 ± 3 Small Magellanic Cloud 0.000527 Large Magellanic Cloud - - M32 -200 ± 6 NGC 205 -241 ± 3 IC 1613 -234 ± 1 Carina Dwarf 230 ± 60 Sextans Dwarf 224 ± 2 Ursa Minor Dwarf (200 ± 30 kly) -247 ± 1 Draco Dwarf -292 ± 21 Cassiopeia Dwarf -307 ± 2 Ursa Major II Dwarf - 116 Leo IV 130 Leo V ( 585 kly) 173 Leo T -60 Bootes II -120 Pegasus Dwarf -183 ± 0 Sculptor Dwarf 110 ± 1 Etc. -

Dark Matter Distribution in Dwarf Spheroidals

Research Collection Doctoral Thesis Dark Matter Distribution in Dwarf Spheroidals Author(s): Steger, Pascal S.P. Publication Date: 2015 Permanent Link: https://doi.org/10.3929/ethz-a-010476521 Rights / License: In Copyright - Non-Commercial Use Permitted This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library DARK MATTER Distribution in Dwarf Spheroidals PhD Thesis Pascal Stephan Philipp Steger March 2015 DISS. ETH NO. 22628 Dark Matter Distribution in Dwarf Spheroidals A thesis submitted to attain the degree of DOCTOR OF SCIENCES of ETH ZURICH (Dr. sc. ETH Zurich) presented by Pascal Stephan Philipp Steger MSc Physics ETH born on 04. 08. 1986 citizen of Emmen / Ettiswil LU, Switzerland accepted on the recommendation of Prof. Dr. Simon Lilly Prof. Dr. Justin I. Read Prof. Dr. Jorge Pe˜narrubia 2015 Acknowledgements I warmly thank Justin Read for the pleasant environment. You guided me around numer- ous obstacles, and showed me what science really is all about. You helped me with inputs for simplifications and generalisations, whenever they were sorely needed. And some cute integral transformations. You helped me personally by showing me what counts in life, and how adventurous ones dreams might be. I want to thank Simon Lilly for the hassle- free administrative takeover. Thank you for your patience and for enabling me to show my work at all the conferences, too. Frank Schweitzer, thank you for your succinct and energetic clarifications on the scientific method. It has been proven to be an invaluable guide to perform research. -

The Milky Way the Milky Way's Neighbourhood

The Milky Way What Is The Milky Way Galaxy? The.Milky.Way.is.the.galaxy.we.live.in..It.contains.the.Sun.and.at.least.one.hundred.billion.other.stars..Some.modern. measurements.suggest.there.may.be.up.to.500.billion.stars.in.the.galaxy..The.Milky.Way.also.contains.more.than.a.billion. solar.masses’.worth.of.free-floating.clouds.of.interstellar.gas.sprinkled.with.dust,.and.several.hundred.star.clusters.that. contain.anywhere.from.a.few.hundred.to.a.few.million.stars.each. What Kind Of Galaxy Is The Milky Way? Figuring.out.the.shape.of.the.Milky.Way.is,.for.us,.somewhat.like.a.fish.trying.to.figure.out.the.shape.of.the.ocean.. Based.on.careful.observations.and.calculations,.though,.it.appears.that.the.Milky.Way.is.a.barred.spiral.galaxy,.probably. classified.as.a.SBb.or.SBc.on.the.Hubble.tuning.fork.diagram. Where Is The Milky Way In Our Universe’! The.Milky.Way.sits.on.the.outskirts.of.the.Virgo.supercluster..(The.centre.of.the.Virgo.cluster,.the.largest.concentrated. collection.of.matter.in.the.supercluster,.is.about.50.million.light-years.away.).In.a.larger.sense,.the.Milky.Way.is.at.the. centre.of.the.observable.universe..This.is.of.course.nothing.special,.since,.on.the.largest.size.scales,.every.point.in.space. is.expanding.away.from.every.other.point;.every.object.in.the.cosmos.is.at.the.centre.of.its.own.observable.universe.. Within The Milky Way Galaxy, Where Is Earth Located’? Earth.orbits.the.Sun,.which.is.situated.in.the.Orion.Arm,.one.of.the.Milky.Way’s.66.spiral.arms..(Even.though.the.spiral. -

Brightest Stars : Discovering the Universe Through the Sky's Most Brilliant Stars / Fred Schaaf

ffirs.qxd 3/5/08 6:26 AM Page i THE BRIGHTEST STARS DISCOVERING THE UNIVERSE THROUGH THE SKY’S MOST BRILLIANT STARS Fred Schaaf John Wiley & Sons, Inc. flast.qxd 3/5/08 6:28 AM Page vi ffirs.qxd 3/5/08 6:26 AM Page i THE BRIGHTEST STARS DISCOVERING THE UNIVERSE THROUGH THE SKY’S MOST BRILLIANT STARS Fred Schaaf John Wiley & Sons, Inc. ffirs.qxd 3/5/08 6:26 AM Page ii This book is dedicated to my wife, Mamie, who has been the Sirius of my life. This book is printed on acid-free paper. Copyright © 2008 by Fred Schaaf. All rights reserved Published by John Wiley & Sons, Inc., Hoboken, New Jersey Published simultaneously in Canada Illustration credits appear on page 272. Design and composition by Navta Associates, Inc. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copy- right.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at http://www.wiley.com/go/permissions. -

Evolutionary N-Body Simulations to Determine the Origin and Structure of the Milky Way Galaxy’S Halo Using Volunteer Computing

Evolutionary N-Body Simulations to Determine the Origin and Structure of the Milky Way Galaxy's Halo using Volunteer Computing Travis Desell, Malik Magdon-Ismail, Boleslaw Szymanski, Benjamin A. Willett, Matthew Arsenault, Carlos A. Varela Heidi Newberg Department of Computer Science Department of Physics, Applied Physics and Astronomy Rensselaer Polytechnic Institute Rensselaer Polytechnic Institute Troy, NY, USA Troy, NY, USA deselt,magdon,szymansk,[email protected] willeb,arsenm2,[email protected] Abstract—The MilkyWay@Home project uses N-body sim- using N-body simulations to model this tidal disruption of ulations to model the formation of the Milky Way galaxy's dwarf galaxies and their interaction with the Milky Way. The N halo. While there have been previous efforts to use - disruption depends on initial properties of the dwarf galaxy body simulations to perform astronomical modeling, to our knowledge, this is the first time that evolutionary algorithms are and on the gravitational potential of the Milky Way, which used to discover the best initial parameters of the simulations is primarily due to dark matter. Asynchronous evolutionary so that they accurately model observed data. Performing a algorithms (AEAs) have been used successfully to find single 32,000-body simulation takes on average about 108 the optimal input parameters for the probabilistic sampling trillion computer cycles (15 hours on a 2GHz processor), and method [3], [4], [5], [6], and this work expands these can take up to an extra order of magnitude more. Since N optimizing the input parameters to these simulations typically AEAs to find the optimal input parameters for the -body requires at least 30,000 simulations, we need to fully utilize the simulations, which will in turn provide new understanding 35,000 volunteered hosts, which are currently providing around of the origin and structure of the Milky Way. -

Constellations

Your Guide to the CONSTELLATIONS INSTRUCTOR'S HANDBOOK Lowell L. Koontz 2002 ii Preface We Earthlings are far more aware of the surroundings at our feet than we are in the heavens above. The study of observational astronomy and locating someone who has expertise in this field has become a rare find. The ancient civilizations had a keen interest in their skies and used the heavens as a navigational tool and as a form of entertainment associating mythology and stories about the constellations. Constellations were derived from mankind's attempt to bring order to the chaos of stars above them. They also realized the celestial objects of the night sky were beyond the control of mankind and associated the heavens with religion. Observational astronomy and familiarity with the night sky today is limited for the following reasons: • Many people live in cities and metropolitan areas have become so well illuminated that light pollution has become a real problem in observing the night sky. • Typical city lighting prevents one from seeing stars that are of fourth, fifth, sixth magnitude thus only a couple hundred stars will be seen. • Under dark skies this number may be as high as 2,500 stars and many of these dim stars helped form the patterns of the constellations. • Light pollution is accountable for reducing the appeal of the night sky and loss of interest by many young people as the night sky is seldom seen in its full splendor. • People spend less time outside than in the past, particularly at night. • Our culture has developed such a profusion of electronic devices that we find less time to do other endeavors in the great outdoors. -

9. the Grand Universe

Chapter 9 The Grand Universe The galaxies of the grand universe cluster about the plane of material creation, which increases in height and depth as the distance from Paradise increases. They have the appearance of a V-shaped structure when viewed in cross-section. The superuniverse space level is divided into seven equal space segments, which establishes proportional relationships between the size of the grand universe and the dimensions of each superuniverse. The locations of the seven superuniverses relative to Paradise North are given, and a chart of the internal structure of the grand universe can be drawn. Our superuniverse of Orvonton has a southeast compass bearing from Paradise North, as defined by the circulation of cosmic force through the inner zone of nether Paradise. Uversa is the headquarters world of Orvonton and is between 200,000 and 250,000 light-years away. It is concealed behind the stars and interstellar dust in the plane of the Milky Way. There are potentially 10 trillion suns in Orvonton which are segregated in ten major sectors or star drifts. At the time The Urantia Book was indited, eight of these ten major sectors had been identified by Edwin Hubble in his 1936 work, The Realm of the Nebulae. Hubble called these eight nebulae the Local Group. The Milky Way and Andromeda galaxies are part of the Local Group and each contains about one trillion solar masses. Recent studies estimate the mass of the Local Group at roughly 5 trillion solar masses, which is 65 percent of the eight trillion observable suns in Orvonton. -

CHAPTER 25 Stars and Galaxies 524-S1-Mss05ges 8/20/04 1:17 PM Page 725

524-S1-MSS05ges 8/20/04 1:17 PM Page 722 Stars and Galaxies What’s your address? sections You know your address at home. You also 1 Stars know your address at school. But do you 2 The Sun know your address in space? You live on a Lab Sunspots planet called Earth that revolves around a star 3 Evolution of Stars called the Sun. Earth and the Sun are part of 4 Galaxies and the Universe a galaxy called the Milky Way. It looks similar Lab Measuring Parallax to galaxy M83, shown in the photo. Virtual Lab How does the chemical composition Science Journal Write a description in your Science of stars determine their classification? Journal of the galaxy shown on this page. 722 TSADO/ ESO/ Tom Stack & Assoc. 524-S1-MSS05ges 8/20/04 1:17 PM Page 723 Start-Up Activities Stars, Galaxies, and the Universe Make the following Foldable to show what you Why do clusters of galaxies move know about stars, galaxies, and apart? the universe. Astronomers know that most galaxies occur STEP 1 Fold a sheet of paper in groups of galaxies called clusters. These from side to side. clusters are moving away from each other in Make the front edge space. The fabric of space is stretching like an about 1.25 cm inflating balloon. shorter than the back edge. 1. Partially inflate a balloon. Use a piece of string to seal the neck. STEP 2 Turn lengthwise and fold into 2. Draw six evenly spaced dots on the bal- thirds. loon with a felt-tipped marker.