Connecting Virtual Reality and Ecology: a New Tool to Run Seamless Immersive Experiments in R

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Quick Guide to Southeast Florida's Coral Reefs

A Quick Guide to Southeast Florida’s Coral Reefs. DAVID GILLIAM, NATIONAL CORAL REEF INSTITUTE, NOVA SOUTHEASTERN UNIVERSITY. Spring 2013. Prepared by the Land-based Sources of Pollution Technical Advisory Committee (TAC) of the Southeast Florida Coral Reef Initiative (SEFCRI). BRIAN WALKER, NATIONAL CORAL REEF INSTITUTE, NOVA SOUTHEASTERN UNIVERSITY. Southeast Florida’s coral-rich communities are more valuable than the Spanish treasures that sank nearby. Like the lost treasures, these amazing reefs lie just a few hundred yards off the shores of Martin, Palm Beach, Broward and Miami-Dade Counties where more than one-third of Florida’s 19 million residents live. Fishing, diving, and boating help attract millions of visitors to southeast Florida each year (30 million in 2008/2009). Reef-related expenditures generate $5.7 billion annually in income and sales, and support more than 61,000 local jobs. Such immense recreational activity, coupled with the pressures of coastal development, inland agriculture, and robust cruise and commercial shipping industries, threatens the very survival of our reefs. With your help, reefs will be protected from local stresses and future generations will be able to enjoy their beauty and economic benefits. Coral reefs are highly diverse and productive, yet surprisingly fragile, ecosystems. They are built by living creatures that require clean, clear seawater to settle, mature and reproduce. Reefs provide safe havens for spectacular forms of marine life. Unfortunately, reefs are vulnerable to impacts on scales ranging from local and regional to global. ART SEITZ. Global threats to reefs have increased along with expanding human populations and industrialization. Now, warming seawater temperatures and changing ocean chemistry from carbon dioxide emitted by the burning of fossil fuels and deforestation are also starting to imperil corals. -

RML Algol 60 Compiler Accepts the Full ASCII Character Set Described in the Manual

RML Algol 60 CP/M System. ALGOL 60 VERSION 4.8C RELEASE NOTE. CP/M Version 2: When used with CP/M version 2 the Algol system will accept disk drive names extending from A: to P:. ASSEMBLY CODE ROUTINES: The program CODE.ALG and CODE.ASC is an aid to users who wish to add their own code routines to the runtime system. The program produces an output file which contains a reconstruction of the INPUT, OUTPUT, IOC and ERROR tables described in the manual. This file forms the basis of an assembly code program to which users may edit in their routines. Parts not required may be removed as desired. The resulting program should then be assembled and overlaid onto the runtime system using DDT, the resulting code being SAVEd as a new runtime system. It is important that the CODE program be run using the version of the runtime system to which the code routines are to be linked, else the tables produced may be incompatible. Another example program supplied is QSORT, the sorting procedure described in the manual. RML Algol 60 CP/M System. LONG INTEGER ALGOL: Arun-L is a version of the RML Z80 Algol system in which real variables are represented, not in the normal mantissa/exponent form but rather as 32 bit 2's complement integers. -

Workplace Learning Connection Annual Report 2019-20

2019 – 2020 ANNUAL REPORT. SERVING SCHOOLS, STUDENTS, EMPLOYERS, AND COMMUNITIES IN BENTON, CEDAR, IOWA, JOHNSON, JONES, LINN, AND WASHINGTON COUNTIES. Connecting today’s students to tomorrow’s careers. WORKPLACE LEARNING CONNECTION. IOWA COUNTY CENTER: 200 West St., Williamsburg, Iowa 52361, 866-424-5669. LINN COUNTY REGIONAL CENTER: 1770 Boyson Rd., Hiawatha, Iowa 52233, 319-398-1040. JONES COUNTY REGIONAL CENTER: 220 Welter Dr., Monticello, Iowa 52310, 855-467-3800. KIRKWOOD REGIONAL CENTER AT THE UNIVERSITY OF IOWA: 2301 Oakdale Blvd., Coralville, Iowa 52241, 319-887-3970. WASHINGTON COUNTY REGIONAL CENTER: 2192 Lexington Blvd., Washington, Iowa 52353, 855-467-3900. BENTON COUNTY CENTER: 111 W. 3rd St., Vinton, Iowa 52349, 866-424-5669. CEDAR COUNTY CENTER: 100 Alexander Dr., Suite 2, Tipton, Iowa 52772, 855-467-3900. 2019 – 2020 AT A GLANCE. Thank you to all our contributors, supporters, and volunteers! Workplace Learning Connection uses a diverse funding model that allows all partners to sustain their participation in an equitable manner. Whether a partner’s interests are student career development, future workforce development, or regional economic development, all funds provide age-appropriate career awareness and exploration services matching the mission of WLC and our partners. Funding the Connections… 2019 – 2020 Revenue Sources: Kirkwood Community College (General Fund), $50,000 (7%); State and Federal Grants (Kirkwood: Perkins, WTED, IIG), $401,324 (54%); Grant Wood Area Education Agency, $40,000 (5%); K through 12 School Districts Fees for Services, $169,142 (23%); Contributions, -

Think Complexity: Exploring Complexity Science in Python

Think Complexity, Version 2.6.2. Allen B. Downey. Green Tea Press, Needham, Massachusetts. Copyright © 2016 Allen B. Downey. Green Tea Press, 9 Washburn Ave, Needham MA 02492. Permission is granted to copy, distribute, transmit and adapt this work under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License: https://thinkcomplex.com/license. If you are interested in distributing a commercial version of this work, please contact the author. The LaTeX source for this book is available from https://github.com/AllenDowney/ThinkComplexity2. Contents. Preface: 0.1 Who is this book for?; 0.2 Changes from the first edition; 0.3 Using the code. 1 Complexity Science: 1.1 The changing criteria of science; 1.2 The axes of scientific models; 1.3 Different models for different purposes; 1.4 Complexity engineering; 1.5 Complexity thinking. 2 Graphs: 2.1 What is a graph?; 2.2 NetworkX; 2.3 Random graphs; 2.4 Generating graphs; 2.5 Connected graphs; 2.6 Generating ER graphs; 2.7 Probability of connectivity; 2.8 Analysis of graph algorithms; 2.9 Exercises. 3 Small World Graphs: 3.1 Stanley Milgram; 3.2 Watts and Strogatz; 3.3 Ring lattice; 3.4 WS graphs; 3.5 Clustering; 3.6 Shortest path lengths; 3.7 The WS experiment; 3.8 What kind of explanation is that?; 3.9 Breadth-First Search -

Coral 66 Language Reference Manual

CORAL 66 LANGUAGE REFERENCE MANUAL. The Centre for Computing History, www.ComputingHistory.org.uk. MICRO FOCUS CORAL 66 LANGUAGE REFERENCE MANUAL, Version 3. Micro Focus Ltd. Issue 2, March 1982. Not to be copied without the consent of Micro Focus Ltd. This language definition reproduces material from the British Standard BS5905 which is hereby acknowledged as a source. Micro Focus Ltd., 58, Acacia Road, St. Johns Wood, London NW8 6AG. Telephones: 017228843/4/5/6/7. Telex: 28536 MICROFG. COPYRIGHT 1981, 1982 by Micro Focus Ltd. AMENDMENT RECORD (columns: Amendment Number, Dated, Inserted By, Signature, Date). PREFACE: This manual defines the programming language CORAL 66 as implemented in Version 3 of the CORAL compilers known as RCC80 and RCC86. Now that British Standard BS5905 has become the main source for CORAL implementation its structure and style have been adopted. Compared to previous versions of the RCC compilers, Version 3 incorporates 'TABLE' and 'OVERLAY', and allows additional forms of Real numbers in line with the British Standard. Compiler error reporting has been modified slightly - in particular all error messages now have identifying numbers and are listed in appendices to the operating manuals. Micro Focus has now assumed direct responsibility for production of all RCC CORAL manuals and believes that this will offer users a better service than has been possible in the past. AUDIENCE: This manual is intended as a description of CORAL 66 for reference and assumes familiarity with use of the language. -

Numerical Models Show Coral Reefs Can Be Self-Seeding

MARINE ECOLOGY PROGRESS SERIES Vol. 74: 1-11, 1991. Published July 18. Mar. Ecol. Prog. Ser. Numerical models show coral reefs can be self-seeding. Victorian Institute of Marine Sciences, 14 Parliament Place, Melbourne, Victoria 3002, Australia; Australian Institute of Marine Science, PMB No. 3, Townsville MC, Queensland 4810, Australia. ABSTRACT: Numerical models are used to simulate 3-dimensional circulation and dispersal of material, such as larvae of marine organisms, on Davies Reef in the central section of Australia's Great Barrier Reef. Residence times on and around this reef are determined for well-mixed material and for material which resides at the surface, sea bed and at mid-depth. Results indicate order-of-magnitude differences in the residence times of material at different levels in the water column. They confirm previous 2-dimensional modelling which indicated that residence times are often comparable to the duration of the planktonic larval life of many coral reef species. Results reveal a potential for the maintenance of local populations of various coral reef organisms by self-seeding, and allow reinterpretation of the connectedness of the coral reef ecosystem. INTRODUCTION: Hydrodynamic experiments of limited duration (Ludington 1981, Wolanski & Pickard 1983, Andrews et al. 1984) have suggested that flushing times on individual reefs of Australia's Great Barrier Reef (GBR) are relatively short in comparison with the larval life of many different coral reef species (Yamaguchi 1973, [...] persal of particles on reefs. They can be summarised as follows. Circulation around the reefs is typified by a complex pattern of phase eddies (Black & Gay 1987a, 1990a). These eddies and other components of the currents interact with the reef bathymetry to create a high level of horizontal mixing on and around the reefs (Black 1988). -

Coral Populations on Reef Slopes and Their Major Controls

Vol. 7: 83-115, 1982. MARINE ECOLOGY - PROGRESS SERIES. Published January 1. Mar. Ecol. Prog. Ser. REVIEW. Coral Populations on Reef Slopes and Their Major Controls. C. R. C. Sheppard, Australian Institute of Marine Science, P.M.B. No. 3, Townsville M.S.O., Q. 4810, Australia. ABSTRACT: Ecological studies of corals on reef slopes published in the last 10-15 y are reviewed. Emphasis is placed on controls of coral distributions. Reef slope structures are defined with particular reference to the role of corals in providing constructional framework. General coral distributions are synthesized from widespread reefs and are described in the order: shallowest, most exposed reef slopes; main region of hermatypic growth; deepest studies conducted by SCUBA or submersible, and cryptic habitats. Most research has concerned the area between the shallow and deep extremes. Favoured methods of study have involved cover, zonation and diversity, although inadequacies of these simple measurements have led to a few multivariate treatments of data. The importance of early life history strategies and their influence on succession and final community structure is emphasised. Control of coral distribution exerted by their dual nutrition requirements - particulate food and light - are the least understood despite being extensively studied. Well studied controls include water movement, sedimentation and predation. All influence coral populations directly and by acting on competitors. Finally, controls on coral population structure by competitive processes between species, and between corals and other taxa are illustrated. Their importance to general reef ecology so far as currently is known, is described. INTRODUCTION ecological studies of reef corals beyond 50 m to as deep as 100 m. -

The Secret Life of Coral Reefs: a Dominican Republic Adventure TEACHER's GUIDE

The Secret Life of Coral Reefs: A Dominican Republic Adventure TEACHER’S GUIDE. Grades: All. Subjects: Science and Geography. Live event date: May 10th, 2019 at 1:00 PM ET. Purpose: This guide contains a set of discussion questions and answers for any grade level, which can be used after the virtual field trip. It also contains links to additional resources and other resources ranging from lessons, activities, videos, demonstrations, experiments, real-time data, and multimedia presentations. Dr. Joseph Pollock, Coral Strategy Director, Caribbean Division, The Nature Conservancy. Event Description: Is it a rock? Is it a plant? Or is it something else entirely? Discover the amazing world of coral reefs with coral scientist Joe Pollock, as he takes us on a virtual field trip to the beautiful coastline of the Dominican Republic. We’ll dive into the waters of the Caribbean to see how corals form, the way they grow into reefs, and how they support an incredible array of plants and animals. Covering less than 1% of the ocean floor, coral reefs are home to an estimated 25% of all marine species. That’s why they’re often called the rainforests of the sea! Explore this amazing ecosystem and learn how the reefs are more than just a pretty place—they provide habitat for the fish we eat, compounds for the medicines we take, and even coastal protection during severe weather. Learn how these fragile reefs are being damaged by human activity and climate change, and how scientists from The Nature Conservancy and local organizations are developing ways to restore corals in the areas where they need the most help. -

Cape Coral, Fl 2018-2021

CAPE CORAL, FL 2018-2021 STRATEGIC PLAN. ECONOMY • ENVIRONMENT • QUALITY OF LIFE. “THERE IS IMMENSE POWER WHEN A GROUP OF PEOPLE WITH SIMILAR INTERESTS GETS TOGETHER TO WORK TOWARDS THE SAME GOALS.” IDOWU KOYENIKAN. “Someone is sitting in the shade today because someone planted a tree a long time ago.” Warren Buffett said this, and I am struck by how true, yet simple, it is. The key to success is planning. Not the plan, not the goal, but the actual process during which visions are established, goals are set, and plans are made for successful achievement of those goals. As we continue to implement our strategic plan for smart, sustainable growth, our stakeholders, consisting of City Staff, City Council, Landowners, Residents, and Business Owners, all have Cape Coral’s best interest at heart. If we are to create desirable employment opportunities, we must be able to attract and retain businesses and commercial interests that are motivated to succeed. Cape Coral’s preplatted community structure is a liability we must offset to increase our market value and competitiveness. Centric to this concept is the steadfast pursuit of sustaining our ability to meet the future infrastructure and ancillary needs of these new stakeholders. It is with the utmost humility and fervent resolve that your City Council seeks to join with you and all stakeholders in support of this plan which will perpetuate the ongoing journey of this great city. Regards, Joe Coviello, Mayor. “Functional leadership is key to economic sustainability.” On behalf of the City Council and City staff, I am proud to present the City of Cape Coral’s Fiscal Year 2018-2021 Strategic Plan. -

THE DEVELOPMENT of JOVIAL Jules L Schwartz Computer

THE DEVELOPMENT OF JOVIAL. Jules L Schwartz, Computer Sciences Corp. 1. THE BACKGROUND. 1.1 The Environmental and Personnel Setting. The time was late 1958. Computers that those who were involved with the first JOVIAL project had been using included the JOHNNIAC (built at Rand Corporation), the IBM AN/FSQ-7 (for SAGE), 701, and 704. A few had worked with board wired calculators. Some had never programmed. For those using IBM, the 709 was the current machine. There were various other manufacturers at the time, some of whom do not exist today as computer manufacturers. The vacuum tube was still in vogue. Very large memories, either high speed direct access or peripheral storage, didn't exist, except for magnetic tape and [...] One of the significant things that got the JOVIAL language and compiler work started was an article on Expression Analysis that appeared in the 1958 ACM Communications (Wolpe 1958). The fact that this was actually quite a revelation to us at that time seems interesting now. Since that day, of course, there have been many developments in the parsing of mathematical and logical expressions. However, that article was the first exposure many of us had to the subject. Some of us who had just finished work on some other projects (including SAGE) began experimentation with the processing of complex expressions to produce an intermediate language utilizing the techniques described. There was no compiler language at the time in our plans, but the idea of being able to understand and parse complex expressions in itself was of sufficient interest to motivate our efforts. -

Comparative Programming Languages CM20253

The End. There are many languages out there, both general purpose and specialist. We have briefly covered many aspects of language design. And there are many more factors we could talk about in making choices of language. Or languages. Often a single project can use several languages, each suited to its part of the project. And then the interoperability of languages becomes important. For example, can you easily join together code written in Java and C?

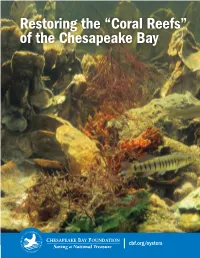

Restoring the “Coral Reefs” of the Chesapeake Bay

Restoring the “Coral Reefs” of the Chesapeake Bay. Rebuilding the Reefs: Support Efforts to Restore Three-Dimensional Reefs to the Bay. cbf.org/oysters. The Benefits of Oyster Reefs. Structure and Flow: Three-dimensional reef systems affect the physical environment within the Bay in the same way that mountain ranges affect weather patterns. Reefs interrupt currents, speeding them up and creating turbulence behind the reefs. Many reef organisms have evolved to take advantage of such currents and are less abundant or non-existent in a flat habitat. We know that oysters thrive at [...] Habitat and Diversity: Oyster reefs are more than just mounds that change water flow. The intricate latticework of shells provides diverse habitats for many small plants and animals that make their homes on reefs. Some attached animals, such as barnacles, mussels, and bryozoans, lie flat against the oyster shell. Others like redbeard sponges, flower-like anemones, and feathery hydroids branch out into the water. Clean Water: When oysters and other reef species feed in the currents, they filter the water for small particles, usually microscopic plants called phytoplankton. A single oyster can filter up to fifty gallons of Bay water a day. They remove algae, bacteria, and bits of decayed material. Oyster feces and the other matter they discard fuel the reef community where bacteria convert the nitrogen [...] Number of Organisms in One Square Meter of Oyster Reef (Restored / Unrestored): Black-clawed Mud Crab 108 / 3; Flat Mud Crab 90 / 1; Grass Shrimp 40 / 1; Scud 43 / 2; Soft Shell Clam 122 / 42; Barnacle 3040 / 89.