Tommi Mikkonen, Adnan Ashraf (Editors): Developing Cloud Software

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Top 10 Best Practices for Successful Multi-Cloud Management

Top 10 best practices for successful multi-cloud management: How the multi-cloud world is changing the face of IT

Overview: A multi-cloud world is quickly becoming the new normal for many enterprises. But embarking on a cloud journey and managing cloud-based services across multiple providers can seem overwhelming. Even the term multi-cloud can be confusing. Multi-cloud is not the same as hybrid cloud. The technical definition of hybrid cloud is an environment that includes traditional data centers with physical servers, private cloud with virtualized servers, and public cloud provisioned by service providers. Quite often, multi-cloud simply means that an organization uses multiple public clouds from many vendors to deliver its IT services. In other words, organizations can have a multi-cloud without having a hybrid cloud, or they can have a multi-cloud as part of a hybrid cloud.

When an organization’s users take the initiative to adopt infrastructure and solutions from different cloud vendors, challenges emerge. Each new cloud service comes with its own tools that can increase complexity. Multi-cloud environments require new management solutions to optimize performance, control costs and secure complicated mixes of applications and environments, regardless of whether they are inside the data center or in the cloud. Today, IT users have a choice. Corporate IT departments know that if they don’t react, they may find themselves irrelevant. As a result, they are moving away from the capital investment model of IT, in which standing up a data center was essential, to assembling a catalog of IT services available from CSPs.

Headless CMS Breathes New Life Into “Content Anywhere”

Insight Brief: Headless CMS Breathes New Life into “Content Anywhere”. By: Connie Moore

Content Is Everywhere and Anywhere in Today’s Organization

Although pundits declare that we are immersed in a world of big data, the sheer volume and velocity at which digital content is created, consumed, updated, and stored throughout organizations is at an all-time high, validating the age-old expression “content is king.” Content, in its many established and evolving forms, has been king, is king, and will be king for the foreseeable future, making it imperative to anticipate and plan new approaches to deliver the customer experience and produce, manage, and govern increasingly diversified and complex content.

Silicon Valley constantly ushers in technologies for creating new content types for various delivery channels, such as Alexa, Twitter feeds, Facebook media, YouTube clips, CCTV film, drone footage, 3-D models, wearable output, biometrics output, voice recognition content, podcasts, IM texts, and so forth. Although YouTube, Twitter, and Facebook are utterly familiar today, they were once among the cacophony of emerging technologies waiting to go viral. Even today, the next new “whatever” is jostling in the mix, vying to become the next really big, new thing.

Who can say with certainty what content challenges will arise within the next year, much less the next five years? But it is still possible to assume a certain growth rate for content in the organization and to ensure that the content management architecture is flexible, adaptive, and well-positioned to handle new challenges and scale for more content.

DIGITAL CLARITY GROUP | © 2017 | [email protected]

Planning for growth, new expectations, and communication, the contact center, and more.

It's Time for a Unified Information Strategy

Scaling Knowledge Management: It’s Time for a Unified Information Strategy. Authored by Joe McKendrick, Lead Analyst

To succeed in today’s diverse digital economy, enterprises need to proactively and effectively create, store and deliver content. Doing so means superior customer experience, enhanced employee productivity, and stronger partnerships. A knowledge-driven enterprise needs to provide managers, knowledge workers and line employees all the information they need, at the moment they need it, to respond to customer and market needs: to support product design, product maintenance, information systems management, and software creation. However, much of this information (in the form of documentation, messaging, graphics, video files, audio files, and transactional data) is locked away in silos and within diverse applications across organizations, requiring inordinate amounts of time and resources to locate. While information from all these sources is critical to business success, the chaos continues to ...

Real-World Challenges with Knowledge Management

While a knowledgebase may exist in a digital format, its reach and relevance may be severely impeded if it cannot be scaled to meet new requirements or grow with the organization. Today’s enterprises are adding and expanding digital channels and processes, reaching new global markets, and innovating at a blinding pace. A knowledge management solution needs to be able to expand and meet this accelerating pace.

Ongoing surveys by KMWorld find that embedding knowledge management into the day-to-day work at enterprises is top of mind. The treatment of KM as a standalone function continues to be the issue that concerns respondents the most, along with a related concern, siloed information systems.

Cloud Adoption the Definitive Guide to a Business Technology Revolution

THE WHITE BOOK OF… Cloud Adoption: The definitive guide to a business technology revolution. shaping tomorrow with you

Contents: Acknowledgments; Preface; 1: What is Cloud?; 2: What Cloud Means to Business; 3: CIO Headaches; 4: Adoption Approaches; 5: The Changing Role of the Service Management Organisation; 6: The Changing Role of The Enterprise Architecture Team; 7: The Future of Cloud; 8: The Last Word on Cloud; Cloud Speak: Key Terms Explained

Acknowledgments: In compiling and developing this publication, Fujitsu is very grateful to the members of its CIO Advisory Board. Particular thanks are extended to the following for their contributions: Neil Farmer, IT Director, Crossrail; Nick Gaines, Group IS Director, Volkswagen UK; Peter Lowe, PDCS Change & Transformation Director, DWP; Nick Masterson-Jones, CIO, Travelex Global Business Payments. With further thanks to our authors: Ian Mitchell, Chief Architect, UK and Ireland, Fujitsu; Stephen Isherwood, Head of Infrastructure Services Marketing, UK and Ireland, Fujitsu.

For more information on the steps to cloud computing, go to: http://uk.fujitsu.com/cloud If you would like to further discuss with us the steps to cloud computing, please contact: [email protected]

ISBN: 978-0-9568216-0-7. Published by Fujitsu Services Ltd. Copyright © Fujitsu Services Ltd 2011. All rights reserved. No part of this document may be reproduced, stored or transmitted in any form without prior written permission of Fujitsu Services Ltd. Fujitsu Services Ltd endeavours to ensure that the information in this document is correct and fairly stated, but does not accept liability for any errors or omissions.

Chapter 4 the Digital Economy, New Business Models and Key Features

Chapter 4: The digital economy, new business models and key features

This chapter discusses the spread of information and communication technology (ICT) across the economy, provides examples of business models that have emerged as a consequence of the advances in ICT, and provides an overview of the key features of the digital economy that are illustrated by those business models.

The statistical data for Israel are supplied by and under the responsibility of the relevant Israeli authorities. The use of such data by the OECD is without prejudice to the status of the Golan Heights, East Jerusalem and Israeli settlements in the West Bank under the terms of international law.

ADDRESSING THE TAX CHALLENGES OF THE DIGITAL ECONOMY © OECD 2014

4.1 The spread of ICT across business sectors: the digital economy

All sectors of the economy have adopted ICT to enhance productivity, enlarge market reach, and reduce operational costs. This adoption of ICT is illustrated by the spread of broadband connectivity in businesses, which in almost all countries of the Organisation for Economic Co-operation and Development (OECD) is universal for large enterprises and reaches 90% or more even in smaller businesses.

[Figure 4.1. Enterprises with broadband connection, by employment size, 2012. Fixed and mobile connections, as a percentage of all enterprises. Series: All, Large, Medium, Small. Countries shown: FIN, ISL, CHE, FRA, SVN, NLD, AUS, SWE, ESP, EST, LUX, CAN, BEL, ITA, GBR, DNK, SVK, CZE, PRT, IRL, AUT, DEU, NOR, HUN, JPN, POL, GRC, MEX.] For Australia, data refer to 2010/11 (fiscal year ending 30 June 2011) instead of 2012.

A Practical Guide for Successful CMS Selection, Implementation, and Value Creation

The Definitive Guide to Healthcare CMS: A Practical Guide for Successful CMS Selection, Implementation, and Value Creation

Table of contents: Intro: Why should I read this guide?; Chapter 1: 5 challenges that scream, "We need a new CMS!"; Chapter 2: On-premise vs. hosted vs. SaaS; Chapter 3: An argument for decoupled architecture; Chapter 4: Common and advanced healthcare CMS features; Chapter 5: 7 steps to select the right healthcare CMS; Chapter 6: 6 key ingredients in building a healthcare CMS business case; Chapter 7: Getting prepared for project success; Chapter 8: The journey to delivering personalized experiences; Chapter 9: Measuring CMS success; Conclusion; About Healthgrades; Source List

Introduction: Why should I read the definitive guide to healthcare CMS?

For the last several years, each new year has been billed “the year of the consumer” in healthcare, led mostly by a shift from a provider-led to a consumer-led landscape that has become very competitive. According to Accenture,1 The problem is exacerbated by a marketing sea change called “liquid expectations,” when consumer experiences seep over from one industry into another, creating an expectations chasm. 59% of consumers now want their digital healthcare experience to mirror retail.2 Specifically, they expect personalized content and offers, transparency around quality and costs, and convenient self-service options that work seamlessly across responsive websites, mobile apps, social channels, and more.

Progress against these rising consumer expectations is painfully slow, in part because legacy web content management systems (CMS) make it difficult to deliver.

In this guide you will receive answers to these important questions: 1 Does my organization need a new CMS?

Skykit Digital Signage Guide

Skykit Digital Signage Guide: 10 Digital Signage Topics You Need to Know Before Buying

Table of Contents: Chapter 1: A History of Digital Signage; Chapter 2: Digital Signage Benefits; Chapter 3: 10 Biggest Digital Signage Challenges; Chapter 4: What to Look For in Digital Signage Software (CMS); Chapter 5: Digital Signage Hardware: What to Look For; Chapter 6: Cloud-Based Digital Signage; Chapter 7: Google Chrome for Digital Signage; Chapter 8: What Content to Put on Your Displays; Chapter 9: Installing and Rolling Out Digital Signage; Chapter 10: Keep Up with Digital Signage Trends

Sales: sales@skykit.com. Phone: Toll Free: 855.383.4518, Local: 612.260.7327. Address: 420 N 5th Street, Minneapolis, MN 55401. www.skykit.com

Chapter 1: A History of Digital Signage

And Nordstrom’s digital denim kiosk is just one of thousands of potential digital signage campaigns, each targeted to a certain kind of user and designed to leverage unique strengths of different pieces of hardware.

1990’s to today: From holograms to jumbotrons, campus wayfinding, to tableside ordering kiosks, there’s a truly dizzying array of digital signage out there. All these uses of the technology have a common root. Digital signage had its beginnings in fashion houses in the 70’s… or perhaps banks and trade shows in the 90’s… How it started is really affected by how you define digital signage.

Digital signage is a powerful ... In late 2014, Nordstrom installed a ...

Google Chrome for Digital Signage

Digital Signage Guide: 10 Digital Signage Topics You Need to Know Before Buying. 7: Google Chrome for Digital Signage

Sales: [email protected] Phone: Toll Free: 855.383.4518, Local: 612.260.7327. Address: 420 N 5th Street, Minneapolis, MN 55401. www.skykit.com

Chapter 7: Google Chrome for Digital Signage

Google has been making waves in the digital signage market for about two years. They’ve made it clear that Chrome is here. The Google ecosystem has compelling hardware / media players available to run your digital signs. Chrome provides a platform for ...

Chrome bypasses all those concerns that are very real for other platforms. You can choose for your Chrome OS media players, manage those devices from the Chrome Management Console (CMC), and control your content using a CMS from one of Google’s partners.

... players and give you a leg-up in deciding which is right for you. Get your PDF here.

The Hardware: The hardware required to complete a digital signage solution is typically a commercial-grade screen and a media player. There are lots of options out there for displays, which we explored in Chapter 5, but now it’s time to look at the media players. There are also lots of options out there for media players, and for Google, that includes the Chromebit, Chromebase, and Chromebox. We’ll also enlighten you about Chromecast, but Chromecast is not recommended as a commercial-grade digital signage tool.

It can be confusing to determine which device you need if you’re considering ...

Print This Article

Development and Integration of E-learning Services Using REST APIs. https://doi.org/10.3991/ijet.v15i04.11687 Saleh Mowla, Sucheta V. Kolekar, Manipal Academy of Higher Education, Manipal, India [email protected]

Abstract: E-Learning systems have gained a lot of traction amongst students and academicians due to their flexible nature in terms of location independence, time, effort, cost and other resources. The rapidly changing nature of the education domain makes the design, development, testing and maintenance of E-Learning systems complex and expensive. In order to adapt to the changing policies of educational institutes as well as improve the performance of students, the paper presents a Service-Oriented Architecture (SOA) approach to minimize the cost and time associated with the development of E-Learning systems. The paper illustrates the development of independent E-Learning web services and how they can be combined to implement the required policies of respective education institutes. The paper also presents a sample policy implemented using developed web services to achieve the required objectives.

Keywords: E-learning; Web Services; Service Oriented Architecture; REST APIs

1 Introduction

In the era of the internet and online information, the development of various E-Learning systems has been on the rise. Many organizations have their own requirements for how content should be presented so that students can learn online in an easy and comprehensible manner. However, most of the time, the basic purpose of any E-Learning system remains the same. Instead of spending valuable time and resources building from scratch, it is much easier to integrate or reuse available modules to meet that basic purpose.

Intellectual Property Management Challenges Arising from Pervasive Digitalisation: the Effect of the Digital Transformation on Daily Life 67 Peter Bittner

Big Data in the Health and Life Sciences: What Are the Challenges for European Competition Law and Where Can They Be Found? Minssen, Timo; Schovsbo, Jens Hemmingsen

Published in: Intellectual Property and Digital Trade in the Age of Artificial Intelligence and Big Data. Publication date: 2018. Document license: CC BY-NC-ND

Citation for published version (APA): Minssen, T., & Schovsbo, J. H. (2018). Big Data in the Health and Life Sciences: What Are the Challenges for European Competition Law and Where Can They Be Found? In X. Seuba, C. Geiger, & J. Pénin (Eds.), Intellectual Property and Digital Trade in the Age of Artificial Intelligence and Big Data (pp. 121-130). Centre d'études internationales de la propriété intellectuelles (CEIPI). CEIPI / ICTSD Publication Series on Global Perspectives and Challenges for the Intellectual Property System No. 5

Download date: 30 Sep. 2021

Global Perspectives and Challenges for the Intellectual Property System: A CEIPI-ICTSD publications series. Intellectual Property and Digital Trade in the Age of Artificial Intelligence and Big Data. Edited by Xavier Seuba, Christophe Geiger, and Julien Pénin. With contributions by Keith E. Maskus, Yann Ménière, Ilja Rudyk, Sean M. O’Connor, Catalina Martínez, Peter Bittner, Alissa Zeller, Reto Hilty, Christophe Geiger, Giancarlo Frosio, Oleksandr Bulayenko, Ryan Abbott, Timo Minssen, Jens Schovsbo, Francesco Lissoni, Gabriele Cristelli, and Claudia Jamin. Issue Number 5, June 2018

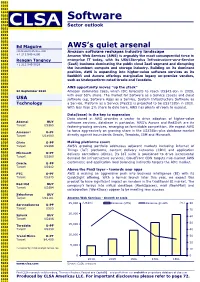

Amazon's Software Play

Software: Sector outlook. 26 September 2016. USA. Technology

Ed Maguire, [email protected], +1 212 549 8200; Reagan Tangney, +1 212 549 5028

AWS’s quiet arsenal: Amazon software reshapes industry landscape

Amazon Web Services (AWS) is arguably the most consequential force in enterprise IT today, with its US$12bn-plus Infrastructure-as-a-Service (IaaS) business dominating the public cloud IaaS segment and disrupting the incumbent compute and storage industry. Building on its dominant position, AWS is expanding into higher-value software services as its RedShift and Aurora offerings marginalize legacy on-premise vendors, such as Underperform-rated Oracle and Teradata.

AWS opportunity moves “up the stack”: Amazon dominates IaaS, which IDC forecasts to reach US$43.6bn in 2020, with over 50% share. The market for Software as a Service (SaaS) and cloud software (eg, Software Apps as a Service, System Infrastructure Software as a Service, Platform as a Service [PaaS]) is projected to be US$152bn in 2020. With less than 2% share to date here, AWS has plenty of room to expand.

Data(base) is the key to expansion: Data stored in AWS provides a vector to drive adoption of higher-value software services, database in particular. AWS’s Aurora and RedShift are its fastest-growing services, emerging as formidable competition.

Akamai: BUY, Target US$65

Editor-In-Chief Marydee Ojala Introduces You to Magazine's

Editor-in-chief Marydee Ojala introduces you to magazine’s content and mission.

Contents, MAR/APR 2016

Features

Meeting User Needs With Cataloger’s Desktop. Bruce Johnson, Derek Rodriguez, and Susanne Ross. The Library of Congress, assisted by Search Technologies, Inc., redesigned Cataloger’s Desktop to help librarians around the world create metadata to bibliographically control library resources. This custom search application required paying close attention to user needs and deploying ongoing, iterative changes.

Synthesizing, Extracting, and Customizing Web Data. Ernest R. Perez. Tools such as the free import.io facilitate customized data capture from websites and storage of information as formatted, easily transferable data files. Long time data librarian Ernest Perez walks us through the process.

... adds important new features while retaining the best of its classic version, writes experienced chemical searcher Bob Buntrock.

Advanced Twitter Search Commands. Tracy Z. Maleeff. Billions of people use social media. But for information professionals, it’s the advanced search capabilities that attract their attention. Tracy Maleeff goes deep into the advanced search features of Twitter, detailing not only what they are, but also how best to use them.

Vendor Videos for Training and Sales. Marydee Ojala. Increasingly, our vendors are using videos to sell their products and effectively train both information professionals and end users on how to use those products. Online Searcher editor-in-chief Marydee Ojala describes the advantages and disadvantages of video and looks at individual vendors’ video channels.

Searcher’s voice

THE SEARCHER’S VOICE: Technological Singularity