Rigidity of Microsphere Heaps

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

San Francisco Giants

SAN FRANCISCO GIANTS 2016 END OF SEASON NOTES 24 Willie Mays Plaza • San Francisco, CA 94107 • Phone: 415-972-2000 sfgiants.com • sfgigantes.com • sfgiantspressbox.com • @SFGiants • @SFGigantes • @SFG_Stats THE GIANTS: Finished the 2016 campaign (59th in San Francisco and 134th overall) with a record of 87-75 (.537), good for second place in the National League West, 4.0 games behind the first-place Los Angeles Dodgers...the 2016 season marked the 10th time that the Dodgers and Giants finished in first and second place (in either order) in the NL West...they also did so in 1971, 1994 (strike-shortened season), 1997, 2000, 2003, 2004, 2012, 2014 and 2015. OCTOBER BASEBALL: San Francisco advanced to the postseason for the fourth time in the last seven seasons and for the 26th time in franchise history (since 1900), tied with the A's for the fourth-most appearances all-time behind the Yankees (52), Dodgers (30) and Cardinals (28)...it was the 12th postseason appearance in SF-era history (since 1958). WILD CARD NOTES: The Giants and Mets faced one another in the one-game wild-card playoff, which was added to the MLB postseason in 2012...it was the second time the Giants played in this one-game playoff and the second time that ... GIANTS BY THE NUMBERS (2016): Series Record 23-20-9; Series Record, home 13-7-6; Series Record, road 10-13-3; Series Openers 24-28; Series Finales 29-23; Monday 7-10; Tuesday 13-12; Wednesday 10-15; Thursday 12-5; Friday 14-12; Saturday 17-9; Sunday 14-12; April 12-13; May 21-8; June ...

A Summer Wildfire: How the Greatest Debut in Baseball History Peaked and Dwindled Over the Course of Three Months

The Report committee for Colin Thomas Reynolds Certifies that this is the approved version of the following report: A Summer Wildfire: How the greatest debut in baseball history peaked and dwindled over the course of three months APPROVED BY SUPERVISING COMMITTEE: Co-Supervisor: Tracy Dahlby Co-Supervisor: Bill Minutaglio Dave Sheinin A Summer Wildfire: How the greatest debut in baseball history peaked and dwindled over the course of three months by Colin Thomas Reynolds, B.A. Report Presented to the Faculty of the Graduate School of the University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Master of Arts The University of Texas at Austin May, 2011 To my parents, Lyn & Terry, without whom, none of this would be possible. Thank you. A Summer Wildfire: How the greatest debut in baseball history peaked and dwindled over the course of three months by Colin Thomas Reynolds, M.A. The University of Texas at Austin, 2011 SUPERVISORS: Tracy Dahlby & Bill Minutaglio The narrative itself is an ageless one, a fundamental Shakespearean tragedy in its progression. A young man is deemed invaluable and exalted by the public. The hero is cast into the spotlight and bestowed with insurmountable expectations. But the acclamations and pressures are burdensome and the invented savior fails to fulfill the prospects once imagined by the public. He is cast aside, disregarded as a symbol of failure or one deserving of pity. It’s the quintessential tragedy of a fallen hero. The protagonist of this report is Washington Nationals pitcher Stephen Strasburg, who enjoyed a phenomenal rookie season before it ended abruptly due to a severe elbow injury.

Former Mariner Guardado Takes on Autism | Seattle Mariners Insider - the News Tribune 2/5/12 9:00 AM

Former Mariner Guardado takes on autism | Seattle Mariners Insider - The News Tribune. Sunday, February 5, 2012 - Tacoma, WA. Post by Larry Larue / The News Tribune on Jan. 13, 2012 at 8:16 am. When he closed games for the Seattle Mariners from 2004-2006, Eddie Guardado was serious enough to save 59 games while – off the field – terrorizing teammates with a penchant for practical jokes. Now retired, the affable lefty and wife Lisa have a new cause: autism. It’s one that hits close to home, because their daughter Ava was diagnosed with it in 2007. Living in Southern California, the Guardados have established a foundation with a more immediate goal than finding a cure. “These kids can’t wait 10 years for the research. We have to use what works now … the earlier the better,” Guardado told Teryl Zarnow of the Orange County Register. The Guardados donated $27,000 to buy i-Pads for families with autistic children, and have organized a Stars & Strikes celebrity bowling tournament Jan.

Topps Heritage SP[1]

Topps Heritage Short Prints and Inserts 2001 Topps Heritage Short Prints 8 ‐ Ramiro Mendoza (Black Back) 18 ‐ Roger Cedeno (Red Back) 19 ‐ Randy Velarde (Red Back) 28 ‐ Randy Wolf (Black Back) 34 ‐ Javy Lopez (Black Back) 35 ‐ Aubrey Huff (Black Back) 36 ‐ Wally Joyner (Black Back) 37 ‐ Magglio Ordonez (Black Back) 39 ‐ Mariano Rivera (Black Back) 40 ‐ Andy Ashby (Black Back) 41 ‐ Mark Buehrle (Black Back) 42 ‐ Esteban Loaiza (Red Back) 43 ‐ Mark Redman (Red Back) (2) 44 ‐ Mark Quinn (Red Back) 44 ‐ Mark Quinn (Black Back) 45 ‐ Tino Martinez (Red Back) 46 ‐ Joe Mays (Red Back) 47 ‐ Walt Weiss (Red Back) 50 ‐ Richard Hidalgo (Red Back) 51 ‐ Orlando Hernandez (Red Back) 53 ‐ Ben Grieve (Red Back) 54 ‐ Jimmy Haynes (Red Back) 55 ‐ Ken Caminiti (Red Back) 56 ‐ Tim Salmon (Red Back) 57 ‐ Andy Pettitte (Red Back) 59 ‐ Marquis Grissom (Red Back) 62 ‐ Miguel Tejada (Red Back) 66 ‐ Cliff Floyd (Red Back) 72 ‐ Andruw Jones (Red Back) 403 ‐ Mike Bordick SP Classic Renditions CR1 ‐ Mark McGwire CR5 ‐ Chipper Jones CR6 ‐ Pat Burrell CR8 ‐ Manny Ramirez 2002 Topps Heritage Short Prints 53 ‐ Alex Rodriguez SP 244 ‐ Barry Bonds SP 368 ‐ Rafael Palmeiro SP 370 ‐ Jason Giambi SP 373 ‐ Todd Helton SP 374 ‐ Juan Gonzalez SP 377 ‐ Tony Gwynn SP 383 ‐ Ramon Ortiz SP 384 ‐ John Rocker SP 394 ‐ Terrence Long SP 395 ‐ Travis Lee SP 396 ‐ Earl Snyder SP Classic Renditions CR‐2 ‐ Brian Giles CR‐3 ‐ Roger Cedeno CR‐8 ‐ Jimmy Rollins (2) CR‐10 ‐ Shawn Green (2) 2003 Topps Heritage Short Prints / Variations 156 ‐ Randall Simon (Old Logo SP) 170 ‐ Andy Marte SP 375 ‐ Ken Griffey Jr.

Mariners Game Notes • Sunday • September 9, 2012 • Vs

GAME INFORMATION Safeco Field • PO Box 4100 • Seattle, WA 98194 • TEL: 206.346.4000 • FAX: 206.346.4000 • www.mariners.com Sunday, September 9, 2012 OAKLAND ATHLETICS (78-60) at SEATTLE MARINERS (67-73) Safeco Field LH Tommy Milone (11-10, 3.94) vs. LH Jason Vargas (14-9, 3.80) Game #141 (67-73) Home #72 (36-35) UPCOMING PROBABLE PITCHERS…all times PDT… Day Date Opp. Time Mariners Pitcher Opposing Pitcher TV Monday Sept. 10 -- Travel Day to Toronto -- Tuesday Sept. 11 @TOR 4:07 pm RH Erasmo Ramirez (0-2, 3.69) vs. RH Brandon Morrow (8-5, 3.42) ROOT Wednesday Sept. 12 @TOR 4:07 pm RH Kevin Millwood (5-12, 4.27) vs. LH Ricky Romero (8-13, 5.85) ROOT Thursday Sept. 13 @TOR 4:07 pm RH Felix Hernandez (13-7, 2.67) vs. RH Henderson Alvarez (8-12, 4.95) ROOT All games broadcast on 710 ESPN Seattle & Mariners Radio Network TODAY’S TILT…the Mariners conclude a 3-team, 10-day homestand with the finale of a three-game series vs. the A’s…opened the homestand losing 2 of 3 vs. the Angels, but took 2 of 3 against Boston…will travel Monday to begin a 6-game trip at Toronto (3 G) and Texas (3 G)…today’s game will be televised on ROOT Sports in HD and broadcast live on 770 AM KTTH & the Mariners Radio Network. SCHEDULE REMINDER A reminder that today’s game will be broadcast on 770 AM and the Mariners radio network…(ESPN 710

BASEBALL BOOKS FICTION J GIF All the Way Home by Patricia Reilly Giff, 2001 It’s 1941 in New York and Brick and Mariel Become Friends in an Unusual Turn of Events

BASEBALL BOOKS FICTION J GIF All the Way Home by Patricia Reilly Giff, 2001 It’s 1941 in New York and Brick and Mariel become friends in an unusual turn of events. A common love of baseball helps form their friendship. Brick comes to live with Mariel and her Mom when the farm he lives on suffers a terrible fire. Mariel, although she loves her mother dearly, wants to find her birth mother. They help each other to “find their way home.” J GUT Mickey & Me by Dan Gutman, 2003 Thirteen year‐old Joe Stoshack is like most other boys his age who play little league, except he can travel through time with the help of his baseball cards. When Joe’s dad gives him his prized Mickey Mantle rookie card, Joe is to go and warn Mickey Mantle about an upcoming injury. However, his cousin plays a trick on him, and Joe doesn’t end up in 1951, where he thinks he will. If you like this book, try any of the others in this “Baseball Card Adventure” series. J HOL Penny from Heaven by Jennifer L. Holm (2007 Newbery Honor), 2006 It is 1953, and Penny lives in New Jersey with her Mom and grandparents. Her dad’s big Italian family lives a few blocks away. Her dad died when she was a baby, and no one talks about him at all! Enjoy Penny’s day to day activities with her cousin Frankie as they get into trouble over the summer. Find out what happened to her dad and how she finds out.

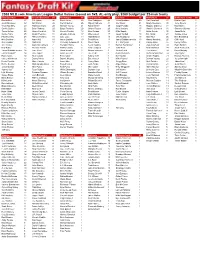

2008 MLB.Com American League Dollar Values (Based on 5X5, AL

2008 MLB.com American League Dollar Values (based on 5x5, AL-only play, $260 budget per 23-man team)

| First Basemen $$ | Second Basemen $$ | Shortstops $$ | Third Basemen $$ | Catchers $$ | Outfielders $$ | Outfielders (cont.) $$ |
| --- | --- | --- | --- | --- | --- | --- |
| David Ortiz* 37 | B.J. Upton 28 | Derek Jeter 26 | Alex Rodriguez 48 | Victor Martinez 29 | Carl Crawford 37 | Marlon Byrd 3 |
| Justin Morneau 32 | Ian Kinsler 26 | Carlos Guillen 26 | Miguel Cabrera 41 | Joe Mauer 24 | Grady Sizemore 36 | Jerry Owens 3 |
| Victor Martinez 29 | Robinson Cano 25 | Michael Young 21 | Adrian Beltre 25 | Jorge Posada 18 | Magglio Ordonez 31 | Matt Stairs 3 |
| Carlos Guillen 26 | Brian Roberts 25 | Edgar Renteria 20 | Chone Figgins 23 | Kenji Johjima 15 | Vladimir Guerrero 31 | Shannon Stewart 3 |
| Travis Hafner 25 | Howie Kendrick 15 | Jhonny Peralta 18 | Alex Gordon 20 | Mike Napoli 11 | Ichiro Suzuki 30 | Jason Botts 3 |
| Carlos Pena 25 | Dustin Pedroia 14 | Orlando Cabrera 17 | Mike Lowell 17 | Jason Varitek 10 | B.J. Upton 28 | Jacque Jones 2 |
| Paul Konerko 24 | Placido Polanco 14 | Julio Lugo 14 | Hank Blalock 14 | Ivan Rodriguez 10 | Alex Rios 26 | Ben Broussard 2 |
| Nick Swisher 21 | Aaron Hill 13 | Jason Bartlett 10 | Scott Rolen 13 | Jarrod Saltalamacchia 10 | Manny Ramirez 26 | Cliff Floyd 2 |
| Alex Gordon 20 | Mark Ellis 12 | Yuniesky Betancourt 8 | Melvin Mora 12 | A.J. Pierzynski 9 | Bobby Abreu 25 | Kenny Lofton 2 |
| Jim Thome* 18 | Asdrubal Cabrera 7 | Brendan Harris 5 | Kevin Youkilis 12 | Ramon Hernandez 8 | Gary Sheffield 24 | Ryan Raburn 1 |
| Billy Butler 15 | Brendan Harris 5 | Bobby Crosby 3 | Evan Longoria 11 | John Buck 6 | Nick Markakis 24 | Scott Podsednik 1 |
| Jarrod Saltalamacchia 14 | Jose Vidro 3 | David Eckstein 3 | Akinori Iwamura 9 | Gerald Laird 5 | Torii Hunter 24 | David Murphy 1 |
| Frank Thomas* 14 | Jose Lopez 3 | Adam Everett 2 | Joe Crede 8 | Dioner Navarro 5 | Curtis Granderson 24 | Jay Payton 1 |
| Ryan Garko 14 | Alexi Casilla 3 | Erick Aybar 2 | Aubrey Huff 8 | Kurt Suzuki 5 | Chone Figgins 23 | Marcus Thames 1 |
| Casey Kotchman 13 | Danny Richar 3 | Donnie Murphy 2 | Eric Chavez 7 | Mike Piazza* 3 | Delmon Young 22 | Joey Gathright 1 |
| Kevin Youkilis 12 | Maicer Izturis 2 | Juan Uribe 2 | Casey Blake 7 | Gregg Zaun 3 | Nick Swisher 21 | Adam Lind 1 |
| Richie Sexson 9 | Mark Grudzielanek 2 | Tony Pena Jr. | | | | |

Bulletin of the Center for Children's Books

ILLINOIS UNIVERSITY OF ILLINOIS AT URBANA-CHAMPAIGN PRODUCTION NOTE University of Illinois at Urbana-Champaign Library Large-scale Digitization Project, 2007. BULLETIN THE UNIVERSITY OF CHICAGO LIBRARY * CHILDREN'S BOOK CENTER Volume IX October, 1955 Number 2 EXPLANATION OF CODE SYMBOLS USED WITH ANNOTATIONS R Recommended M Marginal book that is so slight in content or has so many weaknesses in style or format that it barely misses an NR rating. The book should be given careful consideration before purchase. NR Not recommended. Ad For collections that need additional material on the subject. SpC Subject matter or treatment will tend to limit the book to specialized collections. SpR A book that will have appeal for the unusual reader only. Recommended for the special few who will read it. Maryland, were living in constant fear of an attack from the British who were in control of Chesapeake Bay. Bob Pennington and his friend, Jeremy Caulk, were anxious to have a part in the war even though their families considered them too young to enlist, even as drummer boys. Their chance came the night when the British did attack and the boys were able to fool the enemy into firing into the tree-tops, thus saving most of the houses in the town. NR 5-7 Anderson, Bertha C. Eric Duffy, American; illus. by Lloyd Coe. Little, 1955. '7 7 p. $2.75. Eric Duffy, a young Irish orphan, came to New York as an indentured servant, partly to escape from his scolding aunt and partly be-

BASEBALL – GLOSSARY 2018 A, AA, AAA: The Three Leagues in Which

BASEBALL – GLOSSARY 2018 A A, AA, AAA: The three leagues into which minor professional baseball is organized; the teams are normally affiliated with Major League clubs. Aboard (Abordo): runner on base; "on board" in nautical jargon. Ace: the team's best pitcher, normally the starter. A 5 $ ride in a yellow cab: a taxi ride; a very long home run. Alligator mouth and humming bird ass: “alligator mouth and hummingbird backside”: a player who talks a lot but lacks the courage to act. Angle of delivery: the angle formed by the pitcher's arm relative to the perpendicular of the body when delivering a pitch overhand, three-quarters, sidearm or underhand. Arm: arm. Artificial turf: carpet, artificial grass; synthetic field. Assist (Asistencia): the passing of the ball between fielders to record the out. Assock: base. Airhead: someone with air in place of a brain; empty-headed, scatterbrained. Arms: arms; a team's pitching staff as a whole. A team “with good arms” is very probably competitive. Around the horn: a double play, or an attempted double play, third to second to first. The expression derives from the circumnavigation of the African continent via the Horn of Africa (a triangular peninsula on the east side of the African continent that juts out, in the shape of a horn, into the Indian Ocean and the Gulf of Aden). Aspirin tablet: a pitch so fast that it makes the ball look as small as a tablet when it reaches the plate. A pitch may also be called a “pill”, “smoke”, hot, very hot, gasoline and the like. At ‘em ball: a pitch that produces a batted ball hit directly at an infielder; said of a lucky pitcher.

2021 Seattle Mariners Media Guide

INFORMATION BOX INDEX: 1-0 Games, 165; 5-Hit Mariners, 264; 7-RBI Mariners, 203; 15-Win Seasons, 112; 30-Home Run Seasons, 139; 100 RBI Seasons, 45; All-Stars, By Position, 187; All-Stars, Most in a Season, 186; Attendance, Top 10, 214; Baseball America Top-100 Prospects, 284; Baseball Museum of the Pacific Northwest, 298; Best Record (by Month), 197; Birthdays, 157; Longest Mariners Games, Innings, 99; Low Hit Games, 294; Manager, All-Time Records, 25; Mariner Moose, 136; Mariners Firsts, 145; Mariners Hall of Fame, Criteria, 179; Minor League All-Time Affiliates, 237; Most Players, Pitchers Used, 115; Multi-Hit Games, Consecutive, 164; Naming the Mariners, 37; No-Hitters, Mariners, 101; No-Hitters, Opponents, 112; Opening

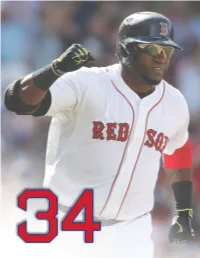

David Ortiz Number Retirement.Pdf

ALL-TIME MLB RANKINGS

| GAMES PLAYED | HITS | RUNS SCORED | RBI | WALKS |
| --- | --- | --- | --- | --- |
| 75. Chili Davis 2,436 | 96. Garret Anderson 2,529 | 80. Omar Vizquel 1,445 | 13. Mel Ott 1,864 | 32. Jason Giambi 1,366 |
| 76. Harmon Killebrew 2,435 | 97. Heinie Manush 2,524 | 81. Steve Finley 1,443 | 14. Carl Yastrzemski 1,844 | 33. Rafael Palmeiro 1,353 |
| 77. Roberto Clemente 2,433 | 98. Todd Helton 2,519 | 82. Adrian Beltre 1,437 | 15. Ted Williams 1,839 | 34. Willie McCovey 1,345 |
| 78. Willie Davis 2,429 | 99. Joe Morgan 2,517 | 83. Harry Hooper 1,429 | 16. Ken Griffey Jr. 1,836 | 35. Alex Rodriguez 1,338 |
| 79. Bobby Abreu 2,425 | 100. Buddy Bell 2,514 | 84. Dummy Hoy 1,426 | 17. Rafael Palmeiro 1,835 | 36. Todd Helton 1,335 |
| 80. Luke Appling 2,422 | 101. Mickey Vernon 2,495 | 85. Joe Kelley 1,425 | 18. Dave Winfield 1,833 | 37. Eddie Murray 1,333 |
| 81. Zack Wheat 2,410 | 102. Fred McGriff 2,490 | Jim O’Rourke 1,425 | 19. Manny Ramirez 1,831 | 38. Tim Raines 1,330 |
| 82. Mickey Vernon 2,409 | 103. Bill Dahlen 2,482 | 87. Rod Carew 1,424 | 20. Al Simmons 1,827 | 39. Manny Ramirez 1,329 |
| 83. DAVID ORTIZ 2,408 | 104. DAVID ORTIZ 2,472 | 88. Jimmy Rollins 1,421 | 21. Frank Robinson 1,812 | 40. DAVID ORTIZ 1,319 |
| 84. Buddy Bell 2,405 | Ted Simmons 2,472 | 89. DAVID ORTIZ 1,419 | 22. DAVID ORTIZ 1,768 | Tony Phillips 1,319 |

DOUBLES HOME RUNS EXTRA-BASE HITS TOTAL BASES TIMES ON BASE 1.

Sports 10/4 Pg. 8

FREE PRESS Page 10 Colby Free Press Friday, October 4, 2002 SPORTS Keeping the ball in play Golf team has good outing at Ulysses tourney By DARREL PATTILLO Colby Free Press The Colby Eagle golf team was in Ulysses Tuesday for the junior varsity/varsity tournament. The Lady Eagle golfers placed seventh out of 12 teams. “We had our best tournament of the season from the stand point of scores posted,” coach Ray Higley said. “Three girls posted their lowest scores of the year. I’m pleased that the girls are getting better each week. This tournament was composed of most of the teams that will be in our regional tournament, and gave us an idea about where we stand at this point. “We definitely have more improvement to make in order to qualify for state.” The team will be in Lakin Saturday, and have one more regular season tournament (Syracuse, Oct. 10), before regionals on Oct. 14. Results from Ulysses: Individual Scores: (18 holes) Joanna Hawk 101, Lindsey Schafer 106, Sara Nally 109, Kelsey Strickler 112, Sly Cassidy 135, Regina Heier 139 Team Scores: Syracuse 370, Ulysses 386, Goodland 399, Lakin 402, Larned 402, Holcomb 423, Colby 428, Hugoton 456, Scott City 462, Sublette 474, Garden City JV 475, Dodge City JV 477. Medalists: Charity Maune, Syracuse 83, Melissa Brown, Sublette 88, Kayla Crone, Lakin 89, Jordon Lohfink, Holcomb 90, Maggie Welch, 90, Abby Trejo, Ulysses 93, Michelle Ridgway, Lakin 93, Mel Kreie, Ulysses 93, Jessica Osborn, Goodland 94, Brittany Seger, Ulysses 95 Cardinals take second straight game from Arizona PHOENIX (AP) — Curt Schilling Rolen.