Quantifying the Impact of Shutdown Techniques for Energy-Efficient

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Shutdown Script for Retropie

Use pin number 5 & 14 for “Shutdown”

1. Update RetroPie:
   • sudo apt-get update
2. Upgrade RetroPie:
   • sudo apt-get upgrade
3. Install Python:
   • sudo apt-get install python-dev
   • sudo apt-get install python3-dev
   • sudo apt-get install gcc
   • sudo apt-get install python-pip
4. Next you need to get RPi.GPIO:
   • wget https://pypi.python.org/packages/source/R/RPi.GPIO/RPi.GPIO-0.5.11.tar.gz
5. Extract the packages:
   • sudo tar -zxvf RPi.GPIO-0.5.11.tar.gz
6. Move into the newly created directory:
   • cd RPi.GPIO-0.5.11
7. Install the module by doing:
   • sudo python setup.py install
   • sudo python3 setup.py install
8. Create a directory to hold your scripts:
   • mkdir /home/pi/scripts
9. Call our script shutdown.py (it is written in Python). Create and edit the script by doing:
   • sudo nano /home/pi/scripts/shutdown.py

The content of the script (paste it into the blank area):

    #!/usr/bin/python
    import RPi.GPIO as GPIO
    import time
    import subprocess

    # we will use the pin numbering to match the pins on the Pi, instead of the
    # GPIO pin outs (makes it easier to keep track of things)
    GPIO.setmode(GPIO.BOARD)

    # use the same pin that is used for the reset button (one button to rule them all!)
    GPIO.setup(5, GPIO.IN, pull_up_down = GPIO.PUD_UP)

    oldButtonState1 = True

    while True:
        # grab the current button state
        buttonState1 = GPIO.input(5)

        # check to see if button has been pushed
        if buttonState1 != oldButtonState1 and buttonState1 == False:
            subprocess.call("shutdown -h now", shell=True,
                            stdout=subprocess.PIPE, stderr=subprocess.PIPE)

        oldButtonState1 = buttonState1
        time.sleep(.1)

Press Ctrl+X, then Y and Enter.

10. -
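The polling loop in the script above reacts only when the pin goes from high to low, which is what happens when the button is pressed while the internal pull-up is enabled. A minimal sketch of that edge detection, separated from the hardware so it can be tried without a Pi, might look as follows. The function name `falling_edges` and the simulated readings are hypothetical and not part of the original script.

```python
def falling_edges(samples, initial=True):
    """Return the indices at which the input goes from high to low."""
    previous = initial
    edges = []
    for index, current in enumerate(samples):
        # Same test as the original loop: the state changed and is now low.
        if current != previous and not current:
            edges.append(index)
        previous = current
    return edges


# Simulated readings, one per 0.1 s poll (hypothetical data):
# released, released, pressed, pressed, released, pressed.
readings = [True, True, False, False, True, False]
print(falling_edges(readings))
```

Holding the button down produces a single event rather than one per poll, which is why the original script remembers the previous state.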

Adding a Shutdown Button to the Raspberry Pi B+ Version 1

Created by ipv1 on Aug 4, 2015 3:05 AM. Last modified by ipv1 on Aug 18, 2015 9:52 AM.

Contents: Introduction; What do you need?; Step 1. Setup the RPi; Step 2. Connecting the button; Step 3. Writing a Python Script; Step 4. Adding it to startup; Step 5. More to do

Introduction

For a beginner in the world of Raspberry Pi, there are a number of projects that can become the start of something big. In this article, I discuss one such simple project: adding a button that can be used to shut down the Raspberry Pi with a bit of software tinkering. I wrote a similar article in 2013 on my blog “embeddedcode.wordpress.com”, and it got its share of attention, since a lot of people starting out with a single board computer kept looking for a power button. Additionally, those who wanted a headless system needed a way to shut down the computer without the mess of connecting to it over the network or attaching a monitor and keyboard to it. In this article, I revisit the tutorial on how to add a shutdown button while trying to explain the workings, and perhaps beginners will find it amusing to find more things to do with this little recipe.

What do you need?

Here is a basic bill of materials required for this exercise.

Shutdown Policies with Power Capping for Large Scale Computing Systems Anne Benoit, Laurent Lefèvre, Anne-Cécile Orgerie, Issam Raïs

To cite this version: Anne Benoit, Laurent Lefèvre, Anne-Cécile Orgerie, Issam Raïs. Shutdown Policies with Power Capping for Large Scale Computing Systems. Euro-Par: International European Conference on Parallel and Distributed Computing, Aug 2017, Santiago de Compostela, Spain. pp.134 - 146, 10.1109/COMST.2016.2545109. hal-01589555

HAL Id: hal-01589555 https://hal.archives-ouvertes.fr/hal-01589555 Submitted on 18 Sep 2017

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Shutdown policies with power capping for large scale computing systems

Anne Benoit (1), Laurent Lefèvre (1), Anne-Cécile Orgerie (2), and Issam Raïs (1)
(1) Univ. Lyon, Inria, CNRS, ENS de Lyon, Univ. Claude-Bernard Lyon 1, LIP
(2) CNRS, IRISA, Rennes, France

Abstract

Large scale distributed systems are expected to consume huge amounts of energy. To solve this issue, shutdown policies constitute an appealing approach able to dynamically adapt the resource set to the actual workload. However, multiple constraints have to be taken into account for such policies to be applied on real infrastructures, in particular the time and energy cost of shutting down and waking up nodes, and power capping to avoid disruption of the system.
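The abstract points out that shutting a node down and waking it up costs both time and energy. One simple way to reason about that trade-off is to compare the energy of idling through a gap with the energy of a full off/on cycle. The sketch below uses an illustrative model assumed here, not the policy from the paper, and it ignores power capping. All parameter names and numbers are hypothetical.

```python
def shutdown_saves_energy(gap_s, p_idle_w, p_off_w, t_off_s, t_on_s, e_off_j, e_on_j):
    """Return True if switching off during an idle gap uses less energy than idling.

    Assumed model: the node needs t_off_s seconds to shut down and t_on_s
    seconds to boot, these transitions cost e_off_j and e_on_j joules, and the
    node draws p_off_w watts while it is off for the rest of the gap.
    """
    transition_s = t_off_s + t_on_s
    if gap_s < transition_s:
        # The gap is too short to complete a full off/on cycle.
        return False
    energy_idle = p_idle_w * gap_s
    energy_cycle = e_off_j + e_on_j + p_off_w * (gap_s - transition_s)
    return energy_cycle < energy_idle


# Hypothetical node: 100 W idle, 5 W off, 10 s to shut down, 150 s to boot.
params = dict(p_idle_w=100.0, p_off_w=5.0, t_off_s=10.0, t_on_s=150.0,
              e_off_j=1_000.0, e_on_j=20_000.0)
print(shutdown_saves_energy(gap_s=200.0, **params))
print(shutdown_saves_energy(gap_s=600.0, **params))
```

With these made-up numbers the short gap is not worth a shutdown while the long one is, which illustrates why transition costs have to be part of any shutdown decision.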

Cloud Workbench a Web-Based Framework for Benchmarking Cloud Services

Bachelor, August 12, 2014. Cloud WorkBench: A Web-Based Framework for Benchmarking Cloud Services. Joel Scheuner of Muensterlingen, Switzerland (10-741-494), supervised by Prof. Dr. Harald C. Gall, Philipp Leitner, Jürgen Cito. software evolution & architecture lab

Author: Joel Scheuner, [email protected] Project period: 04.03.2014 - 14.08.2014 Software Evolution & Architecture Lab, Department of Informatics, University of Zurich

Acknowledgements

This bachelor thesis constitutes the last milestone on the way to my first academic graduation. I would like to thank all the people who accompanied and supported me in the last four years of study and work in industry, paving the way to this thesis. My personal thanks go to my parents for supporting me in many ways. The decision to choose a complex topic in an area where I had no prior personal experience, neither theoretical nor technological, made the past four and a half months challenging, demanding, and work-intensive, but also very educational, which is what I will remember fondly. Regarding this thesis, I would like to offer special thanks to Philipp Leitner, who assisted me during the whole process and gave valuable advice. Moreover, I want to thank Jürgen Cito for his mainly technology-related recommendations, Rita Erne for the time she spent with me on language review sessions, and Prof. Harald Gall for giving me the opportunity to write this thesis at the Software Evolution & Architecture Lab at the University of Zurich and for providing funding and infrastructure to realize this thesis.

LAB MANUAL for Computer Network

CSE-310 F Computer Network Lab
L T P: - - 3
Class Work: 25 Marks
Exam: 25 Marks
Total: 50 Marks

This course provides students with hands-on training in the design, troubleshooting, modeling and evaluation of computer networks. In this course, students experiment in a real test-bed networking environment and learn about network design and troubleshooting topics and tools such as: network addressing, Address Resolution Protocol (ARP), basic troubleshooting tools (e.g. ping, ICMP), IP routing (e.g. RIP), route discovery (e.g. traceroute), TCP and UDP, IP fragmentation and many others. Students will also be introduced to network modeling and simulation, and they will have the opportunity to build some simple networking models using the tool and perform simulations that will help them evaluate their design approaches and expected network performance.

S.No  Experiment
1  Study of different types of Network cables and practically implement the cross-wired cable and straight-through cable using crimping tool.
2  Study of Network Devices in Detail.
3  Study of network IP.
4  Connect the computers in Local Area Network.
5  Study of basic network command and Network configuration commands.
6  Configure a Network topology using packet tracer software.
7  Configure a Network topology using packet tracer software.
8  Configure a Network using Distance Vector Routing protocol.
9  Configure Network using Link State Vector Routing protocol.

Hardware and Software Requirement
Hardware Requirement: RJ-45 connector, Crimping Tool, Twisted pair Cable
Software Requirement: Command Prompt and Packet Tracer

EXPERIMENT-1

Aim: Study of different types of Network cables and practically implement the cross-wired cable and straight-through cable using crimping tool.

Maintenance Commands for Avaya Communication Manager, Media Gateways and Servers

Maintenance Commands for Avaya Communication Manager, Media Gateways and Servers 03-300431 Issue 4 January 2008 © 2008 Avaya Inc. All Rights Reserved. Notice While reasonable efforts were made to ensure that the information in this document was complete and accurate at the time of printing, Avaya Inc. can assume no liability for any errors. Changes and corrections to the information in this document may be incorporated in future releases. For full legal page information, please see the complete document, Avaya Legal Page for Software Documentation, Document number 03-600758. To locate this document on the website, simply go to http://www.avaya.com/support and search for the document number in the search box. Documentation disclaimer Avaya Inc. is not responsible for any modifications, additions, or deletions to the original published version of this documentation unless such modifications, additions, or deletions were performed by Avaya. Customer and/or End User agree to indemnify and hold harmless Avaya, Avaya's agents, servants and employees against all claims, lawsuits, demands and judgments arising out of, or in connection with, subsequent modifications, additions or deletions to this documentation to the extent made by the Customer or End User. Link disclaimer Avaya Inc. is not responsible for the contents or reliability of any linked Web sites referenced elsewhere within this documentation, and Avaya does not necessarily endorse the products, services, or information described or offered within them. We cannot guarantee that these links will work all of the time and we have no control over the availability of the linked pages. Warranty Avaya Inc. -

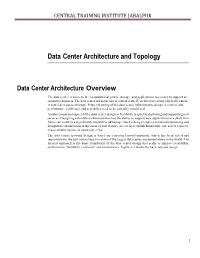

Data Center Architecture and Topology

CENTRAL TRAINING INSTITUTE JABALPUR

Data Center Architecture Overview

The data center is home to the computational power, storage, and applications necessary to support an enterprise business. The data center infrastructure is central to the IT architecture, from which all content is sourced or passes through. Proper planning of the data center infrastructure design is critical, and performance, resiliency, and scalability need to be carefully considered. Another important aspect of the data center design is flexibility in quickly deploying and supporting new services. Designing a flexible architecture that has the ability to support new applications in a short time frame can result in a significant competitive advantage. Such a design requires solid initial planning and thoughtful consideration in the areas of port density, access layer uplink bandwidth, true server capacity, and oversubscription, to name just a few.

The data center network design is based on a proven layered approach, which has been tested and improved over the past several years in some of the largest data center implementations in the world. The layered approach is the basic foundation of the data center design that seeks to improve scalability, performance, flexibility, resiliency, and maintenance. Figure 1-1 shows the basic layered design.

[Figure 1-1: Basic Layered Design. Labels: Campus Core, Core, Aggregation, Access; links: 10 Gigabit Ethernet, Gigabit Ethernet or Etherchannel, Backup]

The layers of the data center design are the core, aggregation, and access layers. These layers are referred to extensively throughout this guide and are briefly described as follows:

• Core layer: Provides the high-speed packet switching backplane for all flows going in and out of the data center.

Command Reference Guide for Cisco Prime Infrastructure 3.9

Command Reference Guide for Cisco Prime Infrastructure 3.9 First Published: 2020-12-17 Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS" WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

Architecting a Vmware Vsphere Compute Platform for Vmware Cloud Providers

VMware vCloud® Architecture Toolkit™ for Service Providers. Architecting a VMware vSphere® Compute Platform for VMware Cloud Providers™. Version 2.9, January 2018. Martin Hosken

© 2018 VMware, Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. This product is covered by one or more patents listed at http://www.vmware.com/download/patents.html. VMware is a registered trademark or trademark of VMware, Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies. VMware, Inc. 3401 Hillview Ave Palo Alto, CA 94304 www.vmware.com

Contents
Overview
Scope
Use Case Scenario
3.1 Service Definition – Virtual Data Center Service
3.2 Service Definition – Hosted Private Cloud Service
3.3 Integrated Service Overview – Conceptual Design

Pi-Uptimeups How to Use Python

How to use the Python demo program for Pi-UpTimeUPS and Pi-Zero-UpTime (PiZ-UpTime) boards

(The >> line shows the commands to execute.) You need to open a command line window to execute these scripts.

Step 1
Make sure you have the latest version of the operating system.
>> sudo apt-get update
>> sudo apt-get dist-upgrade
(Skip this step if you DO NOT want to upgrade to Raspbian STRETCH. Doing this will automatically upgrade you to STRETCH. Say Yes to installing updates/upgrades.)
>> sudo apt-get upgrade
>> sudo apt-get autoclean
If the dist-upgrade went through with updates, please reboot the Pi to ensure you have the latest OS and updates.

Step 2
In the home directory (or some other location), create a separate folder.
>> mkdir some-folder-name (for example, mkdir uptime)
>> cd some-folder-name (for example, cd uptime)
Use wget to download the Python script from the alchemy-power web site.
>> wget http://alchemy-power.com/wp-content/uploads/2017/06/GPIO-shutdown-sample.zip
This creates the file GPIO-shutdown-sample.zip.

Step 3
Unzip the GPIO-shutdown-sample.zip file using the unzip command.
>> unzip GPIO-shutdown-sample.zip
This creates a GPIO-shutdown-sample.py file.

Step 4
Run the program locally from the command line, from the directory where the file GPIO-shutdown-sample.py is located.
>> sudo python GPIO-shutdown-sample.py
sudo privileges are needed so that when the battery runs low, the software can execute a proper shutdown.

© 2017 Alchemy Power Inc., All Rights reserved.

A Dynamic Power Management Schema for Multi-Tier Data Centers

A Dynamic Power Management Schema for Multi-Tier Data Centers. By Aryan Azimzadeh. April, 2016. Director of Thesis: Dr. Nasseh Tabrizi. Major Department: Computer Science

An issue of great concern as it relates to global warming is power consumption and the efficient use of computers, especially in large data centers. Data centers have an important role in IT infrastructures because of their huge power consumption. This thesis explores the sleep state of data center servers under specific conditions such as setup time, identifies the optimal number of servers, and examines their potential to greatly increase energy efficiency in data centers. We use a dynamic power management policy based on a mathematical model. Our new methodology is based on the optimal number of servers required in each tier while increasing the servers’ setup time after sleep mode to reduce the power consumption. The Reactive approach is used to prove the validity of the results and the energy efficiency by calculating the average power consumption of each server under a specific sleep mode and setup time. We introduce a new methodology that uses average power consumption to calculate the Normalized-Performance-Per-Watt in order to evaluate the power efficiency. Our results indicate that the proposed schema is beneficial for data centers with high setup time.
A Thesis Presented To the Faculty of the Department of Computer Science, East Carolina University, In Partial Fulfillment of the Requirements for the Degree Master of Science in Software Engineering. By Aryan Azimzadeh. April, 2016. © Aryan Azimzadeh, 2016

APPROVED BY:
DIRECTOR OF THESIS: Nasseh Tabrizi, PhD
COMMITTEE MEMBER: Mark Hills, PhD
COMMITTEE MEMBER: Venkat N.

Freebsd Command Reference

Command structure

Each line you type at the Unix shell consists of a command optionally followed by some arguments, e.g. ls -l /etc/passwd, where ls is the command (cmd), -l is arg1 and /etc/passwd is arg2. Almost all commands are just programs in the filesystem, e.g. "ls" is actually /bin/ls. A few are built in to the shell. All commands and filenames are case-sensitive. Unless told otherwise, the command will run in the "foreground" - that is, you won't be returned to the shell prompt until it has finished. You can press Ctrl + C to terminate it.

Colour code

command [args...]: Command which shows information
command [args...]: Command which modifies your current session or system settings, but changes will be lost when you exit your shell or reboot
command [args...]: Command which permanently affects the state of your system

Getting out of trouble

^C (Ctrl-C): Terminate the current command
^U (Ctrl-U): Clear to start of line
reset, stty sane: Reset terminal settings. If in xterm, try Ctrl+Middle mouse button and select "Do Full Reset"
exit, logout: Exit from the shell
ESC :q! ENTER: Quit from vi without saving

Finding documentation

man cmd, man 5 cmd, man -a cmd: Show manual page for command "cmd". If a page with the same name exists in multiple sections, you can give the section number, or -a to show pages from all sections.
man -k str: Search for string "str" in the manual index
man hier: Description of directory structure
cd /usr/share/doc; ls, cd /usr/share/examples; ls: Browse system documentation and examples. Note especially /usr/share/doc/en/books/handbook/index.html
cd /usr/local/share/doc; ls, cd /usr/local/share/examples: Browse package documentation and examples
On the web: www.freebsd.org (includes handbook, searchable mailing list archives)

System status

Alt-F1 ..
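The command structure described at the start of this reference (a command followed by optional arguments) can be illustrated with a small sketch using Python's standard `shlex` and `shutil` modules. It only shows how such a line splits into words. It is not how any particular shell is implemented, and the example line is the one used in the reference.

```python
import shlex
import shutil

line = "ls -l /etc/passwd"

# Split the line into words the way a POSIX shell would: the first word is
# the command, the remaining words are its arguments.
command, *arguments = shlex.split(line)
print(command)    # ls
print(arguments)  # ['-l', '/etc/passwd']

# Most commands are programs in the filesystem. shutil.which reports where
# the program would be found on PATH, or None if no such program exists.
print(shutil.which(command))
```

The last line prints a system-dependent path, so no expected output is given for it.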