MAL519 Introduction to Computers and Programming Venue MZ

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Significant Figures Instructor: J.T., P 1

CSUS – CH 6A Significant Figures Instructor: J.T., P 1 In Scientific calculation, there are two types of numbers: Exact numbers (1 dozen eggs = 12 eggs), (100 cm = 1 meter)... Inexact numbers: Arise form measurements. All measured numbers have an associated Uncertainty. (Where: The uncertainties drive from the instruments, human errors ...) Important to know: When reporting a measurement, so that it does not appear to be more accurate than the equipment used to make the measurement allows. The number of significant figures in a measurement: The number of digits that are known with some degree of confidence plus the last digit which is an estimate or approximation. 2.53 0.01 g 3 significant figures Precision: Is the degree to which repeated measurements under same conditions show the same results. Significant Figures: Number of digits in a figure that express the precision of a measurement. Significant Figures - Rules Significant figures give the reader an idea of how well you could actually measure/report your data. 1) ALL non-zero numbers (1, 2, 3, 4, 5, 6, 7, 8, 9) are ALWAYS significant. 2) ALL zeroes between non-zero numbers are ALWAYS significant. 3) ALL zeroes which are SIMULTANEOUSLY to the right of the decimal point AND at the end of the number are ALWAYS significant. 4) ALL zeroes which are to the left of a written decimal point and are in a number >= 10 are ALWAYS significant. CSUS – CH 6A Significant Figures Instructor: J.T., P 2 Example: Number # Significant Figures Rule(s) 48,923 5 1 3.967 4 1 900.06 5 1,2,4 0.0004 (= 4 E-4) 1 1,4 8.1000 5 1,3 501.040 6 1,2,3,4 3,000,000 (= 3 E+6) 1 1 10.0 (= 1.00 E+1) 3 1,3,4 Remember: 4 0.0004 4 10 CSUS – CH 6A Significant Figures Instructor: J.T., P 3 ADDITION AND SUBTRACTION: Count the Number of Decimal Places to determine the number of significant figures. -

About Significant Figures

About Significant Figures TABLE OF CONTENTS About Significant Figures................................................................................................... 1 What are SIGNIFICANT FIGURES? ............................................................................ 1 Introduction......................................................................................................................... 1 Introduction..................................................................................................................... 1 Rules of Significant Figures................................................................................................ 1 Determining the Number of Significant Figures ............................................................ 1 Significant Figures and Scientific Notations .................................................................. 2 Significant Figures in Calculations..................................................................................... 2 Significant Figures in Calculations................................................................................. 2 Addition and Subtraction ............................................................................................ 2 Multiplication and Division ........................................................................................ 3 Combining Operations................................................................................................ 3 Logarithms and Anti-Logarithms .............................................................................. -

International System of Measurements

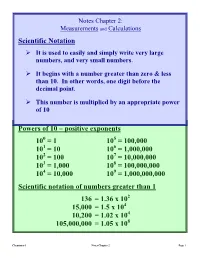

Notes Chapter 2: Measurements and Calculations Scientific Notation It is used to easily and simply write very large numbers, and very small numbers. It begins with a number greater than zero & less than 10. In other words, one digit before the decimal point. This number is multiplied by an appropriate power of 10 Powers of 10 – positive exponents 0 5 10 = 1 10 = 100,000 1 6 10 = 10 10 = 1,000,000 2 7 10 = 100 10 = 10,000,000 3 8 10 = 1,000 10 = 100,000,000 4 9 10 = 10,000 10 = 1,000,000,000 Scientific notation of numbers greater than 1 2 136 = 1.36 x 10 4 15,000 = 1.5 x 10 4 10,200 = 1.02 x 10 8 105,000,000 = 1.05 x 10 Chemistry-1 Notes Chapter 2 Page 1 Page 1 Powers of 10 – negative exponents 0 -4 1 10 = 1 10 = 0.0001 = /10,000 -1 1 -5 1 10 = 0.1 = /10 10 = 0.00001 = /100,000 -2 1 -6 1 10 = 0.01 = /100 10 = 0.000001 = /1,000,000 -3 1 -7 10 = 0.001 10 = 0.0000001 = /1,000 1 = /10,000,000 Scientific notation of numbers less than 1 -1 0.136 = 1.36 x 10 -2 0.0560 = 5.6 x 10 -5 0.0000352 = 3.52 x 10 -6 0.00000105 = 1.05 x 10 To Review: Positive exponents tells you how many decimal places you must move the decimal point to the right to get to the real number. -

Floating Point Computation (Slides 1–123)

UNIVERSITY OF CAMBRIDGE Floating Point Computation (slides 1–123) A six-lecture course D J Greaves (thanks to Alan Mycroft) Computer Laboratory, University of Cambridge http://www.cl.cam.ac.uk/teaching/current/FPComp Lent 2010–11 Floating Point Computation 1 Lent 2010–11 A Few Cautionary Tales UNIVERSITY OF CAMBRIDGE The main enemy of this course is the simple phrase “the computer calculated it, so it must be right”. We’re happy to be wary for integer programs, e.g. having unit tests to check that sorting [5,1,3,2] gives [1,2,3,5], but then we suspend our belief for programs producing real-number values, especially if they implement a “mathematical formula”. Floating Point Computation 2 Lent 2010–11 Global Warming UNIVERSITY OF CAMBRIDGE Apocryphal story – Dr X has just produced a new climate modelling program. Interviewer: what does it predict? Dr X: Oh, the expected 2–4◦C rise in average temperatures by 2100. Interviewer: is your figure robust? ... Floating Point Computation 3 Lent 2010–11 Global Warming (2) UNIVERSITY OF CAMBRIDGE Apocryphal story – Dr X has just produced a new climate modelling program. Interviewer: what does it predict? Dr X: Oh, the expected 2–4◦C rise in average temperatures by 2100. Interviewer: is your figure robust? Dr X: Oh yes, indeed it gives results in the same range even if the input data is randomly permuted . We laugh, but let’s learn from this. Floating Point Computation 4 Lent 2010–11 Global Warming (3) UNIVERSITY OF What could cause this sort or error? CAMBRIDGE the wrong mathematical model of reality (most subject areas lack • models as precise and well-understood as Newtonian gravity) a parameterised model with parameters chosen to fit expected • results (‘over-fitting’) the model being very sensitive to input or parameter values • the discretisation of the continuous model for computation • the build-up or propagation of inaccuracies caused by the finite • precision of floating-point numbers plain old programming errors • We’ll only look at the last four, but don’t forget the first two. -

Exact Numbers

Rules for Significant Figures Counting significant figures: 1. Nonzero integers . Nonzero integers are always counted as significant. For example, in the number 1.36, there are three significant figures. 2. Zeros . There are three different classes of zeros: a) Leading zeros are zeros that precede all the nonzero digits. Leading; zeros are never counted as significant. For example, in the number 0.0017, there are two significant figures, the 1 and the 7. The zeros are not significant because they simply indicate the position of the decimal. b) Captive zeros are zeros that fall between nonzero digits. Captive zeros are always counted as significant. For example, in the number 1006, there are four significant figures. c) Trailing zeros are zeros at the right end of the number. Trailing zeros are only significant if the number contains a decimal point. For example, the number 200 has only one significant figure, while the number 200. has three, and the number 200.00 has five significant figures. 3. Exact numbers . If a number was obtained by counting: 5 pennies, 10 apples, 2 beakers, then the number is considered to be exact. Exact numbers have an infinite number of significant figures. If a number is part of a definition then the number is also exact. For example, I inch is defined as exactly 2.54 centimeters. Thus in the statement .1 in. = 2.54 cm, neither the 1 nor the 2.54 limits the number of significant figures when its used in a calculation. Rules for counting significant figures also apply to numbers written in scientific notation. -

Significant Figures

SIGNIFICANT FIGURES UMERICAL DATA that are used to record Specimen Tem rature Nobservations or solve problems are seldom exact. (oE103) The numbers are generally rounded off and, conse- quently, are estimates of some true value, and the mathematical operations or assumptions involved in the calculations commonly are approximations. In numerical computations, no more than the necessary number of digits should be used; to report results with too many or too few digits may be misleading. To avoid surplus digits, numbers should be rounded off at the point where the figures cease to have real A consistent procedure should be followed in round- meaning. Conversely, the number of significant fig- ing off numbers to n significant figures. All digits to ures may be unnecessarily reduced by choosing the the right of the nth digit should be discarded, as illus- less meaningful of several possible methods of calcula- trated in the following six examples of rounded num- tion. Careful consideration, therefore, should be given bers, each of which has only three significant figures: to the significant digits and arithmetic involved in each measurement. Example Original number- -Rounded number The number of significant figures resulting from 1 --. 0.32891 0.329 any calculation involving simple arithmetic operations 2 - ----- -- 47,543 47,500 3 -- ----- ------ ----..-- 11.65 11.6 on measured quantities should not exceed the number 4 -------- - --------. 22.75 22.8 of significant figures of the least precise number 5 ------- -- 18.05 18.0 6 ---------- - ---- 18.051 18.1 entering into the calculation. In the calculation itself, one more significant figure may be retained in the more precise numbers than exist in the least precise If the first of the discarded digits is greater than 5, number. -

Scientific Notation Worksheet

Name: _____________________________ Course: _________________ Scientific Notation Part A: Express each of the following in standard form. 1. 5.2 x 103 5. 3.6 x 101 2. 9.65 x 10–4 6. 6.452 x 102 3. 8.5 x 10–2 7. 8.77 x 10–1 4. 2.71 x 104 8. 6.4 x 10–3 Part B: Express each of the following in scientific notation. 1. 78,000 5. 16 2. 0.00053 6. 0.0043 3. 250 7. 0.875 4. 2,687 8. 0.012654 Part C: Use the exponent function on your calculator (EE or EXP) to compute the following. 1. (6.02 x 1023) (8.65 x 104) 8. (5.4 x 104) (2.2 x 107) 4.5 x 105 2. (6.02 x 1023) (9.63 x 10–2) 9. (6.02 x 1023) (–1.42 x 10–15) 6.54 x 10–6 3. 5.6 x 10–18 10. (6.02 x 1023) (–5.11 x 10–27) 8.9 x 108 –8.23 x 105 4. (–4.12 x 10–4) (7.33 x 1012) 11. (3.1 x 1014) (4.4 x 10–12) –6.6 x 10–14 5. 1.0 x 10–14 12. (8.2 x 10–3) (–7.9 x 107) 4.2 x 10–6 7.3 x 10–16 6. 7.85 x 1026 13. (–1.6 x 105) (–2.4 x 1015) 6.02 x 1023 8.9 x 103 7. (–3.2 x 10–7) (–8.6 x 10–9) 14. -

Approximations and Round-Off Errors Definition: the Number of Significant

Approximations and Round-Off Errors Definition: The number of significant figures or significant digits in the representation of a number is the number of digits that can be used with confidence. In particular, for our purposes, the number of significant digits is equal to the number of digits that are known (or assumed) to be correct plus one estimated digit. Examples: 1. The output from a physical measuring device or sensor is generally known to be correct up to a fixed number of digits. For example, if the temperature is measured with a thermometer that is calibrated in tenths of a degree Fahrenheit, then we might know that the temperature is between 85.6 and 85.7 degrees. In this case, we know that the first three digits of the temperature are 8, 5, and 6, but we do not know the value of any subsequent digits. The first unknown digit is sometimes estimated at half of the value of the calibration size, or 0.05 degrees in our case. If we did this, the temperature would be reported to four significant digits as 85.65 degrees. 2. Alternatively, we could choose to report the temperature to only three significant digits. In this case, we could truncate or chop off the unknown digits to give a result of 48.6 degrees, or we could round off the result to the nearest tenth of a degree to give either 48.6 or 48.7 degrees depending on whether the actual reading was more or less than half-way between the two calibrations. -

Appendix 1, Significant Figures in Numerical Calculations

Appendix 1, Significant figures in numerical calculations In chemistry numerical calculations almost always involved numerical values determined by measurement in the laboratory. Such a measured numerical value is known only to a specific number of decimal digits, according to how well the measurement has been made. This number of digits is known as the number of significant figures in the numerical value. For example, if we measure a quantity and determine that its value is between 1.87 and 1.85, then we would report as 1.86, that is, with three significant figures, with the understanding that the rightmost (third) digit is uncertain by one unit. Here are some examples of how to interpret numerical values in terms of the number of significant figures they represent. numerical significant value figures 1.2 two 0.0012 two 0.001200 four 10. two 10 one 1.31×1021 three 1000 one 12300 three 123×102 three 123.0×102 four 12300. five 12300.0 six In doing calculations with such approximately known numerical values, we need to allow for the effect of our inexact knowledge of the numerical values on the final results. The detailed analysis of such cumulative effects can be subtle, but we will use rules of thumb that do a good job taking into account these effects. The rules apply separately to multiplication and division, addition and subtraction, and to logarithms. à Significant figures involving multiplication and division When multiplying or dividing two approximately known numbers, the number of significant figures in the result is the smaller of the number of significant figures in the factors. -

Coping with Significant Figures by Patrick Duffy Last Revised: August 30, 1999

Coping with Significant Figures by Patrick Duffy Last revised: August 30, 1999 1. WHERE DO THEY COME FROM? 2 2. GETTING STARTED 3 2.1 Exact Numbers 3 2.2 Recognizing Significant Figures 3 2.3 Counting Significant Figures 4 3. CALCULATIONS 5 3.1 Rounding Off Numbers: When to do it 5 3.2 Basic Addition and Subtraction 6 3.3 Multiplication and Division 6 3.4 Square Roots 7 3.4.1 What are Square Roots? 7 3.4.2 How to Use Significant Figures With Square Roots 7 3.5 Logarithms 8 3.5.1 What Are Logarithms? 8 3.5.2 How to Use Significant Figures With Logarithms 9 3.6 Using Exact Numbers 9 4. AN EXAMPLE CALCULATION 10 5. CONCLUDING COMMENTS 13 6. PRACTICE PROBLEMS 14 2 During the course of the chemistry lab this year, you will be introduced (or re-introduced) to the idea of using significant figures in your calculations. Significant figures have caused some trouble for students in the past and many marks have been lost as a result of the confusion about how to deal with them. Hopefully this little primer will help you understand where they come from, what they mean, and how to deal with them during lab write-ups. When laid out clearly and applied consistently, they are relatively easy to use. In any event, if you have difficulties I (or for that matter any member of the chemistry teaching staff at Kwantlen) will be only too happy to help sort them out with you. 1. -

Significant Figures

Significant Figures (Adapted from Prof. Pasternack, JHU Chemistry) A good tutorial on significant figures can be found at http://www.chem.sc.edu/faculty/morgan/resources/sigfigs/ The rules for significant figures can be summarized as follows: 1. To determine the number of significant figures: o All nonzero digits are significant. (1.234 has 4 sig figs) o Zeroes between nonzero digits are significant. (1.02 has 3 sig figs) o Zeroes to the left of the first nonzero digits are not significant. (0.012 has 2 sig figs) o Zeroes to the right of a decimal point in a number are significant. (0.100 has 3 sig figs) o When a number ends in zeroes that are not to the right of a decimal point, the zeroes are not necessarily significant. (120 may have 2 or 3 sig figs) 2 To avoid ambiguity, use scientific notation. (1.9 x 10 has 2 sig figs and 1.90 x 102 has 3 sig figs) 2. Some numbers are exact because they are known with complete certainty. Exact numbers have an infinite number of significant figures. For example: there are exactly 60 seconds in 1 minute. 325 seconds = 5.42 minutes. 3. For addition or subtraction, round off the result to the leftmost decimal place. For example: 40.123+20.34=60.46. 4. For multiplication or division, round off the result to the smallest number of significant figures. For example: 1.23x2.0=2.5, not 2.46. 5. For logarithms, retain in the mantissa (the number to the right of the decimal point in the logarithm) the same number of significant figures as there are in the number whose logarithm you are taking. -

A Significant Review

A Significant Review Let’s start off with scientific notation… Large numbers (numbers for which the absolute value is greater than 1) will always have a positive exponent when in scientific notation. When converting to scientific notation, you move the decimal point until there is a single digit to the left. The number of places that the decimal spot moved becomes the exponent and the “x10”. Example: -450000 Æ -4.5x105. The decimal point was moved 5 times to the left, so the exponent is 5. Example: 106709001 Æ 1.06709001x108. The decimal was moved to the left by 8 spots so the exponent is 8. Example: 57293.264 Æ 5.7293264x104 since the decimal was moved 4 times to the left. Small numbers (numbers between 1 and -1) will always have a negative exponent when in scientific notation. When converting to scientific notation, you move the decimal point until there is a single digit to the left. The number of places that the decimal spot moved becomes the exponent and the “x10-”. Example: 0.0003528 Æ 3.528x10-4. The decimal moved 4 times to the right, so the exponent become -4. Example: -0.0000000000000058500 Æ-5.8500x10-15. The decimal point was moved 15 times to the right, so the exponent became -15. Example: 0.002 Æ 2x10-3 since the decimal was moved 3 times to the right. You try: 1a) 54,670,000,000 1b) -5526.7 1c) 0.03289 1d) 100.00 1e) -0.000093740 1f) 9999.606 1g) 2800 1h) -0.00000005883 1i) 0.00008 1j) 0.11250 How many significant figures in a number: First and foremost, you need to be able to tell how many sig.