Array Databases Report

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Programming for Engineers Pointers in C Programming: Part 02

Programming For Engineers. Pointers in C Programming: Part 02, by Wan Azhar Wan Yusoff and Ahmad Fakhri Ab. Nasir, Faculty of Manufacturing Engineering, [email protected], [email protected]. 0.0 Chapter's Information • Expected Outcomes – To further use pointers in C programming • Contents 1.0 Pointer and Array 2.0 Pointer and String 3.0 Pointer and dynamic memory allocation. 1.0 Pointer and Array • We will review the array data type first and later relate arrays to pointers. • Previously, we learned about basic data types such as integers, characters and floating-point numbers. In the C programming language, if we have 5 test scores and would like to average them, we may code it in the following way. • This program is manageable if there are only 5 scores. What should we do if we have 100,000 scores? In that case, we need an efficient way to represent a collection of values of the same data type. In C programming, we usually use an array. • An array is a fixed-size sequence of elements of the same data type. • In C programming, we declare an array like the following statement:
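The excerpt above is cut off before its own listings, so the following is only a minimal sketch of the idea it describes (averaging test scores held in an array), not the authors' code. The function name `average_scores` and its parameters are assumptions introduced for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper (not from the excerpt): returns the average of
 * `count` scores stored contiguously in an array. */
double average_scores(const double *scores, size_t count)
{
    /* An empty or missing array has no meaningful average. */
    assert(scores != NULL && count > 0);

    double sum = 0.0;
    for (size_t i = 0; i < count; ++i) {
        /* scores[i] is the same element as *(scores + i). */
        sum += scores[i];
    }
    return sum / (double)count;
}
```

With an array declared as `double scores[5] = {70.0, 80.0, 90.0, 85.0, 75.0};`, the call `average_scores(scores, 5)` returns 80.0. The same function works unchanged for 100,000 scores, which is the point the excerpt makes about arrays.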

C Programming: Data Structures and Algorithms

C Programming: Data Structures and Algorithms. An introduction to elementary programming concepts in C. Jack Straub, Instructor. Version 2.07 DRAFT. Copyright © 1996 through 2006 by Jack Straub. Table of Contents: Course Overview; 1. Basics; 1.1 Objectives; 1.2 Typedef; 1.2.1 Typedef and Portability; 1.2.2 Typedef and Structures; 1.2.3 Typedef and Functions; 1.3 Pointers and Arrays; 1.4 Dynamic Memory Allocation

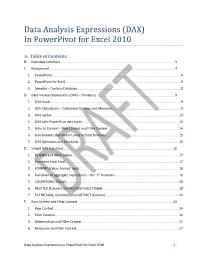

Data Analysis Expressions (DAX) in Powerpivot for Excel 2010

Data Analysis Expressions (DAX) In PowerPivot for Excel 2010. A. Table of Contents; B. Executive Summary; C. Background: 1. PowerPivot; 2. PowerPivot for Excel; 3. Samples – Contoso Database; D. Data Analysis Expressions (DAX) – The Basics: 1. DAX Goals; 2. DAX Calculations - Calculated Columns and Measures; 3. DAX Syntax; 4. DAX uses PowerPivot data types

R from a Programmer's Perspective Accompanying Manual for an R Course Held by M

DSMZ R programming course. R from a programmer's perspective. Accompanying manual for an R course held by M. Göker at the DSMZ, 11/05/2012 & 25/05/2012. Slightly improved version, 10/09/2012. This document is distributed under the CC BY 3.0 license. See http://creativecommons.org/licenses/by/3.0 for details. Introduction The purpose of this course is to cover aspects of R programming that are either unlikely to be covered elsewhere or likely to be surprising for programmers who have worked with other languages. The course thus tries not to be comprehensive but rather complementary to other sources of information. Also, the material needed to be compiled in a short time and perhaps suffers from important omissions. For the same reason, potential participants should not expect a fully fleshed out presentation but a combination of a text-only document (this one) with example code comprising the solutions of the exercises. The topics covered include R's general features as a programming language, a recapitulation of R's type system, advanced coding of functions, error handling, the use of attributes in R, object-oriented programming in the S3 system, and constructing R packages (in this order). The expected audience comprises R users whose own code largely consists of self-written functions, as well as programmers who are fluent in other languages and have some experience with R. Interactive users of R without programming experience elsewhere are unlikely to benefit from this course because quite a few programming skills cannot be covered here but have to be presupposed.

Data Structure

EDUSAT LEARNING RESOURCE MATERIAL ON DATA STRUCTURE (For 3rd Semester CSE & IT) Contributors: 1. Er. Subhanga Kishore Das, Sr. Lect CSE 2. Mrs. Pranati Pattanaik, Lect CSE 3. Mrs. Swetalina Das, Lect CA 4. Mrs. Manisha Rath, Lect CA 5. Er. Dillip Kumar Mishra, Lect 6. Ms. Supriti Mohapatra, Lect 7. Ms. Soma Paikaray, Lect. Copyright DTE&T, Odisha. Data Structure (Syllabus) Semester & Branch: 3rd sem CSE/IT. Teachers Assessment: 10 Marks. Theory: 4 Periods per Week. Class Test: 20 Marks. Total Periods: 60 Periods per Semester. End Semester Exam: 70 Marks. Examination: 3 Hours. TOTAL MARKS: 100 Marks. Objective: The effectiveness of implementing any application on a computer mainly depends on how effectively its information can be stored in the computer. For this purpose various data structures are used. This paper will expose the students to various fundamental structures such as arrays, stacks, queues, trees etc. It will also expose the students to some fundamental I/O manipulation techniques like sorting, searching etc. 1.0 INTRODUCTION: 04 1.1 Explain Data, Information, data types 1.2 Define data structure & Explain different operations 1.3 Explain Abstract data types 1.4 Discuss Algorithm & its complexity 1.5 Explain Time, space tradeoff 2.0 STRING PROCESSING 03 2.1 Explain Basic Terminology, Storing Strings 2.2 State Character Data Type 2.3 Discuss String Operations 3.0 ARRAYS 07 3.1 Give Introduction about array 3.2 Discuss Linear arrays, representation of linear array in memory 3.3 Explain traversing linear arrays, inserting & deleting elements 3.4 Discuss multidimensional arrays, representation of two dimensional arrays in memory (row major order & column major order), and pointers 3.5 Explain sparse matrices.

Array DBMS in Environmental Science

The 9th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications, 21-23 September, 2017, Bucharest, Romania. Array DBMS in Environmental Science: Satellite Sea Surface Height Data in the Cloud. Ramon Antonio Rodriges Zalipynis, National Research University Higher School of Economics, Moscow, Russia, [email protected]. Abstract – Nowadays environmental science experiences tremendous growth of raster data: N-dimensional (N-d) arrays coming mainly from numeric simulation and Earth remote sensing. An array DBMS is a tool to streamline raster data processing. However, raster data are usually stored in files, not in databases. Moreover, numerous command line tools exist for processing raster files. This paper describes a distributed array DBMS under development that partially delegates raster data processing to such tools. Our DBMS offers a new N-d array data model to abstract from the files and the tools and processes data in a distributed fashion directly in their native file formats. As a case study, popular satellite altimetry data were used for the experiments carried ... data model is designed to uniformly represent diverse raster data types and formats, take into account a distributed cluster environment, and be independent of the underlying raster file formats at the same time. Also, new distributed algorithms are proposed based on the model. Four modern raster data management trends are relevant to this paper: industrial raster data models, formal array models and algebras, in situ data processing algorithms, and raster (array) DBMS. A good survey of the algorithms is contained in [7]. A recent survey of existing array DBMS and similar systems is in [5].

(BI) Using MS Excel Powerpivot

2018 ASCUE Proceedings. Developing an Introductory Class in Business Intelligence (BI) Using MS Excel Powerpivot. Dr. Sam Hijazi, Trevor Curtis, Texas Lutheran University, 1000 West Court Street, Seguin, Texas 78130, [email protected]. Abstract Asking questions about your data is a constant application of all business organizations. To facilitate decision making and improve business performance, a business intelligence application must be an integral part of everyday management practices. Microsoft Excel added PowerPivot and PowerPivot officially to facilitate this process with minimum cost, knowing that many business people are already familiar with MS Excel. This paper will design an introductory class to business intelligence (BI) using Excel PowerPivot. If an educator decides to adopt this paper for teaching an introductory BI class, students should have previous familiarity with Excel's functions and formulas. This paper will focus on four significant phases all students need to complete in a three-credit class. First, students must understand the process of achieving small database normalization and how to bring these tables to Excel or develop them directly within Excel PowerPivot. This paper will walk the reader through these steps to complete the task of creating the normalization, along with the linking and bringing the tables and their relationships to Excel. Second, an introduction to Data Analysis Expression (DAX) will be discussed. Introduction It is not that difficult to realize the increase in the amount of data we have generated in the recent memory of our existence as a human race. To realize that more than 90% of the world's data has been amassed in the past two years alone (Vidas M.) is to realize the need to manage such volume.

Parallel Functional Programming with APL

Parallel Functional Programming with APL. Martin Elsman, Department of Computer Science, University of Copenhagen (DIKU). Parallel Functional Programming, APL Lecture 1, December 19, 2016. Outline: 1 Course Outline; 2 Introduction to APL: What is APL; APL Implementations and Material; APL Scalar Operations; APL (1-Dimensional) Vector Computations; Declaring APL Functions (dfns); APL Multi-Dimensional Arrays; Iterations; Declaring APL Operators; Function Trains; Examples; Reading. Course Outline. Teachers: Martin Elsman (ME), Ken Friis Larsen (KFL), Andrzej Filinski (AF), and Troels Henriksen (TH). Location: Lectures in Small Aud, Universitetsparken 1 (UP1); Labs in Old Library, UP1. Course Description: See http://kurser.ku.dk/course/ndak14009u/2016-2017. Course Outline (weeks 47, 48, 49, 50, 51, 1–3). Mon 13–15: Intro, Futhark (ME); Futhark (ME); Parallel Haskell (KFL); SNESL (AF); APL (ME); Project. Mon 15–17: Lab; Lab; Lab; Lab; Project. Wed 13–15: Futhark (ME); Parallel Haskell (KFL); SNESL (AF); Invited Lecture (John Reppy); APL (ME) / Projects; Project. Introduction to APL. What is APL. APL: An Ancient Array Programming Language, But Still Used! Pioneered by Ken E. Iverson in the 1960's. E. Dijkstra: "APL is a mistake, carried through to perfection." There are quite a few APL programmers around (e.g., HIPERFIT partners). Very concise notation for expressing array operations. Has a large set of functional, essentially parallel, multi-dimensional, second-order array combinators.

Programming Language

Programming language. A programming language is a formal language, which comprises a set of instructions that produce various kinds of output. Programming languages are used in computer programming to implement algorithms. Most programming languages consist of instructions for computers. There are programmable machines that use a set of specific instructions, rather than general programming languages. Early ones preceded the invention of the digital computer, the first probably being the automatic flute player described in the 9th century by the brothers Musa in Baghdad, during the Islamic Golden Age.[1] Since the early 1800s, programs have been used to direct the behavior of machines such as Jacquard looms, music boxes and player pianos.[2] The programs for these machines (such as a player piano's scrolls) did not produce different behavior in response to different inputs or conditions. Thousands of different programming languages have been created, and more are being created every year. Many programming languages are written in an imperative form (i.e., as a sequence of operations to perform) while other languages use the declarative form (i.e. the desired result is specified, not how to achieve it). The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning). Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. [Figure caption: The source code for a simple computer program written in the C programming language. When compiled and run, it will give the output "Hello, world!".]

Programming the Capabilities of the PC Have Changed Greatly Since the Introduction of Electronic Computers

www.onlineeducation.bharatsevaksamaj.net www.bssskillmission.in INTRODUCTION TO PROGRAMMING LANGUAGE Topic Objective: At the end of this topic the student will be able to understand: History of Computer Programming; C++. Definition/Overview: Overview: A personal computer (PC) is any general-purpose computer whose original sales price, size, and capabilities make it useful for individuals, and which is intended to be operated directly by an end user, with no intervening computer operator. Today a PC may be a desktop computer, a laptop computer or a tablet computer. The most common operating systems are Microsoft Windows, Mac OS X and Linux, while the most common microprocessors are x86-compatible CPUs, ARM architecture CPUs and PowerPC CPUs. Software applications for personal computers include word processing, spreadsheets, databases, games, and a myriad of personal productivity and special-purpose software. Modern personal computers often have high-speed or dial-up connections to the Internet, allowing access to the World Wide Web and a wide range of other resources. Key Points: 1. History of Computer Programming. The capabilities of the PC have changed greatly since the introduction of electronic computers. By the early 1970s, people in academic or research institutions had the opportunity for single-person use of a computer system in interactive mode for extended durations, although these systems would still have been too expensive to be owned by a single person. The introduction of the microprocessor, a single chip with all the circuitry that formerly occupied large cabinets, led to the proliferation of personal computers after about 1975. Early personal computers, generally called microcomputers, were often sold in electronic kit form and in limited volumes, and were of interest mostly to hobbyists and technicians.

An Array DBMS for Simulation Analysis and ML Models Predictions

SAVIME: An Array DBMS for Simulation Analysis and ML Models Predictions. Hermano L. S. Lustosa (1), Anderson C. Silva (1), Daniel N. R. da Silva (1), Patrick Valduriez (2), Fabio Porto (1). (1) National Laboratory for Scientific Computing, Rio de Janeiro, Brazil, {hermano, achaves, dramos, fporto}@lncc.br. (2) Inria, University of Montpellier, CNRS, LIRMM, France, [email protected]. Abstract. Limitations in current DBMSs prevent their wide adoption in scientific applications. In order to make them benefit from DBMS support, enabling declarative data analysis and visualization over scientific data, we present an in-memory array DBMS called SAVIME. In this work we describe the system SAVIME, along with its data model. Our preliminary evaluation shows how SAVIME, by using a simple storage definition language (SDL), can outperform the state-of-the-art array database system, SciDB, during the process of data ingestion. We also show that it is possible to use SAVIME as a storage alternative for a numerical solver without affecting its scalability, making it useful for modern ML based applications. Categories and Subject Descriptors: H.2.4 [Database Management]: Systems. Keywords: Scientific Data Management, Multidimensional Array, Machine Learning. 1. INTRODUCTION Due to the increasing computational power of HPC environments, vast amounts of data are now generated in different research fields, and a relevant part of this data is best represented as array data. Geospatial and temporal data in climate modeling, astronomical images, medical imagery, multimedia and simulation data are naturally represented as multidimensional arrays. To analyze such data, DBMSs offer many advantages, like query languages, which ease data analysis and avoid the need for extensive coding/scripting, and a logical data view that isolates data from the applications that consume it.

Array Databases: Concepts, Standards, Implementations

Baumann et al. J Big Data (2021) 8:28 https://doi.org/10.1186/s40537-020-00399-2 SURVEY PAPER Open Access. Array databases: concepts, standards, implementations. Peter Baumann, Dimitar Misev, Vlad Merticariu and Bang Pham Huu*. *Correspondence: b.phamhuu@jacobs-university.de, Large-Scale Scientific Information Systems Research Group, Jacobs University, Bremen, Germany. Abstract Multi-dimensional arrays (also known as raster data or gridded data) play a key role in many, if not all science and engineering domains where they typically represent spatio-temporal sensor, image, simulation output, or statistics "datacubes". As classic database technology does not support arrays adequately, such data today are maintained mostly in silo solutions, with architectures that tend to erode and not keep up with the increasing requirements on performance and service quality. Array Database systems attempt to close this gap by providing declarative query support for flexible ad-hoc analytics on large n-D arrays, similar to what SQL offers on set-oriented data, XQuery on hierarchical data, and SPARQL and CIPHER on graph data. Today, Petascale Array Database installations exist, employing massive parallelism and distributed processing. Hence, questions arise about technology and standards available, usability, and overall maturity. Several papers have compared models and formalisms, and benchmarks have been undertaken as well, typically comparing two systems against each other. While each of these represent valuable research, to the best of our knowledge there is no comprehensive survey combining model, query language, architecture, and practical usability, and performance aspects. The size of this comparison differentiates our study as well with 19 systems compared, four benchmarked to an extent and depth clearly exceeding previous papers in the field; for example, subsetting tests were designed in a way that systems cannot be tuned to specifically these queries.