Optimization Using Tracing Jits Or Why Computers, Not Humans, Should Optimize Code

Recommended publications

Compilers & Translator Writing Systems

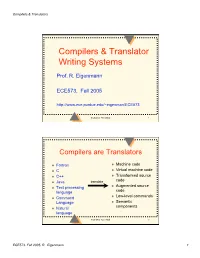

Compilers & Translator Writing Systems. Prof. R. Eigenmann, ECE573, Fall 2005, http://www.ece.purdue.edu/~eigenman/ECE573. Compilers are Translators: source languages (Fortran, C, C++, Java, text processing language, command language, natural language) are translated into machine code, virtual machine code, transformed source code, augmented source code, low-level commands, or semantic components. Compilers are Increasingly Important: assembly languages, high-level languages, and specification languages form increasingly high-level user interfaces for specifying a computer problem/solution, while non-pipelined processors, pipelined processors, speculative processors, and the worldwide “Grid” are increasingly complex machines. The compiler is the translator between these two diverging ends. Assembly code and Assemblers: compiler → assembly code → assembler → machine code. Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except for symbolic names for storage locations (program locations such as branches and subroutine calls, and variable names) and macros. Interpreters “execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters? Properties of Interpreters: “execution” is immediate; elaborate error checking is possible; bookkeeping is possible, e.g. for garbage collection; the program can be changed on the fly, e.g. by switching libraries or dynamically changing data types; machine independence.

Libffi This Manual Is for Libffi, a Portable Foreign-Function Interface Library

Libffi. This manual is for Libffi, a portable foreign-function interface library. Copyright © 2008, 2010, 2011 Red Hat, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2, or (at your option) any later version. A copy of the license is included in the section entitled “GNU General Public License”. 1 What is libffi? Compilers for high level languages generate code that follows certain conventions. These conventions are necessary, in part, for separate compilation to work. One such convention is the calling convention. The calling convention is a set of assumptions made by the compiler about where function arguments will be found on entry to a function. A calling convention also specifies where the return value for a function is found. The calling convention is also sometimes called the ABI or Application Binary Interface. Some programs may not know at the time of compilation what arguments are to be passed to a function. For instance, an interpreter may be told at run-time about the number and types of arguments used to call a given function. ‘Libffi’ can be used in such programs to provide a bridge from the interpreter program to compiled code. The ‘libffi’ library provides a portable, high level programming interface to various calling conventions. This allows a programmer to call any function specified by a call interface description at run time. FFI stands for Foreign Function Interface. A foreign function interface is the popular name for the interface that allows code written in one language to call code written in another language.

Source-To-Source Translation and Software Engineering

Journal of Software Engineering and Applications, 2013, 6, 30-40. http://dx.doi.org/10.4236/jsea.2013.64A005. Published Online April 2013 (http://www.scirp.org/journal/jsea). Source-to-Source Translation and Software Engineering. David A. Plaisted, Department of Computer Science, University of North Carolina at Chapel Hill, Chapel Hill, USA. Email: [email protected]. Received February 5th, 2013; revised March 7th, 2013; accepted March 15th, 2013. Copyright © 2013 David A. Plaisted. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. ABSTRACT: Source-to-source translation of programs from one high level language to another has been shown to be an effective aid to programming in many cases. By the use of this approach, it is sometimes possible to produce software more cheaply and reliably. However, the full potential of this technique has not yet been realized. It is proposed to make source-to-source translation more effective by the use of abstract languages, which are imperative languages with a simple syntax and semantics that facilitate their translation into many different languages. By the use of such abstract languages and by translating only often-used fragments of programs rather than whole programs, the need to avoid writing the same program or algorithm over and over again in different languages can be reduced. It is further proposed that programmers be encouraged to write often-used algorithms and program fragments in such abstract languages. Libraries of such abstract programs and program fragments can then be constructed, and programmers can be encouraged to make use of such libraries by translating their abstract programs into application languages and adding code to join things together when coding in various application languages.

Tdb: a Source-Level Debugger for Dynamically Translated Programs

Tdb: A Source-level Debugger for Dynamically Translated Programs. Naveen Kumar†, Bruce R. Childers†, and Mary Lou Soffa‡. †Department of Computer Science, University of Pittsburgh, Pittsburgh, Pennsylvania 15260, {naveen, childers}@cs.pitt.edu. ‡Department of Computer Science, University of Virginia, Charlottesville, Virginia 22904, [email protected]. Abstract: Debugging techniques have evolved over the years in response to changes in programming languages, implementation techniques, and user needs. A new type of implementation vehicle for software has emerged that, once again, requires new debugging techniques. Software dynamic translation (SDT) has received much attention due to compelling applications of the technology, including software security checking, binary translation, and dynamic optimization. Using SDT, program code changes dynamically, and thus, debugging techniques developed for statically generated code cannot be used to debug these applications. In this paper, we describe a new debug architecture for applications executing with SDT sys- [...] single stepping through program execution, watching for particular conditions and requests to add and remove breakpoints. In order to respond, the debugger has to map the values and statements that the user expects using the source program viewpoint, to the actual values and locations of the statements as found in the executable program. As programming languages have evolved, new debugging techniques have been developed. For example, checkpointing and time stamping techniques have been developed for languages with concurrent constructs [6,19,30]. The pervasive use of code optimizations to improve performance has necessitated techniques that can respond to queries even though the optimization may have changed the number of statement instances and the order of execution [15,25,29].

Virtual Machine Part II: Program Control

Virtual Machine Part II: Program Control. Building a Modern Computer From First Principles, www.nand2tetris.org. Elements of Computing Systems, Nisan & Schocken, MIT Press, Chapter 8: Virtual Machine, Part II. Where we are at: [Diagram: the software and hardware hierarchies, running from human thought through abstract design (Chapters 9, 12), high-level language and operating system, compiler (Chapters 10 - 11), virtual machine, VM translator (Chapters 7 - 8), assembly language, assembler (Chapter 6), machine language, computer architecture (Chapters 4 - 5), hardware platform, gate logic (Chapters 1 - 3), chips and logic gates, electrical engineering, and physics, with an abstract interface between adjacent layers.] The big picture: [Diagram: the Jack language and other languages, each with its own compiler (Chapters 9-13), target the VM language; VM implementations (Projects 7-8, Chapters 7-8) exist over CISC platforms, over RISC platforms, over the Hack platform, and as a VM emulator written in a high-level language (a Java-based emulator is included in the course software suite); beneath them sit CISC, RISC, and Hack machine language and the corresponding machines (Chapters 1-6), plus other digital platforms, each equipped with its VM implementation.]

Dell Wyse Management Suite Version 2.1 Third Party Licenses

Dell Wyse Management Suite Version 2.1 Third Party Licenses. October 2020, Rev. A01. Notes, cautions, and warnings. NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death. © 2020 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. Contents: Chapter 1: Third party licenses. 1 Third party licenses: The table provides the details about third party licenses for Wyse Management Suite 2.1.

Table 1. Third party licenses

| Component name | License type |
| --- | --- |
| jdk1.8.0_112 | Oracle Binary Code License |
| jre11.0.5 | Oracle Binary Code License |
| bootstrap-2.3.2 | Apache License, Version 2.0 |
| backbone-1.3.3 | MIT |
| aopalliance-1.0.jar | Public Domain |
| aspectjweaver-1.7.2.jar | Eclipse Public License - v 1.0 |
| bcprov-jdk16-1.46.jar | MIT |
| commons-codec-1.9.jar | Apache License, Version 2.0 |
| commons-logging-1.1.1.jar | Apache License, Version 2.0 |
| hamcrest-core-1.3.jar | BSD-3 Clause |
| jackson-annotations.2.10.2.jar | Apache License, Version 2.0; The Apache Software License, Version 2.0 |
| jackson-core.2.10.2.jar | Apache License, Version 2.0; The Apache Software License, Version 2.0 |
| jackson-databind.2.10.2.jar | Apache License, Version 2.0; The Apache Software License, Version 2.0 |
| log4j-1.2.17.jar | Apache License, Version 2.0 |
| mosquitto-3.1 | Eclipse Public License - v 1.0 |
| Gradle Wrapper 2.14 | Apache 2.0 License |
| Gradle Wrapper 3.3 | Apache 2.0 License |
| HockeySDK-Ios3.7.0 | MIT |
| Relayrides / pushy - v0.9.3 | MIT |
| zlib-1.2.8 | zlib license |
| yaml-cpp-0.5.1 | MIT |
| libssl.dll (1.1.1c) | Open SSL License |

A Brief History of Just-In-Time Compilation

A Brief History of Just-In-Time. John Aycock, University of Calgary. Software systems have been using “just-in-time” compilation (JIT) techniques since the 1960s. Broadly, JIT compilation includes any translation performed dynamically, after a program has started execution. We examine the motivation behind JIT compilation and constraints imposed on JIT compilation systems, and present a classification scheme for such systems. This classification emerges as we survey forty years of JIT work, from 1960–2000. Categories and Subject Descriptors: D.3.4 [Programming Languages]: Processors; K.2 [History of Computing]: Software. General Terms: Languages, Performance. Additional Key Words and Phrases: Just-in-time compilation, dynamic compilation. 1. INTRODUCTION: “Those who cannot remember the past are condemned to repeat it.” George Santayana, 1863–1952 [Bartlett 1992]. This oft-quoted line is all too applicable in computer science. Ideas are generated, explored, set aside, only to be reinvented years later. Such is the case with what is now called “just-in-time” (JIT) or dynamic compilation, which refers to translation that occurs after a program begins execution. Strictly speaking, JIT compilation systems (“JIT systems” for short) are completely unnecessary. [...] into a form that is executable on a target platform. What is translated? The scope and nature of programming languages that require translation into executable form covers a wide spectrum. Traditional programming languages like Ada, C, and Java are included, as well as little languages [Bentley 1988] such as regular expressions. Traditionally, there are two approaches to translation: compilation and interpretation. Compilation translates one language into another (C to assembly language, for example) with the implication that the translated form will be more amenable

Language Translators

Student Notes, Theory (K Aquilina). LANGUAGE TRANSLATORS. A. HIGH AND LOW LEVEL LANGUAGES. Programming languages divide into low-level languages (example: Assembly Language) and high-level languages (examples: Pascal, Basic, Java). Characteristics of LOW Level Languages: They are machine oriented: an assembly language program written for one machine will not work on any other type of machine unless they happen to use the same processor chip. Each assembly language statement generally translates into one machine code instruction, therefore the program becomes long and time-consuming to create. Example (machine code, then the assembly statement): 10100101 01110001 is LDA &71; 01101001 00000001 is ADD #&01; 10000101 01110001 is STA &71. Characteristics of HIGH Level Languages: They are not machine oriented: in theory they are portable, meaning that a program written for one machine will run on any other machine for which the appropriate compiler or interpreter is available. They are problem oriented: most high level languages have structures and facilities appropriate to a particular use or type of problem. For example, FORTRAN was developed for use in solving mathematical problems. Some languages, such as PASCAL, were developed as general-purpose languages. Statements in high-level languages usually resemble English sentences or mathematical expressions, and these languages tend to be easier to learn and understand than assembly language. Each statement in a high level language will be translated into several machine code instructions. Example: number := number + 1; translates to 10100101 01110001 01101001 00000001 10000101 01110001. B. GENERATIONS OF PROGRAMMING LANGUAGES. 1st generation: machine code; 2nd generation: low-level languages; 3rd generation: high-level languages; 4th generation: 4GLs. 1. MACHINE LANGUAGE – 1ST GENERATION. In the early days of computer programming all programs had to be written in machine code.

CFFI Documentation Release 0.8.6

CFFI Documentation, Release 0.8.6. Armin Rigo, Maciej Fijalkowski. May 19, 2015. Contents: 1 Installation and Status (1.1 Platform-specific instructions); 2 Examples (2.1 Simple example (ABI level); 2.2 Real example (API level); 2.3 Struct/Array Example; 2.4 What actually happened?); 3 Distributing modules using CFFI (3.1 Cleaning up the __pycache__ directory); 4 Reference (4.1 Declaring types and functions; 4.2 Loading libraries; 4.3 The verification step; 4.4 Working with pointers, structures and arrays; 4.5 Python 3 support; 4.6 An example of calling a main-like thing; 4.7 Function calls; 4.8 Variadic function calls; 4.9 Callbacks; 4.10 Misc methods on ffi; 4.11 Unimplemented features; 4.12 Debugging dlopen’ed C libraries; 4.13 Reference: conversions; 4.14 Reference:

Compiler Fuzzing: How Much Does It Matter?

Compiler Fuzzing: How Much Does It Matter? MICHAËL MARCOZZI∗, QIYI TANG∗, ALASTAIR F. DONALDSON, and CRISTIAN CADAR, Imperial College London, United Kingdom. Despite much recent interest in randomised testing (fuzzing) of compilers, the practical impact of fuzzer-found compiler bugs on real-world applications has barely been assessed. We present the first quantitative and qualitative study of the tangible impact of miscompilation bugs in a mature compiler. We follow a rigorous methodology where the bug impact over the compiled application is evaluated based on (1) whether the bug appears to trigger during compilation; (2) the extent to which generated assembly code changes syntactically due to triggering of the bug; and (3) whether such changes cause regression test suite failures, or whether we can manually find application inputs that trigger execution divergence due to such changes. The study is conducted with respect to the compilation of more than 10 million lines of C/C++ code from 309 Debian packages, using 12% of the historical and now fixed miscompilation bugs found by four state-of-the-art fuzzers in the Clang/LLVM compiler, as well as 18 bugs found by human users compiling real code or as a by-product of formal verification efforts. The results show that almost half of the fuzzer-found bugs propagate to the generated binaries for at least one package, in which case only a very small part of the binary is typically affected, yet causing two failures when running the test suites of all the impacted packages. User-reported and formal verification bugs do not exhibit a higher impact, with a lower rate of triggered bugs and one test failure.

VSI's Open Source Strategy

VSI's Open Source Strategy: Plans and schemes for Open Source software on OpenVMS. Brett Cameron / Camiel Vanderhoeven, April 2016. AGENDA: Programming languages; Integration technologies; Databases; Web; Libraries/utilities; Software development tools; Cloud; UNIX compatibility; Analytics; Add-ons; Other considerations; Summary/conclusions; Questions. Programming languages. Scripting languages: Lua; Perl (probably in reasonable shape); Tcl; Python; Ruby; PHP; JavaScript (Node.js and friends); also need to consider tools and packages commonly used with these languages. Interpreted languages: Scala (JVM); Clojure (JVM); Erlang (potentially a good fit with OpenVMS; can get good support from ESL); all the above are seeing increased adoption. Compiled languages: Go (seeing rapid adoption); Rust (relatively new); Apple Swift. Prerequisites (not all are required in all cases): LLVM backend; tweaks to OpenVMS C and C++ compilers; support for latest language standards (C++); support for some GNU C/C++ extensions; updates to OpenVMS C RTL and threads library. Language rankings: 1. JavaScript 2. Java 3. PHP 4. Python 5. C# 6. C++ 7. Ruby 8. CSS 9. C 10. Objective-C 11. Perl 12. Shell 13. R 14. Scala 15. Go 16. Haskell 17. Matlab 18. Swift 19. Clojure 20. Groovy 21. Visual Basic. See http://redmonk.com/sogrady/2015/07/01/language-rankings-6-15/. Growing programming languages, June 2015: Steve O’Grady published another edition of his great popularity study on programming languages: RedMonk Programming Language Rankings: June 2015. As usual, it is a very valuable piece. There are many takeaways from this research.

Structured Foreign Types

Ftypes: Structured foreign types. Andrew W. Keep, R. Kent Dybvig, Indiana University, fakeep,[email protected]. Abstract: High-level programming languages, like Scheme, typically represent data in ways that differ from the host platform to support consistent behavior across platforms and automatic storage management, i.e., garbage collection. While crucial to the programming model, differences in data representation can complicate interaction with foreign programs and libraries that employ machine-dependent data structures that do not readily support garbage collection. To bridge this gap, many high-level languages feature foreign function interfaces that include some ability to interact with foreign data, though they often do not provide complete control over the structure of foreign data, are not always fully integrated into the language and run-time system, and are often not as efficient as possible. [...] When accessing scalar elements within an ftype, the value of the scalar is automatically marshaled into the equivalent Scheme representation. When setting scalar elements, the Scheme value is checked to ensure compatibility with the specified foreign type, and then marshaled into the equivalent foreign representation. Ftypes are well integrated into the system, with compiler support for efficient access to foreign data and debugger support for convenient inspection of foreign data. The ftype syntax is convenient and flexible. While it is similar in some ways to foreign data declarations in other Scheme implementations, and language design is largely a matter of taste, we believe our syntax is cleaner and more intuitive than most. Our system also has a more complete set of features, covering all C data types along