The Journal of Computing Sciences in Colleges

Recommended publications

Charles Munson

Charles Munson Current Address: France Permanent Address: Florida, USA [email protected] http://www.JetWhiz.com OBJECTIVE To obtain a software development or web programming position that utilizes a wide range of computer languages. EDUCATION Georgia Institute of Technology, GPA 3.8, Doctor of Philosophy in Electrical & Computer Engineering (Ph.D. in progress). The University of Florida – Gainesville, Florida, GPA 3.8, w/ honors, Bachelor of Science in Computer Engineering. RELEVANT KNOWLEDGE Environments: Experienced in Linux, BSD and Windows environments. Knowledgeable with Macintosh-based systems. Programming: Expert experience with Perl (14 years), PHP (14 years), JavaScript (15 years), C/C++ (11 years) and SQL. Skilled with C# (7 years), Java (10 years), JSON/AJAX (Web 2.0 design), shell scripting (*Nix and Windows) and mobile application development. Experience with assembly programming for various processors and microcontrollers (such as the MSP430 and 68HC12), including SPI communication. Knowledge with Python, VBScript, QBasic, Pascal, ABAP (SAP), ASP.NET, Haskell. Graphical interface (GUI) and user interface design using Java AWT and Swing, and also in C with the GTK+ toolkit and Win32 API. Markup Languages: Expert knowledge of HTML5, (X)HTML, DHTML and XML (incl. Atom and RSS). Highly experienced with document formatting and transformations using XSL/XSLT and CSS. Hardware: Reconfigurable computing with FPGA and CPLD devices in conjunction with VHDL and schematic design (Quartus). Circuit board (PCB) layout using Altium Designer

Mcandrew 10783720.Pdf (2.064Mb)

T-4046 ELECTROCHEMICAL ASPECTS OF MAGNESIUM DISSOLUTION IN AQUEOUS KCl-NaCl ELECTROLYTES by Jerrilyn P. McAndrew ARTHUR LAKES LIBRARY COLORADO SCHOOL OF MINES GOLDEN, CO 80401 ProQuest Number: 10783720 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. ProQuest 10783720 Published by ProQuest LLC (2018). Copyright of the Dissertation is held by the Author. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code Microform Edition © ProQuest LLC. ProQuest LLC. 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, MI 48106-1346 A thesis submitted to the Faculty and Board of Trustees of the Colorado School of Mines in partial fulfillment of the requirements for the degree of Master of Science (Metallurgical and Materials Engineering). Golden, Colorado Date: [illegible] Signed: [illegible] Approved: Dr. Gerard Martins, Thesis Advisor Golden, Colorado Date: [illegible] J. J. Moore, Professor and Head, Department of Metallurgical and Materials Engineering ABSTRACT The dissolution of magnesium metal in equimolar sodium chloride/potassium chloride aqueous electrolytes was investigated. The focus of the work conducted was on the electrochemical aspects of the dissolution process, as it relates to the equilibria in the electrolyte and the dissolution kinetics.

Run BASIC – a Breakthrough Web Application Server “Web Programming for People Who Really Like to Program!” Carl Gundel, [email protected]

Run BASIC – A Breakthrough Web Application Server “Web programming for people who really like to program!” http://www.runbasic.com Carl Gundel, [email protected] Run BASIC allows you to apply your desktop programming skills to create web applications without struggling with the overhead of typical web programming frameworks. Unlike other web programming technologies, with Run BASIC there is no state to manage, you don't need to GET or POST anything, and you never even have to think about the Common Gateway Interface. You can easily deliver interesting and rich applications that are difficult to deliver with other tools. Run BASIC hides the web technology so you can just code. Honoring a Tradition of Simplicity Run BASIC is a version of the BASIC programming language. BASIC was designed from the start to be easy for the non-computer scientist to use. A small language with simple syntax and English-like commands, BASIC is easily learned. Modern implementations of BASIC also add important features such as support for structured programming and scoped subroutines. Inspired by the approachability of BASIC we decided to create a web programming system with those important qualities. When BASIC was at the peak of its popularity in the 1980s most people who used it were users of home computers. These were usually 8-bit microcomputers that plugged into a television set. With the flick of a power switch the user would almost instantly see something like this: SuperDuper BASIC v2.3 15485 Bytes Free Ready So the user was able to immediately begin typing commands and programming the machine.

Programming Shadows

Programming Shadows Computer programming in the context of the Sundial Simon Wheaton-Smith FRI, MBCS, CITP Phoenix, AZ ILLUSTRATING TIME’S SHADOW Programming Shadows by Simon Wheaton-Smith my business card in 1970 ISBN 978-0-9960026-2-2 Library of Congress Control Number: 2014904841 Simon Wheaton-Smith www.illustratingshadows.com [email protected] (c) 2004-2020 Simon Wheaton-Smith All rights reserved. February 14, 2017 April 1, 2020 THE ILLUSTRATING SHADOWS COLLECTION Illustrating Shadows provides several books or booklets:- Simple Shadows Build a horizontal dial for your location. Appropriate theory. Cubic Shadows Introducing a cube dial for your location. Appropriate theory. Cutting Shadows Paper cutouts for you to make sundials with. Illustrating Times Shadow the big book Illustrating Times Shadow ~ Some 400 pages covering almost every aspect of dialing. Includes a short appendix. Appendices Illustrating Times Shadow ~ The Appendices ~ Some 180 pages of optional detailed appendix material. Supplement Supplemental Shadows ~ Material in the form of a series of articles, covers more on the kinds of time, declination confusion, other proofs for the vertical decliner, Saxon, scratch, and mass dials, Islamic prayer times (asr), dial furniture, and so on! Programming Shadows A book discussing many programming languages, their systems and how to get them, many being free, and techniques for graphical depictions. This covers the modern languages, going back into the mists of time. Legacy languages include ALGOL, FORTRAN, the IBM 1401 Autocoder and SPS, the IBM 360 assembler, and Illustrating Shadows provides simulators for them, including the source code. Then C, PASCAL, BASIC, JAVA, Python, and the Lazarus system, as well as Octave, Euler, and Scilab.

Liberty BASIC for Windows V4

Contents Liberty BASIC v4.0 Help Document Copyright 1992-2003 Shoptalk Systems - http://www.libertybasic.com/ "Windows is a registered trademark of Microsoft Corporation in the United States and other countries." What's New! What's new in version 4. Overview An overview of Liberty BASIC. The Liberty BASIC Language Syntax and Usage for the Liberty BASIC Language. Gui Programming Creating and using Graphical User Interfaces. Command Reference Detailed Listing of Liberty BASIC Commands. API and DLL Making API and DLL calls in Liberty BASIC. Graphical Sprites Sprites for Games and Graphics. Port I/O Controlling Hardware Ports. File Operations Accessing Disk Files. Mathematics Mathematical Operators and Functions. Text Text Manipulation and Functions. Graphics Using Color and Drawing Commands. Troubleshooting Solving Problems. Registering Liberty BASIC Why and how to register Liberty BASIC. http://www.libertybasic.com/ The official Liberty BASIC web site. Installing Liberty BASIC Installing and uninstalling Liberty BASIC. What's New! What's new in Liberty BASIC 4 - additions and modifications that make Liberty BASIC 4 different from previous versions of Liberty BASIC. Improvements to DIM TAB( n ) Printing columns with commas Handle Variables Subs for Event Handlers Global Variables BYREF - Passing by reference MAPHANDLE - changing the handle of open devices Graphics window scrollbar changes EVAL(code$) EVAL$(code$) Breakpoints for debugging DO LOOP Printerfont$ Printer Graphics Now Scaled Debugger Improvements ON ERROR GOTO and RESUME ENABLE, DISABLE, SHOW AND HIDE Groupboxes Accept New Commands PLAYMIDI, MIDIPOS(), STOPMIDI Named Drawing Segments Joystick Support STYLEBITS New Sprite Commands centersprite removesprite spriteoffset spritetravelxy spritetofront spritetoback spriteround Glossary Glossary of General Computer and Programming Terms Alphanumeric Any letter of the alphabet or any digit from 0 to 9.

Using-Visual-Basic

Using Visual Basic Books Available By both authors: BP327 DOS one step at a time BP337 A Concise User's Guide to Lotus 1-2-3 for Windows BP341 MS-DOS explained BP346 Programming in Visual Basic for Windows BP352 Excel 5 explained BP362 Access one step at a time BP387 Windows one step at a time BP388 Why not personalise your PC BP400 Windows 95 explained BP406 MS Word 95 explained BP407 Excel 95 explained BP408 Access 95 one step at a time BP409 MS Office 95 one step at a time BP415 Using Netscape on the Internet* BP420 E-mail on the Internet* BP426 MS-Office 97 explained BP428 MS-Word 97 explained BP429 MS-Excel 97 explained BP430 MS-Access 97 one step at a time BP433 Your own Web site on the Internet BP448 Lotus SmartSuite 97 explained BP456 Windows 98 explained* BP460 Using Microsoft Explorer 4 on the Internet* BP464 E-mail and news with Outlook Express* BP465 Lotus SmartSuite Millennium explained BP471 Microsoft Office 2000 explained BP472 Microsoft Word 2000 explained BP473 Microsoft Excel 2000 explained BP474 Microsoft Access 2000 explained BP478 Microsoft Works 2000 explained BP486 Using Linux the easy way* BP487 Quicken 2000 UK explained* BP488 Internet Explorer 5 explained* BP491 Windows 2000 explained* BP493 Windows Me explained* BP498 Using Visual Basic By Noel Kantaris: BP258 Learning to Program in C BP259 A Concise Introduction to UNIX* BP284 Programming in QuickBASIC BP325 A Concise User's Guide to Windows 3.1 Using Visual Basic by P.R.M.

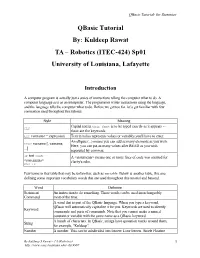

Qbasic Tutorial By: Kuldeep Rawat TA – Robotics (ITEC-424) Sp01 University of Louisiana, Lafayette

QBasic Tutorial for Dummies QBasic Tutorial By: Kuldeep Rawat TA – Robotics (ITEC-424) Sp01 University of Louisiana, Lafayette Introduction A computer program is actually just a series of instructions telling the computer what to do. A computer language acts as an interpreter. The programmer writes instructions using the language, and the language tells the computer what to do. Before we get too far, let’s get familiar with a few conventions used throughout this tutorial. Style and meaning: CLS -- capital text in this font is to be typed exactly as it appears; these are the keywords. LET varname = expression -- text in italics represents values or variables you'll have to enter. READ varname [, varname, ...] -- an ellipsis (...) means you can add as many elements as you wish. Here, you can put as many values after READ as you wish, separated by commas. IF test THEN <statements> END IF -- <statements> means one or more lines of code were omitted for clarity's sake. A few terms in that table may be unfamiliar, such as variable. Below is another table, this one defining some important vocabulary words that are used throughout this tutorial and beyond. Word and definition: Statement, Command -- an instruction to do something. These words can be used interchangeably most of the time. Keyword -- a word that is part of the QBasic language. When you type a keyword, QBasic will automatically capitalize it for you. Keywords are used to identify commands and parts of commands. Note that you cannot make a named constant or variable with the same name as a QBasic keyword.

An Introduction to Microsoft Small Basic

AN INTRODUCTION TO MICROSOFT SMALL BASIC RUSTY KEELE UETN TECH SUMMIT 2019 INTRODUCTION TODAY’S PLAN • History lesson: BASIC language • What Small Basic is and isn’t • How to get Small Basic • A Demonstration • Resources for learning and teaching ABOUT ME • Work at UEN • Programming since 4th grade • BASIC (on Commodore 64) was my first programming language! A SHORT HISTORY OF BASIC BEGINNINGS AT DARTMOUTH • Early 1960s - 2 Math Professors • Wanted an easy language for non-science students • Beginner’s All-purpose Symbolic Instruction Code MICRO COMPUTERS AND MICRO-SOFT • 1975 – Gates & Allen’s first product • Built in or included with most home computers of the 1970s and 1980s TYPE-IN PROGRAMS • From magazines and books • 101 BASIC Computer Games • School text book examples: “Try It In BASIC” BASIC BASHING • GOTO and GOSUB created spaghetti code • Dijkstra’s infamous quote • "It is practically impossible to teach good programming to students that have had a prior exposure to BASIC.” • The rise of structured programming: Pascal and C THE DECLINE OF BASIC • More powerful home computers • Graphical environments • Increase in commercial and free software “WHY JOHNNY CAN'T CODE” • No easy way for kids to get hooked on programming • Searched for an easy to use BASIC • …ended up buying a Commodore 64! • Issued a challenge to Microsoft VIJAYE RAJI • Microsoft employee • Read Brin’s article • Created a new version of BASIC – Small Basic WHAT IS MICROSOFT SMALL BASIC? GOALS OF MICROSOFT SMALL BASIC • For beginners • A real (but simple) language

Qbasic/Full Book View

QBasic/Full Book View Contents 1 Text Output 1.1 Print 1.2 1HELLO.BAS 1.3 Understanding 1HELLO.BAS 1.4 2HELLO.BAS 1.5 Commas and Semicolons in PRINT and CLS 1.6 3HELLO.BAS 1.7 Variables 1.8 4FACE.BAS 1.9 Locate statement 1.10 5FACE.BAS 1.11 Colors 1.12 Color by Number 1.13 Summary 2 Basic Input 2.1 6INPUT.BAS 3 Basic Math 3.1 Equation Setup 3.2 7MATH.BAS 3.3 Understanding 7MATH.BAS 3.4 8MATH.BAS 3.5 Understanding 8MATH.BAS 3.6 9TIP.BAS 3.7 Tip Calculator 3.8 10OROP.BAS 3.9 Parentheses and Order of Operations 3.10 Random Numbers 3.11 11RND.BAS 3.12 More on RND 4 Flow Control 4.1 Conditional execution 4.2 True or False 4.3 IF 4.4 IF...THEN...ELSE 4.5 13 ELSEIF 4.6 FOR...NEXT 4.7 14FOR.BAS 4.8 WHILE...WEND 4.9 15WHILE.BAS 4.10 DO...LOOP 4.11 12IF.BAS 4.12 SELECT CASE 5 Advanced Input 5.1 INKEY$ 5.2 The keyboard buffer 5.3 Scancodes 6 Subroutines and Functions 6.1 Purpose 6.2 Procedure vs. Function 6.3 GOTO and GOSUB 6.4 ON ERROR 6.5 Declaring a subroutine 6.6 Declaring a function 7 Arrays and Types 7.1 Built-in Types 7.2 User-defined type 7.3 Array 7.4 Multidimensional array 7.5 Non-zero base 8 Appendix 8.1 Commands 8.1.1 ABS() 8.1.2 ACCESS 8.1.3 ASC() 8.1.4 ATN() 8.1.5 BEEP 8.1.6 CASE 8.1.7 CHAIN 8.1.8 CHDIR 8.1.9 CHR$() 8.1.10 CINT() 8.1.11 CIRCLE 8.1.12 CLEAR 8.1.13 CLOSE 8.1.14 CLS 8.1.15 COLOR 8.1.16 COMMON 8.1.17 CONST 8.1.18 DATA 8.1.19 DATE$ 8.1.20 DIM 8.1.21 DO .

Beginner's Programming Tutorial in Qbasic

Beginner's Programming Tutorial in QBasic This document is meant to get you started in programming, and assumes you have some experience with computers and with Windows 95 (or 98, etc.). Since this tutorial is written for people who don't like to read a lot of text, it includes a number of examples. Therefore, you can do a lot of work in not much time. The more important chapters have a star ( ). Feel free to distribute this tutorial, upload it to your website, link to it from your site, etc. http://www.geocities.com/progsharehouse/qbtutor Mirror: http://development.freeservers.com/qbtutor Table of Contents Part I: Q-Basics Chapter 1: Before you start Chapter 2: Your first program Chapter 3: Variables Chapter 4: Retrieving keyboard input from the user Chapter 5: The IF and THEN commands Chapter 6: Labels and the GOTO and GOSUB commands Chapter 7: Loops Chapter 8: What next? Part II: Intermediate topics Chapter 9: QBasic interface Chapter 10: Adding documentation to your programs Chapter 11: Reading and writing to files Chapter 12: Displaying graphics Chapter 13: Mathematics functions Chapter 14: Getting the current date and time Part III: Advanced topics Chapter 15: Arrays Chapter 16: Variable types Chapter 17: Subroutines and functions Chapter 18: Numbering systems Chapter 19: Memory Before you start Before you can create a program in QBasic, you need the QBasic interpreter. It is available from your Windows 95 (or 98) CD, or you can download it below. To access QBasic from the Windows 95 CD: 1. Insert the CD into your CD-ROM drive.

Basic Programming Software for Mac

Basic programming software for mac Chipmunk Basic is an interpreter for the BASIC Programming Language. It runs on multiple Chipmunk Basic for Mac OS X - (Version , Apr01). Learning to program your Mac is a great idea, and there are plenty of great (and mostly free) resources out there to help you learn coding. other BASIC compiler you may have used, whether for the Amiga, PC or Mac. PureBasic is a portable programming language which currently works Linux. KBasic is a powerful programming language, which is simply intuitive and easy to learn. It is a new programming language, a further BASIC dialect and is related. Objective-Basic is a powerful BASIC programming language for Mac, which is simply intuitive and fast easy to learn. It is related to Visual Basic. Swift is a new programming language created by Apple for building iOS and Mac apps. It's powerful and easy to use, even for beginners. QB64 isn't exactly pretty, but it's a dialect of QBasic, with mac, windows, a structured basic with limited variable scoping (subroutine or program-wide), I have compiled old QBasic code unmodified provided it didn't do file. BASIC for Linux(R), Mac(R) OS X and Windows(R). KBasic is a powerful programming language, which is simply intuitive and easy to learn. It is a new. the idea to make software available for everybody: a programming language Objective-Basic requires Mac OS X Lion ( or higher) and Xcode 4 ( or. BASIC is an easy to use version of BASIC designed to teach There is hope for kids to learn an amazing programming language. -

M:\Department\7 Oct 2002 Backup

Table of Contents: Introduction; Computer Languages; Problem Solving Strategies; The Liberty Basic Environment; Liberty Basic Commands; Variables and Constants; Mathematical Operators; Error Checking and Debugging; Manipulating Strings; Mathematical Functions; Decision Statements; Iteration; Structured Programming; Graphic User Interface (GUI); Graphics. Preface This guide was produced to introduce students to the Liberty Basic programming language. The topics of graphical user interface and graphics introduce concepts related to “Windows” object oriented programming. These topics were included so students might experience the transition from code-based programming to the object oriented environment of Visual Basic that is used in the CMP 621 course. The Teacher's Guide and Lab Manual for Liberty Basic were