Towards Effective Interaction in 3D Data Visualizations

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Pegi Annual Report

Contents: Introduction. Chapter 1, The PEGI system and how it functions: Age categories; Content descriptors; The PEGI OK label; Parental control systems in gaming consoles; Steps of the rating process; Archive library. Chapter 2, The PEGI Organisation: The PEGI structure; PEGI S.A.; Boards and committees; The PEGI Congress; PEGI Management Board; PEGI Council; PEGI Experts Group; Complaints Board; Complaints procedure; The founder: ISFE; The PEGI administrator: NICAM; The PEGI administrator: VSC; PEGI in the UK - a case study?; PEGI coders. Chapter 3, The PEGI Online system. Chapter 4, PEGI Communication tools and activities: Introduction; Website; Promotional materials; Activities per country. Annex 1, PEGI Code of Conduct. Annex 2, PEGI Online Safety Code (POSC). Annex 3, The PEGI Signatories. Annex 4, PEGI Assessment Form. Annex 5, PEGI Complaints.

INTRODUCTION

Dear reader, We all know how quickly technology moves on. Yesterday’s marvel is tomorrow’s museum piece. The same applies to games, although it is not just the core game technology that continues to develop at breakneck speed. The human machine interfaces we use to interact with games are becoming more sophisticated and, at the same time, easier to use. The Wii Balance Board™ and the MotionPlus™, Microsoft’s Project Natal and Sony’s PlayStation® Eye are all reinventing how we interact with games, and in turn this is playing a part in a greater shift.

Off the Beaten Track

To have your recording considered for review in Sing Out!, please submit two copies (one for one of our reviewers and one for in-house editorial work, song selection for the magazine and eventual inclusion in the Sing Out! Resource Center). All recordings received are included in “Publication Noted” (which follows “Off the Beaten Track”). Send two copies of your recording, and the appropriate background material, to Sing Out!, P.O. Box 5460 (for shipping: 512 E. Fourth St.), Bethlehem, PA 18015, Attention “Off The Beaten Track.”

Sincere thanks to this issue’s panel of musical experts: Richard Dorsett, Tom Druckenmiller, Mark Greenberg, Victor K. Heyman, Stephanie P. Ledgin, John Lupton, Angela Page, Mike Regenstreif, Seth Rogovoy, Ken Roseman, Peter Spencer, Michael Tearson, Theodoros Toskos, Rich Warren, Matt Watroba, Rob Weir and Sule Greg Wilson.

... that led to a career traveling across country as “The Singing Troubadour.” He performed in a variety of settings with a repertoire that ranged from opera to traditional songs. He also began an investigation of the music of various utopian societies in America. With his investigation of the music of the Shakers he found a sect which both ...

... the two keyboard instruments. How I would have loved to hear some of the more unusual groupings of instruments as pictured in the notes. The sound of saxophones, trumpets, violins and cellos must have been glorious! The singing is strong and sincere with nary a hint of sophistication, as of course it should be, as the Shakers were hardly ostentatious.

VARIOUS

Journal of Games Is Here to Ask Himself, "What Design-Focused Pre- Hideo Kojima Need an Editor?" Inferiors

The IDLE THUMBS Journal of Games. Est’d. 2004. TOUCHING THE INDUSTRY IN A PROVOCATIVE PLACE. WE’RE PROBABLY ALL ABOUT VIDEO GAMES. ALSO ABOUT CONFERRING AND CONVENING.

Other front-page fragments: Ultraboost Ad; FUN FACTOR; Game Developers Confer; Notable Industry Figure Skewered in Print; industry session deemed completely unnewsworthy, insightful; Yuan-Hao Chiang.

Former developers sue 3D Realms. Computer game entrepreneurs claim Duke Nukem copyright infringement. By Chris Remo. Two founders of long-defunct British computer game developer Crumpetsoft Disk Systems have sued 3D Realms, claiming the latter's hit game series Duke Nukem infringes copyright of Crumpetsoft's vintage game character, The Duke of Newcolmbe. The character's first adventure, The Duke of Newcolmbe Finds Himself in a Bit of a Spot, was the Walton-on-the-Naze-based studio's thirty-seventh game title. Released in 1986 for the Amstrad CPC 6128, it features

Sessions of Interest. We read the program. Did you? Probably not. Read this instead. By Steve Gaynor and Chris Remo. Countdown to Tears (A history of tears?)

Evolving Game Design: Today and Tomorrow, Eastern and Western Game Design. Goichi Suda a.k.a. SUDA51, Fumito Ueda, Emil Pagliarulo, Mark MacDonald. Wednesday, 10:30am - 11:30am, Room 132, North Hall. Overview: What are the most important recent trends in modern game design? Where are games headed in the next few years? Drawing on their own experiences as leading names in game design, the panel will discuss their answers to these questions, and how they see them affecting the industry both in Japan and the West.

Nintendo Switch

Last Updated on September 30, 2021

| Title | Publisher | Qty | Box | Man | Comments |
| --- | --- | --- | --- | --- | --- |
| サムライフォース斬! | Natsume Atari Inc. | | | | NA Publi... |
| 1-2-Switch | Nintendo | | | | |
| Aleste Collection | M2 | | | | |
| Arcade Love: Plus Pengo! | Mebius | | | | |
| Armed Blue Gunvolt: Striker Pack | Inti Creates | | | | |
| ARMS | Nintendo | | | | |
| Astral Chain | Nintendo | | | | |
| Atsumare Dōbutsu no Mori | Nintendo | | | | |
| Bare Knuckle IV | 3goo | | | | |
| Battle Princess Madelyn | 3goo | | | | |
| Biohazard: Revelations - Unveiled Edition | Capcom | | | | |
| Blaster Master Zero Trilogy: MetaFight Chronicle: English Version | Inti Creates | | | | |
| Bloodstained: Ritual of the Night | 505 Games | | | | |
| Boku no Kanojo wa Ningyo Hime!? | Sekai Games | | | | |
| Capcom Belt Action Collection | Capcom | | | | |
| Celeste | Flyhigh Works | | | | |
| Chocobo no Fushigi na Dungeon: Every Buddy! | Square Enix | | | | |
| Clannad | Prototype | | | | |
| CLANNAD: Hikari Mimamoru Sakamichi de | Prototype | | | | |
| Code of Princess EX | Pikii | | | | |
| Coffee Talk | | | | | |
| Coma, The: Double Cut | Chorus Worldwide | | | | |
| Cotton Reboot!: Limited Edition | BEEP | | | | |
| Cotton Reboot! | BEEP | | | | |
| Daedalus: The Awakening of Golden Jazz: Limited Edition | Arc System Works | | | | |
| Dairantō Smash Bros. Special | Nintendo | | | | |
| Darius Cozmic Collection | Taito | | | | |
| Darius Cozmic Collection Special Edition | Taito | | | | |
| Darius Cozmic Revelation | Taito | | | | |
| Dead or School | Studio Nanafushi | | | | |
| Devil May Cry Triple Pack | Capcom | | | | |
| Donkey Kong: Tropical Freeze | Nintendo | | | | |
| Dragon Marked For Death: Limited Edition | Inti Creates | | | | |
| Dragon Marked For Death | Inti Creates | | | | |
| Dragon Quest Heroes I-II | Square Enix | | | | |
| Enter the Gungeon | Kakehashi Games | | | | |
| ESP RA.DE. ψ | M2 | | | | |
| Fate/Extella: Limited Box | XSEED Games | | | | |
| Fate/Extella Link | XSEED Games | | | | |
| Fight Crab | | | | | |

Notes on Superflat and Its Expression in Videogames - David Surman

Archived from: Refractory: a Journal of Entertainment Media, Vol. 13 (May 2008)

Abstract: In this exploratory essay the author describes the shared context of Sculptor turned Games Designer Keita Takahashi, best known for his PS2 title Katamari Damacy, and superstar contemporary artist Takashi Murakami. The author argues that Takahashi’s videogame is an expression of the technical, aesthetic and cultural values Murakami describes as SuperFlat, and as such expresses continuity between the popular culture and contemporary art of two of Japan’s best-known international creative practitioners.

Introduction

In the transcript accompanying the film-essay Sans Soleil (1983), Chris Marker describes the now oft-cited allegory at work in Pac-Man (Atarisoft, 1981). For Marker, playing the chomping Pac-Man reveals a semantic layer between graphic and gameplay. The character-in-action is charged with a symbolic intensity that exceeds its apparent simplicity.

Videogames are the first stage in a plan for machines to help the human race, the only plan that offers a future for intelligence. For the moment, the insufferable philosophy of our time is contained in the Pac-Man. I didn’t know when I was sacrificing all my coins to him that he was going to conquer the world. Perhaps [this is] because he is the most graphic metaphor of Man’s Fate. He puts into true perspective the balance of power between the individual and the environment, and he tells us soberly that though there may be honor in carrying out the greatest number of enemy attacks, it always comes a cropper (Marker, 1984, p.

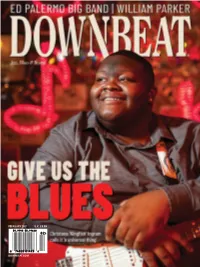

Downbeat.Com February 2021 U.K. £6.99

DOWNBEAT, FEBRUARY 2021, VOLUME 88 / NUMBER 2. U.K. £6.99. DOWNBEAT.COM

President: Kevin Maher
Publisher: Frank Alkyer
Editor: Bobby Reed
Reviews Editor: Dave Cantor
Contributing Editor: Ed Enright
Creative Director: Žaneta Čuntová
Design Assistant: Will Dutton
Assistant to the Publisher: Sue Mahal
Bookkeeper: Evelyn Oakes

ADVERTISING SALES
Record Companies & Schools: Jennifer Ruban-Gentile, Vice President of Sales, 630-359-9345, [email protected]
Musical Instruments & East Coast Schools: Ritche Deraney, Vice President of Sales, 201-445-6260, [email protected]
Advertising Sales Associate: Grace Blackford, 630-359-9358, [email protected]

OFFICES
102 N. Haven Road, Elmhurst, IL 60126–2970. 630-941-2030 / Fax: 630-941-3210. http://downbeat.com [email protected]

CUSTOMER SERVICE
877-904-5299 / [email protected]

CONTRIBUTORS
Senior Contributors: Michael Bourne, Aaron Cohen, Howard Mandel, John McDonough
Atlanta: Jon Ross; Boston: Fred Bouchard, Frank-John Hadley; Chicago: Alain Drouot, Michael Jackson, Jeff Johnson, Peter Margasak, Bill Meyer, Paul Natkin, Howard Reich; Indiana: Mark Sheldon; Los Angeles: Earl Gibson, Sean J. O’Connell, Chris Walker, Josef Woodard, Scott Yanow; Michigan: John Ephland; Minneapolis: Andrea Canter; Nashville: Bob Doerschuk; New Orleans: Erika Goldring, Jennifer Odell; New York: Herb Boyd, Bill Douthart, Philip Freeman, Stephanie Jones, Matthew Kassel, Jimmy Katz, Suzanne Lorge, Phillip Lutz, Jim Macnie, Ken Micallef, Bill Milkowski, Allen Morrison, Dan Ouellette, Ted Panken, Tom Staudter, Jack Vartoogian; Philadelphia: Shaun Brady; Portland: Robert Ham; San Francisco: Yoshi Kato, Denise Sullivan; Seattle: Paul de Barros; Washington, D.C.: Willard Jenkins, John Murph, Michael Wilderman; Canada: J.D. Considine, James Hale; France: Jean Szlamowicz; Germany: Hyou Vielz; Great Britain: Andrew Jones; Portugal: José Duarte; Romania: Virgil Mihaiu; Russia: Cyril Moshkow.

The Play of Contradictions in the Music of Katamari Damacy

Chaos in the Cosmos: The Play of Contradictions in the Music of Katamari Damacy. Steven B. Reale (Youngstown, OH). ACT – Zeitschrift für Musik & Performance, Ausgabe 2011/2.

Abstract. At first glance, Katamari Damacy (Namco, 2004) is a simple and cheery video game. Yet the game is full of thematic complexities and complications, which raise a number of ethical and aesthetic problems, including the relationships between childhood and terror, father and son, and digital and analog; furthermore, the complexities governing each of these pairs are cleverly underscored by the game’s music. The article traces a musical theme that serves as the game’s idée fixe which is transformed in the music across several of the game’s levels. Because the music has the potential to affect player performance, even non-diegetic, non-dynamic video game music can serve profoundly different functions than non-diegetic film music.

Zusammenfassung. Auf den ersten Blick ist Katamari Damacy (Namco, 2004) ein simples und heiteres Computerspiel. Dennoch steckt es voller thematischer Verwicklungen und Komplikationen, die eine Reihe von ethischen und ästhetischen Fragen aufwerfen, beispielsweise die Beziehung von Kindheit und Schrecken, zwischen Vater und Sohn sowie von digital und analog; darüber hinaus werden all die Verwicklungen, die diese Paarungen bestimmen, geschickt von der Musik des Spiels unterstrichen. Der Artikel spürt einem musikalischen Thema nach, welches als idée fixe des Spiels fungiert und im Verlauf mehrerer Levels des Spiels musikalisch umgestaltet wird.

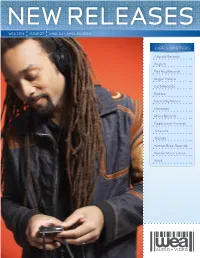

AUDIO + VIDEO 4/13/10 Audio & Video Releases *Click on the Artist Names to Be Taken Directly to the Sell Sheet

NEW RELEASES. WEA.COM. ISSUE 07. APRIL 13 + APRIL 20, 2010

LABELS / PARTNERS: Atlantic Records, Asylum, Bad Boy Records, Bigger Picture, Curb Records, Elektra, Fueled By Ramen, Nonesuch, Rhino Records, Roadrunner Records, Time Life, Top Sail, Warner Bros. Records, Warner Music Latina, Word

AUDIO + VIDEO

4/13/10 Audio & Video Releases

*Click on the Artist Names to be taken directly to the Sell Sheet. Click on the Artist Name in the Sell Sheet to be taken back to the Recap Page.

| Config | Label | Selection # | Artist | Title | SRP | Street Date | Order Due Date |
| --- | --- | --- | --- | --- | --- | --- | --- |
| CD | NON | 521074 | ALLEN, TONY | Secret Agent | $18.98 | 4/13/10 | 3/24/10 |
| CD | RAA | 523695 | BECK, JEFF | Emotion & Commotion | $18.98 | 4/13/10 | 3/24/10 |
| CD | ATL | 521144 | CASTRO, JASON | Jason Castro | $9.94 | 4/13/10 | 3/24/10 |
| DV | WRN | 523924 | CUMMINS, DAN | Crazy With A Capital F (DVD) | $16.95 | 4/13/10 | 3/17/10 |
| CD | ASW | 523890 | GUCCI MANE | Burrrprint (2) HD | $13.99 | 4/13/10 | 3/24/10 |
| CD | LAT | 524047 | MAGO DE OZ | Gaia III - Atlantia | $20.98 | 4/13/10 | 3/24/10 |
| CD | NON | 522304 | MERCHANT, NATALIE | Leave Your Sleep (2CD) | $24.98 | 4/13/10 | 3/24/10 |
| CD | NON | 522301 | MERCHANT, NATALIE | Selections From The Album Leave Your Sleep | $18.98 | 4/13/10 | 3/24/10 |
| CD | LAT | 524161 | MIJARES | Vivir Asi - Vol. II | $16.98 | 4/13/10 | 3/24/10 |
| CD | ATL | 523536 | STRAIGHT NO CHASER | With A Twist | $18.98 | 4/13/10 | 3/24/10 |

4/13/10 Late Additions

| Config | Label | Selection # | Artist | Title | SRP | Street Date | Order Due Date |
| --- | --- | --- | --- | --- | --- | --- | --- |
| CD | ERP | 524163 | GLORIANA | Gloriana | $13.99 | 4/13/10 | 3/24/10 |
| CD | MFL | 524110 | GREAVER, PAUL | Guitar Lullabies | $11.98 | 4/13/10 | 3/24/10 |

Last Update: 03/03/10

ARTIST: Tony Allen
TITLE: Secret Agent
Label: NON/Nonesuch
Config & Selection #: CD 521074
Street Date: 04/13/10
Order Due Date: 03/24/10
Compact Disc UPC: 075597981001
Box Count: 30
Unit Per Set: 1
SRP: $18.98
Alphabetize Under: T
File Under: World

For the latest up to date info on this release visit WEA.com.

Society for Ethnomusicology 58Th Annual Meeting Abstracts

... examine the musical and cultural features that mark their music as both distinctively Jewish and distinctively American. I relate this relatively new development in Jewish liturgical music to women’s entry into the cantorate, and I argue that the opening of this clergy position and the explosion of new music for the female voice represent the choice of American Jews to engage fully with their dual civic and religious identity.

Sounding Against Nuclear Power in Post-Tsunami Japan
Marie Abe, Boston University

In April 2011, one month after the devastating M9.0 earthquake, tsunami, and subsequent crises at the Fukushima nuclear power plant in northeast Japan, an antinuclear demonstration took over the streets of Tokyo. The crowd was unprecedented in its size and diversity; its 15,000 participants (a number unseen since 1968) ranged from mothers concerned with radiation risks on their children's health to environmentalists and unemployed youths. Leading the protest was the raucous sound of chindon-ya, a Japanese practice of musical advertisement. Dating back to the late 1800s, chindon-ya are musical troupes that publicize an employer's business by marching through the streets. How did this erstwhile commercial practice become a sonic marker of a mass social movement in spring 2011? When the public display of merriment

Walking to Tsuglagkhang: Exploring the Function of a Tibetan Soundscape in Northern India
Danielle Adomaitis, independent scholar

From the main square in McLeod Ganj (upper Dharamsala, H.P., India), Temple Road leads to one main attraction: Tsuglagkhang, the home of the 14th Dalai Lama.

Mukokuseki and the Narrative Mechanics in Japanese Games

Hiloko Kato and René Bauer

“In fact the whole of Japan is a pure invention. There is no such country, there are no such people.”1

“I do realize there’s a cultural difference between what Japanese people think and what the rest of the world thinks.”2

“I just want the same damn game Japan gets to play, translated into English!”3

Space Invaders, Frogger, Pac-Man, Super Mario Bros., Final Fantasy, Street Fighter, Sonic The Hedgehog, Pokémon, Harvest Moon, Resident Evil, Silent Hill, Metal Gear Solid, Zelda, Katamari, Okami, Hatoful Boyfriend, Dark Souls, The Last Guardian, Sekiro. As this very small collection shows, Japanese arcade and video games cover the whole range of possible design and gameplay styles and define a unique way of narrating stories. Many titles are very successful and renowned, but even though they are an integral part of Western gaming culture, they still retain a certain otherness. This article explores the uniqueness of video games made in Japan in terms of their narrative mechanics. For this purpose, we will draw on a strategy which defines Japanese culture: mukokuseki (borderless, without a nation) is a concept that can be interpreted either as Japanese commodities erasing all cultural characteristics (“Mario does not invoke the image of Japan” [Iwabuchi 2002: 94])4, or as a special way of mixing together elements of cultural origins, creating something that is new, but also hybrid and even ambiguous.

1 Wilde (2007 [1891]: 493).
2 Takahashi Tetsuya (Monolith Soft CEO) in Schreier (2017).
3 Funtime Happysnacks in Brian (@NE_Brian) (2017), our emphasis.

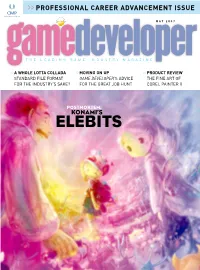

Game Developer’S Advice the Fine Art of for the Industry’S Sake? for the Great Job Hunt Corel Painter X

>> PROFESSIONAL CAREER ADVANCEMENT ISSUE. MAY 2007. THE LEADING GAME INDUSTRY MAGAZINE

>> A WHOLE LOTTA COLLADA: STANDARD FILE FORMAT FOR THE INDUSTRY’S SAKE?
>> MOVING ON UP: GAME DEVELOPER’S ADVICE FOR THE GREAT JOB HUNT
>> PRODUCT REVIEW: THE FINE ART OF COREL PAINTER X

POSTMORTEM: KONAMI’S ELEBITS

Larger data sets and escalating build times are the harsh realities that face anyone trying to achieve a greater sense of realism in 3D simulations and games. That’s why SGI and PipelineFX have teamed to deliver a scalable, easy-to-deploy render management solution (including SGI® Altix® XE integrated server systems built on Quad-Core Intel® Xeon® 5300 processors and Qube!™ software from PipelineFX) that can move projects from rendering to reality in no time. Read how you can accelerate your rendering workflow with our new White Paper, “Managing Rich-Media Workflows,” at www.sgi.com/go/create

CONTENTS. MAY 2007, VOLUME 14, NUMBER 5

PROFESSIONAL CAREER GUIDE

FEATURE: Moving On Up. A career move doesn’t always involve leaving a company. Developers have a bounty of choices within reach, from changing job titles to moving to a different studio within the same company.

000NAG Xbox Insider November 2006

Free NAG supplement (not to be sold separately). THE ONLY RESOURCE YOU’LL NEED FOR EVERYTHING XBOX 360. November 2006, Issue 2

THE SPIRIT OF SYSTEM SHOCK THE NEW ERA VENUS RISES OVER GAMING INSIDE! 360 SOUTH AFRICA RAGE LAUNCH EVERYTHING X06 TONY HAWK’S PROJECT 8

DASHBOARD: WHAT’S IN THE BOX?

THE BUZZ: News and 360 views from around the planet
FEATURE: Local Launch. We went, we stood in line, we watched people pick up their preorders. South Africa just got a little greener
FEATURE: The New Era. As graphics improve, as gameplay evolves, naturally it is only a matter of time before separation between male and female gamers dissolves
PREVIEW: Bioshock. It is System Shock 3 in everything but the name. And it lacks Shodan. But it is still very System Shock, so much so that we call it BioSystemShock
FEATURE: Project 8. Mr. Hawk returns and this time he’s left the zany hijinks of Bam Margera behind. Project 8 is all about the motion, expression and momentum
HARDWARE: Madcatz offers up some gamepad and HD VGA cable love, and the gamepad can be used on the PC too!
OPINION: The Class of 360. This month James Francis reminds us why we bought a PlayStation 2 in the first place, and then calls us fanboys

EDITOR SPEAK: AND HE SAID, “LET THERE BE RING OF LIGHT”

It was nothing short of incredible to see so much enthusiasm and support for the Xbox 360, as was seen at both rAge and at the BT Games Xbox 360 launch party.

publisher: tide media
managing editor: michael james, [email protected], +27 83 409 8220
editor: miktar dracon, [email protected]
assistant editor: lauren das neves, [email protected]
copy editor: nati de jager
contributors: matt handrahan, james francis, ryan king, jon denton
group sales and marketing manager: len nery | [email protected], +27 84 594 9909
advertising sales: jacqui jacobs | [email protected], +27 82 778 8439; dave gore | [email protected], +27 82 829 1392; cheryl bassett | [email protected], +27 72 322 9875
art director: chris bistline
designer: