Pattern Recognition with Raspberry Pi Platform

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Cubietruck – Mini PC

HARDWARE. Cubietruck, a mini PC. The market for single-board computers built around ARM processors, started by the Raspberry Pi, is developing very well. New designs may not be springing up like mushrooms after rain, but they appear steadily: BeagleBoard, Marsboard, Cubieboard, Olinuxino and others. They differ in equipment, performance, availability of documentation and technical support. The Cubieboard.org line is developing in an interesting way. It began with the Cubieboard A10 board (described in EP06/2013) and the Cubieboard2, which is mechanically compatible but built around the newer dual-core A20 processor, raising Cubie's performance and widening its range of applications (photo 1). The newest offering is the Cubietruck (Cubieboard3), based, like the Cubieboard2 (photo 2), on the Allwinner A20 processor but far more richly equipped, which unfortunately comes with a higher price. Table 1 compares the parameters of the individual Cubieboard computers. As with the previous [...] mounting [...]. It may not seem like much, but these three pieces of plastic and a pack of spacer sleeves are enough to assemble a standalone mini-PC system with a 2.5" HDD while giving its components adequate mechanical protection. The covers have openings milled in the right places so that the GPIO can be used without removing the enclosure. An interesting option for those who use the Cubieboard3 as a standalone mini PC is the full enclosure shown in photo 3 (caption: Cubietruck enclosure). It comfortably holds the Cubieboard3 board, a 2.5" HDD (photo 4) and the connecting cables. [...] the role of a home multimedia centre or a Linux PC. The only reservation concerns the several multicoloured LEDs, which mercilessly inform our eyes of Cubie's operating state. The Cubieboard3 is based on an ARMv7 SoC, the Allwinner A20, which, combined with a sizeable NAND Flash disk and increased RAM, performs without trouble as a PC running [...]
Development Boards This Product Is Rohs Compliant

Development Boards. This product is RoHS compliant.
PANDABOARD DEVELOPMENT PLATFORM. Features: • Core Logic: OMAP4460 applications processor • Memory: 1GB DDR2 RAM • Wireless Connectivity: 802.11 b/g/n (WiLink™ 6.0) • Interface: (1) General Purpose Expansion Header (I2C, GPMC, USB, MMC, DSS, ETM) • Debug options: JTAG, UART/RS-232, 1 GPIO button • Full Size SD/MMC card port • Camera Expansion Header • Graphics APIs: OpenGL ES v2.0, OpenGL ES v1.1, OpenVG v1.1, and EGL v1.3 • 10/100 Ethernet • Display Connectors: HDMI v1.3, DVI-D, LCD Expansion • USB: (1) USB 2.0 OTG port, (2) USB 2.0 High-speed port • Audio Connectors: 3.5" In/Out, HDMI audio out. For quantities greater than listed, call for quote.
Mouser stock no. 595-PANDABOARD, part no. UEVM4430G-01-00-00: Pandaboard ARM Cortex-A9 MPCore 1GHz OMAP4430 SoC Platform, price each 179.00.
Mouser stock no. 595-PANDABOARD-ES, part no. UEVM4460G-02-01-00: Pandaboard ARM Cortex-A9 MPCore 1GHz OMAP4460 SoC Platform, price each 185.00.
BEAGLEBOARD SOC PLATFORMS. BeagleBoard.org develops low-cost, fan-less single-board computers based on low-power Texas Instruments processors featuring the ARM Cortex-A8 core with all of the expandability of today's desktop machines, but without the bulk, expense, or noise. BeagleBoard.org provides an open source development platform for the creation of high-performance embedded designs. Beagleboard C4 Features: • Over 1,200 Dhrystone MIPS using the superscalar [...] Beagleboard xM Features: • Over 2,000 Dhrystone MIPS using the superscalar [...] Beaglebone Features: [...]

OpenBricks Embedded Linux Framework - User Manual

OpenBricks Embedded Linux Framework - User Manual. Contents:
1 OpenBricks Introduction: 1.1 What is it? 1.2 Who is it for? 1.3 Which hardware is supported? 1.4 What does the software offer? 1.5 Who's using it?
2 List of supported features: 2.1 Key Features; 2.2 Applicative Toolkits; 2.3 Graphic Extensions; 2.4 Video Extensions; 2.5 Audio Extensions; 2.6 Media Players; 2.7 Key Audio/Video Profiles; 2.8 Networking Features; 2.9 Supported Filesystems; 2.10 Toolchain Features
3 OpenBricks Supported Platforms: 3.1 Supported Hardware Architectures; 3.2 Available Platforms; 3.3 Certified Platforms

Cubieboard Cubieboard2 Cubietruck Beaglebone Black

Raspberry Pi (Model B rev.2) Cubieboard Cubieboard2 Cubietruck Beaglebone Black 1 GHz (OC) ARM® Cortex-A6 1 GHz ARM® Cortex-A8 1 GHz ARM® Cortex-A7 Dual Core 1 GHz ARM® Cortex-A7 Dual Core 1 GHz ARM® Cortex-A8 CPU ARM1176JZF-F Allwinner A10 C8096CA Allwinner A20 Allwinner A20 AM335x GPU/FPU VideoCore IV Mali-400 (CedarX, OpenGL) Mali-400MP2 (CedarX, OpenGL) Mali-400MP2 (CedarX, OpenGL) SGX350 3D / NEON FPU accelerator RAM 512 MB 1 GB DDR3 2 GB 2 GB 512 MB DDR3 Storage micro SD/SDHC 4 GB NAND Flash, micro SD/SDHC, SATA 4 GB NAND Flash, micro SD/SDHC, SATA 4 GB NAND Flash, micro SD/SDHC, SATA 2.0 2 GB eMMC Power micro USB (5V/1A) 3.5 W DC 5V/2A DC 5V/2A DC 5V/2.5A DC 5V/500mA Video RCA Composite Video, HDMI 1.4 HDMI HDMI HDMI/VGA microHDMI Audio 3.5 mm Headphone Jack 3.5 mm Headphone Jack / Line In 3.5 mm Headphone Jack 3.5 mm Headphone Jack, SPDIF Network 10/100 Mbps 10/100 Mbps 10/100 Mbps 10/100/1000 Mbps, Wi-Fi, Bluetooth 10/100 Mbps 2x46 PIN GPIO I/O ports 26 PIN GPIO, 2x Ribbon 2x48 PIN GPIO, 4PIN Serial, 1IR 2x48 PIN GPIO, 4PIN Serial, 1IR 1x 54 PIN GPIO (Arduino Shield Compatible) USB ports 2x USB 2.0 2x USB 2.0 2x USB 2.0, 1 mini USB OTG 2x USB 2.0, 1 mini USB OTG 1x USB 2.0 Linux (Raspbian, Debian, Fedora, Arch, Gentoo, Kali), Android, Angstrom, Ubuntu, Fedora, Gentoo.

WLAN Hacking Workshop

WLAN Hacking Workshop. Oz Krakowski – [email protected], Luciano Coelho – [email protected]
Agenda: What is "OpenLink™"?; Workshop intro; Board setup; Getting WLAN to work on BeagleBoard; The OpenLink Challenge; Wrap-up (Demo, Ubuntu, Prizes, What's next).
What is "OpenLink™"? An open source initiative. Wireless connectivity technologies: Wi-Fi™, Bluetooth®, FM; roadmap: Bluetooth Low Energy, ANT, Zigbee®, NFC.
What is "OpenLink™"? Open link drivers available today. Part of mainline Linux kernel. Easily attach to open source development platform such as BeagleBoard and PandaBoard.
What is "OpenLink™"? Website: OpenLink.org (Community, News, Projects, Support, Platforms, Resources) ... And more to come...
Workshop Outline: Short hands-on demo. Learn how to connect to an AP from the CLI. Your chance to get your own BeagleBoard with a WLAN daughter card!
Board Setup: Pre-requisite: minicom (or another terminal app). Insert the micro-SD card. Connect the USB-serial adapter. Connect the USB cable (power supply).
Serial Console: Start minicom with minicom -s -o (/dev/ttyUSB0; 115200 8N1; no HW/SW flow control). Log in to the serial console (user: root; pwd: rootguri).
BeagleBoard boot basics: Two-phase boot: X-Loader (MLO), then u-boot.bin (environment setup, kernel parameters). Kernel: mainline-based (2.6.39-rc1), with one patch for the WLAN daughtercard.
Connecting to an open Access Point: Set your own MAC address: ifconfig wlan0 hw ether 08:00:28:00:00:<number>, then ifconfig wlan0 up. Using the iw tool to scan: iw wlan0 scan. Connecting to an open AP: iw wlan0 connect
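
The command sequence in the last slide can be sketched as a small dry-run helper. This is a minimal illustration rather than anything from the workshop material: the function name `wlan_bringup_commands`, the choice to format `<number>` as two hex digits, and the SSID argument to `iw ... connect` (the slide text is cut off at that point) are all assumptions. Nothing is executed; the helper only assembles the argument lists so they can be reviewed before being run on the board.

```python
import shlex


def wlan_bringup_commands(station_number, ssid, iface="wlan0"):
    """Build the workshop's WLAN bring-up commands as argv lists (dry run only).

    station_number: hypothetical per-attendee value for the last MAC byte.
    ssid: hypothetical name of the open access point (elided in the slide text).
    """
    if not 0 <= station_number <= 0xFF:
        raise ValueError("station_number must fit in one byte")
    # Assumption: <number> on the slide is rendered as two hex digits.
    mac = f"08:00:28:00:00:{station_number:02x}"
    return [
        ["ifconfig", iface, "hw", "ether", mac],  # set your own MAC address
        ["ifconfig", iface, "up"],                # bring the interface up
        ["iw", iface, "scan"],                    # scan for access points
        ["iw", iface, "connect", ssid],           # join the open AP (SSID assumed)
    ]


if __name__ == "__main__":
    for argv in wlan_bringup_commands(7, "workshop-ap"):
        print(shlex.join(argv))
```

Returning argv lists instead of pre-joined strings keeps the sketch usable with `subprocess.run` later without shell quoting problems, should one choose to execute the commands on the target.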

Raspberry Pi Computer Vision Programming – Second Edition

Raspberry Pi Computer Vision Programming – Second Edition. Ashwin Pajankar. Raspberry Pi is one of the popular single-board computers of our generation. All the major image processing and computer vision algorithms and operations can be implemented easily with OpenCV on Raspberry Pi. This updated second edition is packed with cutting-edge examples and new topics, and covers the latest versions of key technologies such as Python 3, Raspberry Pi, and OpenCV. This book will equip you with the skills required to successfully design and implement your own OpenCV, Raspberry Pi, and Python-based computer vision projects. At the start, you'll learn the basics of Python 3, and the fundamentals of single-board computers and NumPy. Next, you'll discover how to install OpenCV 4 for Python 3 on Raspberry Pi, before covering major techniques and algorithms in image processing, manipulation, and computer vision. By sequentially working through the steps in each chapter, you'll understand essential OpenCV features. Later sections will take you through creating graphical user interface (GUI) apps with GPIO and OpenCV. You'll also learn how to use the new computer vision library, Mahotas, to perform various image processing operations. Finally, you'll explore the Jupyter notebook and how to set up a Windows computer and Ubuntu for computer vision. By the end of this book, you'll be able to confidently build and deploy computer vision apps. Design

A $35 Firewall for the Developing World

RC25442 (IRE1401-011) January 20, 2014. Computer Science. IBM Research Report. A $35 Firewall for the Developing World. Zubair Nabi, IBM Research, Smarter Cities Technology Centre, Mulhuddart, Dublin 15, Ireland. Research Division: Almaden, Austin, Beijing, Cambridge, Dublin, Haifa, India, Melbourne, T.J. Watson, Tokyo, Zurich. LIMITED DISTRIBUTION NOTICE: This report has been submitted for publication outside of IBM and will probably be copyrighted if accepted for publication. It has been issued as a Research Report for early dissemination of its contents. In view of the transfer of copyright to the outside publisher, its distribution outside of IBM prior to publication should be limited to peer communications and specific requests. After outside publication, requests should be filled only by reprints or legally obtained copies of the article (e.g., payment of royalties). Many reports are available at http://domino.watson.ibm.com/library/CyberDig.nsf/home. Zubair Nabi, IBM Research, Dublin, [email protected]. ABSTRACT: A number of recent efforts aim to bridge the global digital divide, particularly with respect to Internet access. We take this endeavor one step further and argue that Internet access and web security go hand in glove in the developing world. To remedy the situation, we explore whether low-cost platforms, such as Raspberry Pi ($35) and Cubieboard ($59), can be used to implement security mechanisms. From the introduction: [...] countries [20]. In addition, maturity in the Internet ecosystem has resulted in a higher standard of life [20]. In the same vein, Internet access coupled with social media has become a catalyst for social, cultural, and political activism and change [32, 35, 33, 36]. While the Internet has been declared a basic human right [29], in reality more than two-thirds of the world population [...]

Final Degree Project (Proyecto Fin de Grado)

ESCUELA TÉCNICA SUPERIOR DE INGENIERÍA Y SISTEMAS DE TELECOMUNICACIÓN. FINAL DEGREE PROJECT. TITLE: Deployment of Liota (Little IoT Agent) on Raspberry Pi. AUTHOR: Ricardo Amador Pérez. DEGREE: Telematics Engineering. SUPERVISOR (or Director, where applicable): Antonio da Silva Fariña. DEPARTMENT: Department of Telematics and Electronic Engineering. Approved. Members of the examining board: CHAIR: David Luengo García. MEMBER: Antonio da Silva Fariña. SECRETARY: Ana Belén García Hernando. Date of defence: [blank]. Grade: [blank]. The Secretary, [blank]. Of all the lines I have written for this project, these are perhaps at once the easiest and the hardest. Easy because I could double the length of this project just by thanking my parents for the infinite patience they have had with me, the support they have always given me, and the effort they have made so that these lines could become a reality. For all that and a thousand things more, thank you. Mum, Dad, I did it. Easy because without my supervisor Antonio this project would not be a reality either, not only because of his work as supervisor but because, quite literally, without his help it would not have been delivered on time and working. After this, Antonio, I will have to let you win a kenpo bout by way of thanks. Easy because, yes, you lot, it is your turn: Alex, Alfonso, Manu, Sama, you have been my greatest support in the hardest and darkest moments, and my best companions in the happy ones. My friends from Kulturales, the Baños brothers for pushing me to improve, Pablo for being an example to follow, Chou for being one of the best teachers and friends I have ever had.

Pocketbeagle

PocketBeagle®, the tiniest $25 key-fob computer you can buy. The newest BeagleBoard.org® board is PocketBeagle®, an ultra-tiny-yet-complete Linux-enabled, community-supported, open-source USB-key-fob computer. PocketBeagle® features an incredible low cost, slick design and simple usage, making PocketBeagle® the ideal development board for beginners and professionals alike. You develop directly in a web browser and PocketBeagle® can easily be set back to factory conditions, leaving you free to experiment. Key features: * Low cost Linux computer with tremendous expansibility * Opportunity to learn many programming aspects from educators on-line * Openness and flexibility tear-down limits on your imagination. The whats and whys of PocketBeagle®. What is a USB key-fob computer? PocketBeagle® is the size of a tiny mint-tin (35mm by 55mm), less than half the size of the larger mint-tin or credit-card sized BeagleBone® Black (55mm by 86mm). Unlike a desktop computer where you connect a monitor, keyboard and mouse, PocketBeagle® is made to live inside your project and enables you to define its interfaces. PocketBeagle® is easily programmed through a web browser running on any other connected desktop. What can I do with PocketBeagle®? Getting to the Linux command-line and text editor via your web browser is simple, providing you with a playground for programming and electronics. Exploring is made easy with several available libraries and tutorials and many more coming. Once you get a bit familiar with Linux and electronics, you are free to explore numerous more advanced projects from the community. The sky is no limit; PocketBeagle® makes a great starting point for building something as advanced as a computer for a CubeSat and there are several BeagleBone® Black examples out there already today.

Using the BeagleBone Real-Time Microcontrollers

USING THE BEAGLEBONE REAL-TIME MICROCONTROLLERS. Jason Kridner, [email protected]
BeagleBone Black: Open hardware computer for makers. Truly flexible open hardware and software development platform. All you need is in the box. Proven ecosystem from prototype to product. Most affordable and proven open hardware Linux platform available. • Ready to use: ~$50 • 1 GHz performance • On-board HDMI to connect directly to TVs and monitors • More and faster memory now with 512MB DDR3 • On-board flash storage frees up the microSD card slot • Support for existing Cape plug-in boards
What are PRUs: • "Programmable Real-time Units" • 32-bit RISC processors at 200MHz with single-cycle pin access for hard real-time • Optimized for packet processing/switching and software implementations of peripherals • Part of the PRU-ICSS, "Industrial Communications SubSystem"
Why and when to use PRUs: • Free from running on an operating system, so can be dedicated to a function • Real-time because it can't be interrupted from its given task by other tasks (interrupts are simply registered into an event register; operations scheduled in an event loop) • Low, low, low latency from input to output (zero-depth pipeline) • You can't interface an external MCU to DDR memory so fast!
Example usage: • Tight control loops (driving motors in a mobile robot, CNC machine or 3D printer) • Custom protocols (WS28x LEDs, DMX512, ...; EtherCAT, ProfiBUS, ProfiNET, ...) • Soft peripherals (PWM, UART (LEGO), ...)
Example projects (see wiki page): • 6502 memory slave • DMX512 • WS28xx LEDs (OLA, LEDscape) • MachineKit for 3D printing or CNC • GSoC: pruspeak, BeagleLogic • GCC, Forth, ..

Design of a Low-Cost Wireless NIRS System with Embedded Linux and a Smartphone Interface

Design of a low-cost wireless NIRS system with embedded Linux and a smartphone interface. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Biomedical Engineering by Diogo D.S. Dias (BEng, Fundação Federal do ABC, 2014; Bac., Fundação Federal do ABC, 2012). 2015, Wright State University. Wright State University GRADUATE SCHOOL, October 28th, 2015. I HEREBY RECOMMEND THAT THE THESIS PREPARED UNDER MY SUPERVISION BY Diogo D.S. Dias ENTITLED Design of a low-cost wireless NIRS system with embedded Linux and a smartphone interface BE ACCEPTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF Master of Science in Biomedical Engineering. Nasser H. Kashou, Thesis Director. Jaime E. Ramirez-Vick, Ph.D., Chair, Department of Biomedical, Industrial and Human Factors Engineering. Committee on Final Examination: Jack Jean, Ph.D.; Ulas Sunar, Ph.D.; Nasser H. Kashou, Ph.D. Robert E.W. Fyffe, Ph.D., Vice President for Research and Dean of the Graduate School. ABSTRACT. Dias, Diogo. M.S.B.M.E, Department of Biomedical, Industrial and Human Factors Engineering, Wright State University, 2015. Design of a low-cost wireless NIRS system with embedded Linux and a smartphone interface. Wireless near-infrared spectroscopy (NIRS) systems can help to reduce movement artifacts and distraction due to cables. Utilizing Embedded Linux (EL) can reduce size, development time, and cost of a project and allow portability. The goal of this project is to develop a low-cost wireless small-sized NIRS system using EL and a Smartphone as the interface.

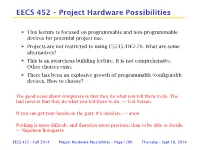

EECS 452 – Project Hardware Possibilities

EECS 452 – Project Hardware Possibilities • This lecture is focused on programmable and non-programmable devices for potential project use. • Projects are not restricted to using C5515/DE2-70. What are some alternatives? • This is an awareness building lecture. It is not comprehensive. Other choices exist. • There has been an explosive growth of programmable/configurable devices. How to choose? "The good news about computers is that they do what you tell them to do. The bad news is that they do what you tell them to do." (Ted Nelson) "If you can get your hands on the part, it's obsolete." (anon) "Nothing is more difficult, and therefore more precious, than to be able to decide." (Napoleon Bonaparte) EECS 452 – Fall 2014 Project Hardware Possibilities – Page 1/90 Thursday – Sept 18, 2014
Before we start . . . In today's world there exist many moderately powerful, very low cost, single board computers that one can use to self-educate. When doing DSP one very often wants or needs to generate and observe signals. The cost of the needed equipment can greatly dwarf the board cost. However, the cost of "equipment" is dropping. Consider the Digilent Analog Discovery. • $159 academic • 2-channel oscilloscope • 2-channel waveform generator • 16-channel logic analyzer • 16-channel digital pattern generator • Spectrum Analyzer • Network Analyzer • Voltmeter • Digital I/O • ≈$220 with bells and whistles. From a Digilent web page.
Risk and other • The safest choice in implementing your project is to make use of the C5515 and/or DE2-70 (or DE0-nano).